A gray-haired man walks through an office lobby holding a coffee cup, staring ahead as he passes the entryway.

He appears unaware that he’s being tracked by a network of cameras that can detect not only where he has been but also who has been with him.

Surveillance technology has long been able to identify you. Now, with help from artificial intelligence, it’s trying to figure out who your friends are.

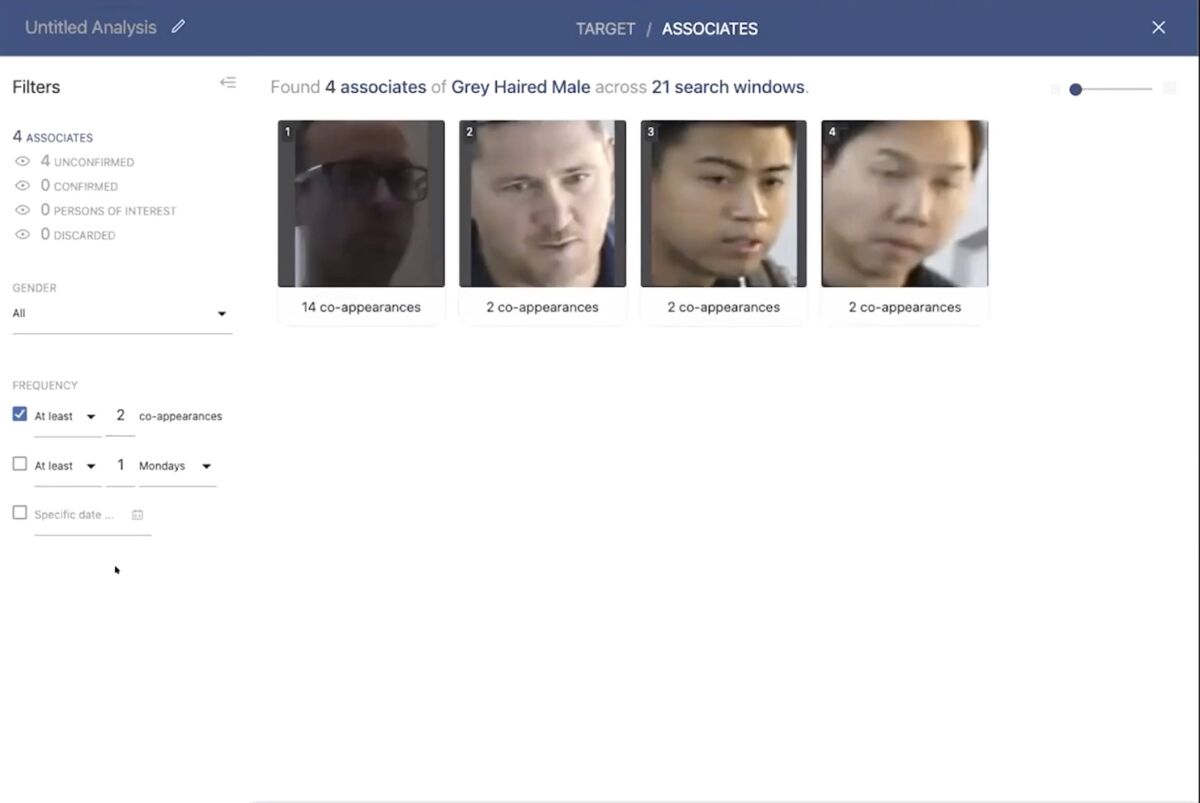

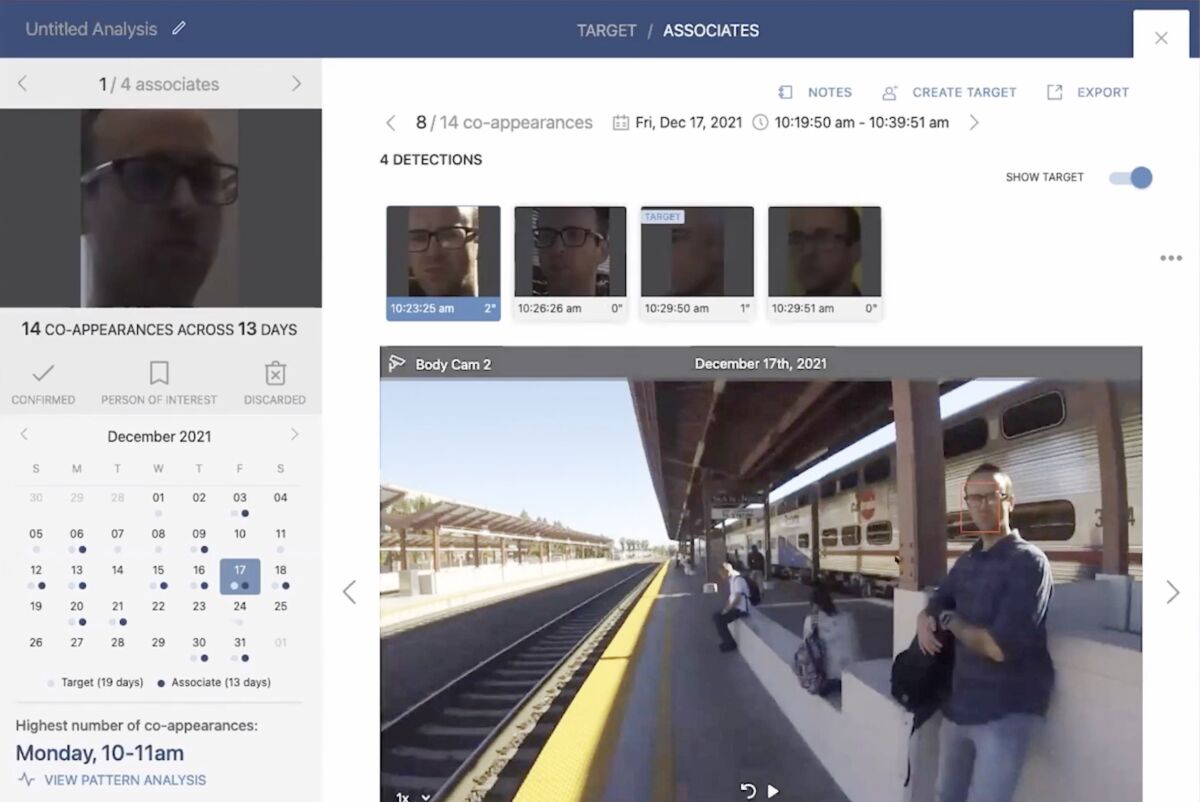

With a few clicks, this “co-appearance” or “correlation analysis” software can find anyone who has appeared on surveillance frames within a few minutes of the gray-haired man over the last month, strip out those who may have been near him a time or two, and zero in on a man who has appeared 14 times. The software can instantaneously mark potential interactions between the two men, now deemed likely associates, on a searchable calendar.
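The filtering step described above amounts to a counting exercise, which the short Python sketch below illustrates. It is not Vintra’s implementation, which has not been made public. The data model (a hypothetical `Sighting` record holding a person ID, camera ID and timestamp, assumed to come from some upstream face-matching step), the 10-minute window and the three-appearance cutoff are all assumptions chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Sighting:
    """Hypothetical record: one person ID seen by one camera at one time.

    Assumes an upstream (not shown) face-matching step has already
    assigned consistent person IDs across frames.
    """
    person_id: str
    camera_id: str
    seen_at: datetime


def co_appearances(sightings, target_id, window=timedelta(minutes=10), min_count=3):
    """Return {person_id: count} for people seen near the target at least min_count times.

    Assumed definition of a co-appearance: same camera, within `window`
    of one of the target's sightings. Each target sighting counts at most
    once per other person.
    """
    target_hits = [s for s in sightings if s.person_id == target_id]
    counts = defaultdict(int)
    for t in target_hits:
        # Everyone else seen by the same camera within the time window.
        nearby = {
            s.person_id
            for s in sightings
            if s.person_id != target_id
            and s.camera_id == t.camera_id
            and abs(s.seen_at - t.seen_at) <= window
        }
        for pid in nearby:
            counts[pid] += 1
    # Drop people who were near the target only "a time or two".
    return {pid: n for pid, n in counts.items() if n >= min_count}
```

The sketch assumes away the hardest part of the real problem: reliably recognizing the same face across different cameras, angles and lighting.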

Vintra, the San Jose-based company that showed off the technology in an industry video presentation last year, sells the co-appearance feature as part of an array of video analysis tools. The firm boasts on its website about relationships with the San Francisco 49ers and a Florida police department. The Internal Revenue Service and additional police departments across the country have paid for Vintra’s services, according to a government contracting database.

Although co-appearance technology is already used by authoritarian regimes such as China’s, Vintra seems to be the first company marketing it in the West, industry experts say.

In the first frame, the presenter identifies a “target.” In the second, he finds people who have appeared in the same frame as him within 10 minutes. In the third, a camera picks up an “associate” of the first person.

(IPVM)

But the firm is one of many testing new AI and surveillance applications with little public scrutiny and few formal safeguards against invasions of privacy. In January, for example, New York state officials criticized the company that owns Madison Square Garden for using facial recognition technology to ban employees of law firms that have sued the company from attending events at the arena.

Industry experts and watchdogs say that if the co-appearance tool is not in use now (and one analyst expressed certainty that it is), it will probably become more reliable and more widely available as artificial intelligence capabilities advance.

None of the entities that do business with Vintra that were contacted by The Times acknowledged using the co-appearance feature in Vintra’s software package. But some did not explicitly rule it out.

China’s government, which has been the most aggressive in using surveillance and AI to control its population, uses co-appearance searches to spot protesters and dissidents by merging video with a vast network of databases, something Vintra and its clients would not be able to do, said Conor Healy, director of government research for IPVM, the surveillance research group that hosted Vintra’s presentation last year. Vintra’s technology could be used to create “a more basic version” of the Chinese government’s capabilities, he said.

Some state and local governments in the U.S. restrict the use of facial recognition, especially in policing, but no federal law applies. No laws expressly prohibit police from using co-appearance searches such as Vintra’s, “but it’s an open question” whether doing so would violate constitutionally protected rights of free assembly and protections against unauthorized searches, according to Clare Garvie, a specialist in surveillance technology with the National Assn. of Criminal Defense Lawyers. Few states have any restrictions on how private entities use facial recognition.

The Los Angeles Police Department ended a predictive policing program, known as PredPol, in 2020 amid criticism that it was not stopping crime and led to heavier policing of Black and Latino neighborhoods. The program used AI to analyze vast troves of data, including suspected gang affiliations, in an effort to predict in real time where property crimes might happen.

In the absence of national laws, many police departments and private companies have to weigh the balance of security and privacy on their own.

“This is the Orwellian future come to life,” said Sen. Edward J. Markey, a Massachusetts Democrat. “A deeply alarming surveillance state where you’re tracked, marked and categorized for use by public- and private-sector entities, that you have no knowledge of.”

Markey plans to reintroduce a bill in the coming weeks that would halt the use of facial recognition and biometric technologies by federal law enforcement and require local and state governments to ban them as a condition of winning federal grants.

For now, some departments say they don’t have to make a choice because of reliability concerns. But as technology advances, they may.

Vintra, a San Jose-based software company, presented “correlation analysis” to IPVM, a subscriber research group, last year.

(IPVM)

Vintra executives did not return multiple calls and emails from The Times.

But the company’s chief executive, Brent Boekestein, was expansive about potential uses of the technology during the video presentation with IPVM.

“You can go up here and create a target, based off of this guy, and then see who this guy’s hanging out with,” Boekestein said. “You can really start building out a network.”

He added that “96% of the time, there’s no event that security’s interested in but there’s always information that the system is generating.”

Four businesses that share the San Jose transit station used in Vintra’s presentation denied that their cameras were used to make the company’s video.

Two companies listed on Vintra’s website, the 49ers and Moderna, the drug company that produced one of the most widely used COVID-19 vaccines, did not respond to emails.

Several police departments acknowledged working with Vintra, but none would explicitly say they had performed a co-appearance search.

Brian Jackson, assistant chief of police in Lincoln, Neb., said his department uses Vintra software to save time analyzing hours of video by searching quickly for patterns such as blue cars and other objects that match descriptions used to solve specific crimes. But the cameras his department links into, including Ring cameras and those used by businesses, are not good enough to match faces, he said.

“There are limitations. It’s not a magic technology,” he said. “It requires precise inputs for good outputs.”

Jarod Kasner, an assistant chief in Kent, Wash., said his department uses Vintra software. He said he was not aware of the co-appearance feature and would have to consider whether it was legal in his state, one of a few that restrict the use of facial recognition.

“We’re always looking for technology that can assist us because it’s a force multiplier” for a department that struggles with staffing issues, he said. But “we just want to make sure we’re within the boundaries to make sure we’re doing it right and professionally.”

The Lee County Sheriff’s Office in Florida said it uses Vintra software only on suspects and not “to track people or vehicles who are not suspected of any criminal activity.”

The Sacramento Police Department said in an email that it uses Vintra software “sparingly, if at all” but would not specify whether it had ever used the co-appearance feature.

“We are in the process of reviewing our Vintra contract and whether to continue using its service,” the department said in a statement, which also said it could not point to instances in which the software helped solve crimes.

The IRS said in a statement that it uses Vintra software “to more efficiently review lengthy video footage for evidence while conducting criminal investigations.” Officials would not say whether the IRS used the co-appearance tool or where it had cameras posted, only that it followed “established agency protocols and procedures.”

Jay Stanley, an American Civil Liberties Union attorney who first highlighted Vintra’s video presentation last year in a blog post, said he is not surprised some companies and departments are cagey about its use. In his experience, police departments often deploy new technology “without telling, let alone asking, permission of democratic overseers like city councils.”

The software could be abused to monitor personal and political associations, including with potential intimate partners, labor activists, anti-police groups or partisan rivals, Stanley warned.

Danielle VanZandt, who analyzes Vintra for the market research firm Frost & Sullivan, said the technology is already in use. Because she has reviewed confidential documents from Vintra and other companies, she is under nondisclosure agreements that prohibit her from discussing individual companies and governments that may be using the software.

Retailers, which are already collecting vast amounts of data on people who walk into their stores, are also testing the software to determine “what else can it tell me?” VanZandt said.

That could include identifying family members of a bank’s best customers to ensure they are treated well, a use that raises the possibility that those without wealth or family connections will get less attention.

“Those bias concerns are huge in the industry” and are actively being addressed through standards and testing, VanZandt said.

Not everyone believes this technology will be widely adopted. Law enforcement and corporate security agents often discover they can use less invasive technologies to obtain similar information, said Florian Matusek of Genetec, a video analytics company that works with Vintra. That includes scanning ticket entry systems and cellphone data that have unique features but are not tied to individuals.

“There’s a big difference between, like, product sheets and demo videos and actually things being deployed in the field,” Matusek said. “Users often find that other technology can solve their problem just as well without going through or jumping through all the hoops of installing cameras or dealing with privacy regulation.”

Matusek said he did not know of any Genetec clients that were using co-appearance, which his company does not provide. But he could not rule it out.
