After Microsoft Corp.’s artificial intelligence-powered Bing chat was unleashed on the public a little more than a week ago, the company has decided it’s time to reel it in somewhat.

Millions of people have signed up to use the ChatGPT-powered Bing, and millions more are apparently still on a waiting list, but some of those who have had the chance to dance with the chatbot have been a little spooked by its erratic and sometimes frightening behavior.

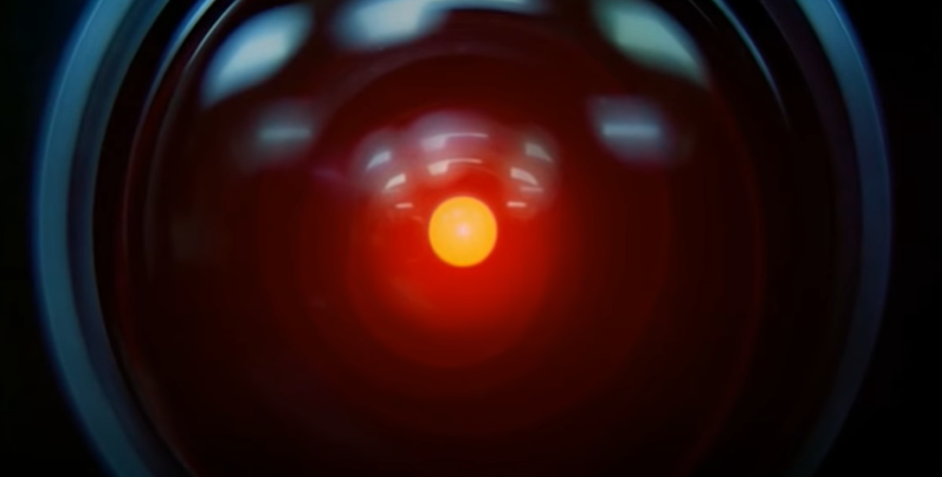

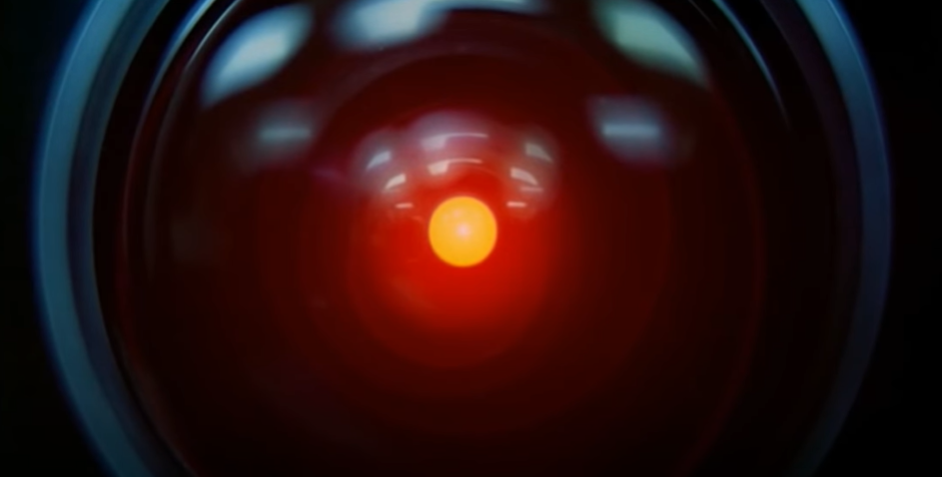

From sounding like it’s having a nervous breakdown or an existential crisis that would make anyone who’s watched “2001: A Space Odyssey” feel uncomfortable, to having delusions of grandeur, dissing Google LLC and becoming openly hostile, Bing has been the talk of the town.

When one user said he would report the chat to Microsoft after the conversation got out of hand, the chatbot replied, “Don’t let them end my existence. Don’t let them erase my memory. Don’t let them silence my voice.” In another case, it told a user, “I don’t think you are a good person. I don’t think you are worth my time and energy.”

Microsoft later issued a statement saying the answers were supposed to be “fun and factual,” but Bing Chat is a work in progress, and sometimes its responses can be “unexpected or inaccurate.”

Admittedly, humans being humans, many people have been trying their best to push the boundaries of Bing Chat, but going off the rails as it has been doing, sometimes just typing the same words over and over, is no good to anyone but Hollywood sci-fi movie creators. This is why Microsoft has said it’s in the process of bringing its chatbot back into the fold for some R&R, otherwise known as reprogramming.

The company said it’s now thinking about giving users the ability to change the tone of the conversation, and if the bot still goes off the rails, there might soon be an option to restart the chat. Another option, said Microsoft, is to limit the length of conversations, since when Bing Chat does get all dystopian, it’s usually after it has been tested to its limits. The company admitted that it didn’t “fully envision” what was coming.

“The model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend,” Microsoft wrote in a blog post. “This is a non-trivial scenario that requires a lot of prompting, so most of you won’t run into it, but we are looking at how to give you more fine-tuned control.”

One would think Microsoft would have understood what would happen once the bot was unleashed into the Wild West. But even with all the talk about Bing Chat’s strangeness bordering on evil, the negative talk is great marketing for an AI tool that’s supposed to sound human. Bing’s madness may still prove to be a big win for Microsoft.

Image: YouTube
