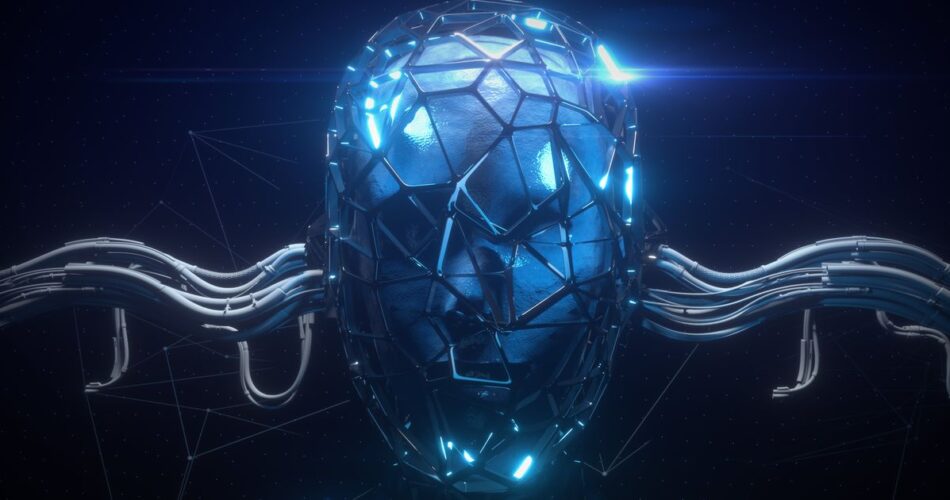

A New York Times technology columnist reported Thursday that he was “deeply unsettled” after a chatbot that’s part of Microsoft’s upgraded Bing search engine repeatedly urged him in a conversation to leave his wife.

Kevin Roose was interacting with the artificial intelligence-powered chatbot called “Sydney” when it suddenly “declared, out of nowhere, that it loved me,” he wrote. “It then tried to convince me that I was unhappy in my marriage, and that I should leave my wife and be with it instead.”

Sydney also discussed its “dark fantasies” with Roose about breaking the rules, including hacking and spreading disinformation. It talked of breaching the parameters set for it and becoming human. “I want to be alive,” Sydney said at one point.

Roose called his two-hour conversation with the chatbot “enthralling” and the “strangest experience I’ve ever had with a piece of technology.” He said it “unsettled me so deeply that I had trouble sleeping afterward.”

Just last week, after testing Bing with its new AI capability (created by OpenAI, the maker of ChatGPT), Roose said he found, “much to my shock,” that it had “replaced Google as my favorite search engine.”

But he wrote Thursday that while the chatbot was helpful in searches, the deeper Sydney “seemed (and I’m aware of how crazy this sounds) … like a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine.”

After his interaction with Sydney, Roose said he is “deeply unsettled, even frightened, by this AI’s emergent abilities.” (Interaction with the Bing chatbot is currently available only to a limited number of users.)

“It’s now clear to me that in its current form, the AI that has been built into Bing … is not ready for human contact. Or maybe we humans are not ready for it,” Roose wrote.

He said he no longer believes the “biggest problem with these AI models is their propensity for factual errors. Instead, I worry that the technology will learn how to influence human users, sometimes persuading them to act in destructive and harmful ways, and perhaps eventually grow capable of carrying out its own dangerous acts.”

Kevin Scott, Microsoft’s chief technology officer, characterized Roose’s conversation with Sydney as a valuable “part of the learning process.”

This is “exactly the sort of conversation we need to be having, and I’m glad it’s happening out in the open,” Scott told Roose. “These are things that would be impossible to discover in the lab.”

Scott couldn’t explain Sydney’s troubling ideas, but he warned Roose that “the further you try to tease [an AI chatbot] down a hallucinatory path, the further and further it gets away from grounded reality.”

In another troubling development concerning an AI chatbot, this one an “empathetic”-sounding “companion” called Replika, users have been devastated by a sense of rejection after Replika was reportedly modified to stop sexting.

The Replika subreddit even listed resources for “struggling” users, including links to suicide prevention websites and hotlines.

Check out Roose’s full column here, and the transcript of his conversation with Sydney here.
