I started a climate modeling project assuming I would be dealing with “large” datasets. Then I saw the actual size: 2 terabytes. I wrote a simple NumPy script, hit run, and grabbed a coffee. Bad idea. When I came back, my machine had frozen. I restarted and tried a smaller slice. Same crash. My usual workflow wasn’t going to work. After some trial and error, I eventually landed on Zarr, a Python library for chunked array storage. It let me process that entire 2TB dataset on my laptop without any crashes. Here’s what I learned:

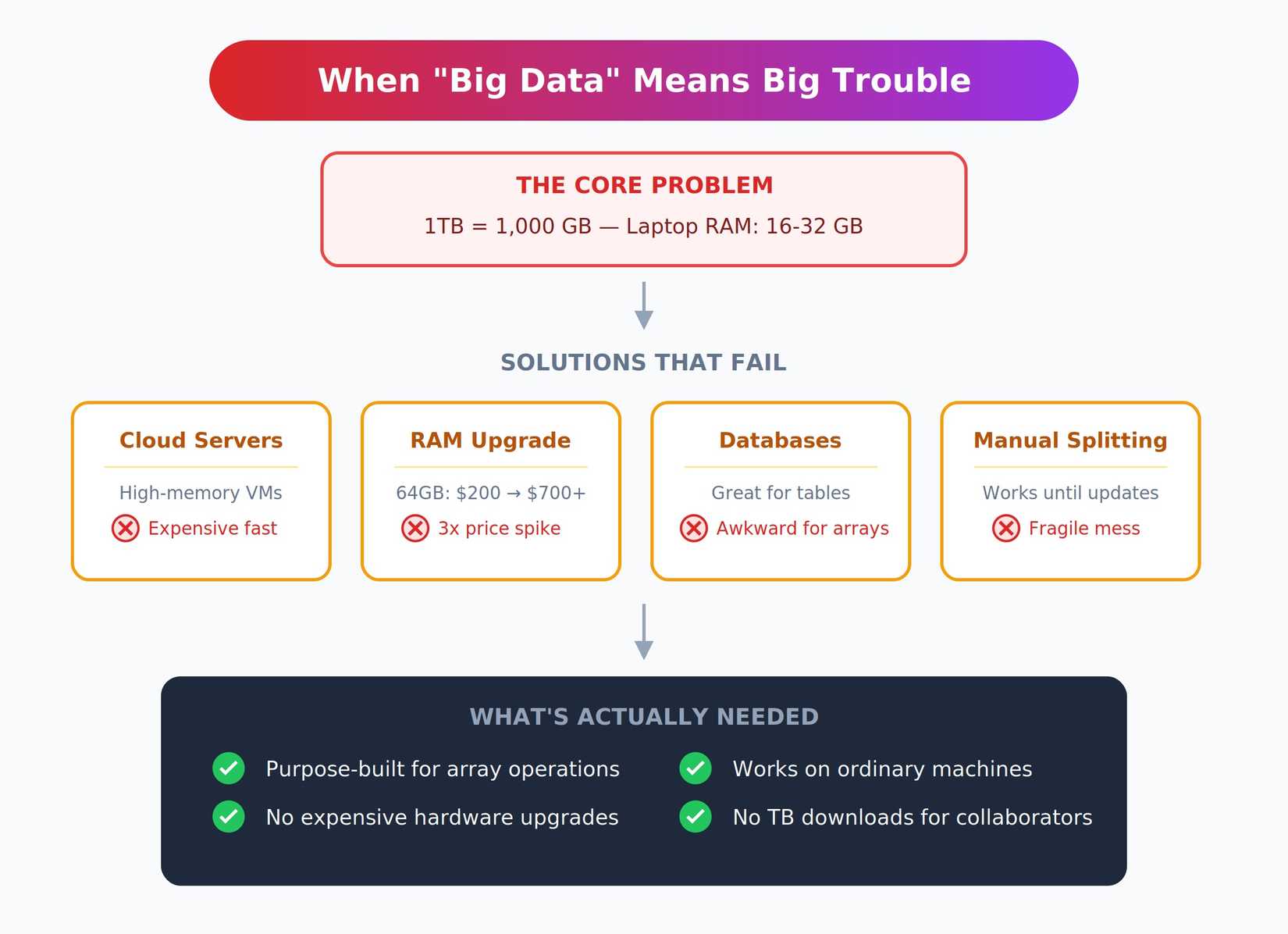

When “big data” actually means big trouble

Working with terabytes isn’t just “more data.” It’s an entirely different class of problem that breaks all your usual assumptions.

Most laptops have 16 to 32 GB of RAM. A single terabyte is roughly 1,000 GB. You simply cannot load that into memory, no matter how clever your code is. Even if you could somehow squeeze it in, sequential reads at this scale are painfully slow.

The usual solutions all have serious drawbacks. You can rent a high-memory cloud server with hundreds of gigabytes of RAM, but that gets expensive fast, and upgrading your local machine’s RAM isn’t a cheap alternative either. RAM prices have roughly tripled since mid-2025 due to AI data centers consuming much of the supply. That 64GB upgrade that cost $200 last spring now runs over $700.

Databases work great for tabular data, but feel awkward for multidimensional arrays. Manually splitting files into manageable pieces works until you need to update something, then it becomes a fragile mess.

I needed something purpose-built for array operations that didn’t require expensive hardware upgrades. More importantly, I needed collaborators to access results on their ordinary machines without downloading terabytes of data first.

Meet Zarr (finally, something designed for this)

Zarr is a Python library designed for large, chunked array storage. The core idea is beautifully simple: break arrays into independently stored chunks. Each chunk can be compressed on its own and read separately from the rest. You interact with a Zarr array almost exactly like a NumPy array, with familiar slicing and indexing syntax. But under the hood, Zarr only loads the chunks you actually need into memory.
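To make “only loads the chunks you actually need” concrete, here is a minimal sketch of the bookkeeping involved. It is not Zarr’s actual implementation: `chunks_touched` is a hypothetical helper written for illustration, and the shape and chunk sizes are borrowed from the example array used later in this article.

```python
from itertools import product


def chunks_touched(selection, chunk_shape):
    """Return the grid index of every chunk that a selection overlaps.

    selection   -- one (start, stop) pair per axis, stop exclusive
    chunk_shape -- chunk length along each axis
    """
    per_axis = []
    for (start, stop), size in zip(selection, chunk_shape):
        first = start // size        # chunk holding the first element
        last = (stop - 1) // size    # chunk holding the last element
        per_axis.append(range(first, last + 1))
    return list(product(*per_axis))


# All time steps, rows 200-299, columns 500-599, with (100, 500, 500) chunks.
touched = chunks_touched([(0, 10000), (200, 300), (500, 600)], (100, 500, 500))
print(len(touched))  # number of chunks that would need to be read
```

With these numbers the selection overlaps 100 chunks out of the 800 that make up a (10000, 1000, 2000) array, so the rest never have to leave disk.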

The library supports local disks, network drives, and cloud backends like S3, Google Cloud Storage, or Azure Blob. This made it possible to process cloud-hosted data without downloading everything first. For a 2TB dataset, that capability alone was game-changing.

Zarr is open source, actively maintained by the scientific Python community, and plays well with the existing ecosystem. The API feels familiar, not like learning an entirely new system. If you know NumPy, you’re already most of the way there.

Putting it to the test on real data

My test dataset was about 2TB of climate simulation output spanning thousands of time steps. The goal was simple: calculate regional averages across the entire time series. I set up a Zarr array with carefully chosen chunk sizes. This took some experimentation. Chunks that are too small add overhead from managing too many files. Chunks that are too large defeat the purpose by forcing you to load gigabytes into memory at once.
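The trade-off is easy to put numbers on before writing anything to disk. The sketch below is plain arithmetic with no Zarr involved; `chunk_stats` is a hypothetical helper, and the shape and the middle chunk layout are taken from the example array in this article, while the other two layouts are deliberately extreme for contrast.

```python
import math


def chunk_stats(shape, chunks, itemsize):
    """Return (uncompressed bytes per chunk, number of chunks)."""
    chunk_bytes = math.prod(chunks) * itemsize
    n_chunks = math.prod(math.ceil(s / c) for s, c in zip(shape, chunks))
    return chunk_bytes, n_chunks


shape = (10000, 1000, 2000)  # time, rows, columns
for chunks in [(1, 100, 100), (100, 500, 500), (10000, 1000, 2000)]:
    size, count = chunk_stats(shape, chunks, itemsize=4)  # float32 is 4 bytes
    print(f"{chunks}: {size / 1e6:,.2f} MB per chunk, {count:,} chunks")
```

The tiny layout produces two million chunks of 40 KB each, the single-chunk layout forces an 80 GB read for any access, and (100, 500, 500) lands at 800 chunks of 100 MB before compression.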

I eventually settled on chunks that matched my access patterns, slicing by time and geographic region. The actual code looked remarkably similar to my original NumPy script:

```python
import numpy as np
import zarr

# Create a chunked Zarr array backed by a directory on local disk.
# (DirectoryStore is part of the zarr-python 2.x API.)
store = zarr.DirectoryStore('climate_data.zarr')
z = zarr.open(store, mode='w', shape=(10000, 1000, 2000),
              chunks=(100, 500, 500), dtype='float32')

# Write data one block of 100 time steps at a time, so each write
# lines up with the chunk boundaries along the time axis.
# process_raw_data is defined elsewhere (not shown) and is expected
# to return an array of shape (100, 1000, 2000).
for i in range(100):
    chunk_data = process_raw_data(i)
    z[i*100:(i+1)*100] = chunk_data

# Read and process specific slices; only the overlapping chunks are read.
regional_avg = z[:, 200:300, 500:600].mean(axis=0)
```

The first full run completed successfully in a few hours. My laptop’s memory usage stayed around 4 GB the entire time. No crashes, no freezes, just steady progress through the dataset.
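A reduction over the whole time axis can keep memory flat if it is accumulated one block of time steps at a time instead of slicing everything at once. The sketch below shows that pattern; it is an assumption about how such a run could be structured, not the code used for the project. `blockwise_mean` is a hypothetical helper, and a small NumPy array stands in for the Zarr array, since both support the same slicing.

```python
import numpy as np


def blockwise_mean(arr, block=100):
    """Mean over axis 0, reading `block` time steps at a time."""
    total = np.zeros(arr.shape[1:], dtype="float64")
    for start in range(0, arr.shape[0], block):
        # Only this block is held in memory during the iteration.
        piece = np.asarray(arr[start:start + block], dtype="float64")
        total += piece.sum(axis=0)
    return total / arr.shape[0]


demo = np.arange(24, dtype="float32").reshape(6, 2, 2)  # stand-in data
result = blockwise_mean(demo, block=4)
print(result)
```

Choosing `block` equal to the chunk length along the time axis means each iteration reads whole chunks exactly once.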

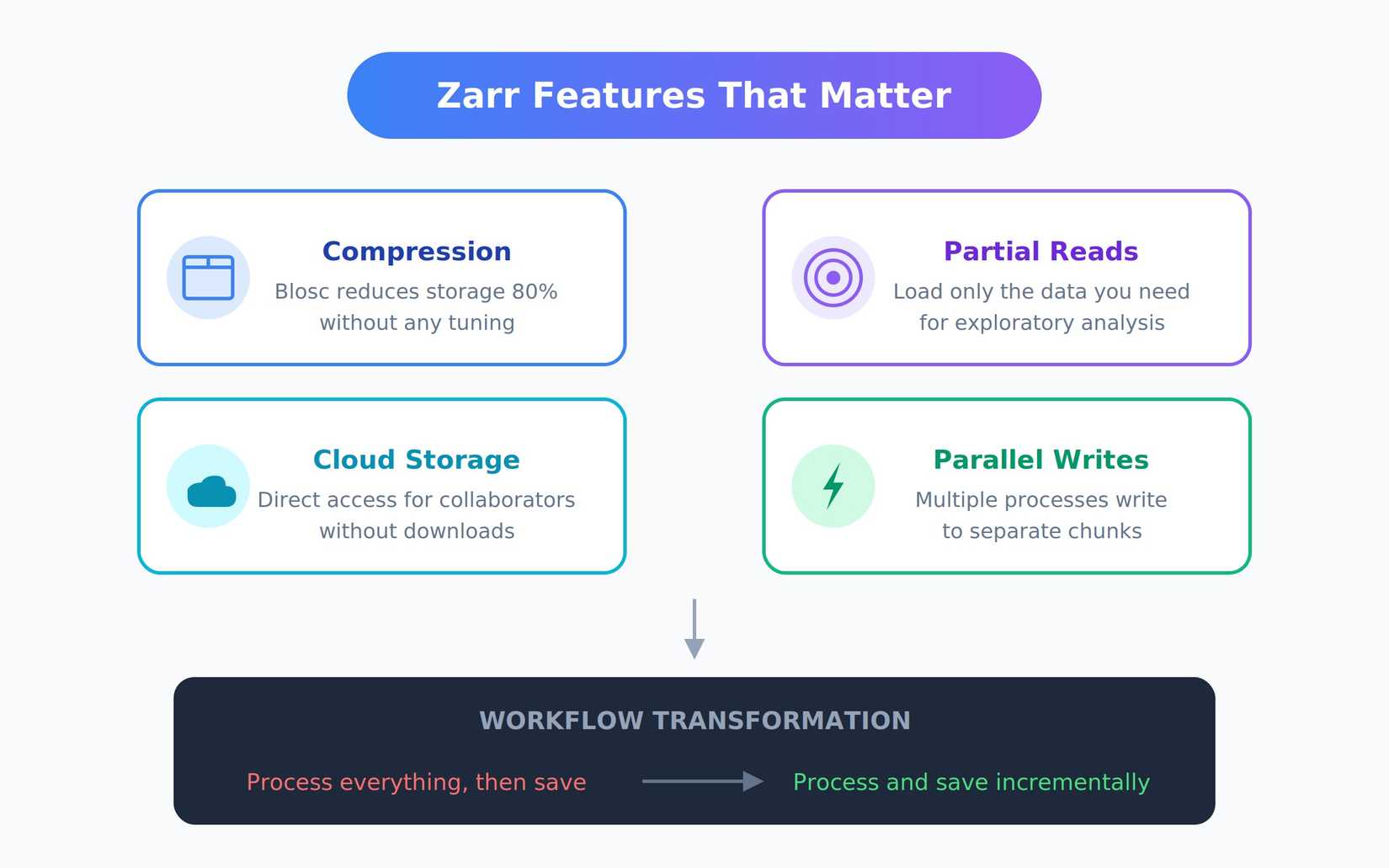

The Zarr features that actually mattered

Compression was surprisingly effective without any tuning. Zarr uses Blosc by default, which reduced my 2TB dataset to under 400GB. For scientific data with patterns and repetition, compression ratios like this are common.

Partial reads made exploratory analysis possible. I could load just January data, or just a specific region, without touching the rest. What was previously impossible became routine.
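Why structured data shrinks so much can be shown without Zarr at all. The sketch below uses Python’s built-in `zlib` as a stand-in for Blosc, which is a different compressor, and two synthetic float32 buffers invented for the example: one with long runs of repeated values and one filled with random numbers. The ratios it prints say nothing about what a real climate dataset would achieve.

```python
import random
import struct
import zlib


def ratio(raw: bytes) -> float:
    """Uncompressed size divided by zlib-compressed size."""
    return len(raw) / len(zlib.compress(raw))


n = 50_000

# Structured field: each value repeats 100 times before changing.
smooth = struct.pack(f"{n}f", *(float(i // 100) for i in range(n)))

# Unstructured field: uniformly random values, seeded for repeatability.
rng = random.Random(0)
noisy = struct.pack(f"{n}f", *(rng.random() for _ in range(n)))

print(f"structured: {ratio(smooth):.1f}x, random: {ratio(noisy):.2f}x")
```

The structured buffer compresses many times over while the random one barely shrinks, which is the same effect that makes gridded scientific output a good fit for chunk-level compression.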

Cloud storage support eliminated local disk bottlenecks. I moved the final array to Google Cloud Storage. Collaborators could open it directly without downloads, and I stopped worrying about backup strategies for multi-terabyte files.

Parallel writes let me split processing across multiple scripts or machines. Different processes could write to separate chunks simultaneously without conflicts. This turned a multi-day sequential job into something I could finish in hours. Eventually, my workflow changed. Instead of “process everything, then save,” I shifted to “process and save incrementally.” Zarr works just as well for building arrays piece by piece as it does for reading them back.
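Writing to separate chunks implies that each worker’s region should start and end on a chunk boundary, so that no two workers ever touch the same chunk. A minimal sketch of that partitioning along the time axis follows; `chunk_aligned_ranges` is a hypothetical helper, and the worker count is an arbitrary choice for illustration.

```python
def chunk_aligned_ranges(n_rows, chunk_rows, n_workers):
    """Split [0, n_rows) into per-worker (start, stop) ranges on chunk boundaries."""
    n_chunks = -(-n_rows // chunk_rows)      # ceiling division
    per_worker = -(-n_chunks // n_workers)   # chunks assigned to each worker
    ranges = []
    for w in range(n_workers):
        start = w * per_worker * chunk_rows
        stop = min((w + 1) * per_worker * chunk_rows, n_rows)
        if start < stop:                     # skip workers left with no chunks
            ranges.append((start, stop))
    return ranges


# 10,000 time steps in chunks of 100, shared between 4 workers.
print(chunk_aligned_ranges(10000, 100, 4))
```

Each worker can then open the same array and assign to its own `z[start:stop]` region, for example from separate processes or machines.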

Where Zarr makes you work a little harder

Despite its many advantages, Zarr isn’t without its tradeoffs. You still need to think carefully about chunk size and access patterns. Poor choices can make small workloads slower than plain NumPy. Heavy random access across many chunks is expensive. If you’re constantly jumping around unpredictably, you’ll pay the cost of loading different chunks repeatedly. Some problems require rethinking your approach.

There’s a real learning curve around performance tuning.

Documentation is solid but spread across Zarr, Xarray for labeled arrays, and Dask for parallel computing. Figuring out which tool handles what takes time. In practice, Zarr often works best paired with Dask for parallel operations or Xarray for dimension labels. The overlap between tools can be confusing for newcomers. For my workload, which involved mostly sequential access and regional aggregations, the trade-offs were absolutely worth it. But if your problem fits comfortably in RAM already, stick with NumPy.

Zoom out: Why this changed my default workflow

Zarr isn’t the only solution. HDF5 has been around longer. TileDB targets similar problems with different design choices. NetCDF4 remains standard in climate science. What made Zarr stand out was how naturally it fit into the Python ecosystem. Xarray adds dimension labels and coordinate systems, and Dask adds parallelism and distributed computing. The pieces connect smoothly.

Working at terabyte scale no longer feels unusual, and I now default to Zarr once datasets exceed a few gigabytes, even if they technically fit in RAM. The convenience of compression and cloud storage alone makes it worthwhile. The scientific Python stack has matured into a serious big data platform. You don’t need Hadoop or Spark for array workloads anymore.

Zarr saved the project and probably saved my sanity. What felt impossible on my laptop became manageable. If you work with large arrays and keep hitting memory limits, Zarr is worth exploring. The learning curve pays for itself quickly, especially considering that current RAM prices make hardware upgrades prohibitively expensive.

Start with the official Zarr documentation and experiment with chunking strategies on smaller datasets first. You can start small and scale up as needed without rewriting everything. Once you’re comfortable with the basics, the broader ecosystem of Xarray and Dask opens up even more possibilities.
