Market research in 2026 feels less like a steady march forward and more like navigating a coastline in heavy fog. You can hear the engines of GenAI humming, but you can’t quite tell whether you are steering toward open water or jagged rocks. In Part 1 of this series, we mapped the forces pushing AI from a laboratory experiment to a daily expectation. That exploration made one truth undeniable: the industry has reached a delta where the path splits in a dozen directions.

The natural instinct is to guess which channel is the “right” one and bet the entire ship on that single heading. However, in an era of agentic workflows, synthetic users, and rapidly shifting trust, the traditional urge to predict is a trap. It creates false confidence, like following navigation charts that haven’t been updated for current conditions.

Success in this environment requires a navigation system built for uncertainty itself. This is where we turn to scenario planning.

Why Scenario Planning Works When Prediction Fails

Here, we’re using the exploratory approach to scenario planning developed by Thomas J. Chermack in Scenario Planning in Organizations and practiced by firms like Chermack Scenarios.

Traditional strategic planning asks: “What’s most likely to happen?” It locks you into one forecast, often too early, with too much confidence. Exploratory scenario planning asks different questions:

- “What could happen?”

- “How would we need to operate in each case?”

- “Which capabilities keep us competitive across multiple futures?”

Scenario planning doesn’t predict the future. It prepares you for it. You stress-test decisions against multiple plausible outcomes before committing resources, so you build capabilities that remain valuable no matter which future unfolds. You stop drifting and start steering.

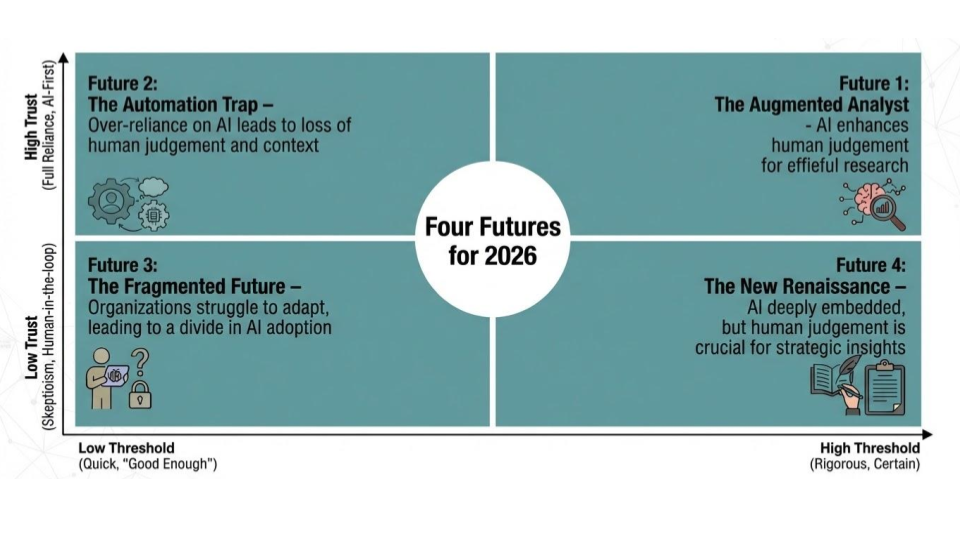

Two Uncertainties That Will Define 2026

The future isn’t a single destination, but rather a range of possible courses shaped by how key uncertainties resolve. In extensive conversations with clients, analysts, and research leaders, two critical questions keep surfacing:

- “How much do we trust AI-generated insights to drive real decisions?”

- “How rigorous does research need to be before we’re willing to act on it?”

How your organization answers these questions through policy, investment, and practice will determine which of four distinct futures you navigate toward by late 2026.

A note on customization: If different uncertainties feel more pressing for your organization, like regulatory changes, talent retention, or client concentration, the same exploratory framework applies. In Part 3, we’ll show you how to identify your own critical uncertainties and build scenarios tailored to your specific context. The methodology is universal; the variables are yours to define.

Uncertainty #1: Trust in AI-Derived Outputs

How much will organizations trust AI-derived insights to inform real decisions?

This uncertainty isn’t about whether AI will be used. That’s already happening. It’s about whether insights generated largely by models themselves (prompt-based syntheses, LLM answers, synthetic outputs) will be trusted enough to act on without extensive human validation.

Why this remains uncertain:

- Validation and transparency standards are still emerging

- Liability and accountability concerns remain unresolved

- Tolerance for risk varies by decision type and consequence

- Early failures can quickly erode confidence

What this means for insights professionals:

Low trust positions researchers as validators and interpreters. High trust shifts the role toward designing prompts, curating data, and overseeing AI-driven workflows.

What this means for stakeholders:

Low trust slows delivery but increases confidence. High trust delivers speed and cost efficiency, with greater acceptance of probabilistic and revisable insights.

Low trust: AI outputs treated as exploratory inputs

High trust: AI-derived insight accepted as decision-ready

Uncertainty #2: The Threshold for “Good Enough” Research

What level of rigor and certainty will organizations require before acting on insight?

As GenAI reduces the time and cost to produce insight-like outputs, teams are re-evaluating what qualifies as sufficient evidence for decision-making. The unresolved question: will speed-driven organizations accept directionally useful insight, or will high-stakes decisions continue to demand deep, human-led research?

Why this remains uncertain:

- Speed and cost pressures are intensifying

- Competitive dynamics reward faster movers

- Poor decisions can reinforce demand for depth

- Organizations differ in their ability to assess insight quality

What this means for insights professionals:

A low threshold favors rapid sense-making and iteration. A high threshold elevates researchers into strategic designers of rigorous, defensible studies.

What this means for stakeholders:

A low threshold enables faster action with greater risk. A high threshold delivers stronger conviction at the cost of time and investment.

Low threshold: Directionally useful insight is enough

High threshold: Deep, validated understanding is required

From Uncertainty to Four Plausible Futures

Plotting these two uncertainties together creates a 2×2 framework that yields four plausible futures for market research in 2026. Each future reflects a different combination of trust in AI-derived outputs and tolerance for “good enough” insight.

These futures are not predictions. They’re navigation tools. They’re structured thought experiments designed to help you stress-test today’s decisions against multiple possible outcomes and clarify which capabilities matter most under different conditions.

The four futures that follow explore what each of these worlds could look like for research teams, clients, and individual researchers.
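For readers who like to see the grid spelled out, the 2×2 can be sketched as the four combinations of the two axes. This is only an illustration: the axis descriptions come from the two uncertainty sections above, while the names `TRUST`, `THRESHOLD`, and `enumerate_quadrants` are hypothetical. The sketch deliberately does not assign scenario names to quadrants, since that mapping is shown in the framework figure rather than stated in the text.

```python
from itertools import product

# Axis levels and their descriptions, taken from the two uncertainty sections.
# The variable and function names here are illustrative assumptions.
TRUST = {
    "low": "AI outputs treated as exploratory inputs",
    "high": "AI-derived insight accepted as decision-ready",
}
THRESHOLD = {
    "low": "Directionally useful insight is enough",
    "high": "Deep, validated understanding is required",
}


def enumerate_quadrants():
    """Return the four (trust, threshold) combinations of the 2x2 grid."""
    return [
        {
            "trust": trust_level,
            "threshold": threshold_level,
            "trust_meaning": TRUST[trust_level],
            "threshold_meaning": THRESHOLD[threshold_level],
        }
        for trust_level, threshold_level in product(TRUST, THRESHOLD)
    ]


if __name__ == "__main__":
    for quadrant in enumerate_quadrants():
        print(
            f"trust={quadrant['trust']}, threshold={quadrant['threshold']}: "
            f"{quadrant['trust_meaning']} / {quadrant['threshold_meaning']}"
        )
```

Swapping in your own two uncertainties only requires changing the two dictionaries; the enumeration itself stays the same.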

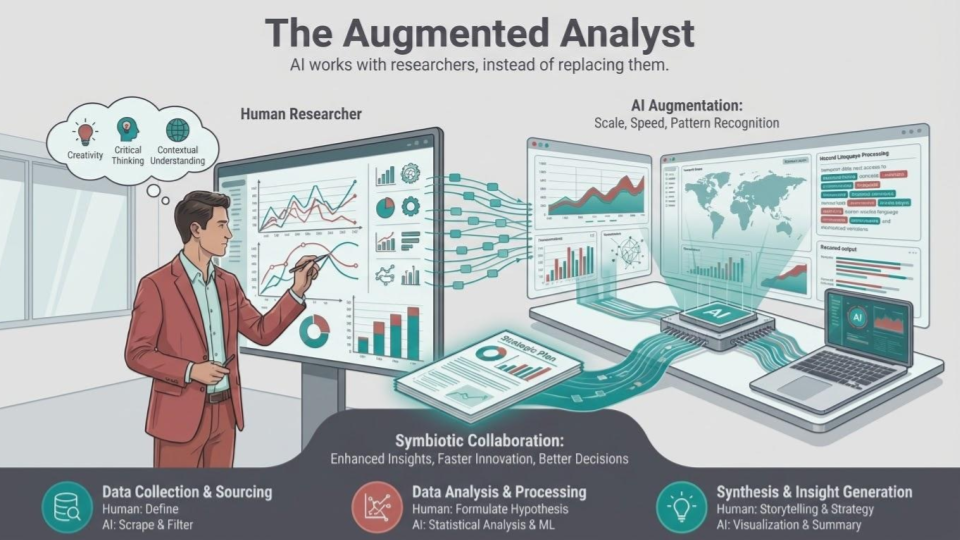

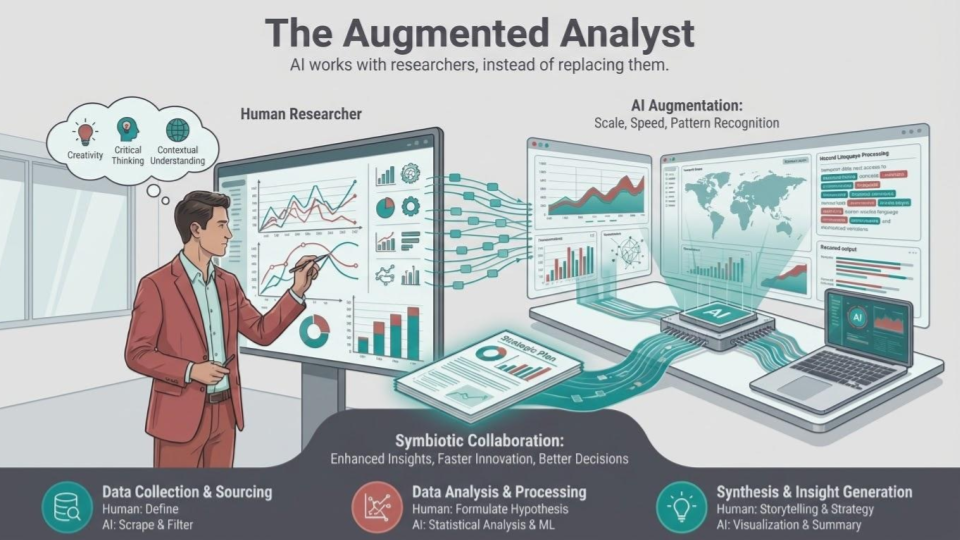

Scenario 1: The Augmented Analyst

In this future, GenAI is everywhere but never unchecked. Teams adopt it where it clearly accelerates work and deliberately avoid using it where judgment, context, and accountability matter most. Research is faster, but it is also more careful. The role of the researcher is not reduced. It is clarified.

A day in the life:

Sarah, a senior insights manager, starts her Monday reviewing tracker data that has already been cleaned, normalized, and flagged by AI for anomalies. Instead of scanning spreadsheets, she focuses on deciding which signals deserve attention and which are noise.

By Tuesday, she’s preparing for qualitative follow-ups. Some initial interviews are conducted by AI interviewers using a structured guide, allowing Sarah to quickly test hypotheses and identify themes at scale. She then refines the next round of probes, adding questions shaped by her knowledge of the category, the client’s internal dynamics, and what failed last quarter.

By Thursday, interviews are complete. Transcripts are processed. Draft themes are prepared. Sarah spends her time pressure-testing interpretations, debating implications with product and marketing leaders, and shaping a narrative that connects customer friction to concrete go-to-market decisions.

What once took six to eight weeks now takes two to three, not because corners were cut, but because execution was compressed and judgment was protected.

The dynamic:

- AI removes friction from the work

- Humans supply meaning

- The job becomes more interesting

- Research keeps its strategic seat

The risk: Complacency. Teams that stop evolving may find themselves overtaken by organizations willing to rethink research more radically.

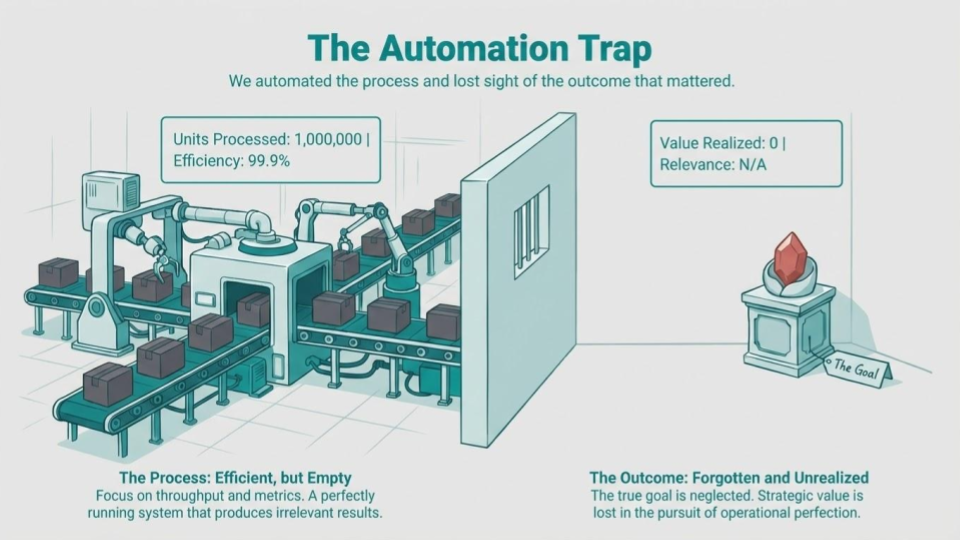

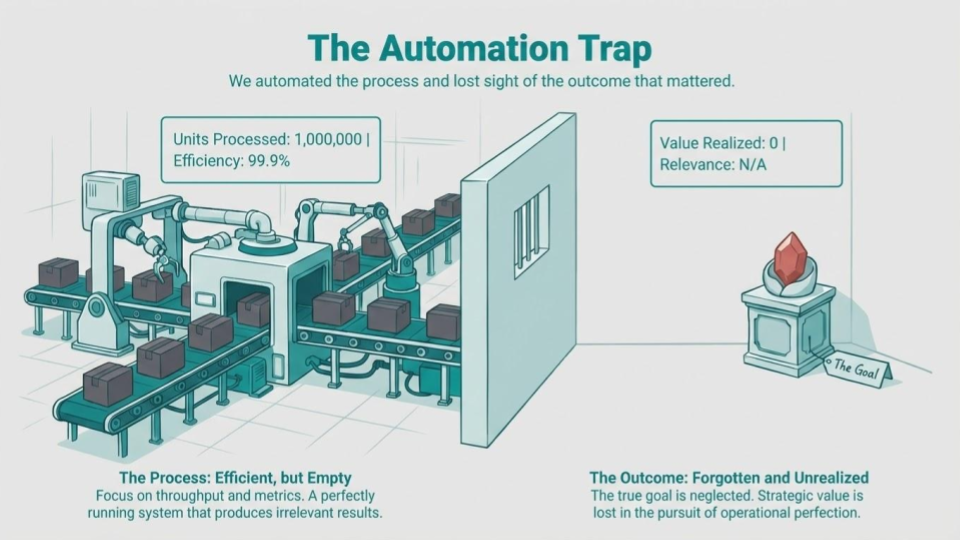

Scenario 2: The Automation Trap

This future starts with enthusiasm. A leadership team watches an AI platform generate a polished report in under an hour. The charts look sharp. The language sounds confident. The cost savings are impossible to ignore. Budgets shift quickly. Headcount quietly shrinks.

How it unravels:

A researcher logs in to review an AI-generated summary before sending it to stakeholders. There’s little time or incentive to challenge the output. Context is missing, but no one has budgeted time to add it back. The system flags trends, but it doesn’t know about:

- The competitor recall that skewed sentiment

- The internal reorg that changed buying behavior

- The pricing experiment that distorted baseline metrics

These details live in people’s heads, and many of those people are gone.

The slow decline:

- Recommendations become vague and interchangeable

- Reports sound reasonable but are rarely decisive

- Stakeholders cease asking follow-up questions

- Decision quality declines slowly, then visibly

- When results disappoint, no one knows if the issue was the data, the model, or the decision itself

The exodus: The most experienced researchers leave first. Those who remain manage tools rather than shape thinking. Research becomes a box to check rather than a partner in strategy.

Why this is hard to reverse: Once trust erodes and tacit expertise walks out the door, rebuilding takes years. Expectations reset to fast and cheap, even when fast and cheap no longer delivers value.

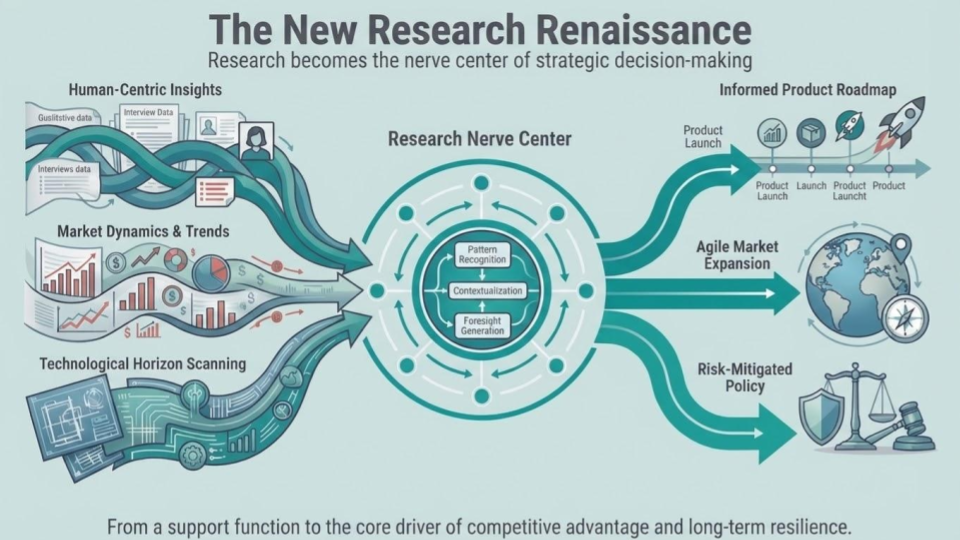

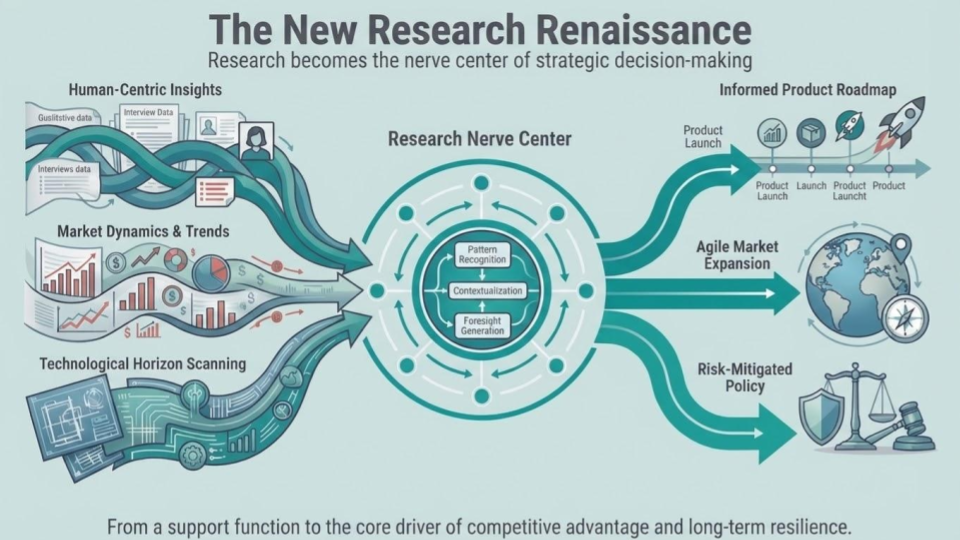

Scenario 3: The New Research Renaissance

In this future, GenAI is deeply embedded, but so are researchers. Market research no longer operates as a series of episodic projects. It functions as a living insight system that continuously integrates quantitative data, qualitative input, behavioral signals, and market intelligence. AI connects the dots. Humans decide which dots matter.

A day in the life:

A product leader asks during a Tuesday standup: “Our enterprise churn is up 12% this quarter. Is this a pricing issue, a product gap, or something else?”

No one commissions a two-month study. Instead, the insight platform immediately:

- Pulls three years of churn data segmented by customer profile

- Surfaces patterns from recent exit interviews and support tickets

- Identifies analogous situations from adjacent product lines

- Flags contradictory signals that need human interpretation

- Recommends three targeted interviews with at-risk accounts

Within 48 hours, researchers deliver a grounded answer validated by human judgment and enriched by AI speed. The team discovers it’s not pricing; it’s a gap in onboarding for a specific buyer persona that emerged post-acquisition.

How researchers spend their time:

- Designing the right questions, not just answering them

- Validating signals and stress-testing interpretations

- Translating patterns into strategy

- Socializing insights across product, marketing, and corporate strategy teams

Insight no longer arrives at the end of a project. It’s embedded directly into everyday decision workflows.

The business impact:

- Products land better

- Marketing becomes more precise

- Teams pivot earlier, before metrics drift

- Research becomes a defensible advantage (tools commoditize, but learning systems don’t)

The risk: Complexity. Systems can sprawl, and technology can outpace understanding. Teams must continually invest in people, process, and culture to keep the system healthy. When they do, research becomes indispensable.

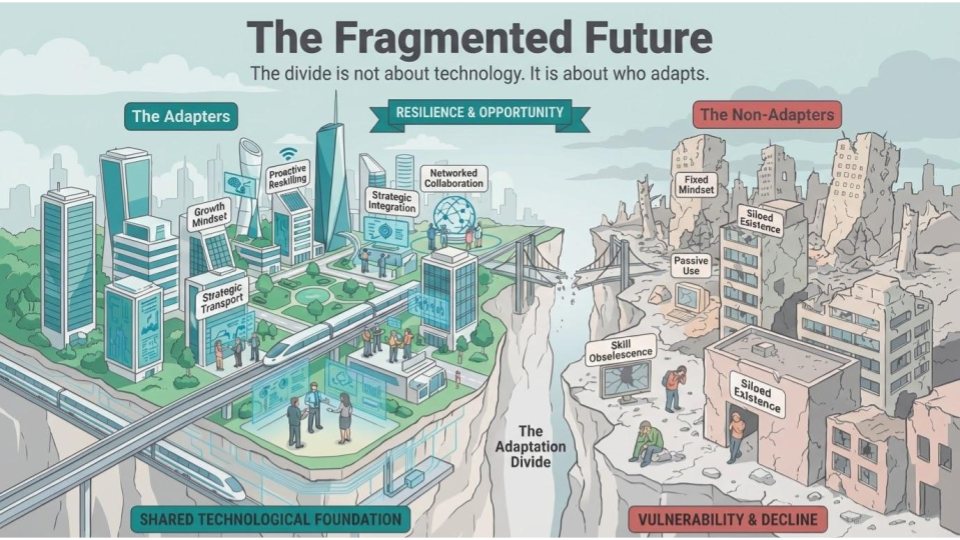

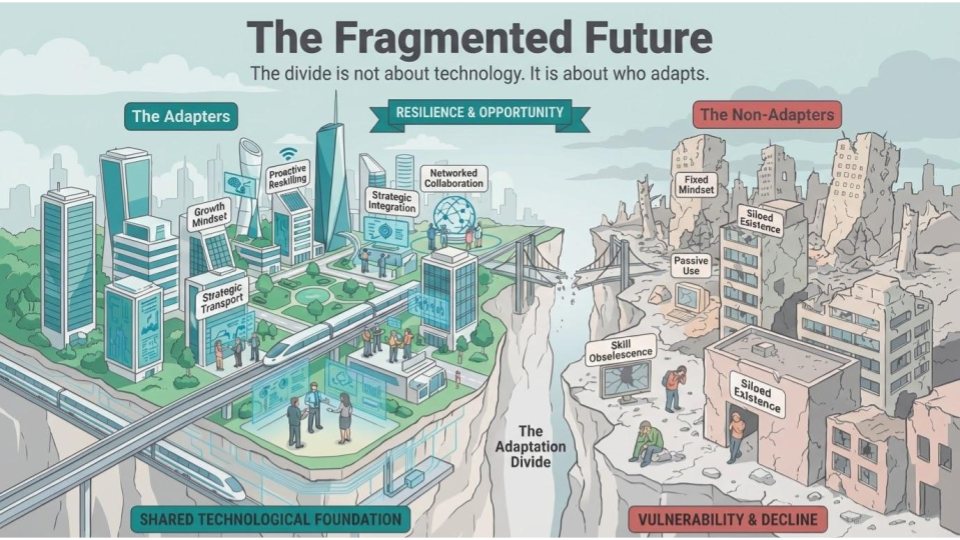

Scenario 4: The Fragmented Future

In this future, the industry doesn’t move together. It splits. Some organizations redesign workflows, retrain talent, and integrate AI deeply. Others remain stuck in pilots, debates, and partial adoption. A shrinking middle struggles to serve both worlds.

The slow-moving organization:

A researcher spends much of her week managing workarounds:

- Tools are evaluated but never fully implemented

- Leadership remains cautious, but clients are not

- Turnaround times stretch

- Bids are lost to faster, more integrated competitors

- Talented colleagues leave, often publicly

- Recruiting becomes harder each quarter

The AI-native organization:

Meanwhile, AI-native teams compound their advantage. They move faster, learn faster, and position research as a strategic asset. The gap widens not because of access to technology, but because of willingness to change.

The remaining options:

Organizations in this future still have choices:

- Commit fully and move toward the Augmented Analyst or Research Renaissance paths

- Narrow focus and become boutique specialists

- Partner, merge, or be acquired

- Exit gracefully

What they cannot do is wait. In this future, indecision is a decision, and the cost of delay rises every quarter.

Why These Futures Matter (And Why You Can’t Afford to Ignore Them)

None of these futures is hypothetical. Elements of all four are already visible today, often within the same organization, and sometimes on the same team. What matters is recognizing which future you’re drifting toward, and whether that path is intentional or accidental.

The trade-offs are real, and they affect everyone differently.

For business leaders: Some futures optimize for speed and cost at the expense of decision quality. Others demand greater upfront investment but create more durable strategic advantage. Are you building a system that delivers faster answers, or one that enables better decisions?

For researchers: Some paths expand influence, creativity, and professional growth. Others narrow the role to execution, with limited room for judgment or impact. Are you designing for human expertise to matter more, or less?

For clients and stakeholders: The difference shows up in trust. Do insights genuinely improve outcomes, or merely arrive faster and look polished? Are you buying speed, or certainty?

The risks are already visible. The Automation Trap promises efficiency and lower costs, but over time erodes trust, flattens insight, and strips away the context that makes research valuable. The Fragmented Future creates widening gaps: clients receive uneven experiences, top talent leaves, and organizations find themselves unable to compete on either speed or depth.

The Augmented Analyst and Research Renaissance futures demand more: investment in people, governance, and change management. But they preserve what has always made research matter: judgment, context, and the ability to turn information into understanding. They don’t just make research faster. They make it more consequential.

Scenario planning exists to expose the consequences of today’s decisions before they quietly lock in tomorrow’s reality. Are we making deliberate choices, or drifting into a future we wouldn’t choose?

What Scenario Planning Reveals About Your Next Move

The cost of drift is greater than the cost of decision. When change accelerates, inaction can feel safe. In reality, it simply locks in default decisions about speed versus rigor, automation versus judgment, and the role research will play in the organization. Over time, these defaults harden into strategy, whether they were chosen deliberately or not.

The organizations that succeed won’t be the ones with the most confident predictions. They’ll be the ones that stay alert, maintain their heading, and intervene early enough to steer, before convenience starts masquerading as strategy.

Before moving on, take a moment to reflect:

- Which of the four futures feels closest to where you’re already heading?

- Where is trust in AI assumed rather than explicitly decided?

- Which decisions are being optimized for speed, and which truly require certainty?

- If nothing changes over the next 12 months, where do you realistically end up?

These questions are the real value of scenario planning. They make trade-offs visible and surface choices that are already being made quietly.

Coming Next: Building Your Own Scenario Plan

In Part 3, we’ll show you how to apply this framework within your own organization. You’ll learn how to:

- Identify the uncertainties that matter most to you

- Map current practices against multiple plausible futures

- Make near-term decisions that hold up under uncertainty

Because the future of market research won’t be decided by GenAI alone. It will be shaped by how intentionally leaders choose to respond to it.
