Google today announced a technical breakthrough in intent understanding that could fundamentally alter how search engines anticipate user needs across 300 million Android devices. The company’s research team published findings showing that multimodal AI models with fewer than 10 billion parameters can analyze sequences of mobile device interactions and accurately predict user goals without sending screen data to remote servers.

The research paper “Small Models, Big Results: Achieving Superior Intent Extraction Through Decomposition,” presented at EMNLP 2025, introduces a two-stage workflow that processes user interface trajectories entirely on mobile devices. The models run locally on smartphones, analyzing what users see and do without transmitting screen content to Google’s cloud infrastructure. This on-device architecture addresses privacy concerns while enabling sophisticated intent prediction.

“By decomposing trajectories into individual screen summaries, our small models achieve results comparable to large models at a fraction of the cost,” stated the research team led by software engineers Danielle Cohen and Yoni Halpern in the announcement published on the Google Research blog. The technical development arrives as Google’s AI Max features increasingly rely on inferred intent matching rather than explicit keyword targeting.

The privacy implementation differs significantly from cloud-based AI systems. While the models can “see” screen content, images, and user actions, this processing occurs within the device itself. The AI analyzes interface elements, text, and interaction patterns locally without creating copies that are transmitted to external servers. This architecture mirrors Google’s approach to scam detection, where on-device machine learning models analyze content while maintaining end-to-end encryption.

Technical specifications reveal the models contain fewer than 10 billion parameters, small enough to fit in smartphone memory and execute on mobile processors. Large language models typically exceed 100 billion parameters and require datacenter infrastructure, making them impractical for real-time on-device processing. The size constraint represents both an engineering achievement and a privacy design decision.
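
To make the memory argument concrete, the rough arithmetic can be sketched as follows. This is only a back-of-the-envelope estimate of weight storage: the bit widths (16, 8, and 4 bits per weight) are assumptions for illustration, since the article does not say how the models are quantized, and the function name `weight_memory_gb` is hypothetical. Activations and runtime overhead are ignored.

```python
def weight_memory_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights alone, in decimal gigabytes."""
    bytes_total = num_parameters * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Parameter counts from the article; bit widths are illustrative assumptions.
    for label, params in [("10B-parameter model", 10e9), ("100B-parameter model", 100e9)]:
        for bits in (16, 8, 4):
            size = weight_memory_gb(params, bits)
            print(f"{label} at {bits}-bit weights: about {size:.0f} GB")
```

Under these assumptions, a model at the 10-billion-parameter ceiling needs a few gigabytes at low precision, while a 100-billion-parameter model needs tens to hundreds of gigabytes, which is consistent with the article's point about datacenter infrastructure.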

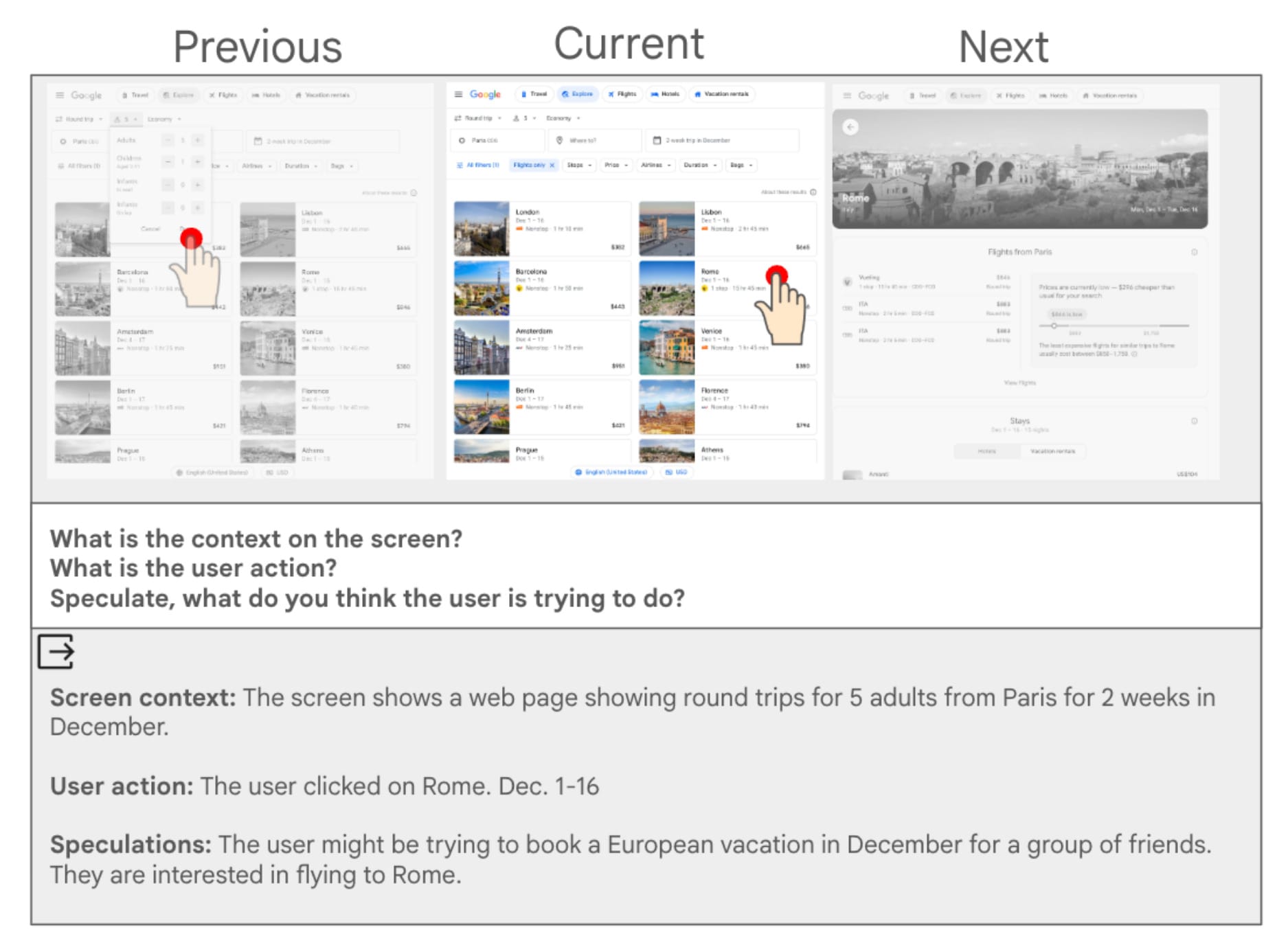

The system analyzes three consecutive screens (previous, current, and next) to understand context and user actions. For each interaction, the model generates summaries answering three questions: What is the relevant screen context? What did the user just do? What is the user trying to accomplish? This structured approach allows the model to build a comprehensive understanding from fragmented interface elements and user gestures.
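
The three-screen window can be illustrated with a short sketch. It assumes a trajectory is simply an ordered list of screens and that missing neighbors at the start and end are represented as `None`; the function name `screen_windows` and that edge handling are illustrative choices, not details from the paper.

```python
from typing import List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def screen_windows(screens: Sequence[T]) -> List[Tuple[Optional[T], T, Optional[T]]]:
    """Pair every screen with its previous and next neighbors.

    The first screen has no previous neighbor and the last has no next
    neighbor, so those slots are filled with None (an assumption).
    """
    windows = []
    for i, current in enumerate(screens):
        previous = screens[i - 1] if i > 0 else None
        following = screens[i + 1] if i + 1 < len(screens) else None
        windows.append((previous, current, following))
    return windows


if __name__ == "__main__":
    for window in screen_windows(["search results", "Rome listing", "checkout"]):
        print(window)
```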

A practical example from the research demonstrates the system’s capabilities. When a user views round-trip flight options from Paris to various European destinations for five adults traveling for two weeks in December, then clicks on a Rome listing dated December 1-16, the system generates this analysis: “Screen context: The screen shows a web page displaying round trips for 5 adults from Paris for 2 weeks in December. User action: The user clicked on Rome. Dec. 1-16. Speculations: The user might be trying to book a European vacation in December for a group of friends. They are interested in flying to Rome.”

The decomposed workflow first processes each screen independently through a small multimodal language model. These individual summaries then feed into a fine-tuned model that extracts a single sentence describing the user’s overall intent. The second-stage model undergoes specific training techniques to avoid hallucination, that is, generating information not present in the input data.
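
The shape of the two-stage workflow can be sketched as plain function composition. In this minimal sketch the two models are passed in as callables, and `toy_summarize` and `toy_extract` are hypothetical stand-ins that do no real inference; the paper's actual prompts and model interfaces are not reproduced here.

```python
from typing import Callable, List, Sequence


def extract_intent(
    interactions: Sequence[str],
    summarize: Callable[[str], str],
    extract: Callable[[List[str]], str],
) -> str:
    """Stage 1 summarizes each interaction on its own; stage 2 sees only the summaries."""
    summaries = [summarize(interaction) for interaction in interactions]
    return extract(summaries)


def toy_summarize(interaction: str) -> str:
    # Hypothetical stand-in for the small multimodal model.
    return f"User action: {interaction}"


def toy_extract(summaries: List[str]) -> str:
    # Hypothetical stand-in for the fine-tuned intent extraction model.
    return f"Intent inferred from {len(summaries)} summaries"


if __name__ == "__main__":
    steps = ["viewed round trips from Paris", "clicked on Rome, Dec. 1-16"]
    print(extract_intent(steps, toy_summarize, toy_extract))
```

Keeping the stages behind separate callables mirrors the decomposition described above: the second stage never receives raw screen content, only text summaries.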

Label preparation techniques remove any information from the training labels that does not appear in the screen summaries. “Because the summaries may be missing information, if we train with the full intents, we inadvertently teach the model to fill in details that aren’t present,” the paper explains. This constraint forces the model to work only with observable data rather than inventing plausible details.
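
The idea behind label preparation can be illustrated with a deliberately naive sketch. It assumes the reference intent has already been split into short facts and treats a fact as "present" when all of its content words occur somewhere in the summaries. That word-overlap test, the stopword list, and the function names are assumptions made for illustration only; the article does not describe the matching mechanism the researchers used.

```python
import re
from typing import List, Set

# Small illustrative stopword list (an assumption, not from the paper).
STOPWORDS = {"a", "an", "the", "to", "for", "in", "of", "on"}


def content_words(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def prepare_label(label_facts: List[str], summaries: List[str]) -> List[str]:
    """Keep only the label facts whose content words all appear in the summaries."""
    observed = content_words(" ".join(summaries))
    return [fact for fact in label_facts if content_words(fact) <= observed]


if __name__ == "__main__":
    facts = ["flight to Rome", "for 5 adults", "business class"]
    summaries = ["The user clicked on Rome flight", "Round trips for 5 adults from Paris"]
    # "business class" never appears in the summaries, so it is dropped from the label.
    print(prepare_label(facts, summaries))
```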

The system deliberately drops speculation during the second stage despite requesting it during initial screen analysis. The research found that asking models to speculate about user goals improves individual screen summaries but confuses the intent extraction process. “While this may seem counterintuitive – asking for speculations in the first stage only to drop them in the second – we find this helps improve performance,” according to the methodology section.
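
One way to picture the dropped speculation is as a field that exists in each first-stage summary but is left out when the second-stage input is assembled. The `ScreenSummary` dataclass, its field names, and the output format below are hypothetical; they only illustrate the idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScreenSummary:
    context: str       # answer to "What is the relevant screen context?"
    action: str        # answer to "What did the user just do?"
    speculation: str   # answer to "What is the user trying to accomplish?"


def stage_two_input(summaries: List[ScreenSummary]) -> str:
    """Render summaries for the intent extractor, omitting the speculation field."""
    lines = []
    for index, summary in enumerate(summaries, start=1):
        lines.append(
            f"Step {index}. Screen context: {summary.context} User action: {summary.action}"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    summary = ScreenSummary(
        context="Round trips for 5 adults from Paris for 2 weeks in December.",
        action="The user clicked on Rome. Dec. 1-16.",
        speculation="The user might be trying to book a European vacation.",
    )
    print(stage_two_input([summary]))
```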

Technical evaluation employs a Bi-Fact approach that decomposes both reference and predicted intents into atomic facts, details that cannot be broken down further. For instance, “a one-way flight” constitutes one atomic fact, while “a flight from London to Kigali” represents two atomic facts. The system counts how many reference facts appear in predicted intents and how many predicted facts the reference intent supports. The first count yields recall and the second yields precision.
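
The precision and recall arithmetic can be sketched once both intents are reduced to sets of atomic facts. This sketch assumes a fact is "covered" only by an exact string match, a simplification for illustration; the article does not specify how coverage is judged, and the function name `bi_fact_scores` is hypothetical.

```python
from typing import Dict, Set


def bi_fact_scores(reference_facts: Set[str], predicted_facts: Set[str]) -> Dict[str, float]:
    """Precision, recall, and F1 over atomic facts, using exact match as coverage."""
    matched = reference_facts & predicted_facts
    precision = len(matched) / len(predicted_facts) if predicted_facts else 0.0
    recall = len(matched) / len(reference_facts) if reference_facts else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    reference = {"one-way flight", "flight from London", "flight to Kigali"}
    predicted = {"flight from London", "flight to Kigali", "travel in December"}
    print(bi_fact_scores(reference, predicted))
```

With exact matching, the two directions share a numerator. With a softer notion of support, the number of reference facts found in the prediction and the number of predicted facts supported by the reference can differ, which is why the method counts both directions.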

This granular evaluation method enables tracking how information flows through both processing stages. Propagation error analysis shows where the system loses accuracy. Of 4,280 ground truth facts in the test data, 16% were missed during interaction summarization. Another 18% were lost during intent extraction. The precision analysis revealed that 20% of predicted facts came from incorrect or irrelevant information in interaction summaries that the extraction stage failed to filter.
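
The loss figures can be turned into approximate fact counts with a few lines of arithmetic. This sketch assumes both percentages are fractions of the full set of 4,280 ground truth facts; the article does not say whether the 18% is measured against the total or against the facts that survived summarization, so the derived numbers are illustrative only.

```python
from typing import Dict


def propagation_breakdown(
    total_facts: int, stage_one_miss: float, stage_two_miss: float
) -> Dict[str, float]:
    """Split ground truth facts into losses per stage, assuming both rates apply to the total."""
    lost_in_summaries = round(total_facts * stage_one_miss)
    lost_in_extraction = round(total_facts * stage_two_miss)
    surviving = total_facts - lost_in_summaries - lost_in_extraction
    return {
        "lost_in_summaries": lost_in_summaries,
        "lost_in_extraction": lost_in_extraction,
        "surviving": surviving,
        "recall": surviving / total_facts,
    }


if __name__ == "__main__":
    # Figures from the article: 4,280 facts, 16% and 18% loss rates.
    for name, value in propagation_breakdown(4280, 0.16, 0.18).items():
        print(name, value)
```

Under that assumption, roughly two thirds of the reference facts make it through both stages.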

The decomposed approach outperformed two natural baselines across all tested configurations. Chain-of-thought prompting and end-to-end fine-tuning both showed lower Bi-Fact F1 scores than the two-stage decomposition method. The advantage held when researchers tested on both mobile device and web trajectories using Gemini and Qwen2 base models.

Most significantly, applying the decomposed method with Gemini 1.5 Flash 8B achieved results comparable to using Gemini 1.5 Pro, a significantly larger model. The Flash 8B model contains roughly 8 billion parameters while Pro exceeds 100 billion parameters, representing more than a 10x difference in model size. This performance parity at a fraction of the computational cost demonstrates that architectural choices and training methodologies can compensate for raw parameter count in specialized tasks.

The research builds on Google’s ongoing work in user intent understanding, which has become increasingly important as the company develops assistive features for mobile devices. Google’s broader keyword matching system already employs BERT (Bidirectional Encoder Representations from Transformers) to understand context beyond literal word matching. The current research extends this capability to visual interface elements and gestural interactions.

For mobile advertising platforms, the technology presents new opportunities and challenges. Traditional keyword targeting assumes users explicitly state their needs through search queries. Intent understanding from UI trajectories enables prediction before any query formulation occurs. A user browsing European vacation options might receive travel-related suggestions before typing “flights to Rome” into a search bar.

The distinction between explicit search intent and inferred behavioral intent has significant implications for attribution modeling. When AI Max features already match advertisements to inferred intent rather than typed queries, adding pre-query intent prediction creates additional complexity in determining which exposure influenced user decisions.

Pedro Dias (@pedrodias) wrote on January 22, 2026: “Google is preparing for intent analysis on steroids! For someone in SEO, this research signals a shift in how search engines and operating systems might understand user intent before a query is even typed.” https://t.co/eSnbMBbyAZ

The on-device processing architecture addresses data sensitivity concerns that arise when AI systems analyze personal communications, financial transactions, or health information visible in mobile interfaces. The entire workflow executes locally: screen analysis, summary generation, and intent extraction all occur within device memory without creating server-accessible copies. This differs significantly from cloud-based AI assistants that transmit queries and context to remote systems for processing.

However, on-device processing does not eliminate all privacy concerns. The AI models installed on devices can analyze any screen content the device displays, including sensitive information. Users have no technical mechanism to prevent the on-device model from “seeing” specific applications or content types while allowing analysis of others. The privacy protection stems from preventing transmission rather than limiting observation.

The research team identified several technical constraints governing model performance. Text content in screen summaries cannot exceed roughly 1,000 tokens per interaction. The system processes a maximum of 20 consecutive screens before truncating older interactions. These limits reflect practical memory constraints on mobile devices where multiple applications compete for limited computational resources.
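
The two limits can be sketched as a simple truncation step. In this sketch whitespace-separated words stand in for model tokens, which is an assumption, since a real tokenizer would count differently. The constant and function names are hypothetical, and the values 20 and 1,000 are the figures quoted above.

```python
from typing import List

MAX_SCREENS = 20                   # most recent screens kept, per the article
MAX_TOKENS_PER_INTERACTION = 1000  # approximate per-interaction text budget


def truncate_trajectory(
    summaries: List[str],
    max_screens: int = MAX_SCREENS,
    max_tokens: int = MAX_TOKENS_PER_INTERACTION,
) -> List[str]:
    """Keep the most recent screens and cap the text length of each one.

    Whitespace splitting is a rough proxy for tokenization (an assumption).
    """
    recent = summaries[-max_screens:] if max_screens > 0 else []
    return [" ".join(text.split()[:max_tokens]) for text in recent]


if __name__ == "__main__":
    trajectory = [f"screen {i}" for i in range(25)]
    kept = truncate_trajectory(trajectory)
    print(len(kept), kept[0], kept[-1])
```

Dropping the oldest screens first reflects the article's description that older interactions are truncated once the 20-screen limit is reached.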

The evaluation datasets came from publicly available automation datasets containing examples that pair user intents with action sequences. The research team did not collect new user data specifically for this project, instead relying on existing datasets that document how people interact with web browsers and mobile applications to accomplish specific goals.

Model performance varied based on task complexity. Simple transactional intents like “book a flight to London” achieved higher accuracy than complex research tasks like “compare European vacation destinations for a December group trip within a $5,000 budget.” The system struggled most with ambiguous interactions where user intent remained unclear even when analyzing multiple consecutive screens.

The research acknowledges fundamental challenges in trajectory summarization. Human evaluators sometimes disagree about user intent when analyzing identical screen sequences, suggesting the task contains inherent ambiguity. A user viewing concert listings might be researching entertainment options, planning a specific outing, or simply browsing without purchase intent. Distinguishing these scenarios requires additional context not always visible in interface elements.

Future development directions include expanding the system’s capability to handle longer interaction sequences and improving accuracy on complex multi-step tasks. The team expressed interest in applying these techniques to other interface understanding problems beyond intent extraction, such as automated user interface testing and accessibility assistance.

The timing of this research coincides with broader industry adoption of small language models for specialized tasks. NVIDIA published research in August 2025 showing that models with fewer than 10 billion parameters can effectively handle 60-80% of AI agent tasks currently assigned to models exceeding 70 billion parameters, suggesting parameter efficiency has become a critical focus across major AI research organizations.

Google’s implementation strategy emphasizes gradual deployment. The research paper represents foundational work rather than an immediate product launch. “Ultimately, as models improve in performance and mobile devices acquire more processing power, we hope that on-device intent understanding can become a building block for many assistive features on mobile devices going forward,” according to the conclusion section.

The research team included software engineers Danielle Cohen and Yoni Halpern, along with coauthors Noam Kahlon, Joel Oren, Omri Berkovitch, Sapir Caduri, Ido Dagan, and Anatoly Efros. The paper was presented at EMNLP 2025, an academic conference focused on empirical methods in natural language processing.

For search marketing professionals, these developments signal potential shifts in how search engines understand and respond to user needs. The distinction between query-based and behavior-based intent understanding creates new optimization considerations. Content that ranks well for explicit search queries may not surface in contexts where systems predict intent from interface interactions alone.

The research also highlights tensions between helpful personalization and user privacy. Systems that accurately predict user needs before explicit requests require analyzing on-screen information continuously. Google’s emphasis on on-device processing addresses transmission privacy concerns but does not eliminate questions about the extent to which AI systems should monitor user behavior to improve service quality.

Mobile operating system integration represents another strategic consideration. As AI Mode features spread across Android devices, operating system vendors gain unprecedented visibility into user interactions across all installed applications. The research’s small model approach enables this capability while maintaining reasonable battery life and processing speeds.

The technical achievement demonstrates that multimodal understanding, meaning the simultaneous processing of visual interface elements and textual content, no longer requires massive computational resources. This democratization of multimodal AI capabilities may accelerate development of competing systems from other technology companies seeking similar intent understanding features for their mobile platforms.

Industry observers have noted Google’s aggressive AI integration strategy across its product portfolio. Search executives have described AI as “the most profound” transformation in the company’s history, surpassing even the mobile computing transition. Intent understanding from UI trajectories represents one component of this broader transformation toward predictive, assistive computing experiences.

The research paper makes no claims about commercial deployment timelines or integration with existing Google products. The announcement emphasizes scientific contribution and methodological innovation rather than product features. This positioning suggests Google maintains separation between research exploration and product development decisions, though published research often indicates future product directions.

Summary

Who: A Google Research team including software engineers Danielle Cohen and Yoni Halpern, along with coauthors Noam Kahlon, Joel Oren, Omri Berkovitch, Sapir Caduri, Ido Dagan, and Anatoly Efros, announced the research, which affects users of 300 million Android devices.

What: Google developed a two-stage decomposed workflow using small multimodal language models (fewer than 10 billion parameters) to understand user intent from mobile device interaction sequences. The system operates entirely on-device, analyzing screens locally without transmitting content to servers, then extracting overall intent from the generated summaries while achieving performance comparable to models 10 times larger.

When: The announcement was made on January 22, 2026, with the research paper presented at EMNLP 2025. The work builds on earlier intent understanding research from Google’s team.

Where: The technology operates entirely on-device across Android mobile platforms. Processing occurs locally within smartphone memory using device processors, with no screen content transmitted to Google’s cloud infrastructure. The research methodology was tested on both mobile device and web browser trajectories using publicly available automation datasets.

Why: Google aims to enable AI agents that anticipate user needs before queries are typed, supporting assistive features on mobile devices. The on-device processing architecture maintains privacy by preventing transmission of screen content to remote servers while enabling sophisticated intent prediction. The research demonstrates that architectural innovations can achieve performance comparable to large models at a fraction of the computational cost, making advanced AI features practical for battery-powered mobile devices without requiring constant server connectivity.
