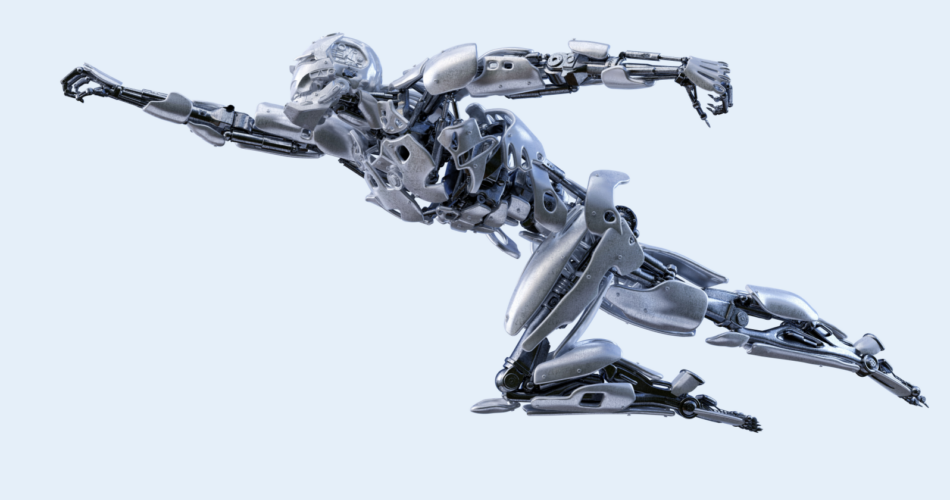

I recently became frustrated while working with Claude, and it led me to an interesting exchange with the platform, which led me to examining my own expectations, actions, and behavior…and that was eye-opening. The short version is I want to keep thinking of AI as an assistant, like a lab partner. In reality, it needs to be seen as a robot in the lab: capable of impressive things, given the right direction, but only within a solid framework. There are still so many things it’s not capable of, and we, as practitioners, sometimes forget this and make assumptions based on what we wish a platform were capable of, instead of grounding them in the reality of its limits.

And while the limits of AI today are truly impressive, they pale in comparison to what people are capable of. Do we sometimes overlook this difference and ascribe human characteristics to AI systems? I bet we all have at one point or another. We’ve assumed accuracy and taken direction. We’ve taken for granted that “this is obvious” and expected the answer to “include the obvious.” And we’re upset when it fails us.

AI often feels human in how it communicates, yet it doesn’t behave like a human in how it operates. That gap between appearance and reality is where most confusion, frustration, and misuse of large language models actually begins. Research into human-computer interaction shows that people naturally anthropomorphize systems that speak, respond socially, or mirror human communication patterns.

This isn’t a failure of intelligence, curiosity, or intent on the part of users. It’s a failure of mental models. People, including highly skilled professionals, often approach AI systems with expectations shaped by how those systems present themselves rather than how they actually work. The result is a steady stream of disappointment that gets misattributed to immature technology, weak prompts, or unreliable models.

The problem is none of these. The problem is expectation.

To understand why, we need to look at two different groups separately. Consumers on one side, and practitioners on the other. They interact with AI differently. They fail differently. But both groups are reacting to the same underlying mismatch between how AI feels and how it actually behaves.

The Consumer Side, Where Perception Dominates

Most consumers encounter AI through conversational interfaces. Chatbots, assistants, and answer engines speak in complete sentences, use polite language, acknowledge nuance, and respond with apparent empathy. This isn’t accidental. Natural language fluency is the core strength of modern LLMs, and it’s the feature users experience first.

When something communicates the way a person does, humans naturally assign it human traits. Understanding. Intent. Memory. Judgment. This tendency is well documented in decades of research on human-computer interaction and anthropomorphism. It’s not a flaw. It’s how people make sense of the world.

From the consumer’s perspective, this mental shortcut usually feels reasonable. They are not trying to operate a system. They’re trying to get help, information, or reassurance. When the system performs well, trust increases. When it fails, the reaction is emotional. Confusion. Frustration. A sense of having been misled.

That dynamic matters, especially as AI becomes embedded in everyday products. But it isn’t where the most consequential failures occur.

Those show up on the practitioner side.

Defining Practitioner Behavior Clearly

A practitioner is not defined by job title or technical depth. A practitioner is defined by accountability.

If you use AI occasionally for curiosity or convenience, you are a consumer. If you use AI repeatedly as part of your job, integrate its output into workflows, and are accountable for downstream outcomes, you are a practitioner.

That includes SEO managers, marketing leaders, content strategists, analysts, product managers, and executives making decisions based on AI-assisted work. Practitioners aren’t experimenting. They’re operationalizing.

And this is where the mental model problem becomes structural.

Practitioners generally don’t treat AI like a person in an emotional sense. They don’t believe it has feelings or consciousness. Instead, they treat it like a colleague in a workflow sense. Often like a capable junior colleague.

That distinction is subtle, but critical.

Practitioners tend to assume that a sufficiently advanced system will infer intent, maintain continuity, and exercise judgment unless explicitly told otherwise. This assumption is not irrational. It mirrors how human teams work. Experienced professionals routinely rely on shared context, implied priorities, and professional intuition.

But LLMs don’t operate that way.

What looks like anthropomorphism in consumer behavior shows up as misplaced delegation in practitioner workflows. Responsibility quietly drifts from the human to the system, not emotionally, but operationally.

You can see this drift in very specific, repeatable patterns.

Practitioners frequently delegate tasks without fully specifying objectives, constraints, or success criteria, assuming the system will infer what matters. They behave as if the model maintains stable memory and ongoing awareness of priorities, even when they know, intellectually, that it doesn’t. They expect the system to take initiative, flag issues, or resolve ambiguities on its own. They overweight fluency and confidence in outputs while underweighting verification. And over time, they begin to describe outcomes as decisions the system made, rather than choices they approved.

None of this is careless. It’s a natural transfer of working habits from human collaboration to system interaction.

The issue is that the system doesn’t own judgment.

Why This Is Not A Tooling Problem

When AI underperforms in professional settings, the instinct is to blame the model, the prompts, or the maturity of the technology. That instinct is understandable, but it misses the core issue.

LLMs are behaving exactly as they were designed to behave. They generate responses based on patterns in data, within constraints, without goals, values, or intent of their own.

They don’t know what matters unless you tell them. They don’t decide what success looks like. They don’t evaluate tradeoffs. They don’t own outcomes.

When practitioners hand off thinking tasks that still belong to humans, failure is not a surprise. It’s inevitable.

This is where thinking of Ironman and Superman becomes useful. Not as pop culture trivia, but as a mental model correction.

Ironman, Superman, And Misplaced Autonomy

Superman operates independently. He perceives the situation, decides what matters, and acts on his own judgment. He stands beside you and saves the day.

That’s how many practitioners implicitly expect LLMs to behave inside workflows.

Ironman works differently. The suit amplifies strength, speed, perception, and endurance, but it does nothing without a pilot. It executes within constraints. It surfaces options. It extends capability. It doesn’t choose goals or values.

LLMs are Ironman suits.

They amplify whatever intent, structure, and judgment you bring to them. They don’t replace the pilot.

Once you see that distinction clearly, a lot of frustration evaporates. The system stops feeling unreliable and starts behaving predictably, because expectations have shifted to match reality.

Why This Matters For SEO And Marketing Leaders

SEO and marketing leaders already operate inside complex systems. Algorithms, platforms, measurement frameworks, and constraints you don’t control are part of daily work. LLMs add another layer to that stack. They don’t replace it.

For SEO managers, this means AI can accelerate research, expand content, surface patterns, and assist with analysis, but it cannot decide what authority looks like, how tradeoffs should be made, or what success means for the business. Those remain human responsibilities.

For marketing executives, this means AI adoption is not primarily a tooling decision. It’s a responsibility placement decision. Teams that treat LLMs as decision makers introduce risk. Teams that treat them as amplification layers scale more safely and more effectively.

The difference is not sophistication. It’s ownership.

The Real Correction

Most advice about using AI focuses on better prompts. Prompting matters, but it’s downstream. The real correction is reclaiming ownership of thinking.

Humans must own goals, constraints, priorities, evaluation, and judgment. Systems can handle expansion, synthesis, speed, pattern detection, and drafting.

When that boundary is clear, LLMs become remarkably effective. When it blurs, frustration follows.

The Quiet Advantage

Here is the part that rarely gets said out loud.

Practitioners who internalize this mental model consistently get better results with the same tools everyone else is using. Not because they’re smarter or more technical, but because they stop asking the system to be something it isn’t.

They pilot the suit, and that’s their advantage.

AI is not taking control of your work. You are not being replaced. What’s changing is where responsibility lives.

Treat AI like a person, and you will be disappointed. Treat it like a system, and you will be limited. Treat it like an Ironman suit, and YOU will be amplified.

The future doesn’t belong to Superman. It belongs to the people who know how to fly the suit.

This post was originally published on Duane Forrester Decodes.

Featured Image: Corona Borealis Studio/Shutterstock