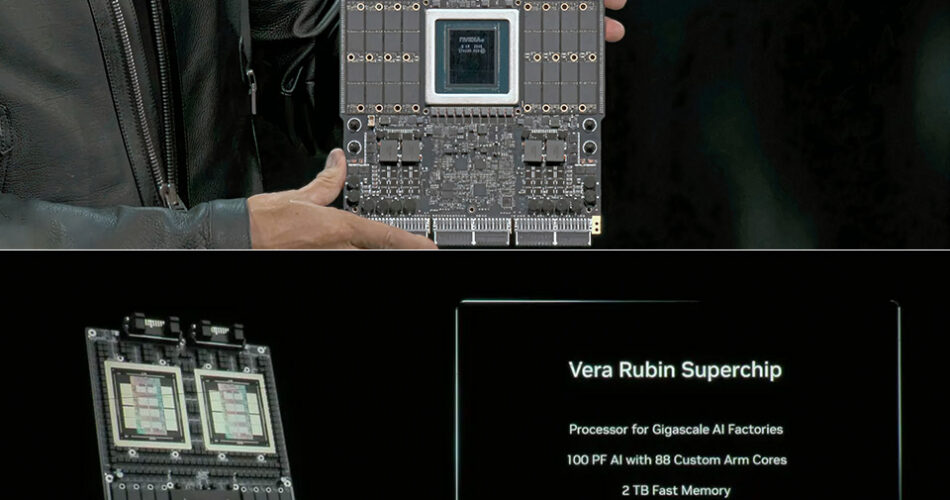

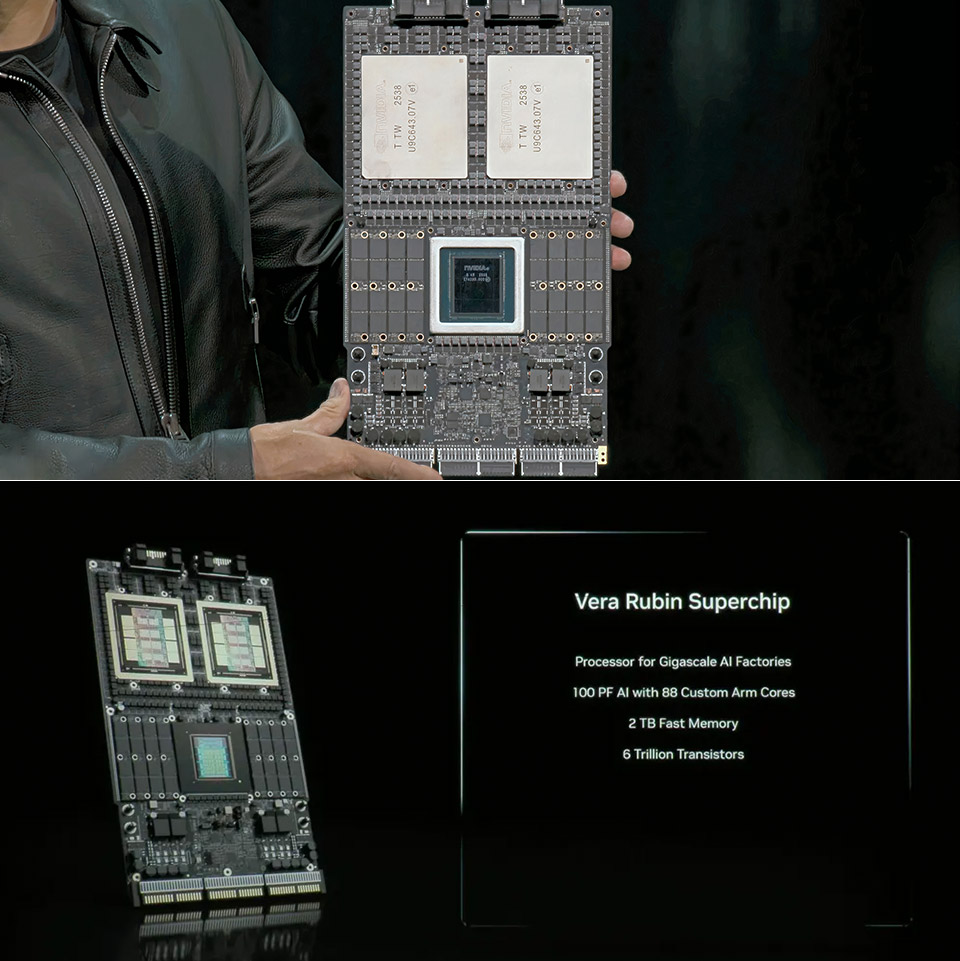

NVIDIA CEO Jensen Huang walked onto the GTC stage in Washington this week holding what seemed like a blueprint for the long run, not a pc half. This was the Vera Rubin Superchip, a flat expanse of circuitry the dimensions of a giant dinner plate with sufficient oomph to deal with the heaviest AI workloads.

Huang known as it a gorgeous laptop and from the close-up pictures on the massive screens behind him, it’s onerous to disagree. On the heart is the Vera CPU, a sq. chip with 88 cores constructed on Arm designs customised by NVIDIA. These cores can deal with 176 threads without delay, to allow them to juggle a number of streams of information with out breaking a sweat. Flanking it are two Rubin GPUs, every one a monster constituted of two massive silicon dies – the sort that stretch to the complete dimension of the instruments used to etch them at factories like TSMC. Every GPU has 288 gigabytes of high-bandwidth HBM4 reminiscence, the quickest accessible, stacked in eight tall towers proper subsequent to the dies.

Across the edges are eight SOCAMM2 slots for LPDDR modules, layers of quick-access system reminiscence that may be swapped out or expanded as wanted. No cables cling off the edges; as a substitute, 5 connectors hug the borders – two up prime for NVLink switches that tie racks collectively, and three under for energy, PCIe lanes and CXL hooks to share knowledge throughout machines.The Rubin GPUs arrived in NVIDIA’s labs simply weeks in the past, stamped from Taiwan in late September. Their cooling plates match the footprint of the Blackwell chips however inside issues get extra advanced. Every GPU breaks down into two compute sections, plus I/O helpers that handle the flood of alerts. The Vera CPU exhibits its personal intelligent meeting: faint traces throughout its floor trace at a number of chiplets pieced collectively, with an additional I/O block tucked close by and odd inexperienced traces spilling from its pads – maybe ties to hidden helpers beneath. This enables the entire package deal to hyperlink the CPU to its GPUs at 1.8 terabytes per second by NVLink-C2C paths, three separate brains into one.

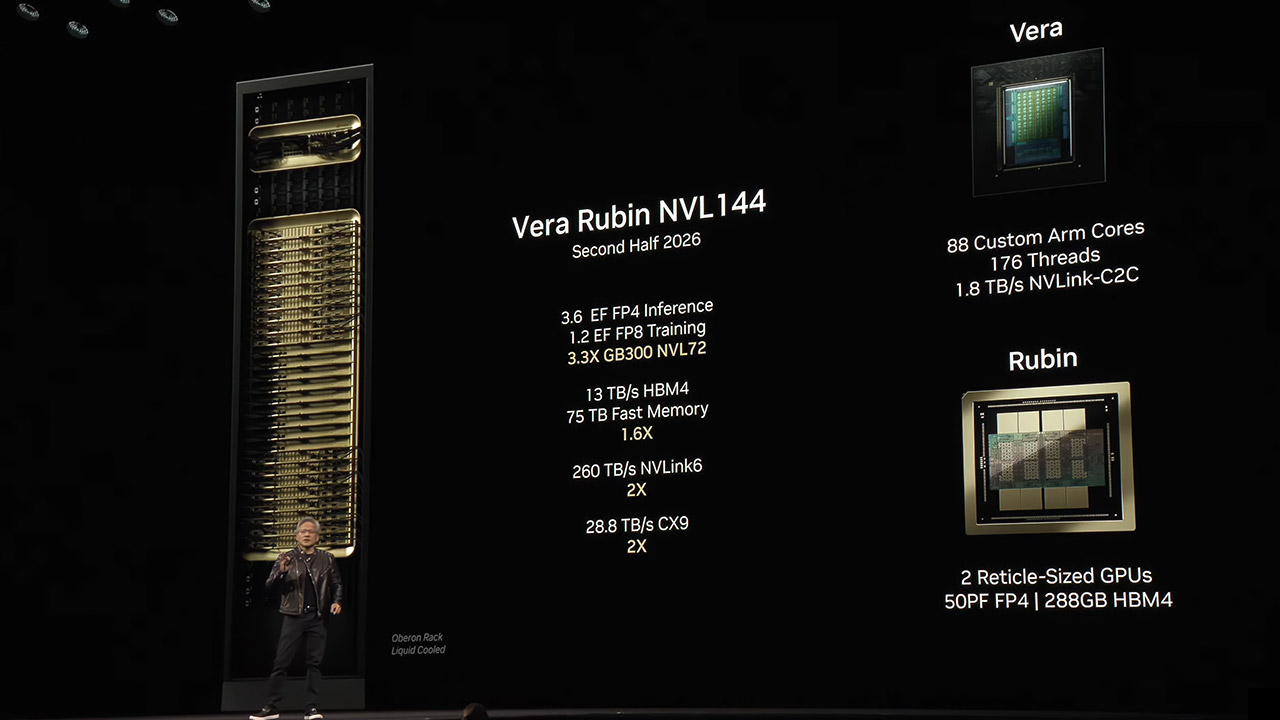

In a full rack, known as NVL144, 144 of those GPU dies workforce up throughout trays that additionally pack eight ConnectX-9 community playing cards and a Bluefield-4 knowledge processor to maintain every thing in sync. Huang famous the rack’s bandwidth is equal to a full second of worldwide web visitors, a scale that turns odd servers into one thing a lot larger.

A single Vera Rubin system manages 100 petaflops of FP4 calculations, the sort that give AI pattern-matching a efficiency increase with out sacrificing accuracy. Once you scale that as much as the NVL144 rack, nevertheless, issues get actually loopy – 3.6 exaflops for inference – the bit the place fashions in your favorite chatbots or picture mills truly strive to determine what they’re – and 1.2 exaflops for FP8 coaching, the place the system tries to study one thing from a gazillion datasets. And get this – reminiscence flows in at a blinding 13 terabytes per second due to the large HBM4 stacks, with a whopping 75 terabytes of quick storage stashed away for emergencies. Then there’s the NVLink connections, which are available in at an eye-popping 260 terabytes per second, and the CX9 traces aren’t too shabby both – 28.8 terabytes per second. Now, while you pit this towards the GB300 Blackwell racks which are simply beginning to roll out, issues develop into fairly clear – you’re thrice the uncooked velocity, 60% extra reminiscence and double the bandwidth for ferrying knowledge across the system. Early builders who’ve received their palms on the expertise are already getting a very good take a look at simply how a lot it could possibly lower down wait occasions on huge simulations – assume drug discovery and local weather modeling.

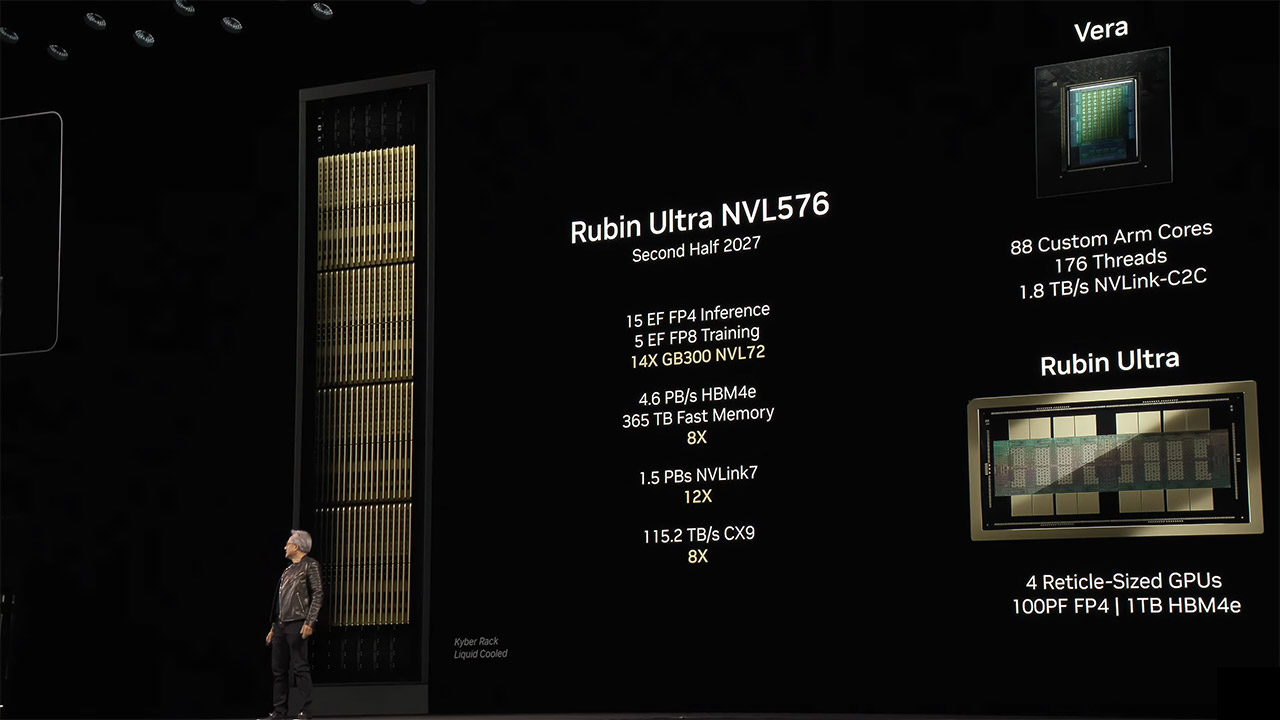

Mass manufacturing of Vera Rubin is ready to kick-off someday within the third or fourth quarter of 2026, across the similar time because the Blackwell Extremely begins hitting the cabinets. Then, in late 2027, comes Rubin Extremely, an NVL576 rack that places 4 GPUs on every package deal as a substitute of two – with a cool terabyte of HBM4e reminiscence unfold out over 16 stacks. It’s a giant deal – we’re speaking 15 exaflops in FP4 inference and 5 exaflops in FP8 coaching – that’s 14 occasions the facility of immediately’s GB300 setups. And get this – reminiscence bandwidth is an insane 4.6 petabytes per second, and complete storage is a whoppin 365 terabytes. NVLink and CX9 connections are additionally seeing a critical increase, as much as 1.5 petabytes per second and 115.2 terabytes per second respectively, which ought to let whole knowledge centres run like a well-oiled machine. And hey, should you thought that was thrilling, NVIDIA’s received much more methods up its sleeve – together with the Feynman chips which are speculated to arrive in 2028, operating on even finer silicon processes than earlier than.

[Source]

Source link