- Anthropic’s Claude chatbot now has an on-demand memory feature

- The AI will recall past chats only when a user specifically asks

- The feature is rolling out first to Max, Team, and Enterprise subscribers before expanding to other plans

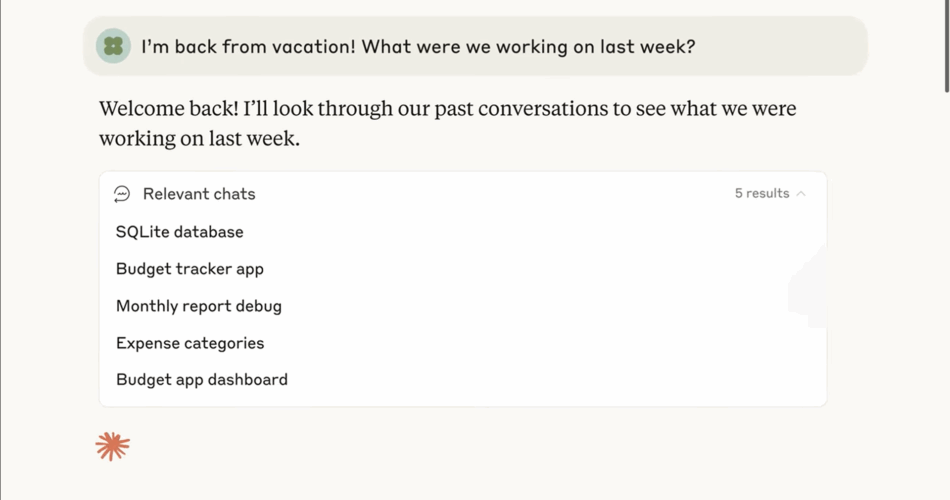

Anthropic has given Claude a memory upgrade, but it will only activate when you choose. The new feature allows Claude to recall past conversations, giving the AI chatbot the information it needs to continue previous projects and apply what you’ve discussed before to your next conversation.

The update is coming to Claude’s Max, Team, and Enterprise subscribers first, though it will likely be more widely available at some point. If you have it, you can ask Claude to search for previous messages tied to your workspace or project.

However, unless you explicitly ask, Claude won’t cast an eye backward. That means Claude will maintain a generic sort of personality by default. That’s for the sake of privacy, according to Anthropic. Claude can recall your discussions if you want, without creeping into your dialogue uninvited.

By comparison, OpenAI’s ChatGPT automatically stores past chats unless you opt out, and uses them to shape its future responses. Google Gemini goes even further, drawing on both your conversations with the AI and your Search history and Google account data, at least if you let it. Claude’s approach doesn’t pick up the breadcrumbs from earlier talks unless you ask it to.

Claude remembers

Adding memory may not seem like a big deal. Still, you’ll feel the impact immediately if you’ve ever tried to restart a project interrupted by days or weeks without a helpful assistant, digital or otherwise. Making it an opt-in choice is a nice touch in accommodating how comfortable people are with AI currently.

Many may want AI help without surrendering control to chatbots that never forget. Claude sidesteps that tension cleanly by making memory something you summon deliberately.

But it’s not magic. Since Claude doesn’t retain a personalized profile, it won’t proactively remind you to prepare for events mentioned in other chats or anticipate style shifts when writing to a colleague versus a public business presentation, unless prompted mid-conversation.

Further, if there are issues with this approach to memory, Anthropic’s staged rollout will allow the company to correct any mistakes before the feature becomes widely available to all Claude users. It will also be worth seeing whether building long-term context, as ChatGPT and Gemini do, proves more appealing or more off-putting to users than Claude’s way of making memory an on-demand part of using the AI chatbot.

And that assumes it works perfectly. Retrieval depends on Claude’s ability to surface the right excerpts, not just the most recent or longest chat. If summaries are fuzzy or the context is wrong, you might end up more confused than before. And while the friction of having to ask Claude to use its memory is supposed to be a benefit, it still means you’ll have to remember that the feature exists, which some may find annoying. Even so, if Anthropic is right, a little boundary is a good thing, not a limitation. And users will be happy that Claude remembers that, and nothing else, without a request.
