NVIDIA Research published findings on June 2, 2025, challenging the industry’s $57 billion investment in large language model infrastructure through a comprehensive analysis showing that small language models (SLMs) achieve comparable performance for the majority of enterprise agentic AI applications while requiring significantly fewer computational resources.

The paper Small Language Models are the Future of Agentic AI presents evidence that models with fewer than 10 billion parameters can effectively handle 60-80% of AI agent tasks currently assigned to models exceeding 70 billion parameters. According to the research team led by Peter Belcak and seven co-authors from NVIDIA Research and Georgia Institute of Technology, “small language models (SLMs) are sufficiently powerful, inherently more suitable, and necessarily more economical for many invocations in agentic systems, and are therefore the future of agentic AI.”

The timing coincides with unprecedented infrastructure investment in large language model deployment. Industry data shows $57 billion in cloud infrastructure investment during 2024 to support LLM API serving valued at $5.6 billion, creating a ten-fold disparity between infrastructure costs and market revenue. According to the paper, “The ten-fold discrepancy between investment and market size has been accepted, because it is assumed that this operational model will remain the cornerstone of the industry without any substantial alterations.”

Technical performance metrics challenge scaling assumptions

The research demonstrates specific performance advantages across multiple benchmark categories. Microsoft’s Phi-3 model with 7 billion parameters achieves language understanding and code generation scores comparable to 70 billion parameter models of the same generation. NVIDIA’s Nemotron hybrid Mamba-Transformer models with 2 to 9 billion parameters deliver instruction following and code generation accuracy matching dense 30 billion parameter LLMs while requiring an order of magnitude fewer inference FLOPs.

“Phi-2 (2.7bn) achieves commonsense reasoning scores and code generation scores on par with 30bn models while running ∼15× faster,” according to the study. DeepSeek’s R1-Distill series demonstrates particularly strong results, with the 7 billion parameter Qwen model outperforming proprietary models including Claude-3.5-Sonnet-1022 and GPT-4o-0513 on reasoning benchmarks.

Salesforce’s xLAM-2-8B model achieves state-of-the-art performance on tool calling despite its relatively modest size, surpassing frontier models like GPT-4o and Claude 3.5. The research team notes that these achievements reflect advances in training methodologies rather than merely parameter scaling.

Economic analysis reveals operational cost advantages

The study presents a detailed economic analysis showing that SLMs provide 10-30 times lower inference costs compared to large language models. “Serving a 7bn SLM is 10–30× cheaper (in latency, energy consumption, and FLOPs) than a 70–175bn LLM, enabling real-time agentic responses at scale.”
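As a rough illustration of how these figures combine, the sketch below estimates the serving cost of a mixed system relative to an all-LLM baseline. It is a back-of-the-envelope model, not a calculation from the paper: it assumes the 60-80% task share cited earlier in this article can be read as a share of invocations, and that every call of a given model class costs the same. The function name `blended_cost_fraction` is hypothetical.

```python
def blended_cost_fraction(slm_share: float, cost_ratio: float) -> float:
    """Relative serving cost of a mixed SLM/LLM system (all-LLM baseline = 1.0).

    slm_share:  fraction of invocations handled by the SLM (0..1)
    cost_ratio: how many times cheaper one SLM call is than one LLM call
    """
    if not 0.0 <= slm_share <= 1.0:
        raise ValueError("slm_share must be between 0 and 1")
    if cost_ratio <= 0:
        raise ValueError("cost_ratio must be positive")
    # LLM calls keep their full cost; SLM calls cost 1/cost_ratio each.
    return (1.0 - slm_share) + slm_share / cost_ratio


# Conservative and optimistic ends of the ranges quoted in the article.
low = blended_cost_fraction(slm_share=0.60, cost_ratio=10)
high = blended_cost_fraction(slm_share=0.80, cost_ratio=30)
print(f"conservative: {low:.2f} of all-LLM cost")   # 0.46
print(f"optimistic:   {high:.2f} of all-LLM cost")  # 0.23
```

Under these simplifying assumptions, the remaining LLM calls dominate the bill, so the share of work moved to SLMs matters more than the exact per-call cost ratio.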

Fine-tuning efficiency demonstrates additional advantages. Parameter-efficient techniques like LoRA and DoRA require only GPU-hours for SLM customization compared to weeks for large models. Edge deployment capabilities enable consumer-grade GPU execution with lower latency and enhanced data control through systems like ChatRTX.

The research identifies parameter utilization efficiency as a fundamental advantage. Large language models operate with sparse activation patterns, engaging only fractions of their parameters for individual inputs. “That this behavior appears to be more subdued in SLMs suggests that SLMs may be fundamentally more efficient by the virtue of having a smaller proportion of their parameters contribute to the inference cost without a tangible effect on the output.”

Modular system architecture enables specialized deployment

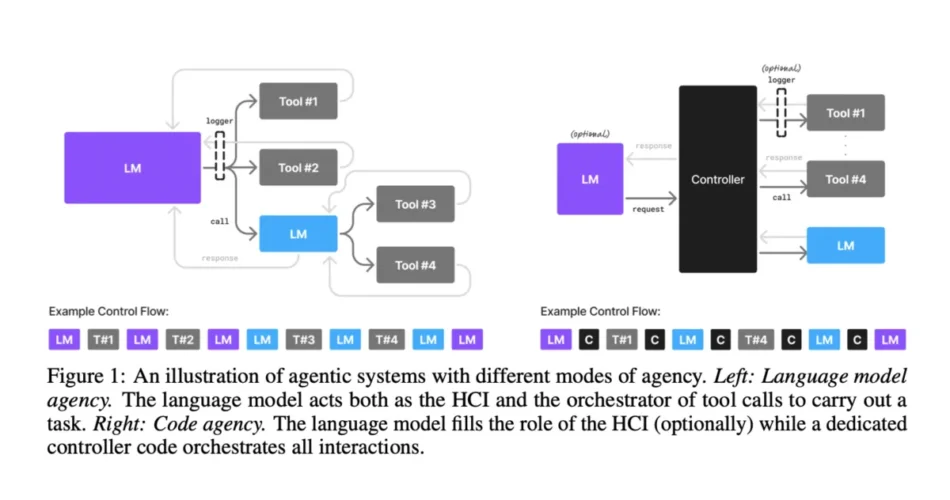

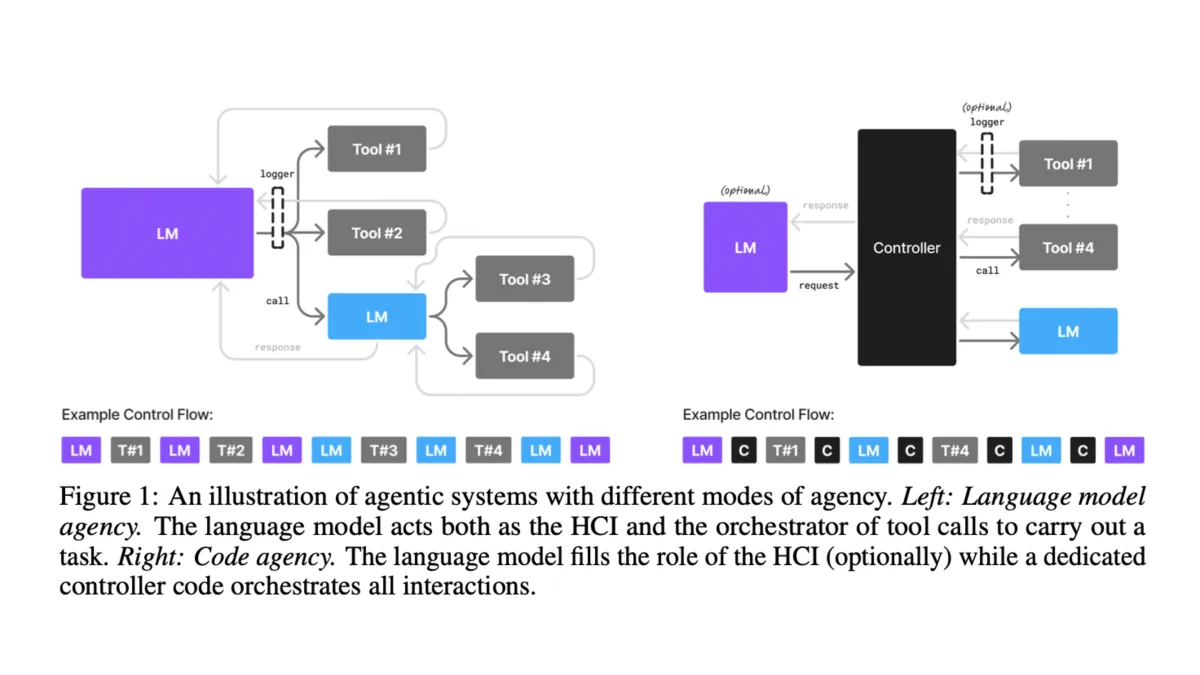

NVIDIA proposes heterogeneous agentic systems combining SLMs for routine tasks with selective LLM invocation for complex reasoning. “We further argue that in situations where general-purpose conversational abilities are essential, heterogeneous agentic systems (i.e., agents invoking multiple different models) are the natural choice.”
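A minimal sketch of what such a heterogeneous setup could look like in code follows. It is an illustration only, not the architecture described in the paper: the class `HeterogeneousAgent`, the task labels, and the stand-in model functions are all hypothetical, and a real system would call actual inference backends and need a way to classify incoming requests.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Model = Callable[[str], str]  # any callable that maps a prompt to a response


@dataclass
class HeterogeneousAgent:
    """Routes a request to a specialized SLM when one exists, else to a general LLM."""
    slm_by_task: Dict[str, Model]  # hypothetical: task type -> specialized small model
    llm_fallback: Model            # hypothetical: general-purpose large model

    def invoke(self, task_type: str, prompt: str) -> str:
        model = self.slm_by_task.get(task_type, self.llm_fallback)
        return model(prompt)


# Stand-in models so the sketch runs without any real inference backend.
def parse_command_slm(prompt: str) -> str:
    return f"[slm:parse] {prompt}"


def general_llm(prompt: str) -> str:
    return f"[llm] {prompt}"


agent = HeterogeneousAgent(
    slm_by_task={"command_parsing": parse_command_slm},
    llm_fallback=general_llm,
)
routine = agent.invoke("command_parsing", "open settings")
open_ended = agent.invoke("open_dialogue", "explain the trade-offs")
print(routine)
print(open_ended)
```

Keeping the LLM as the default fallback means unrecognized task types are never dropped; they simply cost more to serve.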

Case studies examine potential replacement scenarios across popular open-source agents. MetaGPT shows 60% replacement potential for routine code generation and structured response tasks. Open Operator demonstrates 40% replacement capability for command parsing and template-based message generation. Cradle achieves 70% replacement potential for repetitive GUI interaction workflows.

The research team provides a six-step algorithm for converting LLM-based agents to SLM implementations. The process begins with secured usage data collection, followed by data curation to remove sensitive information, task clustering to identify recurring patterns, SLM selection based on capability requirements, specialized fine-tuning, and iterative refinement.
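The early steps of this process can be sketched in a few lines. The example below is a toy version under stated assumptions, not the paper's procedure: the log format, the e-mail-only scrubbing rule, the use of a tool name as the clustering key, and the `min_count` threshold are all invented for illustration. Steps four to six (model selection, fine-tuning, refinement) are listed but not implemented.

```python
import re
from collections import Counter
from typing import Dict, List

# The six steps as summarized in the article.
STEPS = [
    "collect usage data",
    "curate and scrub sensitive information",
    "cluster tasks",
    "select an SLM",
    "fine-tune the SLM",
    "iterate and refine",
]

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def scrub(prompt: str) -> str:
    """Step 2 (toy version): mask e-mail addresses before the data is reused."""
    return EMAIL.sub("<email>", prompt)


def cluster_by_tool(calls: List[Dict[str, str]]) -> Counter:
    """Step 3 (toy version): group logged calls by the tool they invoked."""
    return Counter(call["tool"] for call in calls)


def candidate_tasks(calls: List[Dict[str, str]], min_count: int = 2) -> List[str]:
    """Tasks that recur often enough to consider a specialized SLM (arbitrary threshold)."""
    return sorted(t for t, n in cluster_by_tool(calls).items() if n >= min_count)


# Hypothetical logged agent calls (step 1 would collect these securely).
logged = [
    {"tool": "parse_command", "prompt": "mail bob@example.com the report"},
    {"tool": "parse_command", "prompt": "open the dashboard"},
    {"tool": "summarize", "prompt": "summarize this thread"},
]
curated = [{**c, "prompt": scrub(c["prompt"])} for c in logged]
print(curated[0]["prompt"])      # mail <email> the report
print(candidate_tasks(curated))  # ['parse_command']
```

A production pipeline would need far broader sensitive-data filtering and a real clustering method over prompt content; the point here is only the shape of the data flow from logs to candidate tasks.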

Industry adoption barriers reflect infrastructure investment

The study acknowledges significant barriers to SLM adoption despite technical advantages. “Large amounts of upfront investment into centralized LLM inference infrastructure” represents the primary obstacle, as substantial capital commitments create industry inertia favoring existing approaches.

Evaluation methodology presents additional challenges. Current SLM development follows LLM design patterns, focusing on generalist benchmarks rather than agentic utility metrics. “If one focuses solely on benchmarks measuring the agentic utility of agents, the studied SLMs easily outperform larger models.”

Marketing attention disparities contribute to limited awareness. SLMs receive less promotional intensity compared to large language models despite superior suitability for many industrial applications.

Marketing industry implications align with automation trends

The research findings align with broader marketing automation trends. McKinsey data indicates agentic AI attracted $1.1 billion in equity investment during 2024, with related job postings growing 985% year-over-year. The technology enables marketing teams to automate campaign optimization, audience targeting, and performance analysis without constant human oversight.

Zeta Global’s recent introduction of AI Agent Studio demonstrates practical implementation of agentic workflows. The platform enables users to connect multiple AI agents for complex marketing tasks, moving beyond isolated operations toward integrated workflows. Campaign creation automation includes audience definition, performance forecasting, creative brief generation, and billing process automation.

The shift toward specialized models supports cost-effective marketing automation. Traditional approaches requiring large language models for all agent functions create unnecessary computational overhead. NVIDIA’s research suggests marketing organizations can achieve equivalent functionality through specialized small models while reducing operational expenses significantly.

Perplexity AI’s vision for AI agent-targeted advertising represents a logical extension of these trends. The proposed system would involve AI agents as intermediaries between brands and consumers, with retailers competing for agent attention rather than human attention. This approach aligns with NVIDIA’s argument for specialized, cost-effective models managing routine marketing tasks.

Implementation timeline remains uncertain

The research team acknowledges practical hurdles despite technical readiness. Infrastructure modernization requires overcoming existing investment commitments while developing specialized evaluation frameworks. Advanced inference scheduling systems like NVIDIA Dynamo reduce barriers through improved flexibility in monolithic computing clusters.

Market adoption depends on overcoming industry inertia and developing appropriate benchmarks for agentic applications. The team commits to publishing correspondence regarding their position through research.nvidia.com/labs/lpr/slm-agents, encouraging broader discussion of AI resource allocation strategies.

Cost pressures will likely accelerate adoption timelines. As AI agents become more prevalent in business operations, the economic advantages of SLM-first architectures create compelling incentives for infrastructure modernization. Organizations seeking competitive advantages through AI deployment may find that specialized small models offer superior cost-effectiveness compared to generalist large language model approaches.

Timeline

- June 2, 2025: NVIDIA Research publishes “Small Language Models are the Future of Agentic AI” paper challenging industry infrastructure investment strategies

- 2024: Industry invests $57 billion in LLM infrastructure supporting $5.6 billion API serving market

- March 27, 2025: Zeta Global launches AI Agent Studio with agentic workflow capabilities for marketing automation

- January 2025: McKinsey reports $1.1 billion equity investment in agentic AI with 985% increase in related job postings

- December 30, 2024: Perplexity AI founder outlines vision for AI agent-targeted advertising models

- 2024: DeepSeek R1-Distill models demonstrate 7B parameter performance exceeding Claude-3.5-Sonnet and GPT-4o on reasoning tasks

- 2024: Microsoft Phi-3 (7B) achieves language understanding matching 70B models of the same generation

- Ongoing: Industry adoption of AI-powered marketing automation platforms accelerates across enterprise environments

Summary

Who: The NVIDIA Research team led by Peter Belcak, with co-authors from NVIDIA Research and Georgia Institute of Technology, published a comprehensive analysis challenging current AI infrastructure strategies.

What: The research demonstrates that small language models with fewer than 10 billion parameters achieve equivalent performance to large language models for 60-80% of enterprise AI agent tasks while requiring 10-30 times lower operational costs.

When: The paper was published June 2, 2025, addressing the $57 billion infrastructure investment made in 2024 for large language model deployment supporting a $5.6 billion API serving market.

Where: The findings apply to enterprise agentic AI deployments globally, with implications for marketing automation, customer service, and workflow optimization across industries investing in AI agent technologies.

Why: The industry faces significant cost pressures from the ten-fold disparity between infrastructure investment and market revenue, while technical evidence shows specialized small models provide superior cost-effectiveness for the repetitive, well-defined tasks comprising the majority of AI agent applications.

Key Terms Explained

Small Language Models (SLMs)

Small Language Models represent AI systems designed to run efficiently on consumer-grade hardware while maintaining practical performance for specialized tasks. NVIDIA defines SLMs as models “that can fit onto a common consumer electronic device and perform inference with latency sufficiently low to be practical when serving the agentic requests of one user.” These models typically contain fewer than 10 billion parameters, contrasting sharply with large language models that may exceed 70 billion parameters. The practical advantages include reduced memory requirements, lower energy consumption, and faster inference speeds, making them suitable for edge deployment and real-time applications.

Agentic AI

Agentic AI encompasses artificial intelligence systems capable of autonomous planning, decision-making, and task execution without constant human supervision. According to the research, “The rise of agentic AI systems is, however, ushering in a mass of applications in which language models perform a small number of specialized tasks repetitively and with little variation.” These systems differ from traditional AI tools by operating as digital coworkers that can manage entire workflows, adapt strategies based on real-time data, and coordinate multiple specialized functions. Marketing applications include campaign optimization, audience targeting, and performance analysis automation.

Large Language Models (LLMs)

Large Language Models are AI systems with billions of parameters designed for general-purpose language understanding and generation tasks. The paper notes that “Large language models (LLMs) are often praised for exhibiting near-human performance on a wide range of tasks and valued for their ability to hold a general conversation.” These models require substantial computational resources and typically operate through centralized cloud infrastructure. While powerful for diverse applications, LLMs may be inefficient when deployed for narrow, repetitive tasks that comprise the majority of enterprise AI agent operations.

Infrastructure Investment

Infrastructure investment refers to the substantial capital allocation toward computing resources, data centers, and cloud services supporting AI model deployment. The research highlights that “the investment into the hosting cloud infrastructure surged to USD 57bn in the same year” while “the market for the LLM API serving that underlies agentic applications was estimated at USD 5.6bn in 2024.” This ten-fold disparity between infrastructure costs and market revenue creates economic pressure for more efficient deployment strategies. The massive investment reflects industry confidence in current operational models but may not align with actual usage patterns.

Performance Benchmarks

Performance benchmarks provide standardized measurements for evaluating AI model capabilities across different tasks and applications. The study demonstrates that “Phi-2 (2.7bn) achieves commonsense reasoning scores and code generation scores on par with 30bn models while running ∼15× faster” and “DeepSeek-R1-Distill-Qwen-7B model outperforms large proprietary models such as Claude-3.5-Sonnet-1022 and GPT-4o-0513.” These metrics challenge traditional assumptions about the relationship between model size and capability, suggesting that specialized training and architecture design can achieve superior results with fewer parameters.

Parameter Count

Parameter count represents the number of learnable weights within an AI model, traditionally considered an indicator of model capability and complexity. The research argues that “with modern training, prompting, and agentic augmentation techniques, capability — not the parameter count — is the binding constraint.” While larger parameter counts historically correlated with better performance, recent advances in training methodologies and model architecture enable smaller models to achieve comparable results. This shift challenges the industry’s focus on scaling model size rather than optimizing efficiency.

Inference Costs

Inference costs encompass the computational expenses associated with running AI models for real-time applications and user requests. According to the analysis, “Serving a 7bn SLM is 10–30× cheaper (in latency, energy consumption, and FLOPs) than a 70–175bn LLM, enabling real-time agentic responses at scale.” These cost advantages become particularly significant for enterprise deployments requiring frequent model invocations. Lower inference costs enable broader adoption of AI technologies while improving profit margins for companies implementing automated systems.

Fine-tuning

Fine-tuning involves adapting pre-trained AI models for specific tasks or domains through additional training on specialized datasets. The research notes that “Parameter-efficient (e.g., LoRA and DoRA) and full-parameter finetuning for SLMs require only a few GPU-hours, allowing behaviors to be added, fixed, or specialized overnight rather than over weeks.” This agility enables rapid customization and iteration compared to large models requiring extensive computational resources. Fine-tuning flexibility allows organizations to create specialized models tailored to their specific operational requirements.
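To give a sense of why parameter-efficient methods are cheap, the short calculation below counts the trainable parameters LoRA adds for a single weight matrix. LoRA freezes the original matrix and learns a low-rank update, so the trainable count is rank × (rows + columns) instead of rows × columns. The layer size and rank used here are assumptions picked for illustration and are not taken from the paper.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds for one weight matrix W of shape (d_out, d_in).

    LoRA freezes W and learns a low-rank update B @ A, where B has shape
    (d_out, rank) and A has shape (rank, d_in).
    """
    return rank * (d_out + d_in)


d_out = d_in = 4096  # assumed layer size, for illustration only
rank = 8             # assumed LoRA rank
full = d_out * d_in
lora = lora_trainable_params(d_out, d_in, rank)
print(f"full: {full:,}  lora: {lora:,}  share: {lora / full:.2%}")
# full: 16,777,216  lora: 65,536  share: 0.39%
```

With these assumed values, the adapter trains well under one percent of the parameters in that layer, which is consistent with the short turnaround times the paper describes.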

Marketing Automation

Marketing automation encompasses the use of AI technologies to manage and optimize advertising campaigns, audience targeting, and performance analysis without constant human oversight. Industry data shows significant investment growth, with agentic AI attracting $1.1 billion in equity investment during 2024. Modern marketing automation systems enable campaign creation, audience definition, performance forecasting, and creative brief generation through interconnected AI agents. The shift toward specialized models supports cost-effective automation while maintaining marketing effectiveness across digital channels.

Edge Deployment

Edge deployment involves running AI models on local devices or consumer-grade hardware rather than relying on centralized cloud infrastructure. The study highlights that “advances in on-device inference systems such as ChatRTX demonstrate local execution of SLMs on consumer-grade GPUs, showcasing real-time, offline agentic inference with lower latency and stronger data control.” This approach offers advantages including reduced latency, enhanced privacy protection, and decreased dependence on internet connectivity. Edge deployment becomes particularly valuable for applications requiring immediate responses or handling sensitive data that cannot be transmitted to external servers.
