Analysis If you thought AI networks weren't complicated enough, the rise of rack-scale architectures from the likes of Nvidia, AMD, and soon Intel has introduced a new layer of complexity.

Compared to scale-out networks, which typically use Ethernet or InfiniBand, the scale-up fabrics at the heart of these systems often employ proprietary, or at the very least emerging, interconnect technologies that offer roughly an order of magnitude more bandwidth per accelerator.

For instance, Nvidia's fifth-gen NVLink interconnect delivers between 9x and 18x higher aggregate bandwidth to each accelerator than Ethernet or InfiniBand today.

This bandwidth means that GPU compute and memory can be pooled, even though they're physically distributed across multiple distinct servers. Nvidia CEO Jensen Huang wasn't kidding when he called the GB200 NVL72 "one giant GPU."

The transition to these rack-scale architectures is being driven in no small part by the demands of model builders like OpenAI and Meta. The systems are primarily aimed at hyperscale cloud providers, neo-cloud operators like CoreWeave or Lambda, and large enterprises that need to keep their AI workloads on-prem.

Given this target market, these machines aren't cheap. Our sibling site The Next Platform estimates the cost of a single NVL72 rack at $3.5 million.

To be clear, the scale-up fabrics that make these rack-scale architectures possible aren't exactly new. It's just that until now, they've rarely extended beyond a single node and usually topped out at eight GPUs. For instance, here's a look at the scale-up fabric found inside AMD's newly announced MI350-series systems.

AMD's MI350-series systems stick with a fairly standard configuration: eight GPUs coupled to an equal number of 400Gbps NICs and a pair of x86 CPUs

As you can see, each chip connects to the other seven in an all-to-all topology.

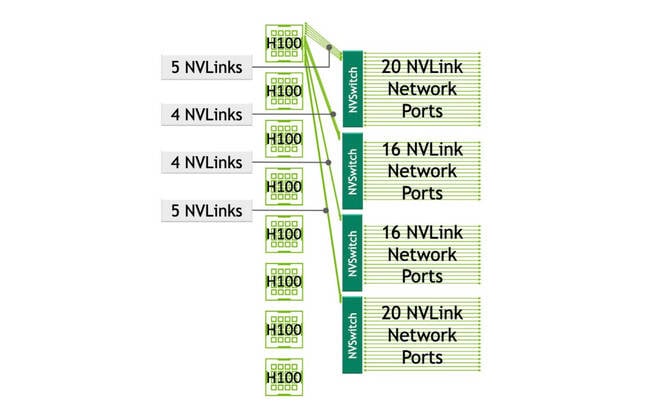

Nvidia's HGX design follows the same basic template for its four-GPU H100 systems, but adds four NVLink switches to the mix for its more ubiquitous eight-GPU nodes. While Nvidia says these switches have the benefit of cutting down on communication time, they also add complexity.

Rather than an all-to-all mesh, like we see in AMD's eight-GPU nodes, Nvidia's HGX architecture has employed NVLink switches to mesh its GPUs together going back to the Volta generation

With the move to rack scale, this same basic topology is simply scaled up, at least for Nvidia's NVL systems. For AMD, an all-to-all mesh simply isn't enough, and switches become unavoidable.

Diving into Nvidia's NVL72 scale-up architecture

We'll dig into the House of Zen's upcoming Helios racks in a bit, but first let's take a look at Nvidia's NVL72. Since it's been on the market a little while longer, we know a fair bit more about it.

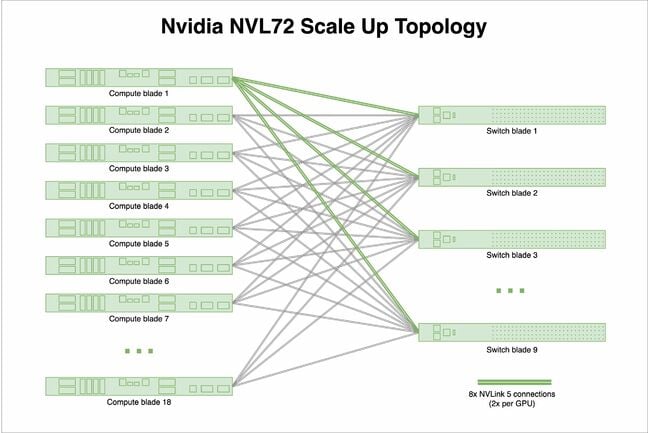

As a quick reminder, the rack-scale system features 72 Blackwell GPUs spread across 18 compute nodes. All of these GPUs are connected via 18 7.2TB/s NVLink 5 switch chips deployed in pairs across nine blades.

From what we understand, each switch ASIC features 72 ports with 800Gbps or 100GB/s of bidirectional bandwidth each. Nvidia's Blackwell GPUs, meanwhile, boast 1.8TB/s of aggregate bandwidth spread across 18 ports, one for each switch in the rack. The result is a topology that looks a bit like this one:
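To see how those figures fit together, here is a minimal Python sketch of the port arithmetic. The constant and function names are ours, chosen for illustration, and the numbers are simply the ones quoted above rather than anything taken from an Nvidia tool or API.

```python
# Figures quoted in the text for Nvidia's GB200 NVL72 scale-up fabric.
GPUS = 72
SWITCH_ASICS = 18
PORTS_PER_SWITCH = 72
PORTS_PER_GPU = 18
PORT_GB_S = 100  # one 800Gbps NVLink 5 port, counted as 100GB/s


def switch_bandwidth_tb_s() -> float:
    """Aggregate bandwidth of a single switch ASIC, in TB/s."""
    return PORTS_PER_SWITCH * PORT_GB_S / 1000


def gpu_bandwidth_tb_s() -> float:
    """Aggregate NVLink bandwidth of a single GPU, in TB/s."""
    return PORTS_PER_GPU * PORT_GB_S / 1000


def ports_balance() -> bool:
    """True if the GPU-side ports exactly fill the switch-side ports."""
    return GPUS * PORTS_PER_GPU == SWITCH_ASICS * PORTS_PER_SWITCH


if __name__ == "__main__":
    print(f"per switch ASIC: {switch_bandwidth_tb_s()} TB/s")
    print(f"per GPU:         {gpu_bandwidth_tb_s()} TB/s")
    print(f"ports balance:   {ports_balance()}")
```

The balance check is the interesting part: 72 GPUs with 18 ports each need 1,296 switch ports, which is exactly what 18 ASICs with 72 ports apiece provide, so every GPU can land one port on every switch.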

Each GPU in the rack connects to two NVLink ports in each of the rack's nine NVLink 5 switch blades.

This high-speed all-to-all interconnect fabric means that any GPU in the rack can access another's memory.

Why scale-up?

According to Nvidia, these massive compute domains allow the GPUs to run far more efficiently. For AI training workloads, the GPU giant estimates its GB200 NVL72 systems are up to 4x faster than the equivalent number of H100s, even though the component chips only offer 2.5x higher performance at the same precision.

Meanwhile, for inference, Nvidia says its rack-scale configuration is up to 30x faster, in part because various degrees of data, pipeline, tensor, and expert parallelism can be employed to take advantage of all that memory bandwidth, even if the model doesn't necessarily benefit from all the memory capacity or compute.

With that said, with between 13.5TB and 20TB of VRAM in Nvidia's Grace-Blackwell-based racks, and roughly 30TB on AMD's upcoming Helios racks, these systems are clearly designed to serve extremely large models like Meta's (apparently delayed) two-trillion-parameter Llama 4 Behemoth, which will require 4TB of memory to run at BF16.

Not only are the models getting larger, but the context windows, which you can think of as the LLMs' short-term memory, are too. Meta's Llama 4 Scout, for example, isn't particularly big at 109 billion parameters, requiring just 218GB of GPU memory to run at BF16. Its 10-million-token context window, however, will require several times that, especially at higher batch sizes. (We discuss the memory requirements of LLMs in our guide to running LLMs in production.)
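The memory figures above follow from simple arithmetic, sketched here in Python. The weight calculation uses only numbers from the text (BF16 stores two bytes per parameter). The key-value cache function is the standard back-of-the-envelope formula for a transformer, but the layer, head, and dimension values in the example are hypothetical placeholders, not Llama 4 Scout's published configuration, and the formula ignores any attention tricks a real model may use to shrink the cache.

```python
def weight_memory_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """GB needed for the weights alone; BF16 uses 2 bytes per parameter."""
    return params_billions * bytes_per_param


def kv_cache_gb(tokens: int, layers: int, kv_heads: int, head_dim: int,
                batch: int = 1, bytes_per_value: int = 2) -> float:
    """Rough key-value cache size in GB for a given context length."""
    # Factor of 2: one key vector and one value vector per token, per layer.
    total_bytes = (2 * layers * kv_heads * head_dim
                   * bytes_per_value * tokens * batch)
    return total_bytes / 1e9


if __name__ == "__main__":
    print(weight_memory_gb(109))    # Llama 4 Scout weights, in GB
    print(weight_memory_gb(2000))   # Llama 4 Behemoth weights, in GB

    # Hypothetical model shape, for illustration only.
    HYPOTHETICAL_SHAPE = dict(layers=48, kv_heads=8, head_dim=128)
    print(kv_cache_gb(tokens=10_000_000, **HYPOTHETICAL_SHAPE))
```

Because the cache grows linearly with both context length and batch size, doubling either one doubles the memory needed on top of the weights.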

Speculating on AMD's first scale-up system Helios

That's no doubt why AMD has also embraced the rack-scale architecture with its MI400-series accelerators.

At its Advancing AI event earlier this month, AMD revealed its Helios reference design. In a nutshell, the system, much like Nvidia's NVL72, will feature 72 MI400-series accelerators, 18 EPYC Venice CPUs, and AMD's Pensando Vulcano NICs when it arrives next year.

Details on the system remain thin, but we do know its scale-up fabric will offer 260TB/s of aggregate bandwidth, and will tunnel the emerging UALink over Ethernet.

If you're not familiar, the emerging Ultra Accelerator Link standard is an open alternative to NVLink for scale-up networks. The Ultra Accelerator Link Consortium published its first specification in April.

At roughly 3.6TB/s of bidirectional bandwidth per GPU, that'll put Helios on par with Nvidia's first-generation Vera-Rubin rack systems, also due out next year. How AMD intends to do that, we can only speculate, so we did.

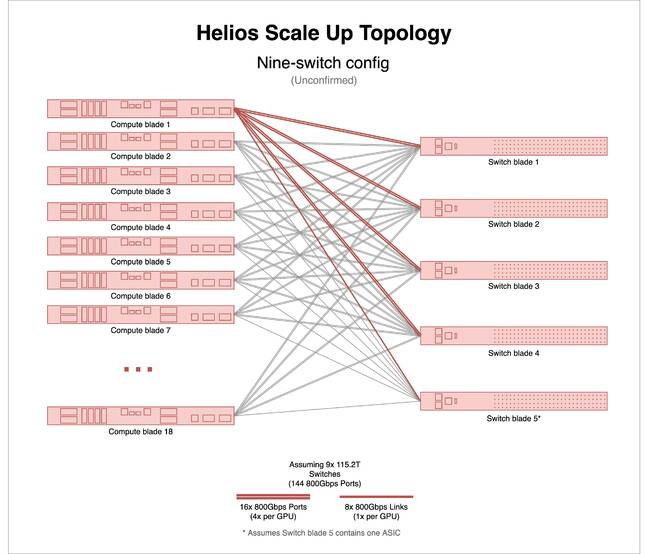

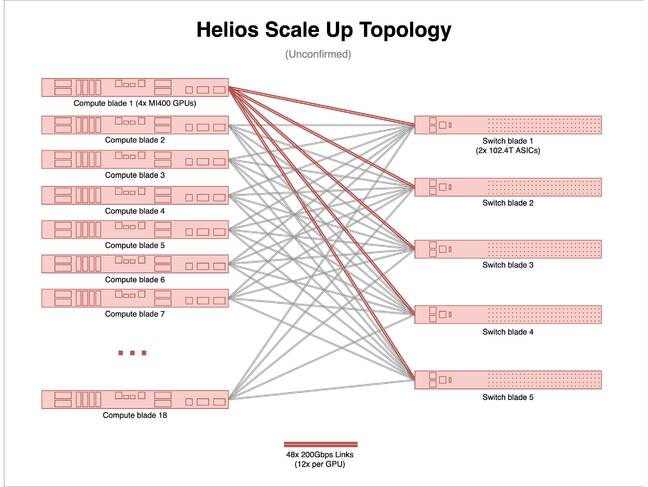

Based on what we saw from AMD's keynote, the system rack appears to feature five switch blades, with what looks to be two ASICs apiece. With 72 GPUs per rack, this configuration strikes us as a bit odd.

The simplest explanation is that, despite there being five switch blades, there are actually only nine switch ASICs in there. For this to work, each switch chip would need 144 800Gbps ports. This is a tad unusual for Ethernet, but not far off from what Nvidia did with its NVLink 5 switches, albeit using twice as many ASICs at half the bandwidth.

The result would be a topology that looks quite similar to Nvidia's NVL72.

The simplest way for AMD to connect 72 GPUs together would be to use nine 144-port 800Gbps switches.

The tricky bit is that, at least to our knowledge, no switch ASIC capable of delivering that level of bandwidth exists today. Broadcom's Tomahawk 6, which we looked at in depth a few weeks back, comes the closest with up to 128 800Gbps ports and 102.4Tbps of aggregate bandwidth.

For the record, we don't know that AMD is using Broadcom for Helios; it just happens to be one of the few publicly disclosed 102.4Tbps switches not from Nvidia.

But even with 10 of those chips crammed into Helios, you'd still need another 16 ports of 800Gbps Ethernet to reach the 260TB/s of bandwidth AMD is claiming. So what gives?
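The 16-port shortfall can be reproduced with a short Python sketch. One assumption is ours and is labeled in the code: an 800Gbps Ethernet port is counted as 200GB/s of bidirectional bandwidth (100GB/s each way), which is the convention under which the article's 144-port and 16-port figures work out. The names are illustrative only.

```python
import math

GPUS = 72
PER_GPU_GB_S = 3600          # 3.6TB/s bidirectional per GPU, per AMD
# Assumption: an 800Gbps port carries 100GB/s each way, 200GB/s bidirectional.
PORT_BIDIR_GB_S = 2 * 800 // 8
TOMAHAWK6_PORTS = 128        # 800Gbps ports per 102.4Tbps ASIC


def ports_per_gpu() -> int:
    """800Gbps ports each GPU needs to reach its bidirectional bandwidth."""
    return PER_GPU_GB_S // PORT_BIDIR_GB_S


def total_ports() -> int:
    """800Gbps switch ports needed across the whole rack."""
    return GPUS * ports_per_gpu()


def ports_per_switch(switches: int) -> int:
    """Ports each ASIC must offer if the load is spread evenly."""
    return math.ceil(total_ports() / switches)


def port_shortfall(switches: int, ports_per_asic: int) -> int:
    """Ports still missing with a given number of ASICs of a given size."""
    return max(0, total_ports() - switches * ports_per_asic)


if __name__ == "__main__":
    print(GPUS * PER_GPU_GB_S / 1000)           # aggregate TB/s, close to 260
    print(ports_per_switch(9))                  # ports per ASIC with nine ASICs
    print(port_shortfall(10, TOMAHAWK6_PORTS))  # gap with ten 128-port ASICs
```

Under that assumption the rack needs 1,296 ports in total, the same count as the NVL72, which is why nine 144-port chips would fit neatly and ten 128-port chips fall just short.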

Our best guess is that Helios is using a different topology from Nvidia's NVL72. In Nvidia's rack-scale architecture, the GPUs connect to one another over the NVLink switches.

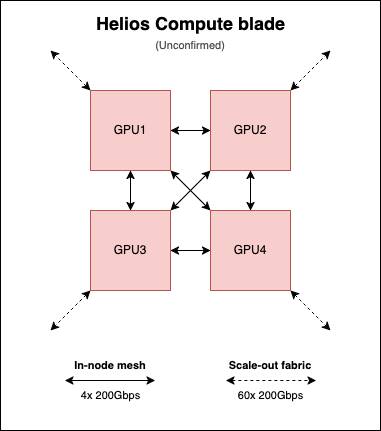

However, it looks like AMD's Helios compute blades will retain the chip-to-chip mesh from the MI300-series, albeit with three mesh links connecting each GPU to the other three.

Assuming that AMD's MI400-series GPUs retain their chip-to-chip mesh within the node, a ten-switch scale-up fabric starts to make more sense.

This is all speculation, of course, but the numbers do line up quite nicely.

By our estimate, each GPU is dedicating 600GB/s (12x 200Gbps links) of bidirectional bandwidth to the in-node mesh and about 3TB/s of bandwidth (60x 200Gbps links) to the scale-up network. That works out to about 600GB/s per switch blade.
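Here is a small Python sketch of that link budget. It uses only the link counts from our estimate above, plus the ten-ASIC, five-blade layout we inferred from the keynote. The final function, which checks how many 800Gbps-equivalent ports each switch ASIC would need, is our own extension of the arithmetic and not something AMD has confirmed.

```python
LINK_GBPS = 200       # speed of one link, per direction
MESH_LINKS = 12       # per GPU, to the other three GPUs on the blade
SCALE_UP_LINKS = 60   # per GPU, to the switch blades
SWITCH_BLADES = 5
SWITCH_ASICS = 10     # two per blade, as the keynote hardware appeared to show
GPUS = 72


def bidir_gb_s(links: int) -> int:
    """Bidirectional bandwidth in GB/s for a bundle of 200Gbps links."""
    return links * LINK_GBPS * 2 // 8


def scale_up_per_blade_gb_s() -> int:
    """Each GPU's scale-up bandwidth divided evenly across the switch blades."""
    return bidir_gb_s(SCALE_UP_LINKS) // SWITCH_BLADES


def ports_800g_per_asic() -> int:
    """800Gbps-equivalent ports each ASIC needs to terminate every GPU link."""
    return GPUS * SCALE_UP_LINKS * LINK_GBPS // 800 // SWITCH_ASICS


if __name__ == "__main__":
    print(bidir_gb_s(MESH_LINKS))       # in-node mesh, GB/s per GPU
    print(bidir_gb_s(SCALE_UP_LINKS))   # scale-up fabric, GB/s per GPU
    print(scale_up_per_blade_gb_s())    # GB/s per GPU, per switch blade
    print(ports_800g_per_asic())        # compare against 128 on a 102.4Tbps ASIC
```

The mesh and scale-up shares add up to the 3.6TB/s per GPU that AMD quotes, and moving 600GB/s per GPU onto the mesh brings the per-ASIC port count under the 128 ports a 102.4Tbps switch can offer.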

With the four GPUs in each compute blade meshed together, the scale-up topology would look like this.

If you're thinking that's a lotta ports, we expect they'll get aggregated into around 60 800Gbps ports or possibly even 30 1.6Tbps ports per compute blade. This is somewhat similar to what Intel did with its Gaudi3 systems. From what we understand, the actual cabling will be integrated into a blind-mate backplane much like Nvidia's NVL72 systems. So, if you were sweating the idea of having to network the rack by hand, you can rest easy.

We can see a few benefits to this approach. If we're right, each Helios compute blade can function independently of the others. Nvidia, meanwhile, has a separate SKU called the GB200 NVL4 aimed at HPC applications, which meshes the four Blackwell GPUs together, similar to the above diagram, but doesn't support using NVLink for scale-up.

But again, there's no guarantee this is what AMD is doing; it's just our best guess.

Scaling up doesn't mean you stop scaling out

You might think the larger compute domains enabled by AMD and Nvidia's rack-scale architectures would mean that Ethernet, InfiniBand, or OmniPath (yes, they're back!) would take a back seat.

In reality, these scale-up networks can't scale much beyond a rack. The copper flyover cables used in systems like Nvidia's NVL72 and presumably AMD's Helios just can't reach that far.

As we've previously explored, silicon photonics has the potential to change that, but the technology faces its own hurdles with regard to integration. We don't imagine Nvidia is charting a course toward 600kW racks because it wants to, but rather because it anticipates the photonics tech necessary for these scale-up networks to escape the rack won't be ready in time.

So if you need more than 72 GPUs (and if you're doing any kind of training, you definitely do) you still need a scale-out fabric. In fact, you need two: one for coordinating compute on the back-end and one for data ingest on the front-end.

Rack-scale doesn't appear to have reduced the amount of scale-out bandwidth required either. For its NVL72 at least, Nvidia has stuck with a 1:1 ratio of NICs to GPUs this generation. Usually there are another two NIC or data processing unit (DPU) ports per blade for the conventional front-end network to move data in and out of storage and so on.

This makes sense for training, but may not be strictly necessary for inference if your workload can fit within a single 72-GPU compute and memory domain. Spoiler alert: unless you're running some massive proprietary model, the details of which aren't known, you probably can.

The good news is we're about to see some seriously high-radix switches hit the market over the next six to 12 months.

We've already mentioned Broadcom's Tomahawk 6, which will support anywhere from 64 1.6Tbps ports to 1,024 100Gbps ports. But there's also Nvidia's Spectrum-X SN6810 due out next year, which will offer up to 128 800Gbps ports and will use silicon photonics to do it. Nvidia's SN6800, meanwhile, will feature 512 MPO ports good for 800Gbps apiece.

These switches dramatically reduce the number of switches required for large-scale AI deployments. To connect up a cluster of 128,000 GPUs at 400Gbps, you'd need about 10,000 Quantum-2 InfiniBand switches. Opting for 51.2Tbps Ethernet switches effectively cuts that in half.

With the move to 102.4Tbps switching, the number shrinks to 2,500, and if you can live with 200Gbps ports, you'd need just 750, as the radix is sufficiently large that you can get away with a two-tiered network versus the three-tiered fat-tree topologies we often see in large-scale AI training clusters. ®
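The switch counts above can be approximated with a common rule of thumb for non-blocking fat trees: a network with L tiers of radix-k switches needs about (2L - 1) x N / k switches for N endpoints. The Python sketch below applies it. The formula is our reconstruction rather than a published method, the function names are hypothetical, and we assume a Quantum-2 switch offers 64 400Gbps ports (25.6Tbps). A real design would also have to check per-tier capacity, oversubscription, and rail layout.

```python
def radix(switch_gbps: int, port_gbps: int) -> int:
    """Number of ports a switch offers at a given port speed."""
    return switch_gbps // port_gbps


def switches_needed(endpoints: int, switch_gbps: int,
                    port_gbps: int, tiers: int) -> int:
    """Rule-of-thumb switch count for a non-blocking fat tree."""
    k = radix(switch_gbps, port_gbps)
    return (2 * tiers - 1) * endpoints // k


def max_endpoints(k: int, tiers: int) -> int:
    """Most endpoints a non-blocking fat tree of radix-k switches can host."""
    return 2 * (k // 2) ** tiers


if __name__ == "__main__":
    gpus = 128_000
    print(switches_needed(gpus, 25_600, 400, tiers=3))    # Quantum-2 (assumed 64 ports)
    print(switches_needed(gpus, 51_200, 400, tiers=3))    # 51.2Tbps Ethernet
    print(switches_needed(gpus, 102_400, 400, tiers=3))   # 102.4Tbps Ethernet
    print(switches_needed(gpus, 102_400, 200, tiers=2))   # 200Gbps ports, two tiers
    print(max_endpoints(radix(102_400, 200), tiers=2))    # two-tier capacity
```

The last line shows why the two-tier option only opens up at 200Gbps: with 512 ports per switch, two tiers can host just over 131,000 endpoints, enough for the 128,000-GPU cluster in the example.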
