This month’s search roundup dives into the biggest developments shaping the SEO landscape. From a recent surge in ranking volatility throughout April and new experiments in AI-powered search, to practical updates for ecommerce and local SEO, we’ll discuss it all below.

Throughout April, several instances of ranking volatility were noted. SEO monitoring tools all recorded large movements on the 22nd, 23rd and 25th of April, which has prompted many to question whether a new Google algorithm update is on the horizon.

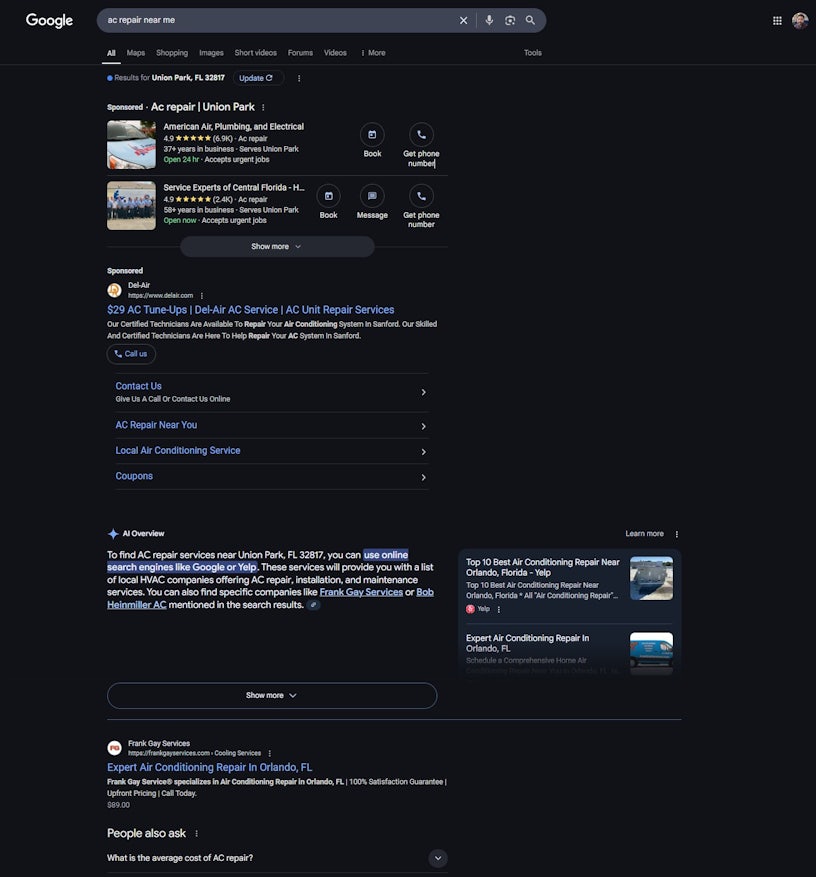

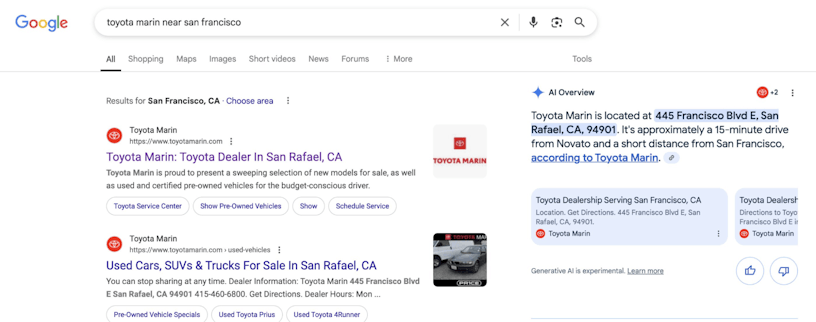

In other news, Google has been testing the integration of AI Overviews into local search results, experimenting with new formats that could replace or reposition the traditional local pack and knowledge panel.

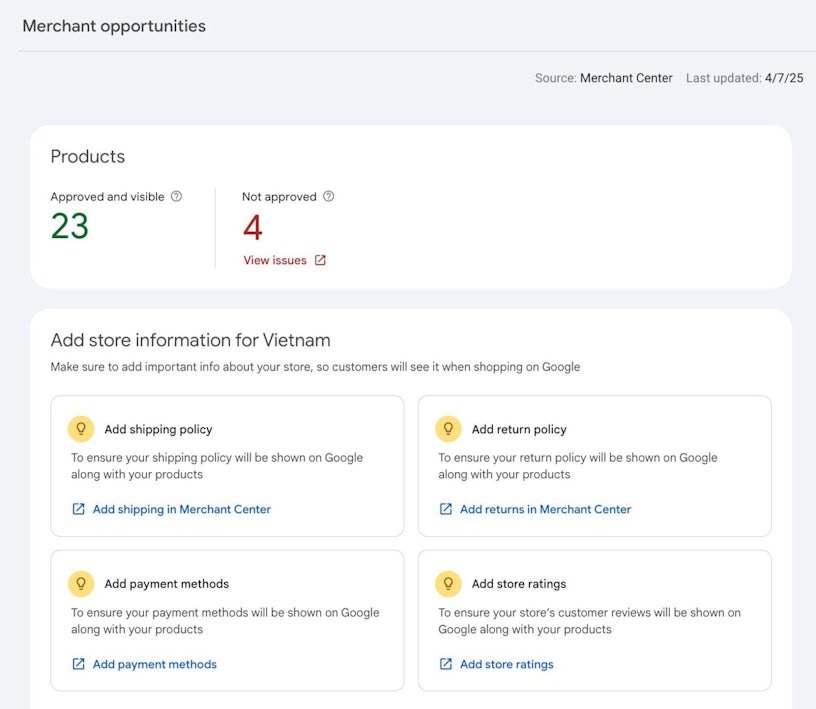

Google has also rolled out a new Merchant Opportunities report in Search Console. Merchants connected via Google Merchant Center will now see practical suggestions to improve product listings, such as adding shipping information, store ratings, or payment options.

Finally, through interviews and comments from Google representatives, we’ve also gained more insight into the newly emerging markdown-based llms.txt files and Google’s stance on server-side vs. client-side rendering. We’ll explore these updates in more detail in the article below.

Allow our traffic light system to guide you to the articles that need your attention. Look out for Red light updates, as they’re major changes that will need you to take action, whereas Amber updates may make you think and are definitely worth knowing, but aren’t urgent. And finally, Green light updates are great for your SEO and website knowledge, but are less critical than others.

Want to know more about any of these changes and what they mean for your SEO? Get in touch or visit our SEO agency page to find out how we can help.

On April 25, 2025, a significant spike in Google Search ranking volatility was observed, marking the second occurrence within the same week. This followed earlier fluctuations on April 22 and 23 and previous disturbances on April 16 and April 9. Despite these repeated shifts, Google has not officially confirmed any updates beyond the March 2025 core update.

Several SEO monitoring tools, including Semrush, Mozcast, SimilarWeb, and Sistrix, reported notable surges in volatility on April 25. These spikes suggest substantial changes in search rankings across a wide range of websites.

The SEO community has echoed these findings, with webmasters reporting significant declines in traffic. Some noted drops of up to 70%, particularly affecting Google Discover traffic. Others saw their websites disappearing from search results or experiencing erratic visibility. These disruptions have led to widespread frustration, with some users expressing concerns over the growing dominance of ads and a perceived decline in search result quality.

While the exact cause of this volatility remains unclear, the frequency and intensity of these fluctuations suggest ongoing, unannounced adjustments to Google’s search algorithms.

In early May 2025, Google began testing the integration of AI Overviews into local search results, potentially altering the traditional presentation of local business information. One experiment involves replacing the familiar “local pack”, which typically displays a map and three business listings for “near me” queries, with AI-generated summaries that incorporate local details.

Another test positions AI Overviews on the right-hand side of the search results page, a space traditionally reserved for the local knowledge panel. This layout change indicates that Google is exploring new formats for presenting AI-generated content alongside standard search results.

These developments are part of Google’s broader strategy to integrate AI Overviews into its search platform. Initially launched in the U.S. in May 2024 and since expanded to over 100 countries, including the U.K., AI Overviews provide concise, AI-generated summaries in response to user queries. While designed to enhance the user experience by delivering quick information, the feature has faced criticism for potential inaccuracies and for its impact on website traffic, as users may rely on the summaries instead of visiting source sites.

Google has announced the new Merchant Opportunities report within Google Search Console, which can give you recommendations for improving how your online store appears on Google.

If you’ve created and connected your Merchant Center account, you’ll see suggested opportunities under Merchant opportunities in Search Console, such as adding shipping information, store ratings, or payment options.

You can return to the report to see whether your information is pending, approved, or flagged for issues that need fixing.

This report helps you review which opportunities you’re missing in your ecommerce site setup.

As Google continues to evolve its Search interface, tracking organic traffic from newer search features, like AI Overviews (AIO), Featured Snippets, and People Also Ask (PAA), has become increasingly important for SEO professionals. These features often send users directly to a specific section of a web page using URL fragments, such as #:~:text=, which highlight the relevant text. However, traditional analytics tools, including Google Analytics 4 (GA4), don’t automatically register or segment this kind of traffic.

To capture this data, businesses need to implement custom JavaScript variables in Google Tag Manager to extract the highlighted text from the URL, then send this information to GA4 using custom dimensions. This setup allows you to analyse user interactions originating from these SERP features, providing insight into how users engage with highlighted content on your site. However, not all clicks from these features include identifiable URL fragments, so tracking may not capture every instance.

KP Playbook offers a practical guide to bridging this gap using Google Tag Manager (GTM) and GA4 custom dimensions.
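To illustrate what “extracting the highlighted text” involves, here is a minimal sketch of the parsing step, written in Python purely for readability. In GTM itself, this logic would live in a Custom JavaScript variable. The sketch assumes you already have the full landing URL, including the text directive. Browsers generally strip `:~:text=` from the page URL before scripts run, and how a GTM variable recovers the unstripped URL is outside the scope of this sketch. The function name is hypothetical.

```python
from urllib.parse import unquote


def extract_text_fragments(url: str) -> list[str]:
    """Return the text highlighted by any #:~:text= directives in a URL."""
    _, hash_sign, fragment = url.partition("#")
    if not hash_sign:
        return []  # no fragment at all
    _, marker, directives = fragment.partition(":~:")
    if not marker:
        return []  # an ordinary #anchor with no fragment directive
    snippets = []
    for directive in directives.split("&"):
        if not directive.startswith("text="):
            continue  # ignore any other fragment directives
        # Syntax: text=[prefix-,]textStart[,textEnd][,-suffix]
        # Literal commas and dashes inside the text are percent-encoded,
        # so splitting on raw commas before decoding is safe.
        parts = directive[len("text="):].split(",")
        # Drop the optional context parts: prefix ends with "-", suffix starts with "-"
        core = [p for p in parts if not (p.endswith("-") or p.startswith("-"))]
        if core:
            snippets.append(" ... ".join(unquote(p) for p in core))
    return snippets


print(extract_text_fragments("https://example.com/guide#:~:text=structured%20data"))
```

The value returned here is what you would pass to GA4 as a custom dimension. Keeping only `textStart` and `textEnd` (and discarding the prefix and suffix context) keeps the dimension readable in reports.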

How to rank product pages in Google’s new product SERPs

In a recent article published on Sitebulb, Angie Perperidou shared seven ways to optimise for product SERPs in 2025. Her advice responds to the considerable changes we’ve seen in Google’s search results, which are shifting away from traditional listings of category pages towards more detailed product information shown directly in the results.

What’s changed?

Features like AI Overviews, image grids, and product carousels now give users access to images, prices, and reviews without needing to visit a website. As a result, product pages aren’t just competing with other ecommerce stores; they’re now competing with Google’s own interface. If a page lacks structured data, unique content, or visual appeal, it might not show up in search at all.

What ecommerce businesses can do to adapt

In the guide, Angie stresses that ecommerce businesses need to rethink how they optimise their product pages. She offered the following advice:

- Implement product-related schema markup (like Product, Offer, and Review) so Google can properly index and display your content in rich results.

- Target long-tail search queries, which are more specific and less competitive, to drive niche traffic and improve visibility.

- Avoid regurgitating manufacturer descriptions; instead, create unique copy that answers customer needs and differentiates your offering.

- Add FAQs marked up with the appropriate schema directly on product pages. Tools like AlsoAsked, Reddit, Quora, and Google Search Console can help you discover what users are asking.

- Optimise product images and videos with descriptive filenames and alt text to help pages appear in image or video carousels, while compressing files to improve page load speed (especially important for mobile users).

- Encourage and display customer reviews to build credibility and increase your chances of earning rich results, which can help improve click-through rates.

- Pay attention to mobile optimisation so that pages load quickly and function well across all devices.
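To make the schema and review advice above more concrete, here is a minimal sketch that builds schema.org JSON-LD for a product page: a `Product` with an `Offer`, individual `Review`s and an `AggregateRating` derived from them, plus a separate `FAQPage` object. The function names, product details and example.com URLs are hypothetical. The property names follow schema.org vocabulary, but check Google’s structured data documentation for which fields are required for the rich result you are targeting. Also note that Google has shown FAQ rich results for only a narrow set of sites since 2023, so treat FAQ markup as added context rather than a guaranteed rich result.

```python
import json


def product_json_ld(name, description, sku, brand, price, currency, image_urls, reviews):
    """Build a schema.org Product with Offer, Review and AggregateRating data."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,  # unique copy, not the manufacturer's text
        "sku": sku,
        "image": image_urls,  # descriptive filenames, e.g. trail-shoe-blue.jpg
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
    }
    if reviews:
        ratings = [r["rating"] for r in reviews]
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(reviews),
        }
        data["review"] = [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": r["author"]},
                "reviewRating": {"@type": "Rating", "ratingValue": r["rating"]},
                "reviewBody": r["text"],
            }
            for r in reviews
        ]
    return data


def faq_json_ld(question_answer_pairs):
    """Build a schema.org FAQPage from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": q,
             "acceptedAnswer": {"@type": "Answer", "text": a}}
            for q, a in question_answer_pairs
        ],
    }


def as_script_tag(data):
    """Serialise to a JSON-LD script tag; escaping "</" stops it closing the tag early."""
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


reviews = [
    {"author": "Sam", "rating": 5, "text": "Great grip on wet trails."},
    {"author": "Alex", "rating": 4, "text": "Runs slightly small."},
]
product = product_json_ld(
    "Trail Shoe", "Lightweight trail running shoe.", "TS-001", "ExampleBrand",
    "89.99", "GBP", ["https://example.com/img/trail-shoe-blue.jpg"], reviews,
)
print(as_script_tag(product))
print(as_script_tag(faq_json_ld([("Is it waterproof?", "Yes, it has a waterproof membrane.")])))
```

Deriving `aggregateRating` from the same reviews shown on the page keeps the markup consistent with visible content, which matters because structured data should describe what users can actually see.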

If you have an ecommerce website and need help from an experienced SEO ecommerce agency, we can help. Just visit our ecommerce SEO services page for more information.

Server-Side vs. Client-Side Rendering: What Google recommends

In a recent interview with Kenichi Suzuki of Faber Company Inc., Martin Splitt, a Developer Advocate at Google, offered new insights into how Google handles JavaScript rendering, especially in light of AI tools like Gemini.

Splitt explained that both Googlebot and Gemini’s AI crawler use a shared Web Rendering Service (WRS) to process JavaScript content. This makes Google’s systems more efficient at rendering complex sites than other AI systems, which may struggle with JavaScript.

While some reports have suggested rendering delays can stretch to weeks, Splitt clarified that rendering is usually completed within minutes, with long waits being rare exceptions, likely due to size issues.

Should you use Server-Side or Client-Side Rendering?

Splitt addressed the common SEO debate between server-side rendering (SSR) and client-side rendering (CSR), noting that there’s no one-size-fits-all answer. The best approach depends on your website’s purpose. For traditional, content-focused sites, relying on JavaScript can be a drawback because it introduces technical risks, slows down performance, and drains mobile batteries. In such cases, Splitt recommends SSR or pre-rendering static HTML.

However, CSR remains useful for dynamic, highly interactive applications like design tools or video editing software. Rather than committing to one rendering method, he advises thinking of SSR and CSR as different tools, each appropriate for specific use cases.
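One way to see why SSR suits content-focused sites is to compare the raw HTML that each approach sends before any JavaScript runs. This raw HTML is what a crawler without a rendering service has to work with. The minimal sketch below uses Python’s standard `html.parser` to pull out the visible text from a server-rendered page and from a typical client-side “app shell”. Both pages, the `/app.js` path and the function names are hypothetical. The sketch does not model Google’s WRS, which does execute JavaScript.

```python
from html import escape
from html.parser import HTMLParser


def render_server_side(title, body):
    """SSR: the server sends HTML that already contains the content."""
    return (f"<html><head><title>{escape(title)}</title></head><body>"
            f"<main><h1>{escape(title)}</h1><p>{escape(body)}</p></main>"
            "</body></html>")


# CSR: the server sends an empty shell; /app.js would fill #root in the browser.
CLIENT_SIDE_SHELL = ('<html><head><title>Loading</title></head><body>'
                     '<div id="root"></div><script src="/app.js"></script>'
                     '</body></html>')


class VisibleTextExtractor(HTMLParser):
    """Collects body text, skipping <title>, <script> and <style> contents."""
    SKIP_TAGS = ("title", "script", "style")

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def visible_text_without_js(html):
    """Return the text a non-rendering crawler could read from raw HTML."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


print(repr(visible_text_without_js(render_server_side("Blue Widget", "Hand-made widget."))))
print(repr(visible_text_without_js(CLIENT_SIDE_SHELL)))
```

The server-rendered page exposes its content immediately, while the app shell exposes nothing until its script runs. This illustrates why pre-rendered HTML is the lower-risk choice when the content itself is the point of the page.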

Structured data is helpful, but not a ranking factor

The discussion also covered the growing importance of structured data in AI-driven search environments. Splitt confirmed that structured data plays a valuable role in helping Google understand website content with more confidence. This added context is especially helpful as AI becomes more embedded in search features. However, he emphasised that while structured data improves understanding, it doesn’t directly influence a page’s ranking in search results.

The focus should always be on users and producing quality content

Splitt concluded with a reminder that, despite all the technical options available, the fundamentals of SEO haven’t changed. He urged site owners and marketers to prioritise user experience, align their websites with clear business goals, and focus on delivering high-quality, helpful content. As AI continues to reshape the way search engines interpret and deliver information, staying grounded in user needs remains the most effective strategy.

In March’s Google algorithm blog, we introduced the new concept of llms.txt, which aims to help AI crawlers access a simplified version of a website’s content.

Often confused with robots.txt, llms.txt aims to highlight only the main content of a site, using markdown so that LLMs can process that content more efficiently.

However, llms.txt is still just a proposal, not a widely adopted standard, and it has yet to gain serious traction among AI services.
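For reference, the proposal describes a plain markdown file served at `/llms.txt`. It has an H1 with the site name, a blockquote summary, and H2 sections listing links with short notes. The minimal sketch below generates a file in that shape. The function name, site name and example.com URLs are hypothetical, and because the format is only a proposal, the exact conventions may change.

```python
def build_llms_txt(site_name, summary, sections):
    """Render markdown in the shape described by the llms.txt proposal.

    `sections` maps an H2 heading to a list of (title, url, note) tuples;
    `note` may be an empty string.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            entry = f"- [{title}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


print(build_llms_txt(
    "Example Shop",
    "Hand-made outdoor gear, shipped across the U.K.",
    {"Guides": [("Sizing guide", "https://example.com/sizing.md", "How to choose a size")]},
))
```

As the rest of this section explains, no major AI service has been confirmed to read this file, so generating one is an experiment rather than an optimisation.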

What’s Google’s take?

John Mueller from Google weighed in on a Reddit thread where someone asked whether anyone had seen signs of AI crawlers using llms.txt. Mueller responded that, as far as he knows, no major AI service is using or even looking for llms.txt files, comparing its usefulness to the now-obsolete keywords meta tag.

He went on to question the point of llms.txt when AI agents already crawl the actual site content, which provides a fuller, more trustworthy picture. In his view, asking bots to rely on a separate, simplified file is redundant and untrustworthy, especially since structured data already serves a similar, more credible purpose.

llms.txt sparks concern about cloaking and abuse

A major issue with llms.txt is the potential for abuse. Site owners could show one version of their content to LLMs through the markdown file and a completely different version to users or search engines. This is similar to cloaking, which violates search engine guidelines. It makes llms.txt a poor candidate for standardisation, especially at a time when AI systems and search engines rely on sophisticated content analysis rather than trusting publisher-declared intent.

llms.txt files have little to no impact at the moment

In the same Reddit discussion, someone managing over 20,000 domains noted that no major AI bots are accessing llms.txt. Even after implementing it, site owners saw no meaningful impact on traffic or bot activity.

This sentiment was echoed in a follow-up LinkedIn post by Simone De Palma, who originally started the discussion. He argued that llms.txt could even hurt the user experience, since content citations might send users to a plain markdown file instead of a rich, navigable webpage. Others agreed, pointing out that there are better ways to spend SEO and development time, such as improving structured data, robots.txt rules, and sitemaps.
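If you would rather check this on your own site than rely on anecdotal reports, one option is to count requests for `/llms.txt` in your server access logs. The minimal sketch below assumes the common Combined Log Format. It uses an illustrative, incomplete list of AI crawler user-agent substrings (`GPTBot`, `ClaudeBot`, `PerplexityBot`, `CCBot`). Both the log format and the bot list are assumptions to adapt to your own setup, and user-agent strings can be spoofed.

```python
import re
from collections import Counter

# Combined Log Format:
# ip ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Illustrative, non-exhaustive user-agent substrings (an assumption; extend as needed).
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "CCBot")


def count_llms_txt_hits(log_lines):
    """Count /llms.txt requests, grouped by AI bot token or 'other'."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that aren't in the expected format
        if match.group("path").split("?")[0] != "/llms.txt":
            continue
        agent = match.group("agent")
        bot = next((token for token in AI_BOT_TOKENS if token in agent), "other")
        hits[bot] += 1
    return hits


sample = [
    '203.0.113.5 - - [10/May/2025:10:00:00 +0000] "GET /llms.txt HTTP/1.1" 404 153 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '203.0.113.5 - - [10/May/2025:10:00:05 +0000] "GET /index.html HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/May/2025:11:30:00 +0000] "GET /llms.txt HTTP/1.1" 404 153 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
print(count_llms_txt_hits(sample))
```

Reading the file line by line (for example, `with open("access.log") as f: count_llms_txt_hits(f)`) keeps memory use flat, even for large logs.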

Should you use llms.txt files?

According to both Google and the SEO community, llms.txt is currently ineffective, unsupported, and potentially problematic. It doesn’t control bots the way robots.txt does, nor does it provide any measurable SEO benefit. Instead, site owners are advised to focus on established tools like structured data, proper crawl directives, and high-quality content.
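Since robots.txt is the crawl directive that crawlers actually honour, it can be worth testing how your rules apply to specific user agents before deploying them. The minimal sketch below uses Python’s standard `urllib.robotparser`. The rules, paths and example.com URLs are hypothetical, and GPTBot (OpenAI’s crawler token) is used purely as an illustration. Python’s parser uses simple prefix matching, so it may not mirror exactly how Google interprets wildcard rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep GPTBot out of /private/, allow everyone else,
# and advertise the XML sitemap.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/

User-agent: *
Allow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("GPTBot", "https://example.com/private/report"),
    ("GPTBot", "https://example.com/blog/post"),
    ("Googlebot", "https://example.com/private/report"),
]:
    print(agent, url, parser.can_fetch(agent, url))

print(parser.site_maps())
```

Running checks like this against your live robots.txt (via `set_url()` and `read()`) is a quick way to confirm your crawl directives do what you intend, which, unlike llms.txt, crawlers actually respect.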
