RSAC Corporate AI models are already skewed to serve their makers’ interests, and unless governments and academia step up to build transparent alternatives, the tech risks becoming just another tool for commercial manipulation.

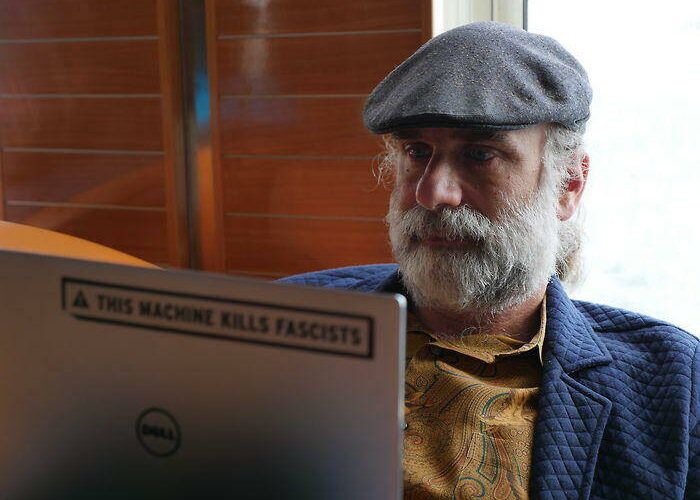

That’s according to cryptography and privacy guru Bruce Schneier, who spoke to The Register last week following a keynote speech at the RSA Conference in San Francisco.

“I worry that it’ll be like search engines, which you use as if they’re neutral third parties but are actually trying to manipulate you. They try to kind of get you to go to the websites of the advertisers,” he told us. “It’s integrity that we really need to think about, integrity as a security property and how it works with AI.”

During his RSA keynote, Schneier asked: “Did your chatbot recommend a particular airline or hotel because it’s the best deal for you, or because the AI company got a kickback from those companies?”

To deal with this quandary, Schneier proposes that governments take a more hands-on stance in regulating AI, forcing model developers to be more open about the information they receive and about how their models arrive at decisions.

He praised the EU AI Act, noting that it provides a mechanism to adapt the law as technology evolves, although he acknowledged there are teething problems. The legislation, which entered into force in August 2024, introduces phased requirements based on the risk level of AI systems. Companies deploying high-risk AI must maintain technical documentation, conduct risk assessments, and ensure transparency around how their models are built and how decisions are made.

Because the EU is the world’s largest trading bloc, the law is expected to have a significant impact on any company wanting to do business there, he opined. This could push other regions toward similar regulation, though he added that in the US, meaningful legislative action remains unlikely under the current administration.

Schneier noted that the issue of bias in AI agents is difficult to solve, largely because users often prefer systems that take their side. He gave the example of an AI agent designed for judges: just as a judge chooses law clerks who align with their views, judges will likely pick a digital aide that does the same.

The key to addressing this is transparency, he argues, so that people know what to expect. And it’s here that government and academia can help, by building transparent AI models that serve as a counterpoint to commercial systems.

I think I just want non-corporate AI

“I think I just want non-corporate AI,” he told us. “France is building an AI, right? Building an AI with non-profits. I want universities building them, and whether you trust them is complicated. Are you going to trust the Chinese government AI? Probably not.”

The French plan, dubbed Current AI when it was launched in February 2025, is a public-private partnership involving the French government, Salesforce, Google, and several philanthropic organizations.

The initiative is backed by $400 million in combined funding and has the support of nine other governments from both inside and outside the EU. The goal is to promote the development of open-source infrastructure, improve access to high-quality datasets, and support AI tools that serve the public good with greater transparency and accountability.

“Current AI can change the world of AI,” said French President Emmanuel Macron at the time. “By providing access to data, infrastructure, and computing power to various partners, Current AI will contribute to developing our own AI ecosystems in France and Europe, diversifying the market, and fostering innovation worldwide in a fair and transparent way.”

Schneier said that such models are a good way forward, but companies will fight these efforts tooth and nail. Nonetheless, it must be done for consumers, he said, and legislators can’t afford to fail this time.

“We failed with social media. We failed with search, but we can do it with AI,” he asserted. “It’s a combination of tech and policy.” ®
