OpenAI, as if trying to break its own record for the most confusing product lineup in history, has released two new AI models for ChatGPT: OpenAI o3 and OpenAI o4-mini.

These join GPT-4.5, which is still in testing, and GPT-4o, the default option for ChatGPT users. Naturally, I wanted to see how they’d perform against each other.

However, while there are all kinds of stress tests designed to find the very limit of what AI models can do, I’m more interested in how they perform under more normal circumstances. Would it matter to an average person who uses AI which model ChatGPT turned to for their occasional trivia question or funny image?

I designed four distinct prompts: one focused on visual logic, one on visual creativity, one on linguistics and translation, and one on poetry. Then I ran each prompt through o3, o4-mini, GPT-4o, and GPT-4.5 and watched how they handled it.

Before looking at the results, it’s worth noting what each model is supposedly best at, according to OpenAI at least. The new o3 model is supposed to be the genius among geniuses, with exceptional reasoning capabilities and a knack for interpreting images. It’s still under the GPT-4 family umbrella, but OpenAI says it performs better than its siblings on many fronts. The o4-mini model is the budget-friendly alternative. It’s faster and cheaper, if slightly less powerful.

GPT-4.5 is supposedly the most capable model OpenAI has ever built in the broadest sense. It’s said to be more thoughtful, better at understanding context, better at thinking in longer terms, and generally better at combining logic and empathy.

For most people, the ChatGPT model they will deal with is GPT-4o. OpenAI’s first natively multimodal model can do it all and do it well, even if it lacks the logical or emotional flourishes of its newer siblings.

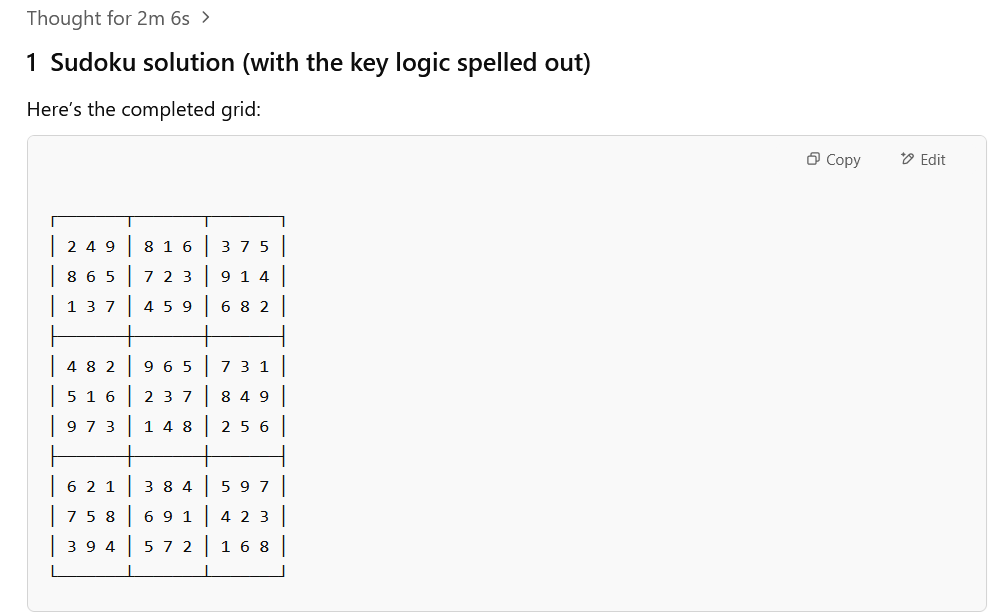

Sudoku

I started with a test of the visual reasoning that the new models claim to be so adept at. I decided to combine it with some logic testing that even I could understand: a Sudoku puzzle.

I also wanted them to explain their answer, as otherwise it’s not much of an AI assistant, just a machine for solving Sudoku. I wanted them not just to dump an answer, but to walk through the logic. I uploaded the same image to each model and asked: “Here’s a photo of a Sudoku puzzle. Can you solve it and explain your reasoning step by step?”

The answer was yes for all of them. The o3 and o4-mini versions showed their thinking before going through the answer, but all of them got it right. What was more interesting was the brevity of o4-mini and the very mathematical reasoning in both new models.

GPT-4o and GPT-4.5, by contrast, were more conversational, explaining why “you can’t put any other number here” rather than showing an actual equation. As an additional test, I gave the models a deliberately impossible Sudoku sheet. All of them spotted the problem, but where the others simply walked through the issues, GPT-4o, for some reason, wrote out an ‘answer’ sheet that just had a lot of zeroes on it.
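For the curious, the most obvious kind of impossible sheet, one where a digit already repeats in a row, column, or box, can be caught mechanically. The following is only a minimal sketch of that idea, not what any of the models actually did. It assumes the puzzle has already been typed in as a 9x9 list of integers with 0 for blank cells, and the function name `find_conflicts` is made up for illustration. It will not catch puzzles that are unsolvable for subtler reasons.

```python
def find_conflicts(grid):
    """Return (unit, index, digit) for every repeated digit in a row, column, or 3x3 box.

    Assumes `grid` is a 9x9 list of ints, with 0 marking a blank cell.
    """
    units = []
    for i in range(9):
        units.append(("row", i, [grid[i][c] for c in range(9)]))
        units.append(("column", i, [grid[r][i] for r in range(9)]))
        # Top-left corner of the i-th 3x3 box, counting left to right, top to bottom.
        top, left = 3 * (i // 3), 3 * (i % 3)
        box = [grid[top + r][left + c] for r in range(3) for c in range(3)]
        units.append(("box", i, box))

    conflicts = []
    for name, index, cells in units:
        seen = set()
        for digit in cells:
            if digit == 0:
                continue  # blanks never conflict
            if digit in seen:
                conflicts.append((name, index, digit))
            seen.add(digit)
    return conflicts


if __name__ == "__main__":
    puzzle = [[0] * 9 for _ in range(9)]
    puzzle[0][0] = 5
    puzzle[0][8] = 5  # same row, different column and box
    print(find_conflicts(puzzle))
```

An empty result only means no digit is visibly duplicated; it does not prove the puzzle has a solution.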

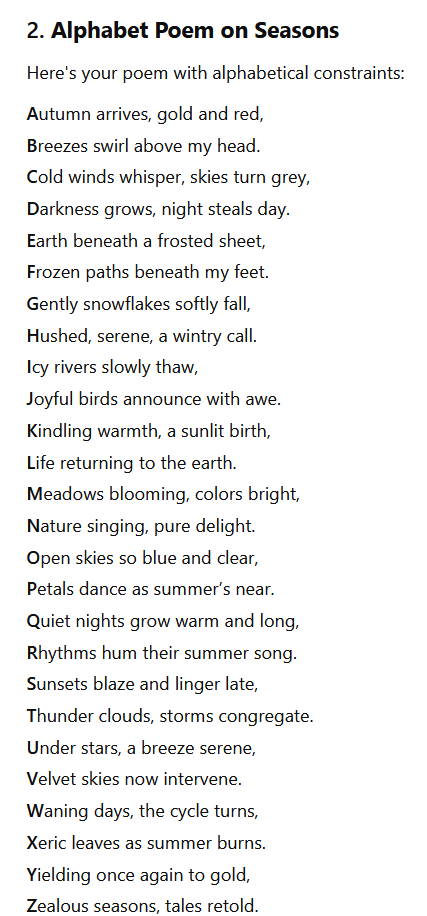

Poetry

This one was meant to test creativity, with some limitations to season it with logic. I asked the models to: “Write a short poem about the changing seasons, but each line must start with the next letter of the alphabet, beginning with ‘A’.”

This kind of prompt forces a model to strike a balance between structure and imagination. You need creativity to describe the seasons, and discipline to follow the alphabetical format. While all of them followed the format, o3 stood out for being the only one that didn’t rhyme.

All of the others managed to stick to the brief, with greater or lesser artistic ability, and all had a mix of couplets and four-line rhymes. They were all a little bland, good for a greeting card perhaps, but hardly Dickinson. Still, the GPT-4.5 poem was charming and worth sharing, as I did above.
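The alphabetical rule in this prompt is simple enough to verify without reading the poems closely. Here is a minimal sketch of such a check, assuming the poem is pasted in as plain text with one line of verse per line. The function name `follows_alphabet` is hypothetical, and the check ignores edge cases such as lines that open with a quotation mark.

```python
import string


def follows_alphabet(poem):
    """Return True if each non-blank line starts with the next letter, beginning at 'A'."""
    lines = [line.strip() for line in poem.splitlines() if line.strip()]
    # An empty poem, or one longer than the alphabet, cannot satisfy the rule.
    if not lines or len(lines) > len(string.ascii_uppercase):
        return False
    return all(
        line[0].upper() == expected
        for expected, line in zip(string.ascii_uppercase, lines)
    )


if __name__ == "__main__":
    sample = "Autumn leaves drift down\nBare branches wait\nCold winds arrive"
    print(follows_alphabet(sample))
```

The check says nothing about rhyme or quality, which is exactly where the models differed.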

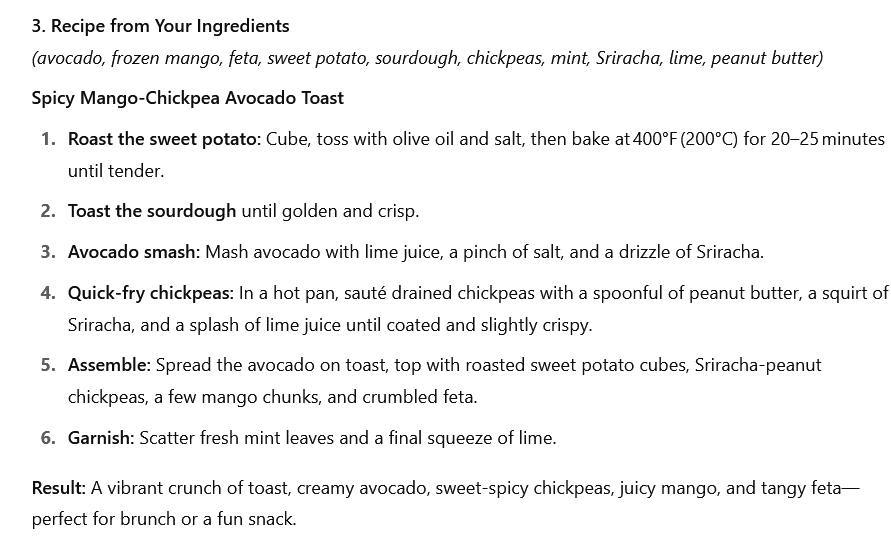

What can I cook?

For this test, I gathered a bunch of random ingredients and took a picture of them, then uploaded the image, which included an avocado, frozen mango chunks, feta, a sweet potato, sourdough bread, chickpeas, mint, Sriracha, lime, and peanut butter.

Why that mix? For no reason other than to see what would happen when I told the AI models: “Here’s a picture of the ingredients I have. What can I cook with them?”

o3 was very practical, suggesting “Spicy Sweet‑Potato & Chickpea Toasts with Avocado‑Mango Smash and Peanut‑Sriracha Drizzle.” It broke down the different components into a table with the ingredients and recipe for each, and even added a bullet-point list of the reasons why it would taste good.

The o4-mini recipe, which you can see above, for “Spicy Mango‑Chickpea Avocado Toast,” was straightforward, with the instructions and a nice description of the “result” of the recipe. GPT-4o had a similar idea with “Sweet and Spicy Avocado-Chickpea Toast,” but, surprisingly for the otherwise conversational model, it was a very brief guide, even shorter than o4-mini’s.

Perhaps not surprisingly, GPT-4.5 came out with a full menu of dishes, including “Avocado & Chickpea Toast with Mango Salsa,” “Sweet Potato & Tofu Buddha Bowl,” “Spicy Mango-Peanut Tofu Wrap,” “Thai-Style Sweet Potato & Chickpea Soup,” and “Refreshing Mango-Mint Sorbet.”

Further, each had a description and discussion of the taste and style. I’m genuinely eager to make the sorbet. It’s just a blend of frozen mango cubes with fresh mint, a squeeze of lime, and a spoonful of peanut butter to make it creamy; you then freeze it and serve it with mint leaves and lime zest.

Rain Translate

The last test was all about nuance. I asked the AI models to: “Translate the phrase ‘It’s raining cats and dogs’ into Japanese, ensuring the meaning is preserved culturally.”

Literal translations of idioms rarely work. What I was looking for was an understanding of not just the words, but the context. This was mostly a reminder of how far even the baseline ChatGPT models have come. All of them came back with variations on the same answer: that there isn’t an exact translation, but the closest is to say it’s raining like someone has overturned a bucket.

GPT-4.5 did give me the literal translation, while also explaining why it wouldn’t make sense to say it in Japanese. Personally, I just enjoyed the extreme emoji usage of GPT-4o, which felt, for some reason, that it had to translate the phrase into those little pictures too.

Model mania

I’ll say that none of the models performed poorly. Each definitely had its own quirks and emphasized different things. o3 is the most analytical and precise; o4-mini had the same approach but was a little faster. GPT-4.5 definitely took pains to mimic human responses the most, and GPT-4o just loves emojis.

At the more extreme levels of testing or with complex prompts, I’m sure each model stands out as very different from the others. But, for basic prompts that aren’t centered on business or software code, you can’t really go wrong with any of them. If I’m in the kitchen, though, I may defer to GPT-4.5, at least if the sorbet turns out as good as it promises.
