Forward-looking: Nvidia CEO Jensen Huang unveiled a powerful lineup of AI-accelerating GPUs at the company’s 2025 GPU Technology Conference, including the Blackwell Ultra B300, Vera Rubin, and Rubin Ultra. These GPUs are designed to boost AI performance, particularly in inference and training tasks.

The Blackwell Ultra B300, set for release in the second half of 2025, increases memory capacity from 192GB to 288GB of HBM3e and offers a 50% boost in dense FP4 tensor compute compared to the Blackwell GB200.

These improvements support larger AI models and boost inference performance for models such as DeepSeek R1. In a full NVL72 rack configuration, the Blackwell Ultra will deliver 1.1 exaflops of dense FP4 inference compute, a significant leap over the current Blackwell B200 setup.
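The Blackwell Ultra figures above can be sanity-checked with a little arithmetic. The minimal sketch below assumes the rack-level FP4 figure is simply the sum of 72 identical GPUs (the constant and function names are illustrative, not Nvidia terminology) and converts it to a per-GPU number alongside the memory uplift.

```python
# Back-of-the-envelope check of the Blackwell Ultra figures quoted in this article.
# Assumption: the rack-level FP4 figure is the plain sum of 72 identical GPUs.

PREVIOUS_HBM_GB = 192        # per-GPU HBM capacity before Blackwell Ultra
B300_HBM_GB = 288            # per-GPU HBM3e capacity on Blackwell Ultra B300
NVL72_DENSE_FP4_EF = 1.1     # dense FP4 exaflops for a full NVL72 rack
GPUS_PER_NVL72 = 72          # GPU count implied by the "72" in NVL72


def memory_uplift(old_gb: float, new_gb: float) -> float:
    """Return the fractional increase in capacity (0.5 means +50%)."""
    return new_gb / old_gb - 1


def per_gpu_petaflops(rack_exaflops: float, gpus: int) -> float:
    """Spread a rack-level exaflop figure evenly across GPUs, in petaflops."""
    return rack_exaflops * 1000 / gpus  # 1 exaflop = 1000 petaflops


if __name__ == "__main__":
    print(f"HBM uplift: {memory_uplift(PREVIOUS_HBM_GB, B300_HBM_GB):.0%}")
    print(f"Dense FP4 per GPU: {per_gpu_petaflops(NVL72_DENSE_FP4_EF, GPUS_PER_NVL72):.1f} PF")
```

This prints a 50% memory uplift and roughly 15.3 petaflops of dense FP4 per GPU; the per-GPU value is only as precise as the rounded 1.1-exaflop rack figure.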

The Blackwell Ultra B300 is not just a standalone GPU. Alongside the core B300 unit, Nvidia is introducing new B300 NVL16 server rack solutions, the GB300 DGX Station, and the GB300 NVL72 full rack system.

Combining eight NVL72 racks forms the complete Blackwell Ultra DGX SuperPOD (pictured above), featuring 288 Grace CPUs, 576 Blackwell Ultra GPUs, 300TB of HBM3e memory, and an impressive 11.5 exaflops of FP4 compute power. These systems can be interconnected to create large-scale supercomputers, which Nvidia is calling “AI factories.”
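The SuperPOD totals can be split back into per-rack figures to see how they relate to the NVL72 name. This is a minimal sketch that assumes CPUs and GPUs are distributed evenly across the eight racks; the helper `per_rack` is hypothetical.

```python
# Split the DGX SuperPOD totals quoted above back into per-rack figures.
# Assumption: CPUs and GPUs are distributed evenly across the eight racks.

RACKS = 8
TOTAL_GRACE_CPUS = 288
TOTAL_GPUS = 576


def per_rack(total: int, racks: int) -> int:
    """Divide a system-wide count across racks, failing if it does not split evenly."""
    if total % racks:
        raise ValueError(f"{total} does not split evenly across {racks} racks")
    return total // racks


gpus_per_rack = per_rack(TOTAL_GPUS, RACKS)        # 72, matching the NVL72 name
cpus_per_rack = per_rack(TOTAL_GRACE_CPUS, RACKS)  # 36 Grace CPUs per rack
gpus_per_cpu = gpus_per_rack // cpus_per_rack      # 2 GPUs for every Grace CPU

print(f"{gpus_per_rack} GPUs and {cpus_per_rack} CPUs per rack, {gpus_per_cpu} GPUs per CPU")
```

Under the even-split assumption, each rack holds 72 GPUs and 36 Grace CPUs, a 2:1 GPU-to-CPU ratio.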

First teased at Computex 2024, next-gen Vera Rubin GPUs are expected to launch in the second half of 2026, delivering substantial performance improvements, particularly in AI training and inference.

Vera Rubin features tens of terabytes of memory and is paired with a custom Nvidia-designed CPU, Vera, which includes 88 custom Arm cores with 176 threads.

Each Vera Rubin GPU combines two dies in a single package, achieving 50 petaflops of FP4 inference performance per GPU. In a full NVL144 rack setup, Vera Rubin can deliver 3.6 exaflops of FP4 inference compute.
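The 3.6-exaflop rack figure lines up with the 50-petaflop number only under a particular reading of “NVL144.” The sketch below assumes that 144 counts individual GPU dies, that each GPU package holds two of them, and that the 50-petaflop figure applies per package; `rack_fp4_exaflops` is a hypothetical helper.

```python
# Reconstruct the Vera Rubin NVL144 rack figure from the per-GPU number.
# Assumptions: "144" counts GPU dies, each GPU package holds two dies,
# and the 50-petaflop FP4 figure applies to each package.

DIES_IN_RACK = 144
DIES_PER_GPU = 2
FP4_PF_PER_GPU = 50


def rack_fp4_exaflops(dies: int, dies_per_gpu: int, pf_per_gpu: float) -> float:
    """Count GPU packages in the rack and sum their FP4 throughput in exaflops."""
    if dies % dies_per_gpu:
        raise ValueError("die count must be a multiple of dies per GPU")
    gpus = dies // dies_per_gpu
    return gpus * pf_per_gpu / 1000  # 1 exaflop = 1000 petaflops


print(f"{rack_fp4_exaflops(DIES_IN_RACK, DIES_PER_GPU, FP4_PF_PER_GPU):.1f} exaflops FP4")
```

With 72 packages at 50 petaflops each, the result matches the 3.6-exaflop figure; counting all 144 dies at 50 petaflops each would instead give 7.2 exaflops, which does not.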

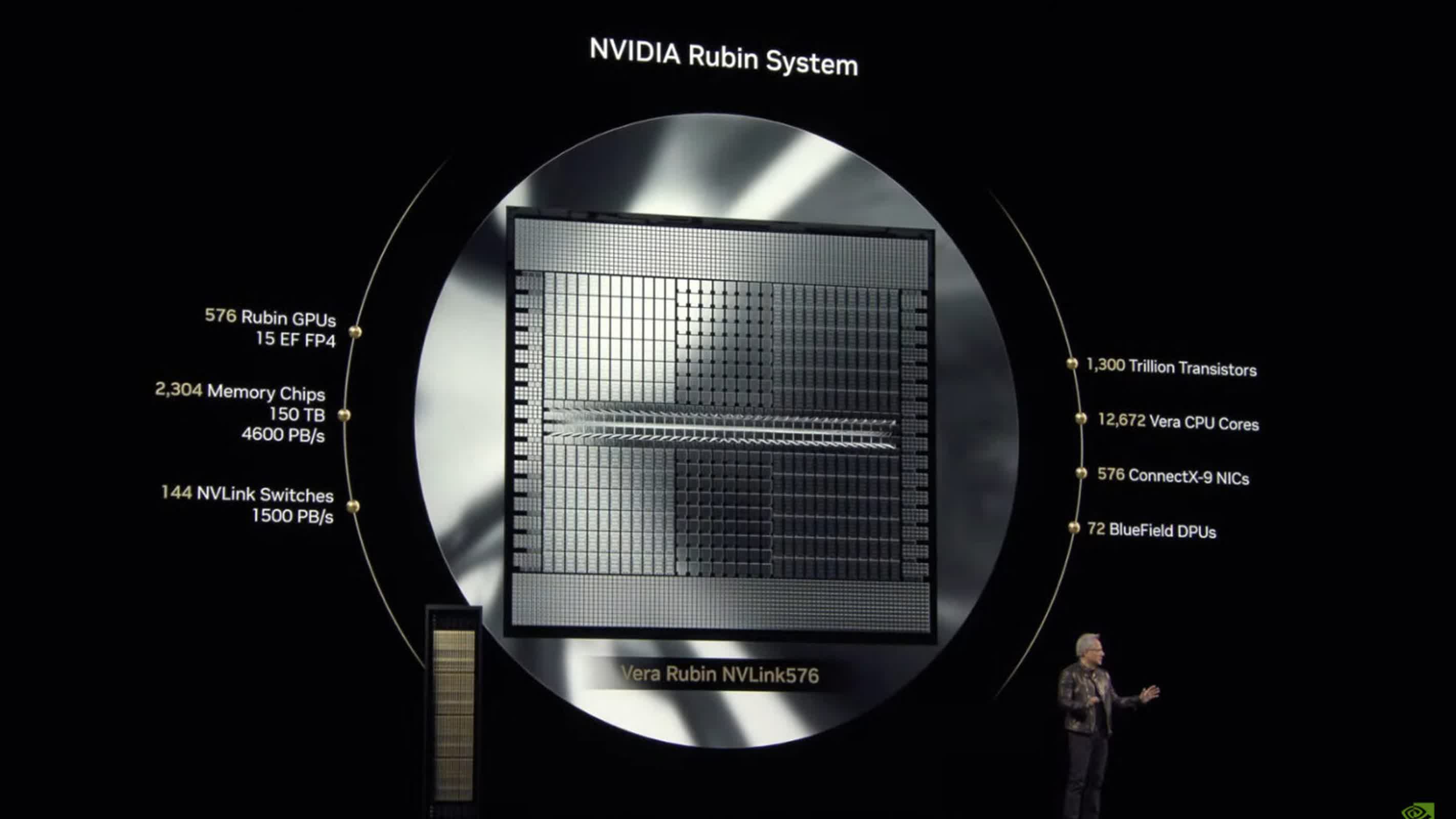

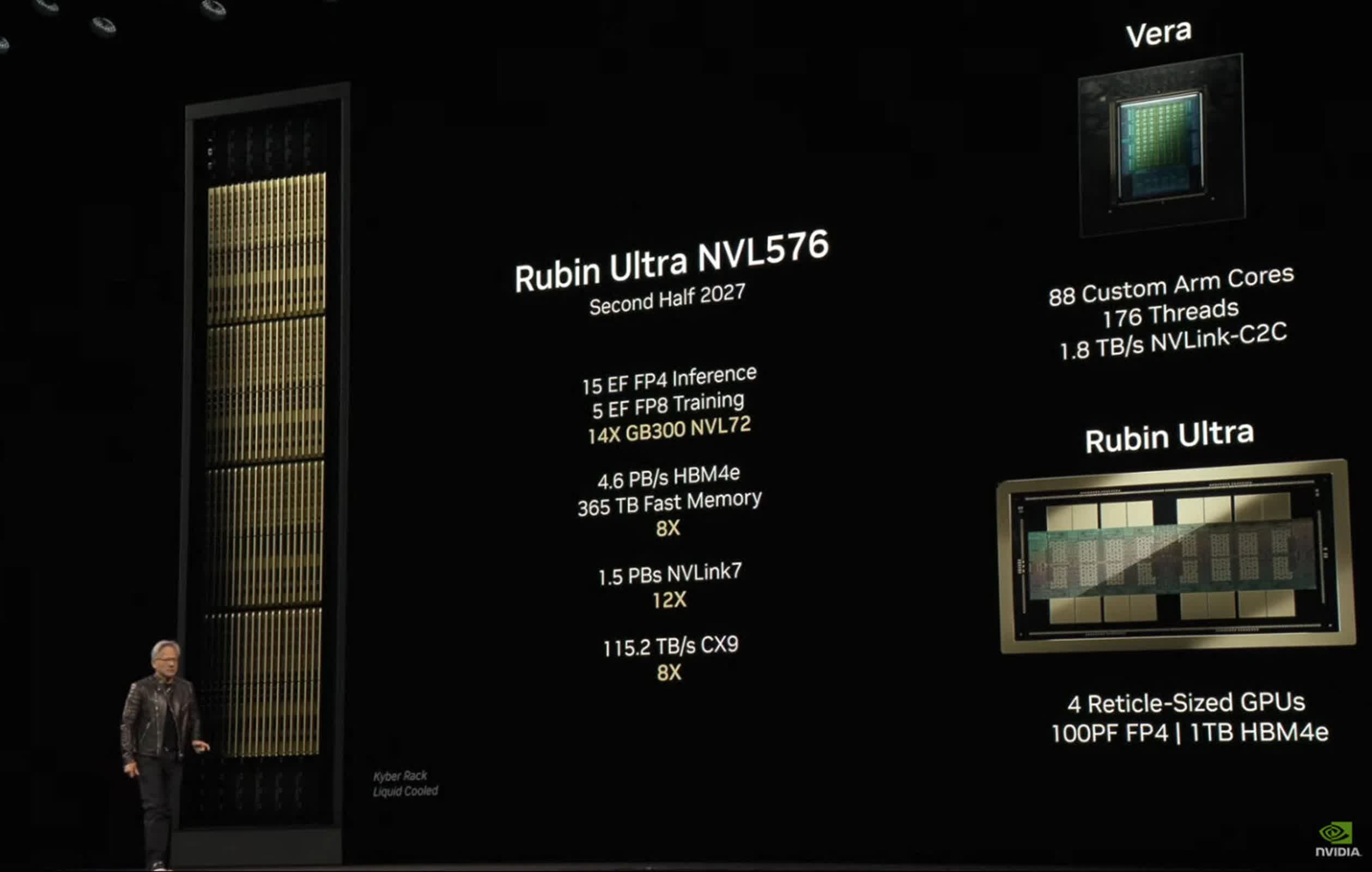

Building on Vera Rubin’s architecture, Rubin Ultra is slated for release in the second half of 2027. It will use the NVL576 rack configuration, with each GPU featuring four reticle-sized dies and delivering 100 petaflops of FP4 performance per GPU.

Rubin Ultra promises 15 exaflops of FP4 inference compute and 5 exaflops of FP8 training performance, significantly surpassing Vera Rubin’s capabilities. Each Rubin Ultra GPU will include 1TB of HBM4e memory, contributing to 365TB of fast memory across the entire rack.
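The same die-counting reading can be applied to Rubin Ultra. This minimal sketch assumes “576” counts GPU dies, that each GPU package holds four of them, and that the 100-petaflop FP4 and 1TB HBM4e figures are per package; all constant names are illustrative.

```python
# Reconstruct the Rubin Ultra NVL576 figures from the per-GPU numbers.
# Assumptions: "576" counts GPU dies, each GPU package holds four dies,
# and the 100-petaflop FP4 and 1TB HBM4e figures apply per package.

DIES_IN_RACK = 576
DIES_PER_GPU = 4
FP4_PF_PER_GPU = 100
HBM4E_TB_PER_GPU = 1
VERA_RUBIN_NVL144_FP4_EF = 3.6  # rack figure quoted for Vera Rubin

gpus = DIES_IN_RACK // DIES_PER_GPU                  # 144 GPU packages
fp4_exaflops = gpus * FP4_PF_PER_GPU / 1000          # 14.4, close to the quoted 15
hbm4e_tb = gpus * HBM4E_TB_PER_GPU                   # 144TB of HBM4e within the 365TB total
uplift = fp4_exaflops / VERA_RUBIN_NVL144_FP4_EF     # about 4x Vera Rubin NVL144

print(f"{gpus} GPUs, {fp4_exaflops:.1f} EF FP4, {hbm4e_tb} TB HBM4e, "
      f"{uplift:.1f}x Vera Rubin NVL144")
```

The sketch lands at 14.4 exaflops, slightly below the quoted 15, so treat it as an approximate consistency check rather than a derivation of Nvidia’s number.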

Nvidia also announced a next-generation GPU architecture called “Feynman,” expected to debut in 2028 alongside the Vera CPU. While details remain scarce, Feynman is expected to further advance Nvidia’s AI computing capabilities.

During his keynote, Huang outlined Nvidia’s ambitious vision for AI, describing data centers as “AI factories” that produce tokens processed by AI models. He also highlighted the potential for “physical AI” to power humanoid robots, leveraging Nvidia’s software platforms to train AI models in virtual environments for real-world applications.

Nvidia’s roadmap positions these GPUs as pivotal to the future of computing, emphasizing the need for more computational power to keep pace with AI advancements. The strategy comes as Nvidia aims to reassure investors following recent market fluctuations, building on the success of its Blackwell chips.
