Facing stiff competition from its long-time rival AMD and the ever-present specter of custom Arm silicon in the cloud, Intel on Monday launched another wave of Xeon 6 processors.

However, these new P-core Xeons aren’t trying to match AMD on core count or raw computational grunt this time. We got those parts, which were primarily aimed at HPC and AI-centric workloads, last fall.

Instead, the 6700P, 6500P, and 6300P-series Granite Rapids Xeons launching today are aimed squarely at the workhorses of the datacenter: think virtualization boxes, storage arrays, and, of course, big multi-socket database servers.

Having successfully restored core count parity with its x86 rival, Intel is leaning heavily on what remains of its reputation among customers, its complement of compute accelerators, and more aggressive pricing in a bid to stem its share losses in the datacenter.

Scaling Xeon 6 down

Peeling back the integrated heat spreader, we find a now-familiar collection of silicon. With Xeon 6, Intel has fully embraced a heterogeneous chiplet architecture that splits I/O functionality from compute and memory.

At the top of the stack are the 6900P-series parts we looked at last fall. These feature a trio of compute and memory dies built on the Intel 3 process node, flanked by a pair of Intel 7-based I/O dies.

While all of Intel’s Xeon 6 parts largely share the same I/O die, the new 6700P and 6500P Xeons use different compute tiles. These range from a pair of extreme-core-count dies on the 86-core part to a single high-core-count or low-core-count die on the 48-core and 16-core chips.

Here we see Intel has fully embraced the heterogeneous chiplet architecture pioneered by long-time rival AMD

This gives Intel some flexibility in balancing core count and clock speeds for specific workloads and, we imagine, helps quite a bit with yields. However, because the memory controller sits on the compute die rather than the I/O die, these chips are limited to eight memory channels, versus 12 on this generation’s flagship parts.
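To put the eight-versus-12-channel gap in rough numbers, the sketch below computes theoretical peak DRAM bandwidth as channels x transfer rate x bytes per transfer. It assumes an 8-byte (64-bit) data path per DDR5 channel and ignores ECC, refresh, and real-world efficiency, so treat the results as ceilings rather than measurements. The 12-channel line simply assumes the same 6,400 MT/s speed for comparison.

```python
# Back-of-envelope peak DRAM bandwidth: channels x transfer rate x bytes per transfer.
# Assumption: each DDR5 channel moves 64 data bits (8 bytes) per transfer; ECC bits,
# refresh, and controller efficiency are ignored, so these are theoretical ceilings.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mts: int) -> float:
    """Theoretical peak bandwidth in decimal GB/s for `channels` running at `mts` MT/s."""
    transfers_per_second = mts * 1_000_000
    return channels * transfers_per_second * BYTES_PER_TRANSFER / 1e9


if __name__ == "__main__":
    configs = {
        "8 channels, DDR5-6400": (8, 6400),
        "8 channels, MRDIMM-8000": (8, 8000),
        "12 channels at 6,400 MT/s (comparison)": (12, 6400),
    }
    for name, (channels, mts) in configs.items():
        print(f"{name}: {peak_bandwidth_gbs(channels, mts):.1f} GB/s")
```

By this formula, eight channels of DDR5-6400 top out around 409.6 GB/s, compared with 614.4 GB/s for 12 channels. MRDIMM-8000 support on some SKUs narrows the gap to 512 GB/s.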

Intel’s latest chips partly make up for this with support for two-DIMM-per-channel configurations, something notably absent on its earlier Granite Rapids parts. In single-socket configurations, the chips also support up to 136 lanes of PCIe 5.0 connectivity, versus 88 on the multi-socket-optimized processors.

With less silicon, this round of Xeon 6 processors runs a fair bit cooler and draws less power than the 500 W parts we looked at last year. TDPs range from 150 to 350 W, depending largely on core count. Intel says this means you can now fit up to 22 more cores in the same power footprint as the last generation.

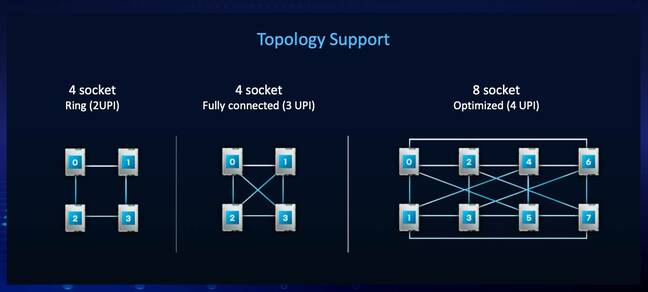

Intel remains committed to multi-socket systems

As of 2025, Intel remains the only supplier of x86 kit for large multi-socket systems, which are commonly used in large, mission-critical database environments. For reference, AMD’s Epyc processors have only ever been offered in single- or dual-socket configurations.

For those running these kinds of large, memory-hungry workloads, such as SAP HANA, Intel’s 6700P-series parts represent a significant performance uplift over its aging Sapphire Rapids Xeons.

Yep, Intel is still the only x86 vendor offering configurations that scale beyond two sockets

However, Compute Express Link (CXL) memory expansion devices now make it possible to reach the multi-terabyte memory capacities these workloads demand without adding CPU sockets. That raises the question of whether these big multi-socket configurations are even necessary.

One of CXL’s key features is the ability to add memory capacity to compatible systems through expansion modules that slot into a standard PCIe interface. The extra memory, which, by the way, doesn’t have to be DDR5, then appears as another NUMA node.
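On Linux, that extra NUMA node shows up under `/sys/devices/system/node`. The sketch below uses hypothetical helper names of our own (`parse_node`, `read_numa_nodes`; neither comes from any library). It reads each node’s `cpulist` and `meminfo` files and flags nodes that report memory but no CPUs, which is one common signature of CXL-attached expansion memory. A CPU-less node is not proof of CXL, so confirm with your platform’s own tooling.

```python
from pathlib import Path

SYSFS_NODE_ROOT = "/sys/devices/system/node"


def parse_node(node_id: int, cpulist_text: str, meminfo_text: str) -> dict:
    """Summarize one NUMA node from the contents of its cpulist and meminfo files."""
    mem_kb = 0
    for line in meminfo_text.splitlines():
        # Per-node meminfo lines look like: "Node 1 MemTotal:   268435456 kB"
        parts = line.split()
        if len(parts) >= 4 and parts[2] == "MemTotal:":
            mem_kb = int(parts[3])
    cpus = cpulist_text.strip()
    return {
        "id": node_id,
        "cpus": cpus,
        "mem_kb": mem_kb,
        # Memory but no CPUs: consistent with (not proof of) a CXL memory expander.
        "memory_only": cpus == "" and mem_kb > 0,
    }


def read_numa_nodes(root: str = SYSFS_NODE_ROOT) -> list:
    """Read every nodeN directory under `root` (Linux only), ordered by node number."""
    node_dirs = sorted(Path(root).glob("node[0-9]*"), key=lambda p: int(p.name[4:]))
    return [
        parse_node(
            int(d.name[4:]),
            (d / "cpulist").read_text(),
            (d / "meminfo").read_text(),
        )
        for d in node_dirs
    ]


if __name__ == "__main__":
    if Path(SYSFS_NODE_ROOT).exists():
        for node in read_numa_nodes():
            tag = " (memory-only)" if node["memory_only"] else ""
            print(f"node{node['id']}: cpus=[{node['cpus']}] {node['mem_kb']} kB{tag}")
```

The parsing is kept separate from the file reads so it can be checked against sample text on machines without this sysfs layout.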

Despite these developments, Intel Senior Fellow Ronak Singhal doesn’t see demand for four- and eight-socket systems going away any time soon. “When you’re looking at the memory expansion, there’s a certain amount you can do with CXL, but for the people that are going to four-socket and eight-socket, and even beyond, they want to get those additional cores as well,” he said.

Intel leans hard on its accelerator investments

Compared to the last generation, Intel says its 6700P-series chips will deliver anywhere from 14 to 54 percent higher performance than its top-specced fifth-gen Xeons.

Against its competition, however, Intel is clearly leaning quite heavily on the integrated accelerator engines baked into its chips to differentiate them and give itself a leg up.

While the rise of generative AI has shifted the meaning of "accelerator" toward GPUs and other dedicated AI chips, Intel has been building custom accelerators for things like cryptography, security, storage, analytics, networking, and, yes, AI into its chips for years now.

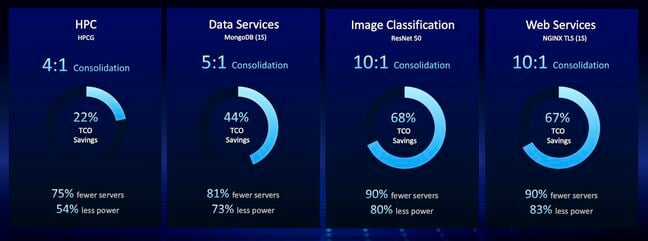

Leaning on its onboard accelerators, Intel says a single Xeon 6 server has the potential to replace up to ten second-gen Xeon boxes

Along with higher core counts and instructions per clock this generation, Intel says its cryptographic engines and Advanced Matrix Extensions (AMX) now mean a single Xeon 6 server can take the place of up to ten Cascade Lake systems, at least for workloads like image classification and Nginx web serving with TLS.
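Before counting on those AMX speedups, you may want to check whether a Linux box actually exposes the extensions. The kernel lists CPU feature flags in `/proc/cpuinfo`, and `amx_tile`, `amx_bf16`, and `amx_int8` are the AMX-related flag names. The sketch below is a minimal parser built on that assumption. It only reports what the CPU and kernel advertise, not whether your software stack uses AMX.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for Advanced Matrix Extensions.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags(cpuinfo_text: str) -> set:
    """Return the AMX flags advertised on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Match the "flags" line exactly, not e.g. "vmx flags".
        if sep and key.strip() == "flags":
            return AMX_FLAGS & set(value.split())
    return set()


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        found = amx_flags(cpuinfo.read_text())
        print("AMX flags:", ", ".join(sorted(found)) or "none")
```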

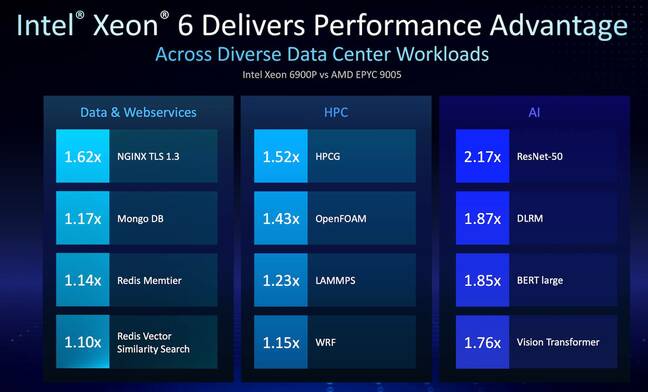

Against AMD’s latest and greatest fifth-gen Epycs, Intel claims a performance advantage of 62 percent in Nginx TLS, 17 percent in MongoDB, 52 percent in HPCG, 43 percent in OpenFOAM, and 2.17x in ResNet-50.

Here’s how Intel says its latest Xeons stack up against Epyc. Obviously, take these with a grain of salt

As usual, we recommend taking these performance claims with a grain of salt. They are for Intel’s flagship 6900P processors, and they focus on workloads that benefit directly from either its integrated accelerator engines or its early support for speedy MRDIMM memory.

That said, if your workloads can take advantage of these accelerator engines, Intel’s Xeon 6 lineup may be worth a closer look. Speaking of which, here’s a full rundown of the 6700P and 6500P-series silicon launching today.

Intel Xeon 6 6700P/6500P Performance SKUs

| Cores | Base clock | All-core turbo | Max turbo | L3 cache | TDP | Max sockets | Memory channels | Memory speed (DDR5/MRDIMM) | UPI links | PCIe 5.0 lanes | Tray price |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 86 | 2 GHz | 3.2 GHz | 3.8 GHz | 336 MB | 350 W | 2 | 8 | 6,400/8,000 MT/s | 4 | 88 | $10,400 |
| 64 | 2.4 GHz | 3.6 GHz | 3.9 GHz | 336 MB | 350 W | 2 | 8 | 6,400/8,000 MT/s | 4 | 88 | $9,595 |
| 48 | 2.7 GHz | 3.8 GHz | 3.9 GHz | 288 MB | 350 W | 2 | 8 | 6,400/8,000 MT/s | 4 | 88 | $6,497 |
| 32 | 3.1 GHz | 4.1 GHz | 4.3 GHz | 336 MB | 300 W | 2 | 8 | 6,400/8,000 MT/s | 4 | 88 | $5,250 |
| 32 | 2.9 GHz | 4 GHz | 4 GHz | 192 MB | 330 W | 2 | 8 | 6,400/8,000 MT/s | 4 | 88 | $4,995 |
| 36 | 2 GHz | 3.4 GHz | 4.1 GHz | 144 MB | 205 W | 2 | 8 | 6,400/NA MT/s | 4 | 88 | $3,351 |
| 32 | 2.5 GHz | 3.6 GHz | 3.8 GHz | 144 MB | 250 W | 2 | 8 | 6,400/NA MT/s | 4 | 88 | $3,726 |
| 24 | 3 GHz | 4.2 GHz | 4.2 GHz | 144 MB | 255 W | 2 | 8 | 6,400/NA MT/s | 4 | 88 | $2,878 |
| 16 | 3.2 GHz | 4 GHz | 4.2 GHz | 72 MB | 190 W | 2 | 8 | 6,400/NA MT/s | 3 | 88 | $1,195 |
| 8 | 3.5 GHz | 4.3 GHz | 4.3 GHz | 48 MB | 150 W | 2 | 8 | 6,400/NA MT/s | 3 | 88 | $765 |

Intel Xeon 6 6700P/6500P Mainline SKUs

| Cores | Base clock | All-core turbo | Max turbo | L3 cache | TDP | Max sockets | Memory channels | Memory speed (DDR5) | UPI links | PCIe 5.0 lanes | Tray price |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 86 | 2 GHz | 3.2 GHz | 3.8 GHz | 336 MB | 350 W | 8 | 8 | 6,400 MT/s | 4 | 88 | $19,000 |
| 64 | 2.4 GHz | 3.6 GHz | 3.9 GHz | 336 MB | 330 W | 8 | 8 | 6,400 MT/s | 4 | 88 | $16,000 |
| 48 | 2.5 GHz | 3.8 GHz | 4.1 GHz | 192 MB | 300 W | 8 | 8 | 6,400 MT/s | 4 | 88 | $12,702 |
| 32 | 2.9 GHz | 4.1 GHz | 4.2 GHz | 144 MB | 270 W | 8 | 8 | 6,400 MT/s | 4 | 88 | $6,540 |
| 24 | 2.7 GHz | 3.9 GHz | 4.1 GHz | 144 MB | 210 W | 8 | 8 | 6,400 MT/s | 4 | 88 | $2,478 |
| 16 | 3.6 GHz | 4.2 GHz | 4.3 GHz | 72 MB | 210 W | 8 | 8 | 6,400 MT/s | 3 | 88 | $3,622 |
| 8 | 4 GHz | 4.3 GHz | 4.3 GHz | 48 MB | 165 W | 8 | 8 | 6,400 MT/s | 3 | 88 | $2,816 |
| 64 | 2.2 GHz | 3.4 GHz | 3.8 GHz | 288 MB | 330 W | 2 | 8 | 6,400 MT/s | 4 | 88 | $7,803 |
| 48 | 2.1 GHz | 3.3 GHz | 3.8 GHz | 288 MB | 270 W | 2 | 8 | 6,400 MT/s | 4 | 88 | $4,650 |
| 32 | 2.3 GHz | 3.7 GHz | 4.1 GHz | 144 MB | 225 W | 2 | 8 | 6,400 MT/s | 4 | 88 | $2,234 |
| 24 | 2.4 GHz | 3.4 GHz | 4 GHz | 144 MB | 210 W | 2 | 8 | 6,400 MT/s | 4 | 88 | $1,295 |
| 16 | 2.3 GHz | 3.8 GHz | 3.8 GHz | 72 MB | 150 W | 2 | 8 | 6,400 MT/s | 3 | 88 | $740 |
| 12 | 2.2 GHz | 3.9 GHz | 4.1 GHz | 48 MB | 150 W | 2 | 8 | 6,400 MT/s | 3 | 88 | $563 |

Intel Xeon 6 6700P/6500P Single-socket SKUs

| Cores | Base clock | All-core turbo | Max turbo | L3 cache | TDP | Max sockets | Memory channels | Memory speed (DDR5/MRDIMM) | UPI links | PCIe 5.0 lanes | Tray price |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 | 2 GHz | 3.2 GHz | 3.8 GHz | 336 MB | 350 W | 1 | 8 | 6,400/8,000 MT/s | 0 | 136 | $8,960 |
| 64 | 2.5 GHz | 3.6 GHz | 3.9 GHz | 336 MB | 350 W | 1 | 8 | 6,400/8,000 MT/s | 0 | 136 | $6,570 |
| 48 | 2.5 GHz | 3.7 GHz | 3.8 GHz | 288 MB | 300 W | 1 | 8 | 6,400/NA MT/s | 0 | 136 | $4,421 |
| 32 | 2.5 GHz | 3.9 GHz | 4.1 GHz | 144 MB | 245 W | 1 | 8 | 6,400/NA MT/s | 0 | 136 | $2,700 |
| 24 | 2.6 GHz | 4.1 GHz | 4.1 GHz | 144 MB | 225 W | 1 | 8 | 6,400/NA MT/s | 0 | 136 | $1,250 |
| 16 | 3.2 GHz | 4.1 GHz | 4.2 GHz | 72 MB | 150 W | 1 | 8 | 6,400/NA MT/s | 0 | 136 | $815 |

Intel gets aggressive on pricing

With its Xeon 6 6700P and 6500P-series launch, the x86 giant is getting considerably more aggressive on pricing.

Core for core, Intel has historically charged a premium for its chips compared to AMD. When we compared Intel’s fourth-gen Xeon launch (that’s Sapphire Rapids, in case you’d forgotten) with AMD’s fourth-gen Epyc processors, launch prices differed by anywhere from a few hundred dollars to several thousand, depending on the segment.

With Intel’s latest Xeon launch, we don’t see the same pricing dynamics at play. Looking at launch prices for AMD’s fifth-gen Epycs from last fall, it’s clear Intel has tried to match, if not undercut, its smaller competitor on price at any given core count or target market.

The only exception is Intel’s four- and eight-socket-capable chips. These have no competition in the x86 space, so Intel still charges a premium for them. Need a maxed-out, eight-socket database server? You can expect to pay more than $150,000 ($19,000 a piece) in CPUs alone.
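You can check that figure, and the size of the multi-socket premium, against the tray prices in the tables above. The sketch below compares the two 86-core parts, which list the same clocks, cache, and 350 W TDP but differ in socket support and price. It uses list tray prices only, which exclude volume discounts. The helper names are ours, chosen for illustration.

```python
# Tray prices (USD) for the two 86-core SKUs listed in the tables above.
PRICE_8S_86C = 19_000  # eight-socket-capable part
PRICE_2S_86C = 10_400  # two-socket part with the same listed clocks, cache, and TDP
CORES = 86


def cpu_bill(sockets: int, unit_price: int) -> int:
    """Total CPU cost for a server populated with `sockets` identical processors."""
    return sockets * unit_price


def per_core(unit_price: int, cores: int) -> float:
    """Tray price divided by core count."""
    return unit_price / cores


def premium_pct(price: int, baseline: int) -> float:
    """How much more `price` costs than `baseline`, in percent."""
    return (price / baseline - 1) * 100


if __name__ == "__main__":
    print(f"8-socket CPU bill: ${cpu_bill(8, PRICE_8S_86C):,}")
    print(f"Per core: ${per_core(PRICE_8S_86C, CORES):.2f} "
          f"vs ${per_core(PRICE_2S_86C, CORES):.2f}")
    print(f"Multi-socket premium: {premium_pct(PRICE_8S_86C, PRICE_2S_86C):.0f}%")
```

Eight of the eight-socket parts come to $152,000. Each core also costs about 83 percent more ($220.93 versus $120.93) than on the otherwise matching two-socket chip.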

Of course, these are tray prices, so they don’t factor in the volume discounts either chipmaker offers customers. Prices aren’t fixed, and they are commonly revised to account for competitive pressure or market conditions.

We’ve already seen Intel slash prices on its flagship 6900P-series Xeons by an average of $4,181 since their launch in September. It’s easy to see why: AMD was charging nearly $5,000 less for the same number of cores with its fifth-gen Epyc Turin parts. Intel’s more aggressive pricing makes its chip more than $500 cheaper.

And that’s not the end of the story. Unless AMD makes steep price cuts to the Epyc lineup, Intel’s Xeon 6 processors could end up significantly cheaper than AMD’s if the Trump administration imposes a tariff of 25 percent or more on semiconductor imports.

Xeon 6 is among the few current-generation product lines Intel still manufactures in-house. Assuming it can keep up with US demand from its domestic fabs, Intel is positioned to sidestep those tariffs. AMD, meanwhile, relies on TSMC for manufacturing.

New entry-level Xeons and SoCs for the edge

Alongside its mainstream 6700P and 6500P processor families, Intel is also rolling out new embedded and entry-level Xeon processors.

At the bottom of the stack are Intel’s 6300-series Xeons, available in four-, six-, and eight-core flavors with clock speeds up to 5.7 GHz. However, they have only two DDR5 memory channels, maxing out at 128 GB of capacity and speeds of 4,800 MT/s. That makes them more closely aligned with the baby Epycs from AMD we looked at last year than with your typical datacenter CPU.

For embedded, edge, and networking environments, Intel is also rolling out a new Xeon 6 SoC variant, which it’s positioning as a successor to its fourth-gen Xeon with vRAN Boost.

Alongside its datacenter-focused parts, Intel is rolling out a new edge-optimized SKU with an I/O die tuned for virtualized RAN networks

The chip is designed to be integrated directly into edge compute, networking, or security appliances. It comes with up to 42 cores and features a unique I/O die with 200 Gbps of aggregate Ethernet bandwidth, presumably broken out across eight 25GbE links.

Beyond powering things like virtualized radio access network (vRAN) systems, the chips are also equipped with accelerators for cryptography, AI, or media transcoding. The idea is that these chips can be deployed in security appliances or used to preprocess data at the edge.

You’re probably not cool enough for Intel’s 288-core monster

If you’re wondering what ever happened to the 288-core E-core Xeon that former CEO Pat Gelsinger teased back at Intel Innovation in 2023, it’s still lurking in the shadows, Singhal told press ahead of the launch.

But unlike the Sierra Forest E-core Xeons Intel launched last year, it appears the chipmaker is keeping its highest-core-count parts in reserve for cloud service providers.

“The 288-core is now in production. We actually have this deployed now with a large cloud customer,” Singhal said. “We’re really working on that 288-core processor closely with each of our customers to customize what we’re building there for their needs.”

This isn’t surprising. The part was always designed to serve the cloud and managed service provider market, offering loads of power-efficient, if not necessarily the most feature-packed or performant, cores for serving up microservices, web-scale apps, and other throughput-oriented applications.

We also know that demand for Intel’s E-core parts hasn’t lived up to expectations.

“What we’ve seen is that it’s more of a niche market, and we haven’t seen volume materialize there as fast as we expected,” Intel co-CEO Michelle Johnston Holthaus said during the company’s Q4 earnings call.

This ultimately led Intel to delay its 18A-based Clearwater Forest parts from 2025 to 2026. But as we noted, the timing of that launch was always awkwardly close to Sierra Forest. In our opinion, it left potential customers in the difficult position of either becoming early adopters or waiting a little longer for what’s likely to be a far more refined and performant part.

According to Singhal, the part is already running in the lab, and an Intel customer has powered on its first systems using it. ®
