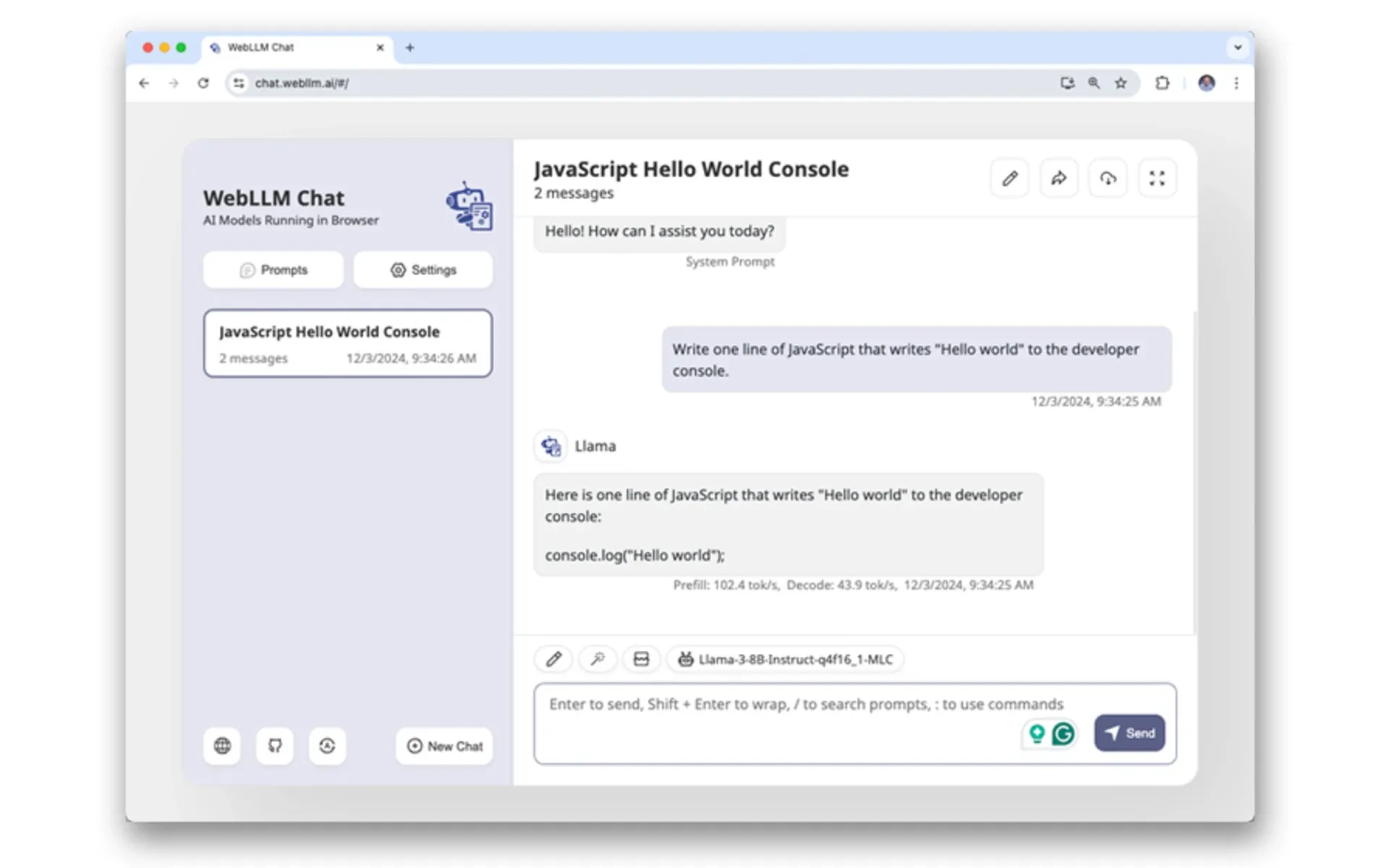

WebLLM and new browser APIs enable offline-capable chatbots that keep sensitive data on users’ devices while maintaining functionality.

In a significant development for web application capabilities announced on January 13, 2024, developers gained new tools for building local chatbots that run entirely on users’ devices, eliminating the need to transmit sensitive data to external servers.

According to Christian Liebel, a Google Developer Expert who authored documentation on the new capabilities, these advances center on WebLLM, a web-based runtime for large language models (LLMs) provided by Machine Learning Compilation. The technology combines WebAssembly and WebGPU to deliver near-native performance for AI model inference directly in web browsers.
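Because the runtime depends on both WebAssembly and WebGPU, an app might check for them before offering a local chatbot. The following is a minimal sketch, not part of WebLLM itself: `supportsWebLLMRuntime`, `GpuLike` and `RuntimeEnv` are hypothetical names, and the structural types exist only so the check compiles without DOM or WebGPU typings. It requests an adapter because a browser can expose `navigator.gpu` yet still have no usable GPU.

```typescript
// Minimal structural types so the check compiles without DOM or
// WebGPU typings; in a browser, pass `globalThis`.
interface GpuLike {
  requestAdapter(): Promise<unknown>;
}
interface RuntimeEnv {
  WebAssembly?: unknown;
  navigator?: { gpu?: GpuLike };
}

// Returns true only if WebAssembly is present and WebGPU can hand out
// an adapter; the mere existence of `navigator.gpu` is not enough.
async function supportsWebLLMRuntime(env: RuntimeEnv): Promise<boolean> {
  if (typeof env.WebAssembly !== "object" || env.WebAssembly === null) {
    return false; // no WebAssembly support
  }
  const gpu = env.navigator?.gpu;
  if (gpu === undefined) {
    return false; // browser does not expose WebGPU
  }
  const adapter = await gpu.requestAdapter();
  return adapter !== null; // null means no suitable GPU adapter is available
}
```

In a page, `await supportsWebLLMRuntime(globalThis)` could decide whether to show the local chatbot or a fallback.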

Alexandra Klepper, who published the initial announcement, noted that the implementation lets computers “generate new content, write summaries, analyze text for sentiment, and more” without requiring cloud connectivity. This marks a departure from existing cloud-based solutions, which typically process data on remote servers.

The technical specifications reveal significant implementation requirements. A model with 3 billion parameters compressed to 4 bits per parameter results in a file of roughly 1.4 GB that must be downloaded before first use. Larger 7-billion-parameter models, which offer better translation and knowledge capabilities, require downloads exceeding 3.3 GB.
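These sizes follow roughly from a back-of-the-envelope estimate: weight storage in bytes is about parameters × bits per parameter ÷ 8. The sketch below assumes the weights dominate the file and ignores tokenizer files, quantization scales and other metadata, so actual downloads differ somewhat; the function names are illustrative.

```typescript
// Approximate size of quantized weights: parameters × bits ÷ 8 bits per byte.
function approxModelBytes(parameters: number, bitsPerParameter: number): number {
  return (parameters * bitsPerParameter) / 8;
}

// Binary gibibytes (2^30 bytes), the unit many tools report as "GB".
const GIB = 1024 ** 3;
function toGiB(bytes: number): number {
  return bytes / GIB;
}

for (const [label, params] of [["3B", 3e9], ["7B", 7e9]] as const) {
  const bytes = approxModelBytes(params, 4);
  console.log(`${label}: ${(bytes / 1e9).toFixed(1)} GB = ${toGiB(bytes).toFixed(2)} GiB`);
}
```

For 3 billion parameters at 4 bits this gives 1.5 × 10⁹ bytes, about 1.40 GiB, which matches the quoted 1.4 GB. For 7 billion parameters it gives 3.5 × 10⁹ bytes, about 3.26 GiB, in the same range as the 3.3 GB figure once extra model files are included.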

A key component of the implementation is the Cache API, introduced alongside Service Workers. This programmable cache remains under developer control, enabling fully offline operation once models are downloaded. Caches are isolated per origin, meaning separate websites cannot share cached models.
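A cache-first loading pattern built on the Cache API might look like the following. This is a simplified sketch under stated assumptions: WebLLM manages caching of its model files itself, and `cacheFirst`, `CacheLike` and `ResponseLike` are hypothetical names chosen so the logic can run outside a browser. In a page or service worker, the `Cache` returned by `caches.open()` and the global `fetch` fit these parameters directly.

```typescript
// Minimal structural view of a cache; the browser's Cache object
// (from `await caches.open(name)`) satisfies it with R = Response.
interface CacheLike<R> {
  match(key: string): Promise<R | undefined>;
  put(key: string, value: R): Promise<void>;
}

// Anything with `ok` and `clone()`, such as the Fetch API's Response.
interface ResponseLike<R> {
  ok: boolean;
  clone(): R;
}

// Cache-first loading: serve from the cache when possible, otherwise
// download once and store a copy so later loads work offline.
async function cacheFirst<R extends ResponseLike<R>>(
  cache: CacheLike<R>,
  url: string,
  fetchFn: (url: string) => Promise<R>,
): Promise<R> {
  const cached = await cache.match(url);
  if (cached !== undefined) return cached; // works without a network
  const response = await fetchFn(url); // first use: network required
  if (response.ok) {
    // A Response body can be read only once, so store a clone and
    // return the original to the caller.
    await cache.put(url, response.clone());
  }
  return response;
}
```

For example, `const cache = await caches.open("model-cache"); const res = await cacheFirst(cache, modelUrl, fetch);`, where the cache name and `modelUrl` are placeholders. Because caches are per origin, files stored this way are visible only to pages from the same origin.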

The system supports three distinct message roles: system prompts that define the model’s behavior and role, user prompts for input, and assistant prompts for responses. This structure enables N-shot prompting, in which natural-language examples guide the model’s behavior.
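An N-shot prompt can be assembled as an ordered list of role-tagged messages: one system message, example user/assistant pairs, then the real input. WebLLM exposes an OpenAI-style chat completions interface that accepts messages with these roles; the helper `buildNShotMessages` below is a hypothetical sketch of building that array, and the sentiment examples are illustrative.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Builds an N-shot prompt: a system message that sets behavior, then
// example user/assistant pairs, then the actual user input last.
function buildNShotMessages(
  systemPrompt: string,
  examples: ReadonlyArray<readonly [input: string, output: string]>,
  userInput: string,
): ChatMessage[] {
  const messages: ChatMessage[] = [{ role: "system", content: systemPrompt }];
  for (const [input, output] of examples) {
    messages.push({ role: "user", content: input });
    messages.push({ role: "assistant", content: output });
  }
  messages.push({ role: "user", content: userInput });
  return messages;
}

const messages = buildNShotMessages(
  "Classify the sentiment of the text as positive or negative. Answer with one word.",
  [
    ["I love this phone.", "positive"],
    ["The service was terrible.", "negative"],
  ],
  "Great battery life, but the screen scratches easily.",
);
console.log(messages.map((m) => m.role).join(" → "));
```

The resulting array would then be passed as the `messages` field of a chat completion request; consult the WebLLM documentation for the exact request options.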

Security considerations feature prominently in the implementation. According to the technical documentation, developers must treat LLM responses like user input, accounting for potentially malformed or malicious values resulting from hallucinations or prompt injection attacks. The documentation explicitly warns against directly adding generated HTML to documents or automatically executing returned JavaScript code.
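In the browser, the simplest way to follow that advice is to display replies with `element.textContent = reply` rather than `innerHTML`, and never to pass model output to `eval()` or `new Function()`. Where an app must build an HTML string anyway, a minimal escaping helper such as the hypothetical `escapeHtml` below (not part of WebLLM) keeps the reply from being parsed as markup. It is only a sketch for text placed in element content or quoted attributes; it does not make output safe inside URLs, scripts or styles.

```typescript
// Escapes characters that are significant in HTML so untrusted model
// output is shown as literal text instead of being parsed as markup.
// "&" is replaced first so existing entities are not double-decoded.
function escapeHtml(untrusted: string): string {
  return untrusted
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// A reply produced via prompt injection stays inert once escaped.
const reply = '<img src=x onerror="alert(1)">';
console.log(`<p class="bot">${escapeHtml(reply)}</p>`);
```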

The technology has certain limitations. Because its behavior is non-deterministic, a model can produce varying or even contradictory responses to identical prompts. The documentation acknowledges that hallucinations may occur, with models generating incorrect information based on learned patterns rather than facts.

Local deployment offers specific advantages for user privacy and operational reliability. Because all data is processed on-device, no personally identifiable information is transmitted to external providers or regions. This approach also enables consistent response times and keeps the chatbot working during network outages.

The implementation also covers integration with Chrome’s experimental Prompt API, which lets multiple applications use a centrally downloaded model, addressing efficiency concerns about duplicate downloads across different web applications.

This development is part of broader efforts to enhance web application capabilities while preserving user privacy. The documentation emphasizes that developers must verify LLM-generated results before taking consequential actions, reflecting ongoing attention to reliability and safety in AI implementations.
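One way to act on that advice is to ask the model for structured output and validate it against what the app actually knows before doing anything irreversible. The sketch assumes a hypothetical `delete_note` action returned as JSON; `parseDeleteNoteAction` and the action shape are illustrative, not taken from the documentation.

```typescript
// Hypothetical action the app asks the model to return as JSON.
interface DeleteNoteAction {
  kind: "delete_note";
  noteId: string;
}

// Treats the reply as untrusted input: returns a validated action, or
// null if the text is malformed, has the wrong shape, or refers to a
// note that does not exist (for example, a hallucinated ID).
function parseDeleteNoteAction(
  raw: string,
  knownNoteIds: ReadonlySet<string>,
): DeleteNoteAction | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const obj = data as Record<string, unknown>;
  const noteId = obj.noteId;
  if (obj.kind !== "delete_note" || typeof noteId !== "string") return null;
  if (!knownNoteIds.has(noteId)) return null; // unknown target
  return { kind: "delete_note", noteId };
}
```

Even a validated action should usually be confirmed by the user before it runs, since a well-formed reply can still be the wrong one.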

For web developers interested in implementation, complete documentation and source code examples are available on the web.dev platform, with separate guides covering WebLLM integration and Prompt API usage.
