Forward-looking: A new report has revealed the vast number of Nvidia GPUs Microsoft used, and the innovations required to arrange them, to help OpenAI train ChatGPT. The news comes as Microsoft announces a major upgrade to its AI supercomputer to further its homegrown generative AI initiative.

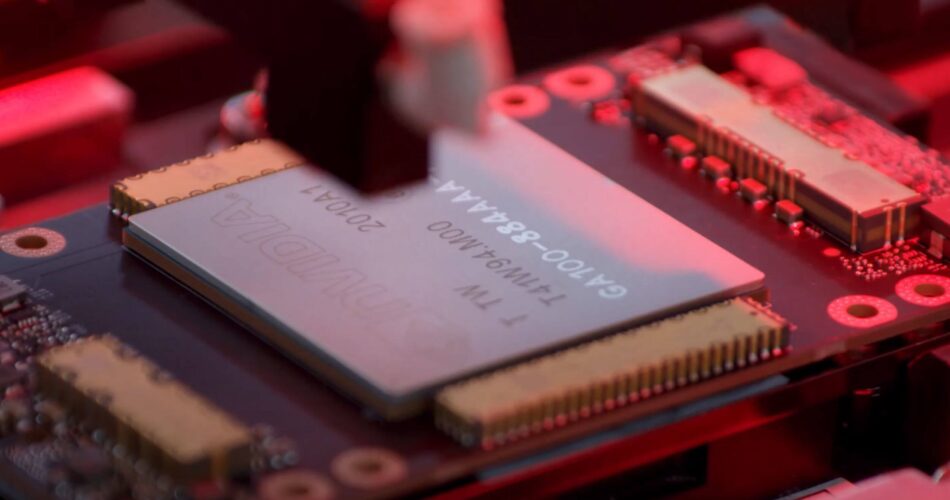

According to Bloomberg, OpenAI trained ChatGPT on a supercomputer Microsoft built from tens of thousands of Nvidia A100 GPUs. This week, Microsoft announced a new array using Nvidia's newer H100 GPUs.

The challenge facing the companies began in 2019, after Microsoft invested $1 billion in OpenAI and agreed to build an AI supercomputer for the startup. However, Microsoft did not have the hardware in-house for what OpenAI needed.

After acquiring Nvidia's chips, Microsoft had to rethink how it arranged such a large number of GPUs to prevent overheating and power outages. The company won't say exactly how much the endeavor cost, but executive vice president Scott Guthrie put the figure above several hundred million dollars.


Running all of the A100s simultaneously forced Redmond to consider how it positioned them and their power supplies. It also had to develop new software to increase efficiency, ensure the networking equipment could withstand massive amounts of data, design new cable trays it could manufacture independently, and use several cooling methods. Depending on the changing climate, these included evaporative cooling, swamp coolers, and outside air.

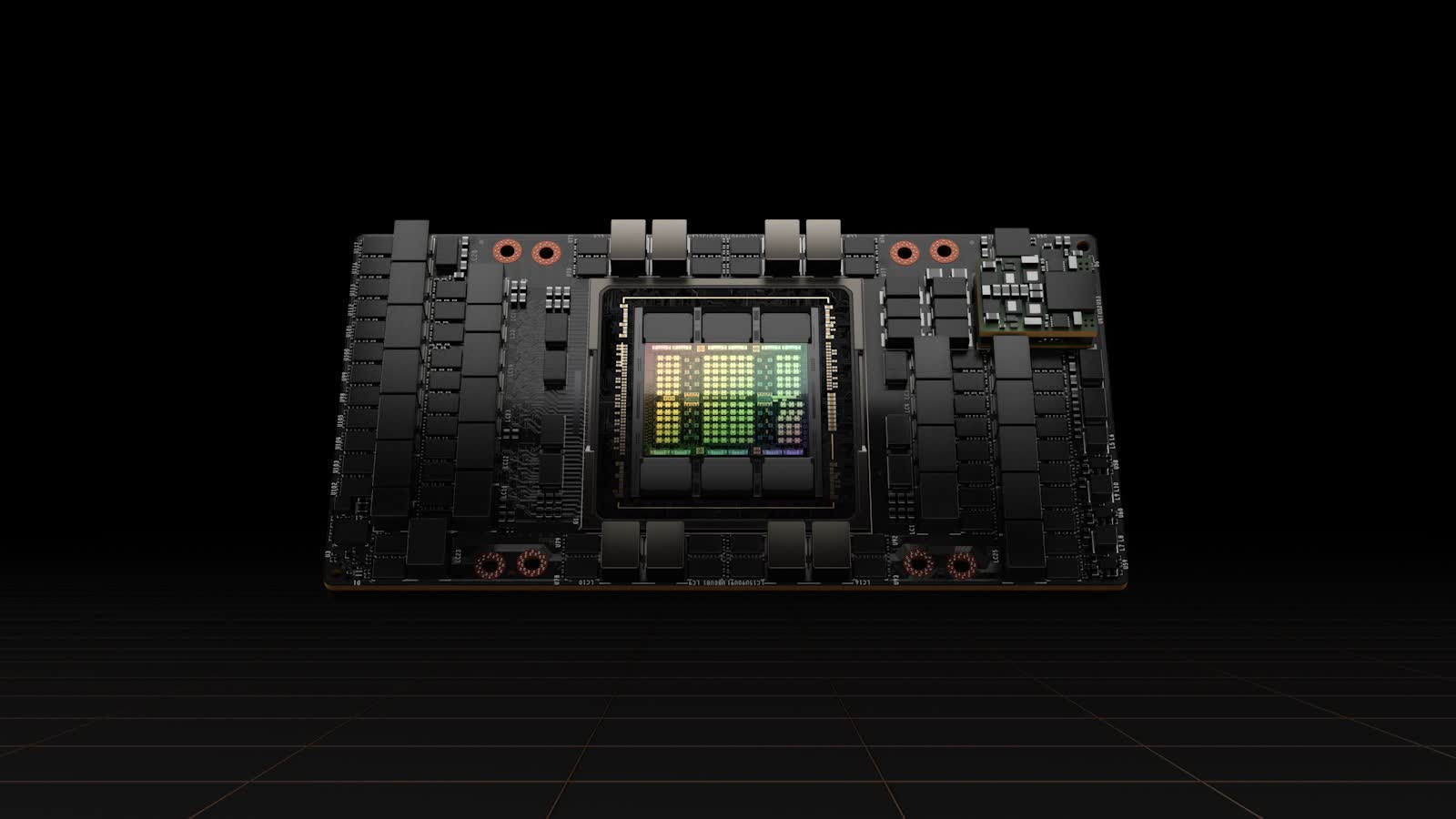

Since ChatGPT's initial success, Microsoft and some of its rivals have begun work on parallel AI models for search engines and other applications. To speed up its generative AI, the company has launched the ND H100 v5 VM, a virtual machine that can scale from eight to thousands of Nvidia H100 GPUs.

The H100s connect through NVSwitch and NVLink 4.0, providing 3.6TB/s of bisection bandwidth among the eight local GPUs within each virtual machine. Each GPU has 400Gb/s of bandwidth through Nvidia Quantum-2 CX7 InfiniBand and a 64GB/s PCIe 5.0 connection to the host. Each virtual machine delivers 3.2Tb/s across a non-blocking fat-tree network. Microsoft's new system also features 4th-generation Intel Xeon processors and 16 channels of 4800MHz DDR5 RAM.
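The quoted figures mix terabytes (TB/s, for NVLink) and terabits (Tb/s, for InfiniBand), so a little arithmetic helps keep them straight. The Python sketch below, which is our own back-of-the-envelope illustration rather than anything Microsoft published, shows that the 3.2Tb/s per-VM figure is consistent with eight GPUs at 400Gb/s each, converts it to gigabytes per second, and counts how many eight-GPU VMs a given GPU total would take. The helper names are hypothetical, and the VM count assumes capacity is allocated in whole eight-GPU VMs.

```python
import math

# Figures taken from the article; function names are hypothetical helpers,
# not part of any Azure or Nvidia API.
GPUS_PER_VM = 8                 # eight local H100s per ND H100 v5 VM
INFINIBAND_GBPS_PER_GPU = 400   # Quantum-2 CX7 InfiniBand, gigabits per second


def vm_infiniband_gbps(gpus_per_vm: int = GPUS_PER_VM,
                       gbps_per_gpu: int = INFINIBAND_GBPS_PER_GPU) -> int:
    """Aggregate InfiniBand bandwidth of one VM, in gigabits per second."""
    return gpus_per_vm * gbps_per_gpu


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits/s to gigabytes/s (8 bits per byte, decimal units)."""
    return gbps / 8


def vms_needed(total_gpus: int, gpus_per_vm: int = GPUS_PER_VM) -> int:
    """Whole VMs needed to supply at least total_gpus GPUs (assumed allocation unit)."""
    return math.ceil(total_gpus / gpus_per_vm)


if __name__ == "__main__":
    per_vm = vm_infiniband_gbps()
    print(per_vm / 1000, "Tb/s per VM")                  # 3.2 Tb/s per VM
    print(gbps_to_gigabytes_per_s(per_vm), "GB/s per VM")  # 400.0 GB/s per VM
    print(vms_needed(1000), "VMs for 1,000 GPUs")          # 125 VMs for 1,000 GPUs
```

In other words, 3.2Tb/s of InfiniBand per VM works out to roughly 400GB/s, about a ninth of the 3.6TB/s NVLink bisection bandwidth available inside the VM, which is why GPU-to-GPU traffic is fastest when it stays within one machine.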

Microsoft plans to use the ND H100 v5 VM for its new AI-powered Bing search engine, the Edge web browser, and Microsoft Dynamics 365. The virtual machine is now available in preview and will become a standard offering in the Azure portfolio. Prospective users can request access.
