Generative AI poses interesting challenges for academic publishers tackling fraud in science papers, as the technology shows the potential to fool human peer review.

Describe a picture for DALL-E, Stable Diffusion, or Midjourney, and they’ll generate one in seconds. These text-to-image systems have rapidly improved over the past few years. What began as a research prototype, producing benign and wonderfully bizarre illustrations of baby daikon radishes walking dogs in 2021, has since morphed into commercial software, built by billion-dollar companies, capable of generating increasingly realistic images.

These AI models can produce lifelike pictures of human faces, objects, and scenes, and it looks like only a matter of time before they get good at creating convincing scientific images and data too. Text-to-image models are now widely accessible and fairly cheap to use, and they could help dodgy scientists forge results and publish sham research more easily.

Image manipulation is already a top concern for academic publishers, as it has been the most common form of scientific misconduct of late. Authors can use all sorts of tricks to fake data, such as flipping, rotating, or cropping parts of the same image. Editors are fooled into believing all the results being presented are real, and publish the work.

Many publishers are now turning to AI software in an attempt to detect signs of image duplication during the review process. In most cases, images have been mistakenly duplicated by scientists who have muddled up their data, but sometimes duplication is used for blatant fraud.
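To make the idea concrete, here is a minimal sketch of how a duplicate check could account for flipped or rotated copies. It is a toy under stated assumptions, not how any publisher's screening software works: images are plain grids of pixel values, matches must be exact, and the function names (`rotate90`, `flip_horizontal`, `variants`, `is_transformed_duplicate`) are made up for illustration. It does not handle cropping, rescaling, or compression noise, which real tools would need to tolerate.

```python
def rotate90(img):
    """Rotate a 2D grid of pixel values 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img):
    """Mirror a 2D grid of pixel values left to right."""
    return [list(reversed(row)) for row in img]


def variants(img):
    """Yield the eight flip/rotation variants of an image."""
    current = [list(row) for row in img]
    for _ in range(4):
        yield current
        yield flip_horizontal(current)
        current = rotate90(current)


def is_transformed_duplicate(a, b):
    """Return True if b is an exact flipped and/or rotated copy of a."""
    target = [list(row) for row in b]
    return any(v == target for v in variants(a))


# Hypothetical example: a tiny "image" and a flipped-then-rotated copy of it.
original = [[1, 2, 3],
            [4, 5, 6]]
disguised = rotate90(flip_horizontal(original))
unrelated = [[1, 2, 3],
             [4, 5, 7]]

print(is_transformed_duplicate(original, disguised))
print(is_transformed_duplicate(original, unrelated))
```

The sketch also shows the limit of this kind of check: it can only flag an image that is a copy of another, which is why wholly generated images are harder to catch.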

But just as publishers begin to get a grip on image duplication, another threat is emerging. Some researchers may be tempted to use generative AI models to create fake data. In fact, there is evidence to suggest that sham scientists are doing this already.

AI-made images spotted in papers?

In 2019, DARPA launched its Semantic Forensics (SemaFor) program, which funds researchers developing forensic tools capable of detecting AI-made media, to combat disinformation.

A spokesperson for Uncle Sam’s defense research agency confirmed it has spotted fake medical images, published in real science papers, that appear to have been generated using AI. Before text-to-image models, generative adversarial networks were popular. DARPA realized these models, best known for their ability to create deepfakes, could also forge images of medical scans, cells, or other types of imagery often found in biomedical studies.

“The threat landscape is moving quite rapidly,” William Corvey, SemaFor’s program manager, told The Register. “The technology is becoming ubiquitous for benign purposes.” Corvey said the agency has had some success developing software capable of detecting GAN-made images, and the tools are still under development.

“We have results that suggest you can detect ‘siblings or distant cousins’ of the generative mechanism you have learned to detect previously, irrespective of the content of the generated images. SemaFor analytics look at a variety of attributions and details associated with manipulated media, everything from metadata, statistical anomalies, to more visual representations,” he said.

Some image analysts scrutinizing data in scientific papers have also come across what appear to be GAN-generated images. A GAN is a generative adversarial network, a type of machine-learning system that can generate writing, music, pictures, and more.

For instance, Jennifer Byrne, a professor of molecular oncology at the University of Sydney, and Jana Christopher, an image integrity analyst for journal publisher EMBO Press, came across an odd set of images that appeared in 17 biochemistry-related studies.

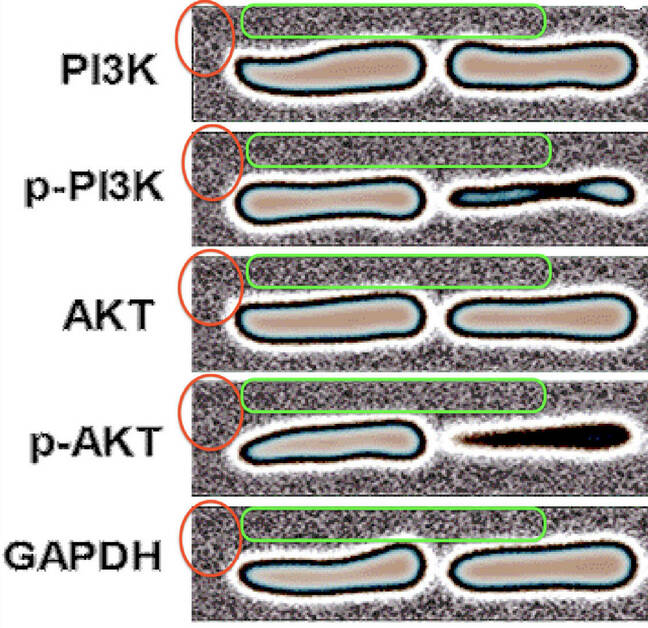

The pictures depicted a series of bands commonly known as western blots, which indicate the presence of specific proteins in a sample. Curiously, they all seemed to have the same background. That’s not supposed to happen.

Examples of repeating backgrounds in western blot images, highlighted by the red and green outlines … Source: Byrne, Christopher 2020

In 2020, Byrne and Christopher came to the conclusion that the suspicious-looking images were probably produced as part of a paper mill operation: an effort to mass produce papers on biochemical studies using faked data, and get them peer reviewed and published. Such a caper might be pulled off to, for example, benefit academics who are compensated based on their accepted paper output, or to help a department hit a quota of published reports.

“The blots in the example shown in our paper are most likely computer-generated,” Christopher told The Register.

“Screening papers both pre- and post-publication, I often come across fake-looking images, predominantly western blots, but increasingly also microscopy images. I am very aware that many of these are most likely generated using GANs.”

Elisabeth Bik, a freelance image sleuth, can often tell when images have been manipulated, too. She pores over scientific paper manuscripts, hunting for duplicated images, and flags these issues for journal editors to examine further. But it’s harder to combat fake images when they have been entirely generated by an algorithm.

She pointed out that although the repeated background in the images highlighted in Byrne and Christopher’s study is a telltale sign of forgery, the actual western blots themselves are unique. The computer vision software Bik uses to scan papers and spot image fraud would find it hard to flag these bands because there are no duplications of the actual blots.

“We’ll never find an overlap. They’re all, I believe, artificially made. How exactly, I’m not sure,” she told The Register.

It’s easier to generate fake images with the latest generative AI models

GANs have largely been displaced by diffusion models. These systems generate unique pictures and power today’s text-to-image software, including DALL-E, Stable Diffusion, and Midjourney. They learn to map the visual representation of objects and concepts to natural language, and could significantly lower the barrier to academic cheating.

Scientists can simply describe what sort of false data they want generated, and these tools will do it for them. At the moment, however, they can’t quite create realistic-looking scientific images. Sometimes the tools produce clusters of cells that look convincing at first glance, but they fail miserably when it comes to western blots.

This is the sort of thing these AI programs can generate:

Here’s what @OpenAI’s DALL-E does with biological cell prompts

Specifically: “cells under a microscope” and “T-cells under a scanning electron microscope” pic.twitter.com/BgcZr3k5Q5

— Tara Basu Trivedi (@tbt94) August 23, 2022

William Gibson, a physician-scientist and medical oncology fellow (not the famous author), has further examples, including how today’s models struggle with the concept of a western blot.

The technology is only getting better, however, as developers train larger models on more data.

David Bimler, another expert at spotting image manipulation in science papers, better known as Smut Clyde, told us: “Papermillers will illustrate their products using whatever method is cheapest and fastest, relying on weaknesses in the peer-review process.”

“They could simply copy [western blots] from older papers but even that involves work to search through old papers. At the moment, I think, using a GAN is still some effort. Though that will change,” he added.

DARPA is now looking to expand its SemaFor program to study text-to-image systems. “These kinds of models are fairly new and while in scope, are not part of our current work on SemaFor,” Corvey said.

“However, SemaFor evaluators are likely to look at these models during the next evaluation phase of the program beginning Fall 2023.”

Meanwhile, the quality of scientific research will erode if academic publishers can’t find ways to detect fake AI-generated images in papers. In the best-case scenario, this form of academic fraud will be limited to paper mill schemes that don’t receive much attention anyway. In the worst-case scenario, it will affect even the most reputable journals, and scientists with good intentions will waste time and money chasing false ideas they believe to be true.