Researchers have developed a technique aimed at protecting artists from AI models that replicate their styles after being trained to generate images from their artwork.

Commercial text-to-image tools that automatically produce images from a text description, such as DALL-E, Stable Diffusion, and Midjourney, have ignited a fierce copyright debate. Some artists have been dismayed to discover how shockingly easy it is for anyone to create new digital artworks mimicking their style.

Many have spent years perfecting their craft only to see other people generate images inspired by their work in seconds using these tools. Companies building text-to-image models often scrape the data used to train these systems from the internet without explicit permission.

Artists are currently embroiled in a proposed class-action lawsuit against AI startups Stability AI and Midjourney, and online art platform DeviantArt, claiming the companies infringed copyright law by unlawfully stealing and ripping off their work.

Artists could protect their intellectual property from image generation tools in the future using new software developed by computer science researchers at the University of Chicago. The programme, dubbed Glaze, prevents text-to-image models from learning and mimicking the artistic styles in images.

First, the software inspects an image and figures out which visual details define its style. Traditional oil paintings, for example, will contain fine brushstrokes, while cartoon drawings will have more exaggerated shapes and colour palettes. Next, these features are altered by applying an invisible “cloak” over the image.

“We needn’t change all the information in the picture to protect artists, we only need to change the style features,” Shawn Shan, a graduate student and co-author of the study, said in a press release. “So we had to devise a way where you basically separate out the stylistic features from the image from the object, and only try to disrupt the style feature using the cloak.”

The cloak is, in fact, a style transfer algorithm that applies another image’s likeness onto the features extracted by the programme. Glaze basically remixes the original look of an image with another style so that an AI model trained on the image fails to capture its essence effectively.
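The general idea of nudging an image's style features toward another style while keeping the visible change small can be illustrated with a toy sketch. This is not Glaze's actual implementation: the linear feature extractor, the `cloak` function, and the `budget`, `steps`, and `lr` parameters are all hypothetical stand-ins chosen for illustration, and a real system would use a learned neural feature extractor and a perceptual measure of visible change rather than a simple per-pixel limit.

```python
import random

def features(weights, image):
    """Toy linear 'style feature' extractor (an assumption, not Glaze's model)."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]

def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cloak(image, target_image, weights, budget=0.05, steps=200, lr=0.01):
    """Perturb `image` so its features move toward those of `target_image`,
    changing each pixel by at most `budget` (projected gradient descent)."""
    target_feats = features(weights, target_image)
    delta = [0.0] * len(image)
    for _ in range(steps):
        cloaked = [p + d for p, d in zip(image, delta)]
        residual = [f - t for f, t in zip(features(weights, cloaked), target_feats)]
        # Gradient of ||W(x + delta) - t||^2 with respect to delta is 2 * W^T * residual.
        grad = [2 * sum(weights[k][i] * residual[k] for k in range(len(weights)))
                for i in range(len(image))]
        # Take a gradient step, then clip each entry back into [-budget, budget].
        delta = [max(-budget, min(budget, d - lr * g)) for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(image, delta)]

if __name__ == "__main__":
    random.seed(0)
    n_pixels, n_features = 16, 4
    W = [[random.uniform(-0.5, 0.5) for _ in range(n_pixels)] for _ in range(n_features)]
    original = [random.random() for _ in range(n_pixels)]      # stands in for the artwork
    style_target = [random.random() for _ in range(n_pixels)]  # stands in for the other style
    cloaked = cloak(original, style_target, W)
    print("largest pixel change:", max(abs(c - o) for c, o in zip(cloaked, original)))
    print("feature distance before:", squared_distance(features(W, original), features(W, style_target)))
    print("feature distance after: ", squared_distance(features(W, cloaked), features(W, style_target)))
```

The per-pixel limit plays the role of the “invisible” constraint: the cloaked image stays close to the original in pixel terms, while its features, as seen by the extractor, shift toward the other style.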

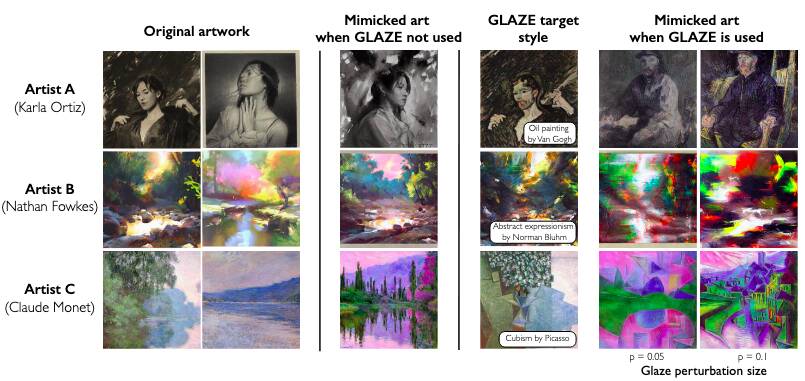

Here is an example of artwork from three artists, Karla Ortiz, Nathan Fowkes, and Claude Monet, that has been cloaked with different styles from Van Gogh, Norman Bluhm, and Picasso.

The columns on the right show how much an image can change using the Glaze programme, with the left column altered less than the right column.

“We’re letting the model teach us which portions of an image pertain the most to style, and then we’re using that information to come back to attack the model and mislead it into recognizing a different style from what the art actually uses,” Ben Zhao, co-author of the research and a computer science professor, said.

The changes made by Glaze do not affect the appearance of the original image much, but are interpreted differently by computers. The researchers are planning to release the software for free so artists can download it and cloak their own images before they upload them to the internet, where they could be scraped by developers training text-to-image models.

They warned, however, that their programme does not solve AI copyright issues. “Unfortunately, Glaze is not a permanent solution against AI mimicry,” they said. “AI evolves quickly, and systems like Glaze face an inherent challenge of being future-proof. Techniques we use to cloak artworks today might be overcome by a future countermeasure, possibly rendering previously protected art vulnerable.”

“It is important to note that Glaze is not a panacea, but a vital first step towards artist-centric protection tools to resist AI mimicry. We hope that Glaze and followup projects will provide some protection to artists while long-term (legal, regulatory) efforts take hold,” they concluded. ®
