Deepfake videos online featuring AI-generated news anchors spouting pro-Chinese government propaganda are likely the creations of a prolific disinformation crew dubbed Spamouflage.

These videos represent “the first time Graphika has observed state-aligned IO [influence operation] actors using video footage of AI-generated fictitious people in their operations,” the social media analytics firm’s researchers reported, detailing the Spamouflage deepfakes in a report [PDF] this week.

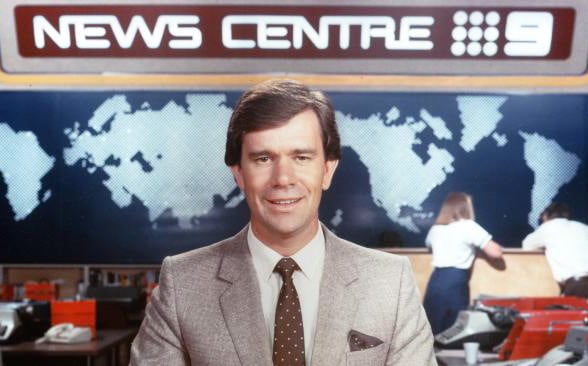

The videos show a male and a female broadcaster, both purporting to be reporting for a media company called Wolf News. In one, the news anchor condemns America for its failures to stop gun violence. The other stresses the need for China-US cooperation on global economic recovery efforts.

“At first glance, the Wolf News anchors present as real people,” Graphika said. “Our initial hypothesis was that they were paid actors that had been recruited to appear in the videos.”

This would align with Spamouflage’s usual modus operandi, according to Graphika and other researchers, including Google, who track the miscreants as Dragonbridge and have documented their use of real people on camera in their earlier disinformation campaigns.

Some of the crew’s other influence operations have included attempts to meddle in the 2022 American midterm elections and trolling rare-earth mining companies by using thousands of phony social media accounts, prompting a stern finger-wagging by the Pentagon.

“But further investigation revealed the Wolf News presenters were almost certainly created using technology provided by a British AI video company called Synthesia,” Graphika researchers claimed.

Reverse image searches unearthed a ton of unrelated videos showing the same man and woman “news anchors” who appear in the Spamouflage Wolf News broadcast. The AI-generated people speak in multiple languages in these vids, including Arabic, Romanian, Spanish and English, like this one promoting freight broker services.

In it, the male anchor says, “Hello, my name is Mr Cruise. And I’m an avatar.”

Cruise is one of Synthesia’s “100+” avatars, and it turns out his name is Jason. The female anchor from the Wolf News videos is Anna.

Synthesia’s ethics page says it “will not offer [its] software for public use. All content will go through an explicit internal screening process before being released to our trusted clients.”

Additionally, its avatar FAQ page says there are limits as to what the avatars can and can’t say: “Political, sexual, personal, criminal and discriminatory content is not tolerated or approved.”

Spamouflage’s fake news stories would violate both policies. Synthesia could not be reached for comment. Victor Riparbelli, Synthesia’s co-founder and chief executive, told the New York Times earlier this week that Spamouflage broke Synthesia’s terms of service by using the tech to make its videos.

That is to say, Spamouflage somehow got hold of and used Synthesia’s technology to produce its content, contrary to Synthesia’s rules. There is no suggestion of wrongdoing by Synthesia.

While government agencies and security researchers alike have sounded the alarm about deepfakes being used in political influence operations in the not-too-distant future, until now AI-generated media has been largely limited to fake faces, not entirely fake people, according to Graphika.

However, despite the use of avatars, the rest of the Spamouflage videos look like “low quality political spam,” the researchers wrote, echoing Google’s assessment of the crew’s YouTube videos, 83 percent of which had fewer than 100 views.

Additionally, the deepfake element of the newscasts “was almost certainly created using a commercial service” as opposed to in-house capability, the report concluded. This suggests Spamouflage and others don’t have the technical expertise to pull off highly sophisticated deepfakes and will continue to use available commercial tools.

However, as Graphika notes, “this also raises questions about how to effectively moderate the use of these services.” ®
