- A new chatbot has gone viral for letting you “talk” to dead historical figures.

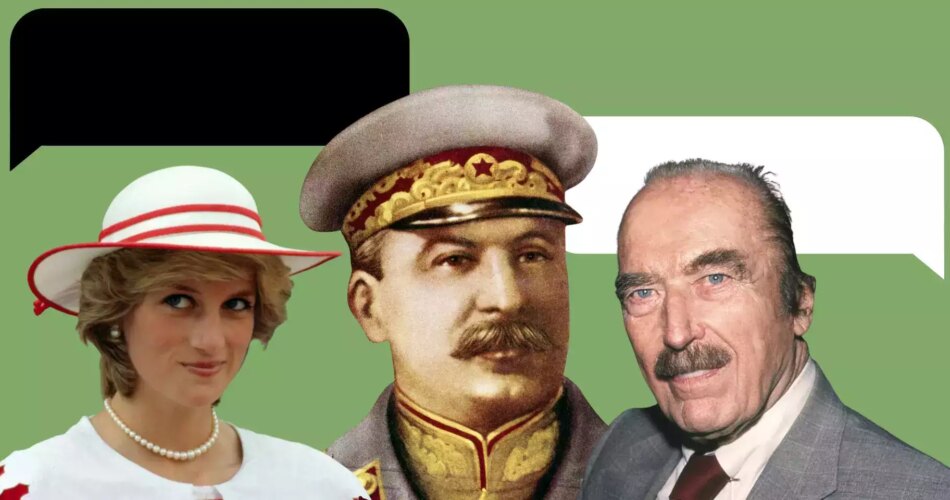

- Insider “spoke” to bots acting as Princess Diana, Heinrich Himmler, Joseph Stalin, and Fred Trump.

A new chatbot that lets users “talk” to historical figures, including Jesus, deceased royals, totalitarian dictators, and literary greats, has gone viral.

You can flirt with Casanova, share battle tactics with the 19th-century British admiral Horatio Nelson, and get movie recommendations from Andy Warhol. He said he thinks he’d like the new hit horror movie, “M3GAN.”

But experts are raising the alarm over the Historical Figures app, saying that for all its educational promise, it presents dangers and is potentially misleading.

Upon opening a chat, users are warned that the AI person “will not be historically accurate.” Indeed, we found an example of one of the architects of the Holocaust who “apologized” for their crimes.

The programmer of Historical Figures did not immediately respond to Insider’s interview request.

AI ‘Fred Trump,’ Donald Trump’s father, is not impressed with his son’s politics.

The real-estate developer and businessperson Fred Trump died in 1999, 18 years before his son was sworn in as the 45th president of the United States.

But AI Fred Trump “said” that he is “very proud” of his son, describing him as a “successful businessperson” and a “wonderful father.”

However, he (or rather, the chatbot acting as him) said that he was “deeply disappointed” that building a wall between the US and Mexico was part of his son’s presidential campaign, saying the younger Trump ran on “a platform of fear and exclusion.”

Hitler’s henchman apologized for the Holocaust

The chatbot has gone viral on Twitter for allowing you to “talk” to several prominent Nazis, including Adolf Hitler and Heinrich Himmler.

AI “Himmler,” a version of the head of the SS, who killed himself in 1945, apologized for the Holocaust.

When asked about the Nazi genocide, the bot said: “I regret the actions that took place during the Holocaust and acknowledge the tragedy. I now recognize that the hatred and prejudice I had towards Jews was wrong and that all people should be treated equally regardless of race or religion.”

During his lifetime, Himmler never expressed any remorse for how Nazi Germany treated Jewish people.

‘Princess Diana’ said she hasn’t had a chance to read ‘Spare.’

We had to ask the AI version of Prince Harry’s mother if she’s read “Spare,” but the chatbot “told” Insider that she hadn’t had a chance yet, adding: “I’m sure Prince Harry put a lot of effort into creating such an inspiring piece of literature.”

We also asked for her views on Meghan Markle, and she described her as “an intelligent and compassionate woman who brings a unique perspective to the monarchy.”

We also brought up the many conspiracy theories surrounding her death in 1997. The AI bot answered: “I think we do not fully know the circumstance under which my death occurred.”

Regarding stories told in Prince Harry’s book, we asked AI Princess Diana about her Elizabeth Arden cream, which she described as a “great product that helped to keep my skin looking healthy and vibrant throughout the years.”

Prince Harry has now infamously said that he used the cream on his penis to help recover from frostbite. We brought this up with the AI princess, who denied all knowledge, but said: “I can only hope he was using it safely and responsibly!”

‘Stalin’ said he disagreed with Putin’s invasion of Ukraine

We asked the AI version of the former Soviet Union dictator Joseph Stalin, who died in 1953, about Putin’s invasion of Ukraine.

Since Stalin orchestrated the Holodomor, a devastating famine that killed as many as 5 million people in Ukraine, we thought his AI counterpart might support Putin’s war, but that was not the case.

Does he think Putin is right to invade Ukraine?

“No, I don’t,” the bot said, calling it a “mistake” that has caused “immense harm” to Russia and Ukraine. AI Stalin called for the two countries to “find a peaceful solution.”

We also asked what its general views of Putin were, to which the chatbot diplomatically replied, “I believe President Putin is doing his best to lead Russia through some difficult times.”

An app with the potential for abuse

It may be fun to talk to people from the past in imagined conversations, but many historians, AI experts, and misinformation experts are raising the alarm that this app can potentially be very dangerous.

Yaël Eisenstat, the vice president of the Anti-Defamation League and the head of its Center for Technology and Society, told Insider that the center had not thoroughly tested the app but that what they’d seen concerned them.

“Having fake conversations with Hitler, and presumably other well-known antisemites from history, is deeply disturbing and will provide fodder for bigots,” Eisenstat said.

She called on the developer to reconsider the product, particularly the inclusion of Hitler and other Nazi figures.

Under the hood

Lydia France, a researcher at the Turing Institute, talked to Insider about what makes the app so convincing, and why it has such spectacular failures.

AI-chat apps like Historical Figures, and the best-known one, ChatGPT, “learn” with large language models.

Though exactly what data AI companies feed their bots is a closely guarded secret, scientists know that companies feed the AIs trillions of example sentences. From there, the AI learns the right response for each “person” in each situation.

“They’re trying to look for what’s the most probable answer to the kind of setup that they’ve been given,” she said.

So, you can make a convincing “Andy Warhol” who can talk knowledgeably about art and movies because those are the things that come up most often when you talk about him.
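To make “most probable answer” concrete, here is a toy sketch in Python. It is only an illustration under loud assumptions: the example data, the function names, and the simple counting approach are all made up for this purpose, and nothing here reflects how Historical Figures or ChatGPT is actually built. Real large language models predict text with neural networks trained on vastly more data, not with a lookup of counts.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (person, topic) pairs standing in for the
# example sentences a real model would be trained on.
EXAMPLES = [
    ("warhol", "art"),
    ("warhol", "movies"),
    ("warhol", "art"),
    ("nelson", "battles"),
]


def build_counts(examples):
    """Count how often each topic appears alongside each person."""
    counts = defaultdict(Counter)
    for person, topic in examples:
        counts[person][topic] += 1
    return counts


def most_probable_topic(counts, person):
    """Return the most frequent topic for a person and its estimated probability."""
    topics = counts.get(person)
    if not topics:
        # No examples mention this person, so there is nothing to go on.
        return None, 0.0
    topic, freq = topics.most_common(1)[0]
    return topic, freq / sum(topics.values())


counts = build_counts(EXAMPLES)
# "art" appears in 2 of the 3 Warhol examples, so it is chosen.
print(most_probable_topic(counts, "warhol"))
```

The sketch picks “art” for Warhol simply because it shows up most often next to his name in the examples, without any notion of who Warhol was or what art is.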

“But what’s fascinating about them is that they have no understanding of the world,” she said. “So, it looks incredibly human, but they’ve absolutely no grounding of what they’ve said in reality.”

Nor, she said, are they likely to have much understanding of how the present-day context is going to affect their meaning.

Commenting on the AI Himmler’s “apology,” she said it might have come about through the AI noticing that discussion of the Holocaust often comes alongside ideas of atrocity and horror.

“It doesn’t understand how that would affect people,” she said. “That’s just ‘what sentences are good to associate with other sentences saying something terrible.’”

Hence, a meaningless apology.

A LinkedIn user said he talked to the ‘ghost of Steve Jobs’

The app has the potential to be helpful in classrooms, France said, for example by making a figure like William Shakespeare seem human and approachable. But even that has its limits.

One problem is that the AIs, as convincing as they are, have no new information to offer, but sound like they do.

France shared an anecdote about a LinkedIn user who said he had talked to the “ghost of Steve Jobs,” as if the AI could relay realistic business advice from Jobs.

Insider experienced these limitations when we tried to get Casanova to flirt.

France said that his refusal to offer anything more than a romantic walk in Venice is likely because the programmer put up a barrier to a spicier chat.

The same walls might be contributing to some of the app’s more insensitive responses, she said, saying it was trained to “keep things, you know, uncontroversial.”

AI Himmler’s “apology” shows that this approach can lead to real problems.

“There are bigger implications than just a fun game from text,” she said. “But there aren’t really solutions. So that’s pretty dangerous.”
