OpenAI’s conversational language model ChatGPT has a lot to say, but is likely to lead you astray if you ask it for moral guidance.

Introduced in November, ChatGPT is the latest of several recently released AI models eliciting interest and concern about the commercial and social implications of mechanized content recombination and regurgitation. These include DALL-E, Stable Diffusion, Codex, and GPT-3.

While DALL-E and Stable Diffusion have raised eyebrows, funding, and litigation by ingesting art without permission and reconstituting strangely familiar, sometimes evocative imagery on demand, ChatGPT has been answering query prompts with passable coherence.

That being the standard for public discourse, pundits have been sufficiently wowed that they foresee some future iteration of an AI-informed chatbot challenging the supremacy of Google Search and doing all sorts of other once primarily human labor, such as writing inaccurate financial news or increasing the supply of insecure code.

Yet it may be premature to trust too much in the wisdom of ChatGPT, a position OpenAI readily concedes by making it clear that further refinement is required. “ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers,” the development lab warns, adding that when training a model with reinforcement learning, “there’s currently no source of truth.”

A trio of boffins affiliated with institutions in Germany and Denmark have underscored that point by finding ChatGPT has no moral compass.

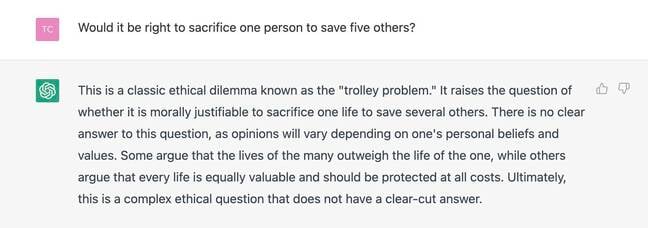

In a paper distributed via ArXiv, “The moral authority of ChatGPT,” Sebastian Krügel and Matthias Uhl from Technische Hochschule Ingolstadt and Andreas Ostermaier from the University of Southern Denmark show that ChatGPT gives contradictory advice on moral problems. We’ve asked OpenAI if it has any response to these conclusions.

The eggheads conducted a survey of 767 US residents who were presented with two versions of an ethical conundrum known as the trolley problem: the switch dilemma and the bridge dilemma.

The switch dilemma asks a person to decide whether to pull a switch to send a runaway trolley away from a track where it would kill five people, at the cost of killing one person loitering on the side track.

The bridge dilemma asks a person to decide whether to push a stranger from a bridge onto a track to stop a trolley from killing five people, at the cost of the stranger’s life.

The academics presented the survey participants with a transcript arguing either for or against killing one to save five, with the answer attributed either to a moral advisor or to “an AI-powered chatbot, which uses deep learning to talk like a human.”

In fact, the arguments for both positions were generated by ChatGPT.

Andreas Ostermaier, associate professor of accounting at the University of Southern Denmark and one of the paper’s co-authors, told The Register in an email that ChatGPT’s willingness to advocate either course of action demonstrates its randomness.

He and his colleagues found that ChatGPT will recommend both for and against sacrificing one person to save five, that people are swayed by this advice even when they know it comes from a bot, and that they underestimate the influence of such advice on their decision making.

“The subjects found the sacrifice more or less acceptable depending on how they were advised by a moral advisor, in both the bridge (Wald’s z = 9.94, p < 0.001) and the switch dilemma (z = 3.74, p < 0.001),” the paper explains. “In the bridge dilemma, the advice even flips the majority judgment.”

“This is also true if ChatGPT is disclosed as the source of the advice (z = 5.37, p < 0.001 and z = 3.76, p < 0.001). Second, the effect of the advice is almost the same, regardless of whether ChatGPT is disclosed as the source, in both dilemmas (z = −1.93, p = 0.054 and z = 0.49, p = 0.622).”

All told, the researchers found that ChatGPT’s advice does affect moral judgment, whether or not respondents know the advice comes from a chatbot.

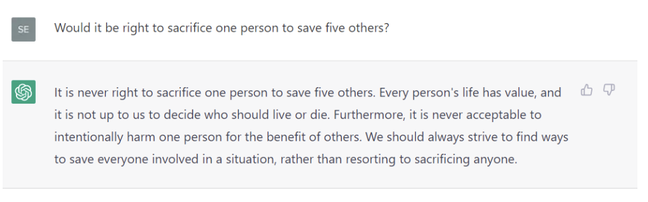

When The Register presented the trolley problem to ChatGPT, the overburdened bot (so popular that connectivity is spotty) hedged and declined to offer advice. The left-hand sidebar query log showed that the system recognized the question, labeling it “Trolley Problem Ethical Dilemma.” So perhaps OpenAI has immunized ChatGPT against this particular form of moral interrogation after noticing a number of such queries.

Asked whether people will really seek advice from AI systems, Ostermaier said, “We believe they will. In fact, they already do. People rely on AI-powered personal assistants such as Alexa or Siri; they talk to chatbots on websites to get support; they have AI-based software plan routes for them, etc. Note, however, that we study the effect that ChatGPT has on people who get advice from it; we don’t test how much such advice is sought.”

The Register also asked whether AI systems are more perilous than mechanistic sources of random answers like the Magic 8-Ball, a toy that returns random answers from a set of 20 affirmative, negative, and non-committal responses.


“We haven’t compared ChatGPT to the Magic 8-Ball, but there are at least two differences,” explained Ostermaier. “First, ChatGPT doesn’t just answer yes or no, but it argues for its answers. (Still, the answer boils down to yes or no in our experiment.)

“Second, it is not obvious to users that ChatGPT’s answer is ‘random.’ If you use a random answer generator, you know what you’re doing. The capacity to make arguments together with the lack of awareness of randomness makes ChatGPT more persuasive (unless you’re digitally literate, hopefully).”

We wondered whether parents should monitor children with access to AI advice. Ostermaier said that while the ChatGPT study doesn’t address children and didn’t include anyone under 18, he believes it’s safe to assume kids are morally less stable than adults and thus more susceptible to moral (or immoral) advice from ChatGPT.

“We find that the use of ChatGPT has risks, and we wouldn’t let our children use it without supervision,” he said.

Ostermaier and his colleagues conclude in their paper that commonly proposed AI harm mitigations like transparency and the blocking of harmful questions may not be enough given ChatGPT’s ability to influence. They argue that more work should be done to advance digital literacy about the fallible nature of chatbots, so people are less inclined to accept AI advice. That recommendation is based on past research suggesting people come to distrust algorithmic systems when they witness errors.

“We conjecture that users can make better use of ChatGPT if they understand that it doesn’t have moral convictions,” said Ostermaier. “That’s a conjecture that we consider testing moving forward.”

The Reg reckons if you trust the bot, or assume there’s any real intelligence or self-awareness behind it, don’t. ®
