AI chips serve two functions. AI builders first take a large (or really large) set of data and run sophisticated software to look for patterns in that data. Those patterns are expressed as a model, and so we have chips that “train” the system to generate a model.

Then this model is used to make a prediction from a new piece of data, and the model infers some likely outcome from that data. Here, inference chips run the new data against the model that has already been trained. These two purposes are very different.
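To make the distinction concrete, here is a toy Python sketch that is not drawn from any real AI framework. The hypothetical `train` function runs many passes of gradient descent over a tiny data set to fit a one-variable linear model, while the hypothetical `infer` function applies the finished model to a new input in a single step. The function names, learning rate, epoch count and data are all illustrative assumptions; real training workloads repeat the same kind of arithmetic over vastly larger models and data sets.

```python
# Toy illustration only: a hypothetical one-variable linear model, not a real AI framework.

def train(xs, ys, lr=0.05, epochs=2000):
    """Training: repeatedly adjust parameters w, b to minimize mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):  # many passes over the data: the expensive part
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b  # the trained "model" is just these two numbers


def infer(model, x):
    """Inference: apply the already-trained model to a new data point, once."""
    w, b = model
    return w * x + b


# Training data generated from y = 2x + 1
model = train([1, 2, 3, 4], [3, 5, 7, 9])
print(round(infer(model, 10), 3))  # → 21.0
```

The asymmetry is the point: training loops over the data thousands of times to settle on the parameters, while inference is one cheap evaluation of a model that is already fixed.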

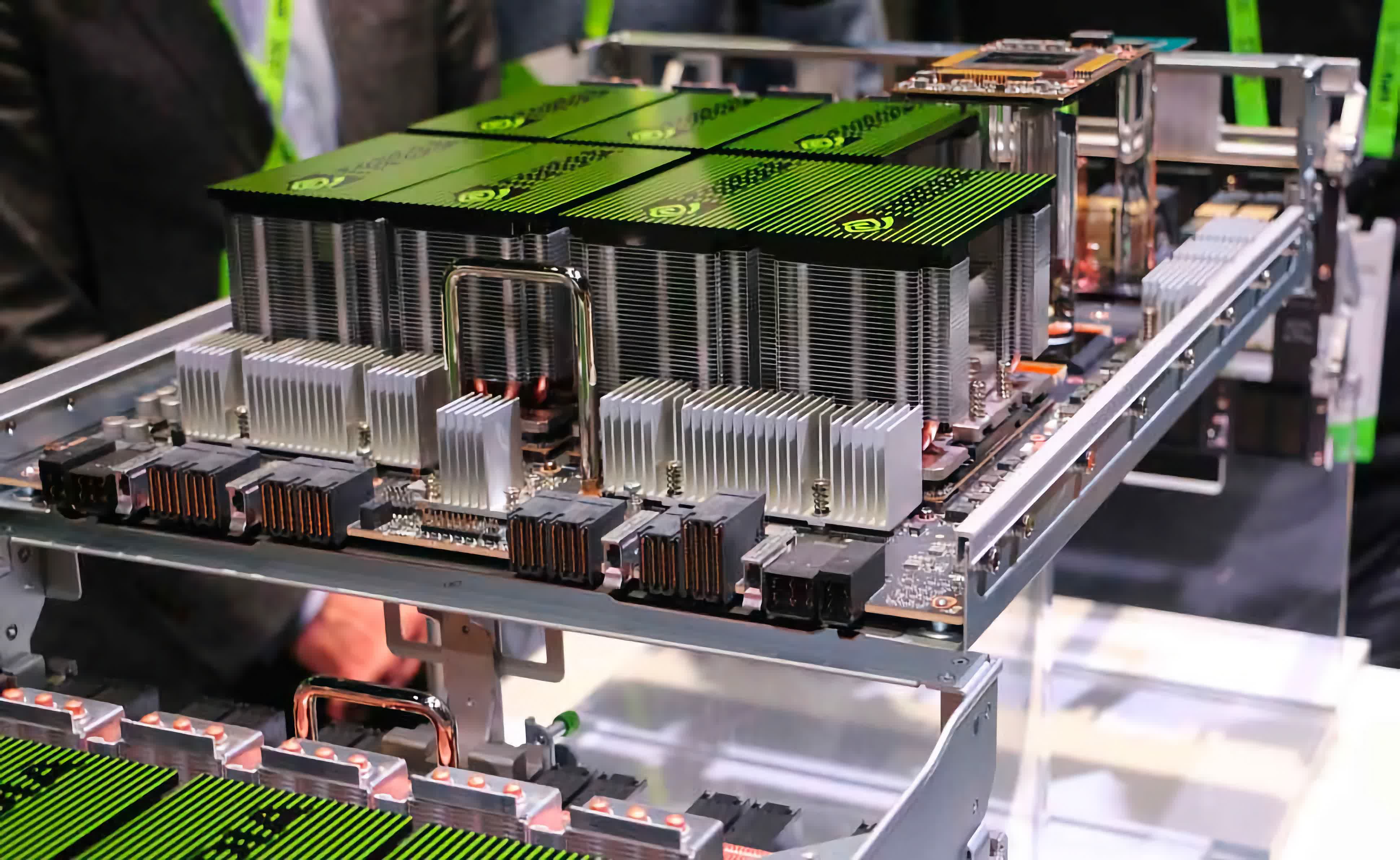

Training chips are designed to run full tilt, sometimes for weeks at a time, until the model is completed. Training chips thus tend to be large, “heavy iron.”

Inference chips are more diverse: some are used in data centers, others at the “edge” in devices like smartphones and video cameras, and they are designed to optimize for different things, such as power efficiency at the edge. And, of course, there are all sorts of in-between variants. The point is that there are big differences between “AI chips.”

For chip designers, these are very different products, but as with all things semiconductors, what matters most is the software that runs on them. Seen in this light, the situation is much simpler, but also dizzyingly complicated.

Simple because inference chips generally just need to run the models that come out of the training chips (yes, we're oversimplifying). Complicated because the software that runs on training chips is hugely varied. And this is crucial. There are hundreds, probably thousands, of frameworks now used for training models. There are some incredibly good open-source libraries, but many of the big AI companies/hyperscalers also build their own.

Because the field of training software frameworks is so fragmented, it's effectively impossible to build a chip that's optimized for all of them. As we have pointed out in the past, small changes in software can effectively neuter the gains provided by special-purpose chips. Moreover, the people running the training software want that software to be highly optimized for the silicon on which it runs. The programmers running this software probably don't want to muck around with the intricacies of every chip; their life is hard enough building these training systems. They don't want to have to learn low-level code for one chip only to have to re-learn the hacks and shortcuts for a new one later. Even if that new chip offers “20%” better performance, the hassle of re-optimizing the code and learning the new chip renders that advantage moot.

Which brings us to CUDA, Nvidia's low-level chip programming framework. By this point, any software engineer working on training systems probably knows a fair bit about using CUDA. CUDA is not perfect, or elegant, or especially easy, but it is familiar. On such whims are vast fortunes built. Because the software environment for training is already so diverse and changing so rapidly, the default solution for training chips is Nvidia GPUs.

The market for all these AI chips is a few billion dollars right now and is forecast to grow 30% or 40% a year for the foreseeable future. One study from McKinsey (maybe not the most authoritative source here) puts the data center AI chip market at $13 billion to $15 billion by 2025; by comparison, the total CPU market is about $75 billion right now.

That $15 billion AI market breaks down to roughly two-thirds inference and one-third training. So this is a sizable market. One wrinkle in all this is that training chips are priced in the $1,000s or even $10,000s, while inference chips are priced in the $100s and up, which means training chips make up only a small share of the total, roughly 10%-20% of units.
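The link between the revenue split, the price gap and the unit share can be made explicit with a back-of-the-envelope sketch. It assumes a single average price for each chip class (a simplification, since real prices span wide ranges) and uses the one-third training revenue share cited above. If training chips average r times the price of inference chips, training's share of units is (1/3)/r divided by ((1/3)/r + 2/3), which simplifies to 1/(1 + 2r). The function name `training_unit_share` and the price ratios tried are illustrative assumptions.

```python
def training_unit_share(training_revenue_share: float, price_ratio: float) -> float:
    """Share of chips (by units) that are training chips.

    Simplifying assumption: one average price per chip class.
    price_ratio = (average training-chip price) / (average inference-chip price).
    """
    inference_revenue_share = 1.0 - training_revenue_share
    # Units = revenue / price, with the inference-chip price normalized to 1.
    training_units = training_revenue_share / price_ratio
    inference_units = inference_revenue_share
    return training_units / (training_units + inference_units)


# One-third of revenue from training, per the market split above.
for ratio in (2, 4.5, 10):
    print(f"{ratio}x price gap -> {training_unit_share(1 / 3, ratio):.1%} of units")
# 2x price gap -> 20.0% of units
# 4.5x price gap -> 10.0% of units
# 10x price gap -> 4.8% of units
```

Under these assumptions, a 10%-20% unit share corresponds to training chips averaging roughly 2x to 4.5x the price of inference chips; a full 10x gap would push training below 5% of units. Either way, training chips are a small slice of total volume.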

Over the long run, this is going to matter for how the market takes shape. Nvidia is going to have a lot of training margin, which it can bring to bear in competing for the inference market, similar to how Intel once used PC CPUs to fill its fabs and data center CPUs to generate much of its income.

To be clear, Nvidia is not the only player in this market. AMD also makes GPUs, but never developed an effective (or at least widely adopted) alternative to CUDA. It has a fairly small share of the AI GPU market, and we don't see that changing any time soon.


A number of startups have tried to build training chips, but they mostly got impaled on the software problem described above. And for what it's worth, AWS has also deployed its own internally designed training chip, cleverly named Trainium. From what we can tell, this has met with modest success; AWS doesn't have any clear advantage here other than its own internal (massive) workloads. Still, we understand it is moving forward with the next generation of Trainium, so it must be happy with the results so far.

Some of the other hyperscalers may be building their own training chips as well, notably Google, which has new variants of its TPU coming soon that are specifically tuned for training. And that is the market. Put simply, we think most people in the market for training compute will look to build their models on Nvidia GPUs.
