In recent months, Google has been slowly acclimating the public to a new way of thinking about search that is likely to be a hallmark of our future interactions with the platform.

Since its inception, searching the internet has been a text-based activity, built on finding the best match between the searcher’s intent and a set of results displayed as text links and content snippets.

But in this emerging phase, search is becoming increasingly multi-modal: able, in other words, to handle input and output in various formats, including text, images, and sound. At its best, multi-modal search is more intuitive and convenient than traditional methods.

At least some of the impetus for Google’s move toward multi-modal search comes from the rise of social media platforms like Instagram, Snapchat, and TikTok, all of which have shifted user expectations toward highly visual and immediate interaction with content. As a veteran internet company, Google has moved to keep pace with these changing expectations.

The Emergence of Multisearch

The company has invested immense development resources in Google Lens, Vision AI, and other components of its sophisticated image recognition technology, which together represent the next evolution of tools like Google Images.

Google Lens is fairly well established as a search tool that lets you quickly translate road signs and menus, research products, identify plants, or look up recipes simply by pointing your phone’s camera at the object you want to search for.

This year, Google introduced the concept of “multisearch,” which allows users to add text qualifiers to image searches in Lens. You can now take a photo of a blue dress and ask Google to look for it in green, or add “near me” to see local restaurants that offer dishes matching an image.

The Image Icon Joins the Voice Icon

In a further nudge toward image-based search, Google also recently added an image icon to the main search box at google.com.

The image icon takes its place alongside the microphone, Google’s prompt to search by voice. In the early days of Amazon Alexa and its ilk, voice search was supposed to take over the internet. That didn’t quite happen, but voice search has since grown to occupy a useful niche in our arsenal of methods for interacting with devices, convenient when talking is faster or safer than typing. So too, hearing Google Assistant or Alexa read search results out loud will sometimes be preferable to reading text on a screen.

This brings us to the vision of a multi-modal search interface: users should be able to search by, with, and for any medium that is the most useful and convenient for the given circumstance.

A voice prompt to “show me pictures of unicorns” might work best for a child still learning to read; an image-based input potentially conveys more information than any short text phrase regarding the color, texture, and detailed features of a retail product. It’s safe to assume that any combination of text, voice, and image will soon be supported for both inputs and outputs.

Marketing in the World of Multi-modal Search

What does all of this mean for marketers? Those who aim to increase the online exposure of businesses and their offerings will do well to focus on two priorities.

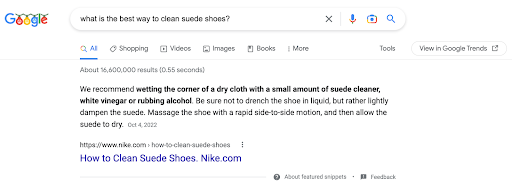

The first is to provide content for consumption in search that is not just promotional but also useful. With consumers being trained to ask questions of all kinds and receive responses that help them stay informed and make better decisions, marketers need to compete to provide answers and advice, in addition to promoting the availability of their products or services. For example, Google uses Featured Snippets, the answers showcased at the top of search results, as content for Google Assistant to read aloud when users ask questions. This offers a great opportunity to increase brand exposure and to be recognized as an authoritative industry voice.

Image Optimization Is Key

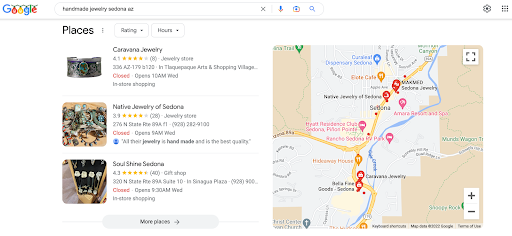

The other major priority for marketers in the age of multi-modal search is image optimization. Google’s Vision AI technology provides the company with an automated means of understanding the content of pictures. Image recognition is an important facet of Google’s Knowledge Graph, which creates linkages between entities as a way of understanding internet content. With this technology, the company is transforming search results for local and product searches into immersive, image-first experiences, matching featured images to search intent.

Marketers who publish engaging photo content in strategic places stand to win out in Google’s image-rich search results. In particular, e-commerce websites and store landing pages, Google Business Profiles, and product listings uploaded to Google’s Merchant Center should showcase photos that correspond to search terms a company hopes to rank for. Photos should be augmented with descriptive text, but Google can interpret and display photos that match a searcher’s query even without text descriptions.
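For teams that want to check whether the photos on a landing page actually carry descriptive text, a small audit script can help. The following is a minimal sketch, not a Google tool: it assumes the descriptive text lives in each image’s HTML `alt` attribute, and the class name `ImageAltAudit`, the helper `images_missing_alt`, and the sample page are all hypothetical names made up for illustration.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # A value-less or absent alt attribute both count as "no description".
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images that lack descriptive alt text."""
    audit = ImageAltAudit()
    audit.feed(html)
    return audit.missing_alt


# Hypothetical landing-page fragment, for illustration only.
sample_page = """
<img src="turquoise-ring.jpg" alt="Handmade turquoise ring on a sandstone slab">
<img src="silver-cuff.jpg">
<img src="storefront.jpg" alt="">
"""

print(images_missing_alt(sample_page))
# → ['silver-cuff.jpg', 'storefront.jpg']
```

A list like this only flags where text is absent; whether the text that is present matches the search terms a company hopes to rank for still needs a human read.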

A search for “handmade jewelry in Sedona, Arizona,” for example, returns Google Business Profiles in the results, each of which displays a photo pulled from the profile’s image gallery that corresponds to what the user was searching for.

Rising Up in Search

The new shopping experience in search, announced by Google this fall, can be invoked by typing “shop” at the beginning of any query for a product. The results are dominated by images from retail websites, matched precisely to the search query entered by the user.
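One common way retail pages describe a product and its photos in machine-readable form is schema.org `Product` markup embedded as JSON-LD. The sketch below shows how such a block might be generated. It is an illustration under assumptions: the article does not say this markup drives the shopping results described above, and the function name `product_json_ld`, the product details, and the example.com URL are hypothetical.

```python
import json


def product_json_ld(name, description, image_urls):
    """Build a schema.org Product object as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": list(image_urls),
    }
    return json.dumps(data, indent=2)


# Hypothetical product, for illustration only.
markup = product_json_ld(
    name="Green wrap dress",
    description="Knee-length wrap dress in emerald green cotton.",
    image_urls=["https://example.com/images/green-wrap-dress-front.jpg"],
)

# Wrap the JSON in the script tag that a product page would embed.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Keeping the name, description, and image together in one block means the photo is paired with the same descriptive text wherever the page is read by a machine.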

Food and retail are on the cutting edge of multi-modal search. In these categories, marketers already need to be actively working on image optimization and content marketing with various media use cases in mind. For other business categories, multi-modal search is coming.

Wherever it’s more convenient to use pictures in place of text or voice in place of visual display, Google will want to make these options available across all business categories. It’s best to get ready now for the multi-modal future.

About the Author

With over a decade of local search experience, Damian Rollison, SOCi’s Director of Market Insights, has focused his career on discovering innovative ways to help businesses large and small get noticed online. Damian’s columns appear frequently at Street Fight, Search Engine Land, and other publications, and he is a frequent speaker at industry conferences such as Localogy, Brand Innovators, State of Search, SMX, and more.
