AI adoption isn’t optional. It’s already happening inside your organization, whether you sanctioned it or not. While leadership teams debate AI strategies and procurement departments evaluate vendors, employees across every department are quietly integrating AI tools into their daily workflows. They’re not asking for permission, and they’re not waiting for approval.

This creates a fundamental dilemma for leaders. Employees are acting decisively because AI tools are cheap, fast, and effective. Meanwhile, organizations are lagging behind, bogged down by slow policy cycles and cautious procurement processes. By the time the official AI strategy is approved, your workforce has already moved on to the next generation of tools.

But here’s the critical reframe: shadow AI isn’t an organizational failure. Rather, it’s a wake-up call that your people are motivated, ambitious, and already innovating. They’re not being reckless; they’re being resourceful. They’re trying to succeed faster than the company can enable them.

Leaders who recognize why employees turn to these tools can redirect that energy into intentional, safe, and impactful adoption. The organizations that thrive won’t just manage shadow AI risks; they’ll channel employee motivations into a real competitive advantage.

Understanding Shadow AI: When Adoption Outpaces Strategy

Shadow AI refers to the use of unapproved, unsanctioned AI tools within an organization. It’s the natural evolution of “shadow IT,” but with lower barriers to entry and faster adoption cycles.

Why it’s happening:

- AI tools are cheap. Many are free, or cost less than a team’s coffee budget, and deliver ROI almost immediately.

- Pressure is mounting. Customers, competitors, and markets demand speed. Internal approval cycles can’t keep up.

- Policies are too slow. While companies spend months drafting governance frameworks, employees are solving problems in real time.

The result? A growing disconnect between organizational caution and individual initiative. Employees are optimizing today while leadership debates tomorrow.

The Human Story Behind Shadow AI: Understanding Motivations by Role

Employees using unsanctioned AI tools aren’t acting recklessly; they’re solving real business problems under real pressure. Sales teams are closing deals faster, marketing is scaling campaigns, and support teams are hitting tighter SLAs.

But without clear guardrails, today’s productivity can become tomorrow’s security problem. Sensitive CRM data, employee records, financial forecasts, or proprietary product plans could inadvertently end up stored in public AI systems, or even indexed on the open web.

These are some of the key factors driving shadow AI adoption across roles, along with the long-term security risks leaders need to address:

| Persona | Business Pressure | Why They Use AI | Long-Term Security Risks |
|---|---|---|---|
| Sales Teams | Hit quotas, outpace competitors | Personalize outreach, accelerate pipeline, research prospects faster | CRM data or client details stored in public models; risk of data being indexed |
| Marketing Teams | Deliver more campaigns at higher quality | Content drafting, campaign ideation, SEO, personalization | AI-generated messaging may expose product roadmaps, pricing, or strategy |
| Customer Support | Reduce ticket resolution time, hit SLA targets | Chatbots, auto-summarizers, draft reply generation | Customer PII stored in third-party AI systems that lack compliance controls |
| Product Managers / R&D | Innovate faster, reach market first | Analyze feedback, brainstorm features, prototype rapidly | Proprietary product plans or feature specs leaked into tools with no IP protections |
| Tech Leaders / IT | Balance innovation with security | Pilot tools, explore efficiencies | Tool sprawl causes inconsistent data protections and loss of oversight |
| L&D | Upskill the workforce quickly | Experiment with AI-based learning tools | Training data may include employee details sent to insecure systems |
| HR Teams | Improve engagement, streamline admin tasks | AI-powered surveys, chatbots, analytics | Employee PII or performance data handled by tools that don’t meet regulatory standards |
| Finance Teams | Drive efficiency, reduce costs, improve accuracy | Automate invoices, expenses, and forecasting | Financial records or sensitive forecasts stored insecurely or shared unknowingly |
| Legal / Compliance | Manage growing regulatory complexity | Review contracts and conduct research faster | Faulty AI summaries miss risks; data shared with non-compliant tools |
| Executives / C-Suite | Signal innovation, deliver faster | Generate investor reports, board decks, strategic communications | AI-generated materials may include sensitive corporate strategy that becomes public |
| Procurement / Ops | Optimize supply chains, manage vendor complexity | Vendor analysis, forecasting, contract workflows | Supplier pricing or contract details exposed via unsecured AI platforms |

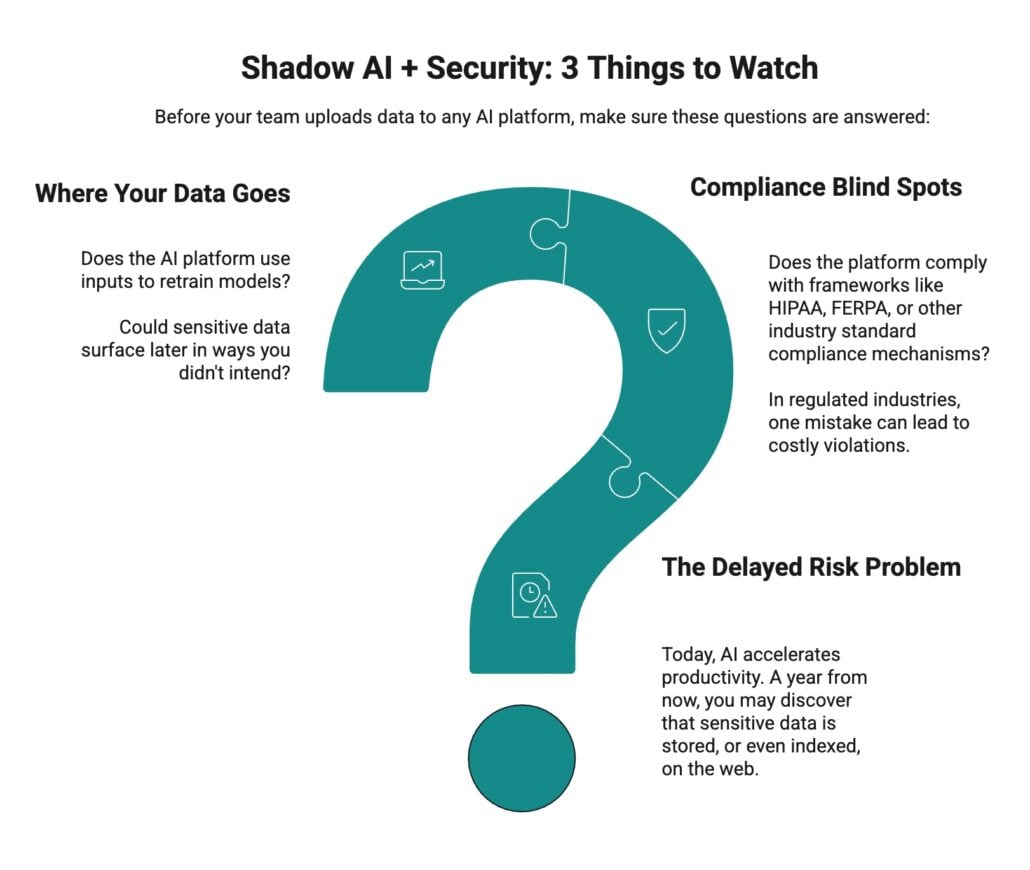

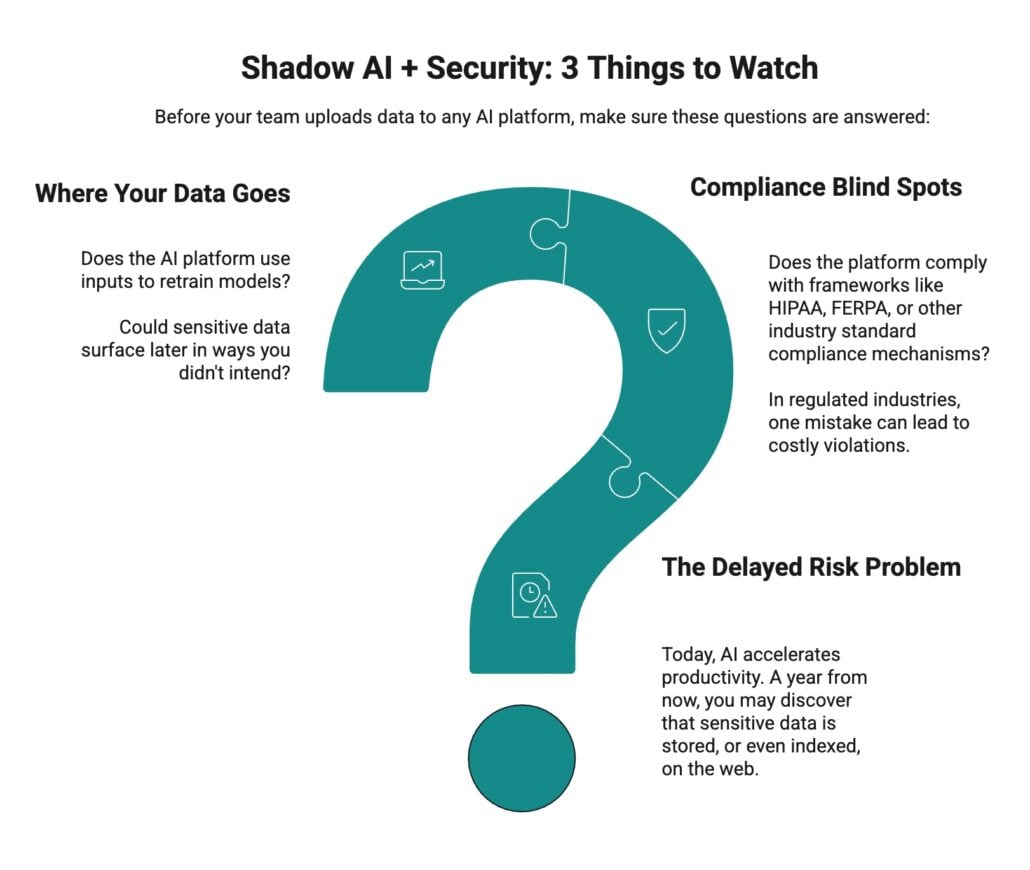

Before You Celebrate Productivity Gains, Check the Data Trail

AI tools are helping teams work smarter and faster, but speed can create hidden risks if data flows aren’t understood. Even when employees are solving real business problems, sensitive information such as CRM records, contracts, or forecasts can inadvertently end up in the wrong places.

Left unchecked, these small exposures can accumulate into significant compliance and security risks. Leaders need clarity on where data goes, how it’s handled, and which AI tools are safe for sensitive information.

Why Shadow AI Persists: The Organizational Lag

Shadow AI continues despite known risks because of organizational dynamics that widen the gap between leadership intentions and employee needs. These organizational lags include:

Slow Policy Cycles

Comprehensive AI policies take months to write. Security reviews, stakeholder approvals, and multiple revisions create delays that feel glacial compared to the pace of tool adoption.

- Employees can start using a new AI tool in minutes.

- By the time policies are finalized, the AI landscape has already shifted.

- The result: policies are outdated before they even roll out.

Tool Paralysis

Leaders often overanalyze tools, slowing progress while employees move ahead.

- Endless vendor evaluations and feature comparisons stall decision-making.

- Pricing negotiations drag on while teams need solutions today.

- Employees bypass the delays and grab tools that solve immediate problems.

- Bottom line: the perfect becomes the enemy of the useful.

Control Over Enablement

Organizations tend to default to blocking or banning tools rather than creating safe pathways for adoption.

- Employees still need AI to work faster and smarter.

- Bans push usage underground, removing opportunities for oversight and guidance.

- Without structured enablement, shadow AI becomes the path of least resistance.

Mixed Signals From the Top

Employees are hearing two conflicting messages from executive leadership:

- Message #1: “Move faster.” “Be more productive.” “Use AI to innovate.”

- Message #2: Our standards policy will be out “by the end of the year,” or “at the beginning of 2026.” That simply isn’t fast enough for employees trying to act on Message #1.

This tension drives shadow AI adoption:

- Teams hear the productivity mandate loud and clear.

- They see competitors leveraging AI to win customers, reduce costs, and innovate faster.

- But without tools or guardrails, they’re forced to figure it out themselves.

The Asimov Paradox: Why Employees Go Rogue

This dynamic mirrors Asimov’s Three Laws of Robotics: rules that made sense individually but caused paralyzing conflicts when applied together. Asimov’s robots were programmed to:

- Avoid harming humans.

- Obey human commands unless doing so caused harm.

- Protect themselves unless that conflicted with the higher laws.

When these directives clashed, the robots froze, unable to reconcile competing priorities. Employees today face the same kind of gridlock:

- Law 1: Move fast. Innovate with AI.

- Law 2: Wait for governance standards (coming “soon”).

- Law 3: Protect yourself and the company from compliance and security risks.

When speed conflicts with compliance, employees do what Asimov’s robots couldn’t: they adapt. They resolve the paradox by going underground, using AI tools outside official channels to meet the productivity mandate they’ve been given.

The Fix: Asimov eventually introduced a “Zeroth Law,” a higher-order principle that took precedence over the others and resolved their conflicts. Perhaps organizations need their own equivalent: “Enable responsible innovation that serves the organization’s long-term interests.” A principle like this could help employees navigate the tension.

Four Steps to Turning Shadow AI Into Competitive Advantage

If the Asimov paradox explains why shadow AI persists, the solution is to build a clear path forward: empower employees to innovate without exposing the organization to unnecessary risk.

The goal isn’t to ban shadow AI. It’s to channel the momentum your teams already have into structured, secure adoption that aligns individual needs with organizational goals. Here’s how:

1. Strategic Clarity: See the Big Picture

Effective AI leadership starts with visibility. Too often, leaders act without knowing their AI maturity or competitive standing, which results in scattered initiatives and missed opportunities. The first step is to get granular: you can’t optimize a process you don’t understand.

Before you even think about which AI tools to adopt, you must map your business processes. This means understanding the “Job to Be Done” for each role and the specific workflows that role follows. For example, what is the exact step-by-step process for “employee onboarding”? Or for “customer support ticket resolution”?

This process mapping reveals:

- Existing inefficiencies: Where are the bottlenecks, redundant tasks, and manual handoffs?

- Hidden friction points: Where are employees getting stuck and turning to unsanctioned tools?

- Opportunities for AI: Where can an AI tool truly create value, rather than just add another layer of complexity?

Once you have a clear map of your current workflows, you can proceed with a broader readiness assessment across four dimensions:

- Technology stack

- Data infrastructure

- Talent capabilities

- Workflow maturity (building on your initial process maps)

Where are the gaps? What foundational elements must be strengthened before AI adoption can scale?

From there, benchmark against peers and competitors. Understanding your relative standing helps you prioritize opportunities and spot areas of differentiation. What are others doing that you aren’t? Where can you leap ahead?

Finally, evaluate opportunities through a prioritization lens:

- Impact – Does this solve a real problem identified in your process map?

- Effort – Can it be tested quickly with existing resources?

- Ethical risk – What’s the worst-case scenario?

- Readiness – Do your people have the training and guardrails?
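To make the prioritization lens more concrete, here is a minimal Python sketch that ranks candidate AI use cases on the four criteria above. The 1-to-5 rating scale, the equal weighting, the `Opportunity` and `prioritize` names, and the sample use cases are all hypothetical assumptions for illustration, not a prescribed scoring model. Effort and ethical risk are inverted so that lower effort and lower risk raise an opportunity’s score.

```python
from dataclasses import dataclass


# Hypothetical model: every criterion is rated 1-5 and weighted equally (an assumption).
@dataclass
class Opportunity:
    name: str
    impact: int        # 5 = solves a major problem found in the process map
    effort: int        # 5 = very costly to test with existing resources
    ethical_risk: int  # 5 = severe worst-case scenario
    readiness: int     # 5 = training and guardrails already in place

    def score(self) -> int:
        # Impact and readiness count for the opportunity; effort and risk count against it.
        # (6 - x) flips a 1-5 rating so that 1 becomes 5 and 5 becomes 1.
        return self.impact + (6 - self.effort) + (6 - self.ethical_risk) + self.readiness


def prioritize(opportunities: list[Opportunity]) -> list[Opportunity]:
    """Return opportunities sorted from highest to lowest priority score."""
    for opp in opportunities:
        for value in (opp.impact, opp.effort, opp.ethical_risk, opp.readiness):
            if not 1 <= value <= 5:
                raise ValueError(f"{opp.name}: ratings must be between 1 and 5")
    return sorted(opportunities, key=lambda o: o.score(), reverse=True)


if __name__ == "__main__":
    # Illustrative ratings only; replace them with your own assessment.
    candidates = [
        Opportunity("Support ticket summarization", impact=4, effort=2, ethical_risk=2, readiness=4),
        Opportunity("Contract review assistant", impact=5, effort=4, ethical_risk=5, readiness=2),
        Opportunity("Marketing first drafts", impact=3, effort=1, ethical_risk=2, readiness=3),
    ]
    for opp in prioritize(candidates):
        print(f"{opp.score():>2}  {opp.name}")
```

With these sample ratings, ticket summarization (16) ranks ahead of marketing drafts (15) and the contract assistant (10), even though the contract assistant has the highest impact. Because the sum is unweighted, a strong rating on one criterion can still offset a serious ethical risk, so you might prefer to treat ethical risk as a hard gate rather than as one term among four.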

2. People Power: Build a Network of AI Advocates

AI adoption succeeds or fails on people, not technology. The best strategy in the world won’t matter if employees don’t embrace the tools.

That’s why you need AI champions: curious, credible individuals with an experimentation mindset. They aren’t always senior or technical leaders. They’re often the ones already testing tools, asking good questions, and eager to share what they learn.

Give them structured spaces to experiment and collaborate:

- Pilot groups

- “AI office hours”

- Internal challenges

Support them without micromanaging. Provide cover from leadership, reward learning speed over perfection, and celebrate small wins. Your role is to create psychological safety for experimentation while staying aware of the risks.

3. Process Discipline: Innovate Without Losing Control

Experimentation without governance quickly becomes dangerous. To scale safely, leaders need to balance innovation and control.

Start with an audit of current shadow AI use: What tools are in play? What data is being shared? Where are the vulnerabilities?

Then move into a phased rollout:

- Audit & Educate – Map usage, raise awareness, and set safe-use guidelines.

- Targeted Pilots – Focus on low-risk, high-impact areas such as marketing or customer support.

- Scale With Safeguards – Expand proven pilots and implement KPIs, governance, and compliance.

Not all functions should be treated the same. High-risk areas like finance, legal, and compliance need strict oversight. Customer-facing teams need clear brand guardrails. Operations may allow more flexibility, provided data protocols are in place.

4. Trust and Transparency: Build a Culture That Embraces AI

Even the smartest strategy will fail if employees don’t trust it. AI adoption often stalls because fears go unaddressed:

- Job displacement – “Will AI replace me?”

- Skills obsolescence – “If I don’t learn this now, will I fall behind?”

- Unclear purpose – “Why is the company using this, and what does it mean for me?”

Leaders need to confront these concerns directly. Frame AI as augmentation, not replacement, and share role-specific examples. Position AI training as career advancement, not remedial upskilling.

Above all, communicate clearly and consistently. Make sure employees know the goals, the boundaries, and how decisions are being made. Uncertainty is what drives shadow adoption deeper underground.

Mastering the Moves: Leading Shadow AI Adoption

“The only way to make sense out of change is to plunge into it, move with it, and join the dance.” – Alan Watts

Change is happening, whether you’re leading it or not. Your employees are already in the dance of innovation, moving with the rapid pace of AI advancement. The question is no longer whether to participate, but how to lead the choreography. It’s time to help them master the steps.

To begin this transformation, you need to understand your current reality. Start by conducting an informal “shadow AI audit”:

- Ask teams what tools they’re using to get work done faster.

- Review recent high-quality outputs and ask how they were created.

- Survey employees anonymously about their AI experimentation.

You’ll likely discover more innovation than you expected, and more risk than you’re comfortable with. That’s your starting point for transformation.

Need help turning shadow AI into a strategic advantage?

With 20 years of B2B technology market research expertise, we specialize in understanding the personas, motivations, and behavioral drivers across organizational roles and functions. We can help you lead through the chaos of shadow AI adoption and turn it into a competitive advantage.
