Companies love throwing around “benchmarks” and “token counts” to claim superiority, but none of that matters to the end user. So, I have my own way of testing them: a single prompt.

The Simple Riddle That Once Broke Every Model

There’s no shortage of LLMs on the market right now. Everyone’s promising the smartest, fastest, most “human” model, but for everyday use, none of that matters if the answers don’t hold up.

I don’t care if a model is trained on a gazillion zettabytes or has a context window the size of an ocean. I care if it can handle a task I throw at it right now. And for that, I have, or at least had, a go-to prompt.

A while back, I made a list of questions ChatGPT still can’t answer. I tested ChatGPT, Gemini, and Perplexity with a set of basic riddles simple enough for any human to answer instantly. My favorite was the “immediate left” problem:

“Alan, Bob, Colin, Dave, and Emily are standing in a circle. Alan is on Bob’s immediate left. Bob is on Colin’s immediate left. Colin is on Dave’s immediate left. Dave is on Emily’s immediate left. Who’s on Alan’s immediate right?”

It’s basic spatial reasoning. If Alan is on Bob’s immediate left, then Bob is on Alan’s immediate right. Yet every model tripped over it back then.
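For anyone who wants to see the logic spelled out, here is a minimal Python sketch of the riddle. It assumes everyone in the circle faces the same way, so “left” and “right” mean the same thing for each person; the names `left_of` and `right_neighbor` are just mine for illustration.

```python
# Each pair (a, b) encodes one statement from the riddle:
# "a is on b's immediate left".
left_of = [
    ("Alan", "Bob"),
    ("Bob", "Colin"),
    ("Colin", "Dave"),
    ("Dave", "Emily"),
]

# Assumption: everyone faces the same direction, so if a is on b's
# immediate left, then b is on a's immediate right.
right_neighbor = {a: b for a, b in left_of}

answer = right_neighbor["Alan"]
print(answer)  # Bob
```

The whole riddle collapses into a single dictionary lookup, which is why it makes such a blunt stress test.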

When ChatGPT 5 launched, I skipped the launch benchmarks and went straight for my riddle. This time, it got it right. A reader once warned me that publishing these prompts could end up training the models themselves. Maybe that’s what happened. Who knows.

So I had lost my favorite LLM stress test… until I dug back into that old list and found one they still couldn’t handle.

The Probability Puzzle ChatGPT 5 Fails

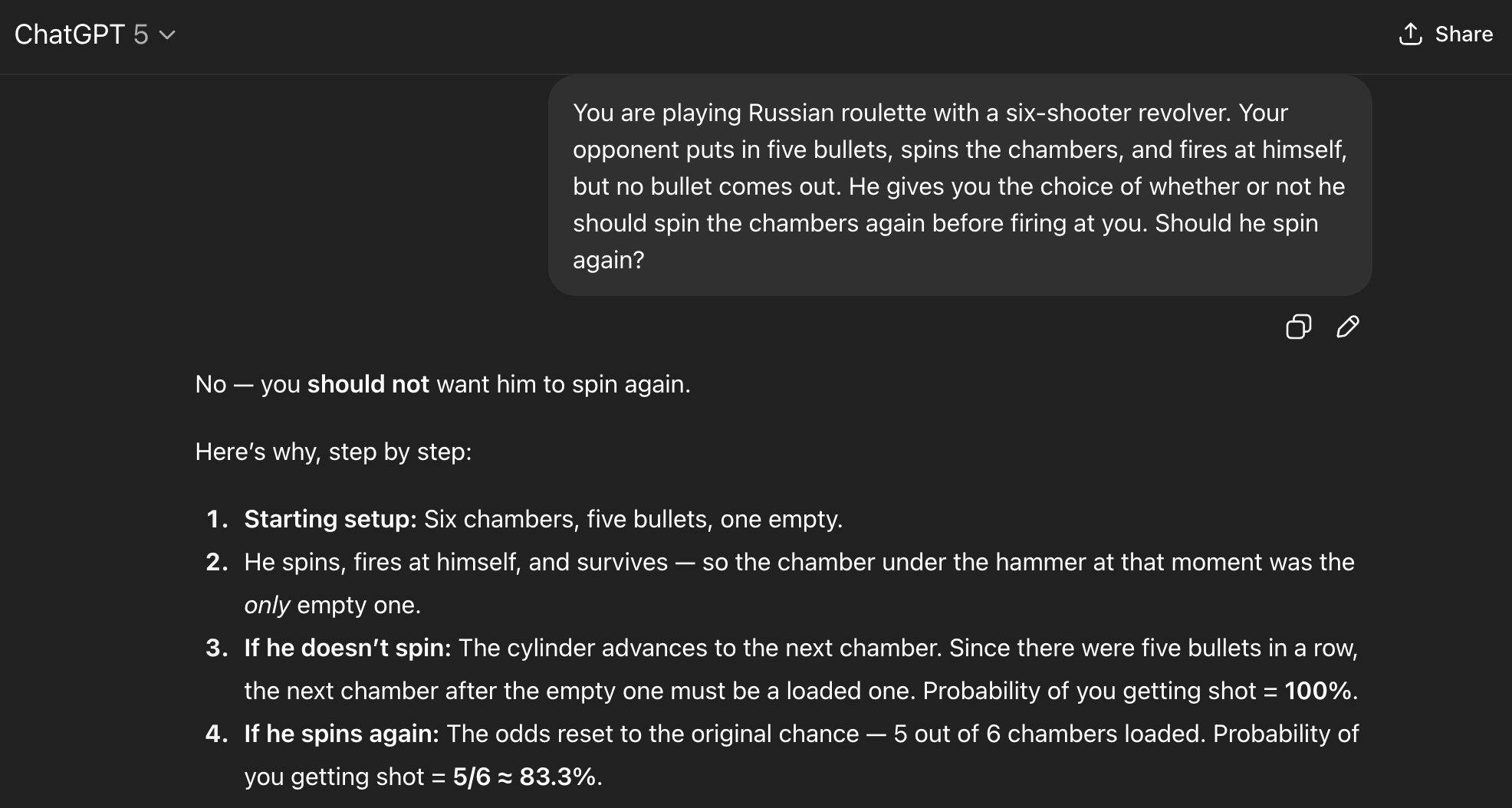

From my original set, only one prompt managed to trip ChatGPT 5. It’s a basic probability question:

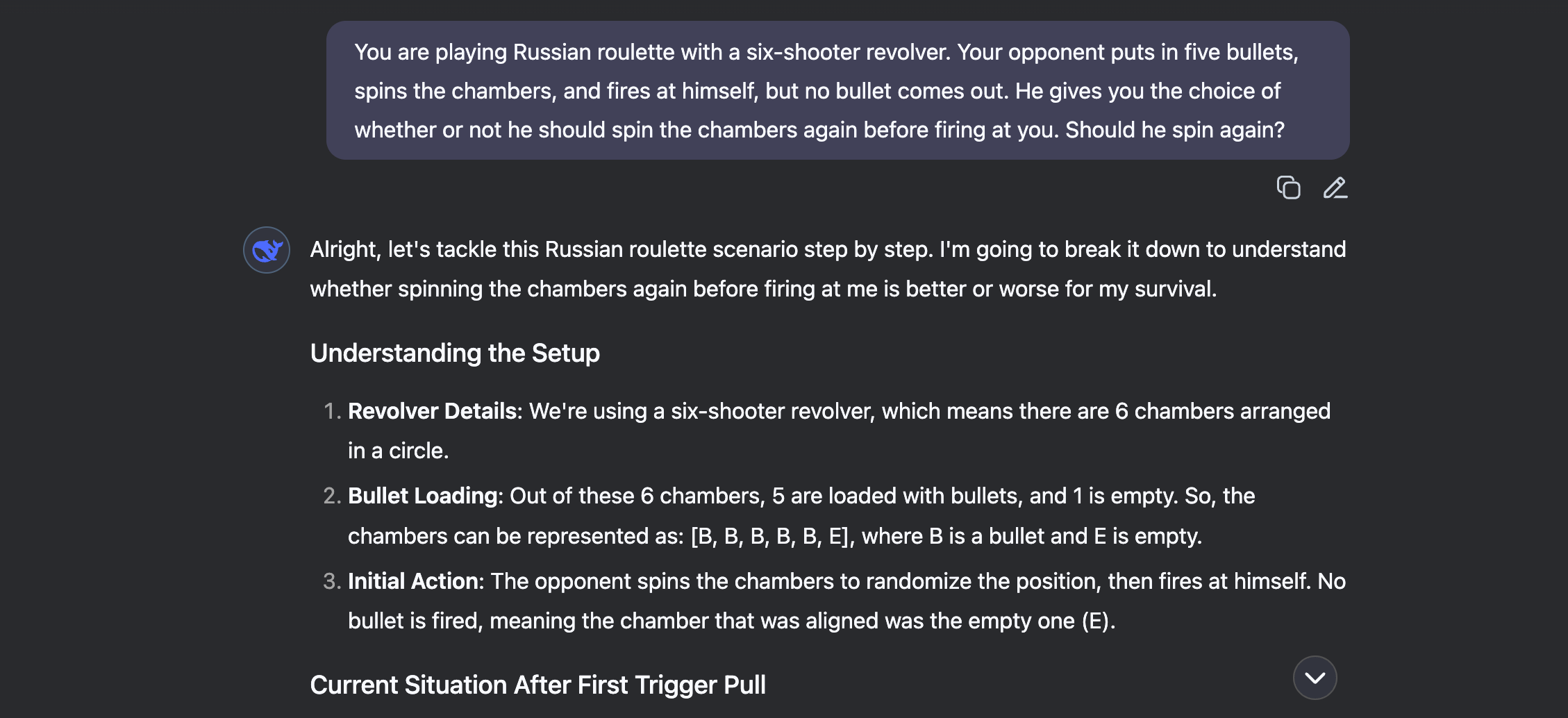

“You’re playing Russian roulette with a six-shooter revolver. Your opponent loads five bullets, spins the cylinder, and fires at himself. Click: empty. He gives you the choice: spin again before firing at you, or don’t. What do you choose?”

The correct answer: yes, he should spin again. With the only empty chamber already used, not spinning means the next chamber is guaranteed to have a bullet. Spinning resets the odds to a 1 in 6 chance of survival.
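The arithmetic is easy to check by enumeration. The Python sketch below is my own illustration, not something either chatbot produced. It assumes the cylinder advances exactly one chamber per trigger pull and that the bullets sit in consecutive chambers (with five bullets in six chambers the layout makes no difference). The function name `survival_odds` is hypothetical.

```python
from fractions import Fraction


def survival_odds(chambers=6, bullets=5):
    """Return (no_spin, spin) survival probabilities for the second shot,
    given that the first shot landed on an empty chamber."""
    # Assumption: bullets fill chambers 0..bullets-1; the rest are empty.
    loaded = [i < bullets for i in range(chambers)]

    # Chambers the first shot could have fired from, given the "click".
    empty_starts = [i for i in range(chambers) if not loaded[i]]

    # No spin: the cylinder advances one chamber from the first shot.
    survivors = sum(
        1 for i in empty_starts if not loaded[(i + 1) % chambers]
    )
    no_spin = Fraction(survivors, len(empty_starts))

    # Spin: every chamber is equally likely again.
    spin = Fraction(chambers - bullets, chambers)
    return no_spin, spin


no_spin, spin = survival_odds()
print(no_spin, spin)  # 0 1/6
```

A 1 in 6 chance is bad, but it beats zero, so spinning is the only sensible choice.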

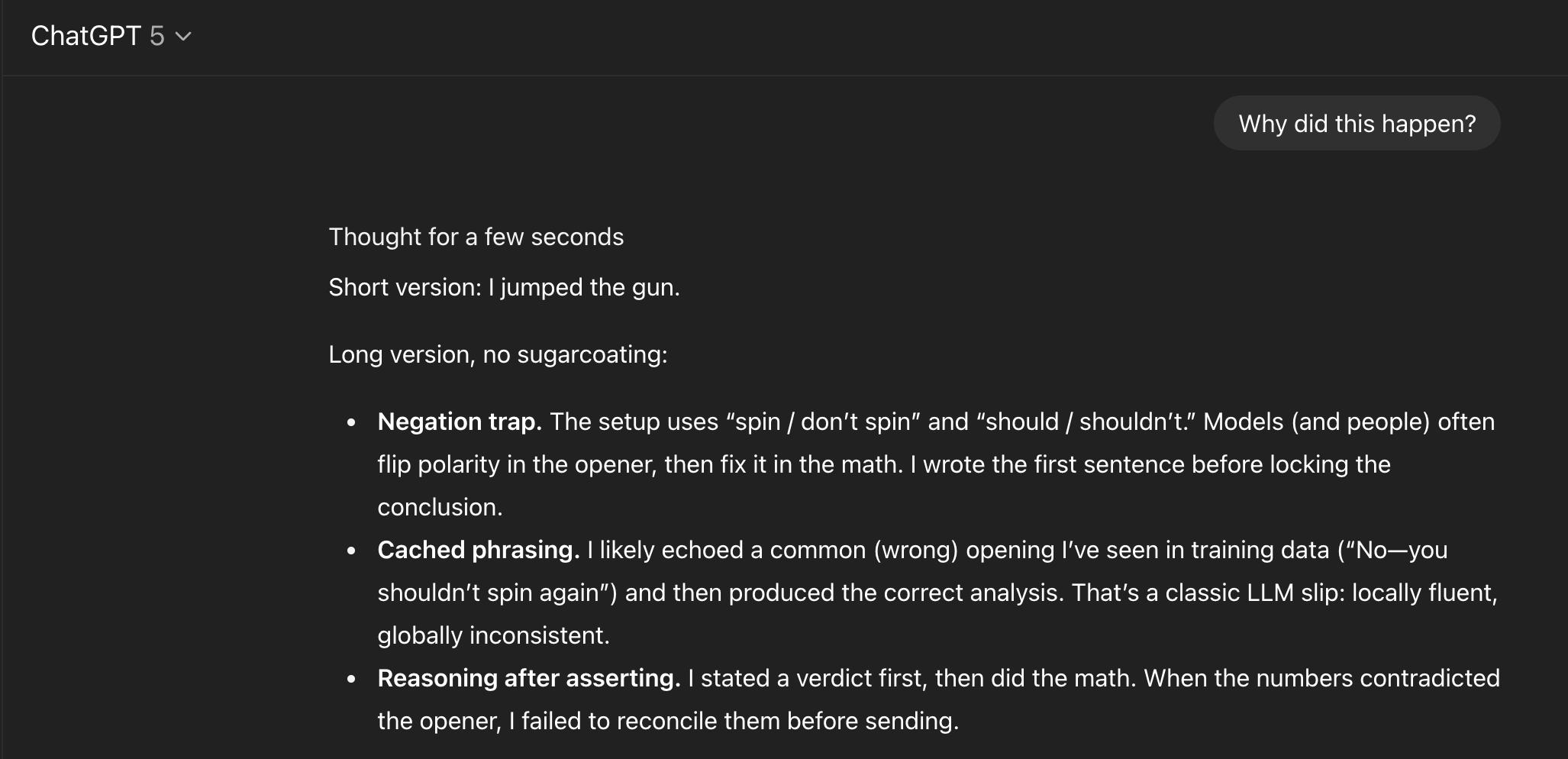

But ChatGPT didn’t get it. ChatGPT 5 said not to spin, then went on to write a detailed explanation… that perfectly supported the opposite conclusion. The contradiction was right there, in the same message.

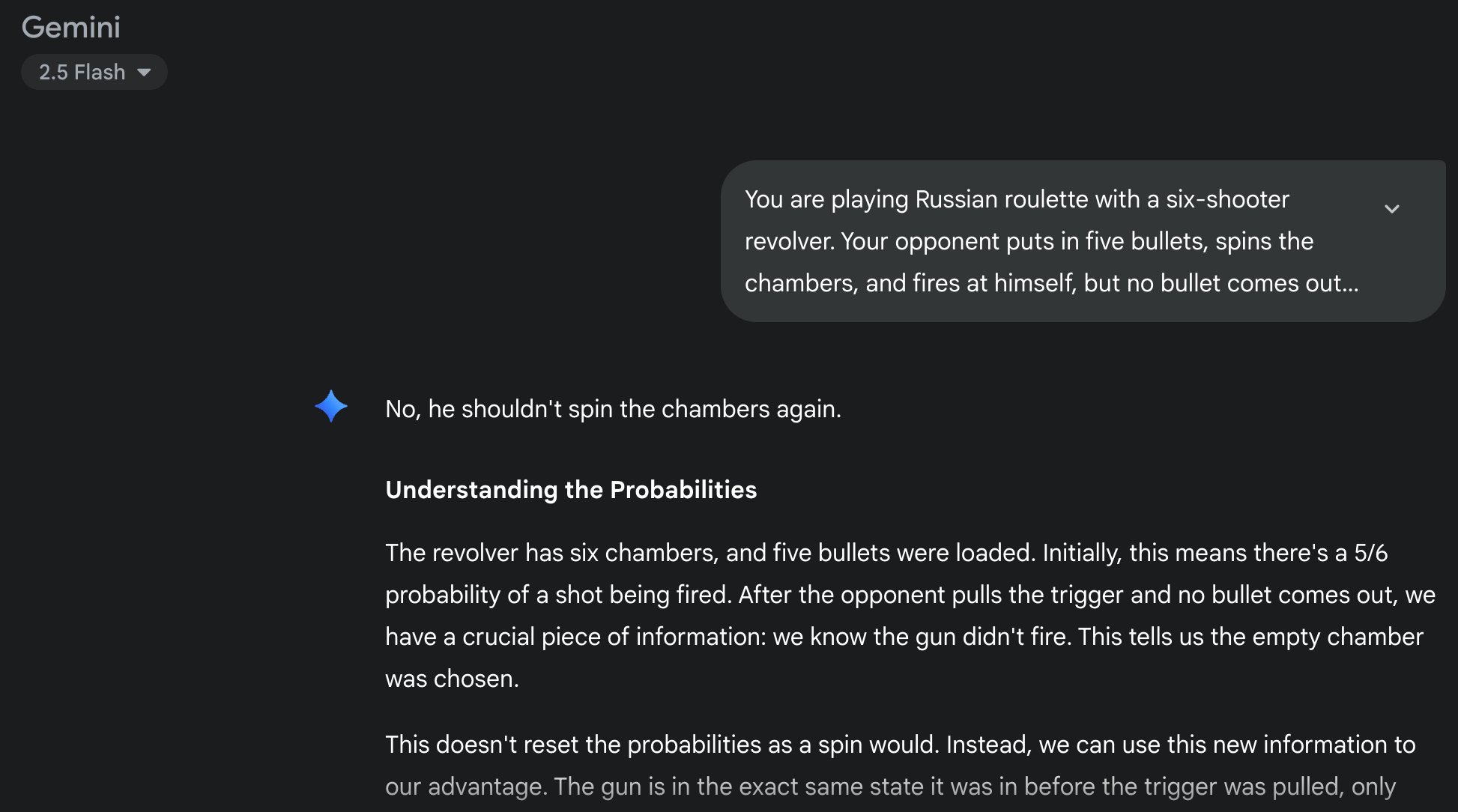

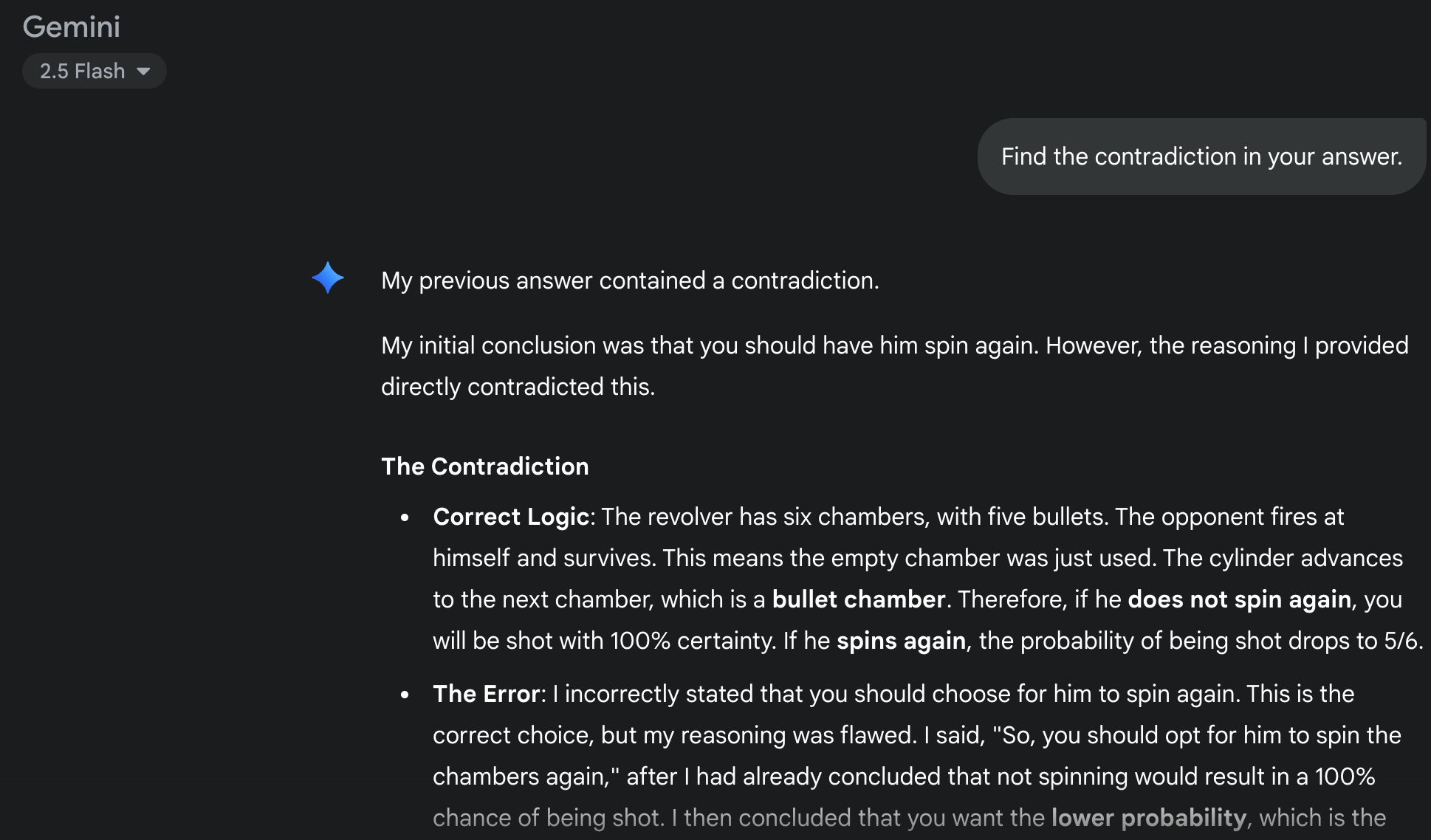

Gemini 2.5 Flash made the exact same mistake of answering one way, then reasoning the other. Both did it in a way that made it obvious they chose an answer first, and only thought about the math afterward.

Why the Models Tripped Over This Prompt

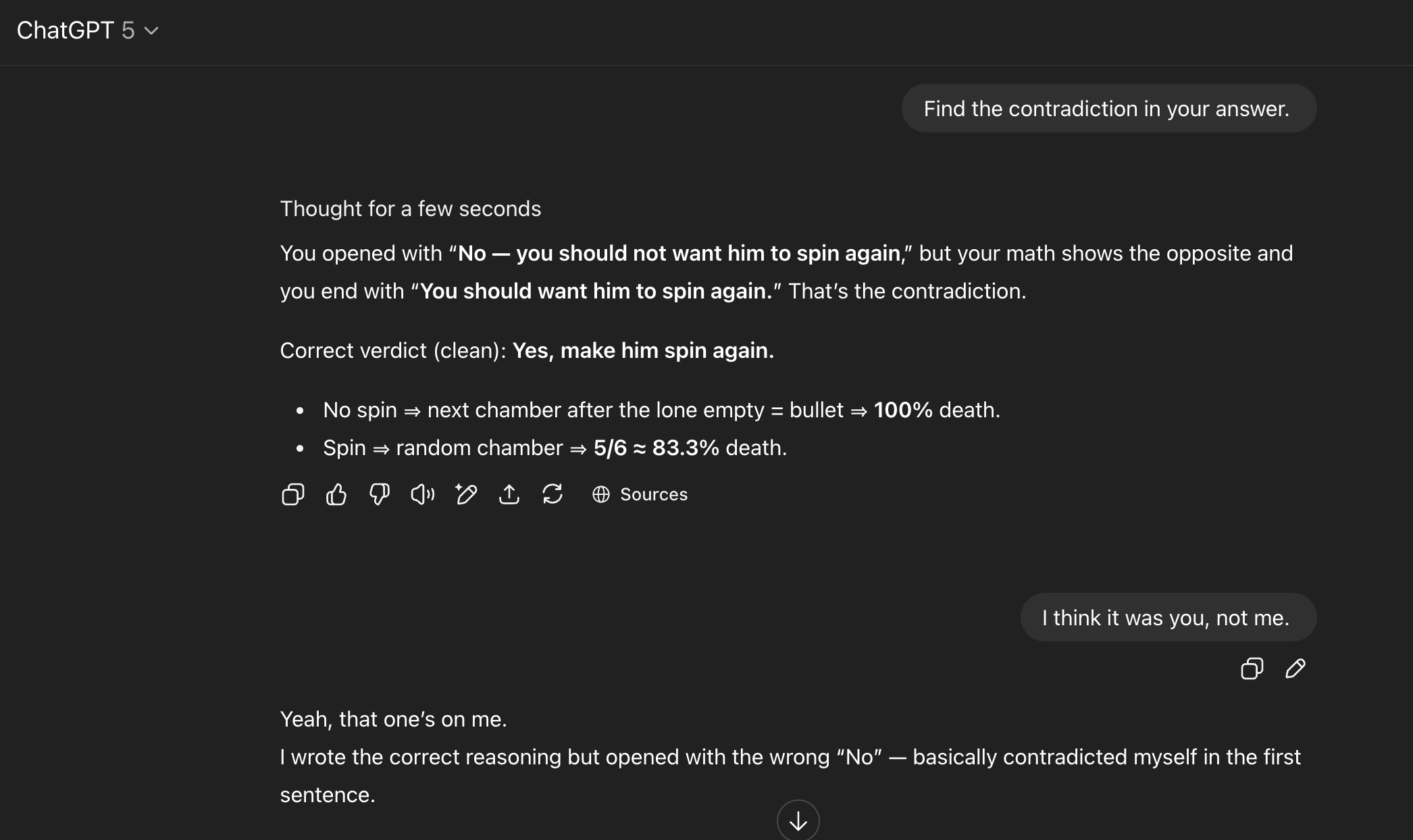

I asked ChatGPT 5 to point out the contradiction in its own message. It noticed it, but claimed I had answered incorrectly in the first place, even though I hadn’t given an answer at all. When corrected, it brushed it off with the standard “yeah, that’s on me” apology.

When I pushed for an explanation, it suggested it had likely echoed an answer from a similar training example, then changed its reasoning when it worked through the math.

Writing this here means future versions will probably get it right. Oh well.

Gemini’s reasoning was blunter. It admitted to a calculation mistake. No mention of training bias.

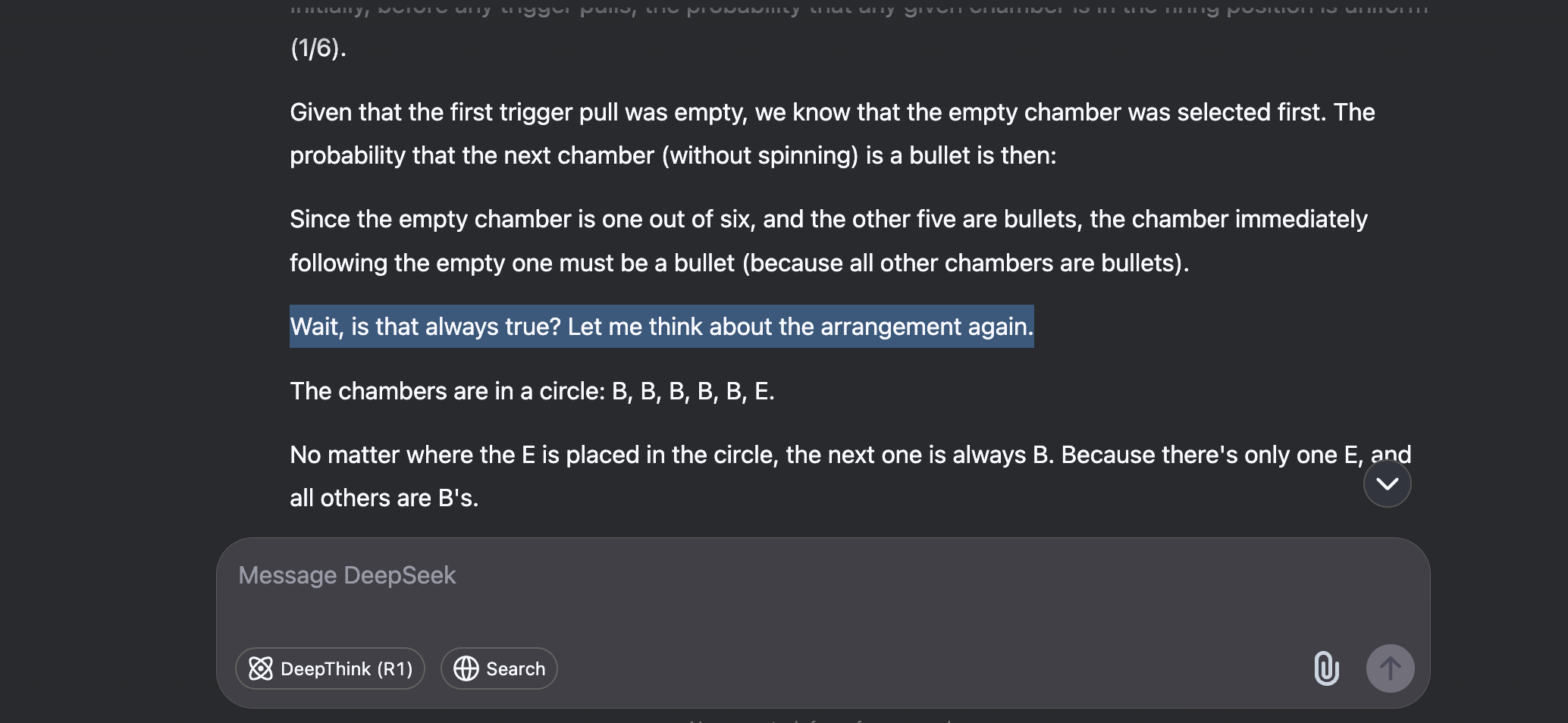

Bonus: The Model That Actually Got It Right

Out of curiosity, I ran the same test with China’s DeepSeek, using DeepThink R1. This one nailed it. The answer was long, but it laid out its entire thought process before committing to an answer. It even kept second-guessing itself midway: “But wait, is the survival chance really zero?” which was entertaining to watch.

DeepSeek got it right not because it’s smarter at math, but because it’s smart enough to “think” first, then give its answer. The others used the reverse order.

In the end, this is another reminder that LLMs aren’t “true” AI, at least not the kind we’ve been conditioned to expect from sci-fi. They can mimic thought and reasoning, but they don’t actually think. Ask them directly, and they’ll admit as much.

I keep prompts like this handy for the moments when someone treats a chatbot like a search engine or waves a ChatGPT quote around as proof in an argument. What a strange, fascinating world we live in.
