ChatGPT’s memory used to be simple. You told it what to remember, and it listened.

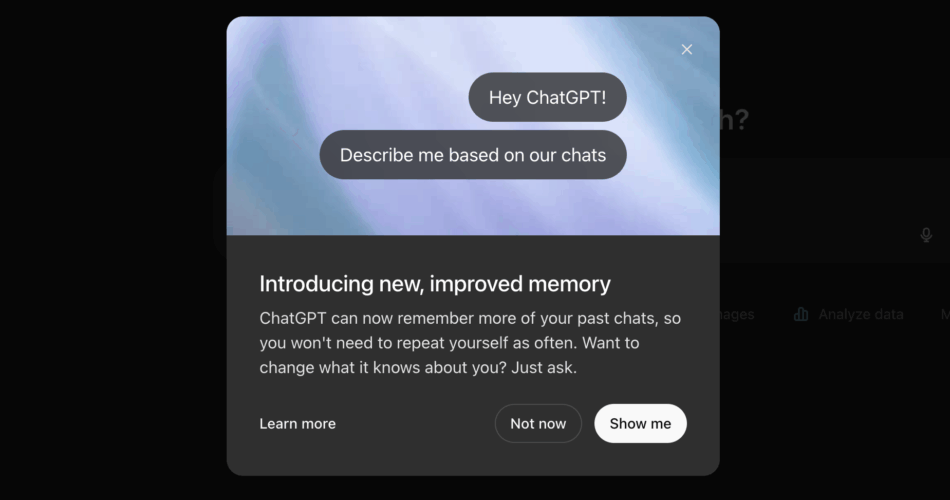

Since 2024, ChatGPT has had a memory feature that lets users store helpful context, from your tone of voice and writing style to your goals, interests, and ongoing projects. You could go into settings to view, update, or delete these memories. Occasionally, it would note something important on its own. But mostly, it remembered what you asked it to. Now, that’s changing.

OpenAI, the company behind ChatGPT, is rolling out a major upgrade to its memory. Beyond the handful of details you saved manually, ChatGPT will now draw on all your past conversations to inform future responses on its own.

According to OpenAI, memory now works in two ways: “saved memories,” added directly by the user, and insights from “chat history,” which ChatGPT gathers automatically.

This feature, called long-term or persistent memory, is rolling out to ChatGPT Plus and Pro users. However, at the time of writing, it’s not available in the UK, EU, Iceland, Liechtenstein, Norway, or Switzerland due to regional regulations.

The idea here is simple: the more ChatGPT remembers, the more helpful it becomes. It’s a big leap for personalization. But it’s also a good moment to pause and ask what we might be giving up in return.

A memory that gets personal

It’s easy to see the appeal here. A more personalized experience from ChatGPT means you explain yourself less and get more relevant answers. It’s helpful, efficient, and familiar.

“Personalization has always been about memory,” says Rohan Sarin, Product Manager at Speechmatics, an AI speech tech company. “Knowing someone for longer means you don’t need to explain everything to them anymore.”

He gives an example: ask ChatGPT to recommend a pizza place, and it might gently steer you toward something more aligned with your fitness goals, a subtle nudge based on what it knows about you. It’s not just following instructions; it’s reading between the lines.

“That’s how we get close to someone,” Sarin says. “It’s also how we trust them.” That emotional resonance is what makes these tools feel so useful, maybe even comforting. But it also raises the risk of emotional dependence. Which, arguably, is the whole point.

“From a product perspective, storage has always been about stickiness,” Sarin tells me. “It keeps users coming back. With every interaction, the switching cost increases.”

OpenAI doesn’t hide this. The company’s CEO, Sam Altman, tweeted that memory enables “AI systems that get to know you over your life, and become extremely useful and personalized.”

That usefulness is clear. But so is the risk of depending on these systems not just to help us, but to know us.

Does it remember like we do?

One challenge with long-term memory in AI is its inability to understand context the way humans do.

We instinctively compartmentalize, separating what’s personal from what’s professional, and what’s important from what’s fleeting. ChatGPT may struggle with that kind of context switching.

Sarin points out that because people use ChatGPT for so many different things, those lines may blur. “IRL, we rely on non-verbal cues to prioritize. AI doesn’t have those. So memory without context could bring up uncomfortable triggers.”

He gives the example of ChatGPT referencing magic and fantasy in every story or creative suggestion just because you once mentioned liking Harry Potter. Will it draw on past memories even when they’re no longer relevant? “Our ability to forget is part of how we grow,” he says. “If AI only reflects who we were, it might limit who we become.”

Without a way to rank memories by relevance, the model may surface things that feel random, outdated, or even inappropriate for the moment.

Bringing AI memory into the workplace

Persistent memory could be hugely useful for work. Julian Wiffen, Chief of AI and Data Science at Matillion, a data integration platform with AI built in, sees strong use cases: “It could improve continuity for long-term projects, reduce repeated prompts, and offer a more tailored assistant experience,” he says.

But he’s also cautious. “In practice, there are serious nuances that users, and especially companies, need to consider.” His biggest concerns here are privacy, control, and data security.

“I often experiment or think out loud in prompts. I wouldn’t want that retained, or worse, surfaced again in another context,” Wiffen says. He also flags risks in technical environments, where fragments of code or sensitive data might carry over between projects, raising IP or compliance concerns. “These issues are magnified in regulated industries or collaborative settings.”

Whose memory is it anyway?

OpenAI stresses that users can still manage memory: delete individual memories that aren’t relevant anymore, turn it off entirely, or use the new “Temporary Chat” button. This button now appears at the top of the chat screen and starts conversations that aren’t informed by past memories and won’t be used to build new ones either.

Still, Wiffen says that might not be enough. “What worries me is the lack of fine-grained control and transparency,” he says. “It’s often unclear what the model remembers, how long it retains information, and whether it can be truly forgotten.”

He’s also concerned about compliance with data protection laws, like GDPR: “Even well-meaning memory features could accidentally retain sensitive personal data or internal information from projects. And from a security standpoint, persistent memory expands the attack surface.” This is likely why the new update hasn’t rolled out globally yet.

What’s the answer? “We need clearer guardrails, more transparent memory indicators, and the ability to fully control what’s remembered and what’s not,” Wiffen explains.

Not all AI remembers the same

Other AI tools are taking different approaches to memory. For example, the AI assistant Claude doesn’t store persistent memory outside your current conversation. That means fewer personalization features, but more control and privacy.

Perplexity, an AI search engine, doesn’t focus on memory at all; it retrieves real-time web information instead. Replika, an AI designed for emotional companionship, goes the other way, storing long-term emotional context to deepen its relationships with users.

So each system handles memory differently based on its goals. And the more these systems know about us, the better they fulfill those goals, whether that’s helping us write, connect, search, or feel understood.

The question isn’t whether memory is useful; I think it clearly is. The question is whether we want AI to become this good at fulfilling these roles.

It’s easy to say yes, because these tools are designed to be helpful, efficient, even indispensable. But that usefulness isn’t neutral; it’s intentional. These systems are built by companies that benefit when we rely on them more.

You wouldn’t willingly give up a second brain that remembers everything about you, possibly better than you do. And that’s the point. That’s what the companies behind your favorite AI tools are counting on.
