Artificial intelligence startup and MIT spinoff Liquid AI Inc. today launched its first set of generative AI models, and they’re notably different from competing models because they’re built on a fundamentally new architecture.

The new models are called “Liquid Foundation Models,” or LFMs, and they’re said to deliver impressive performance that’s on a par with, or even superior to, some of the best large language models available today.

The Boston-based startup was founded by a team of researchers from the Massachusetts Institute of Technology, including Ramin Hasani, Mathias Lechner, Alexander Amini and Daniela Rus. They’re said to be pioneers of “liquid neural networks,” a class of AI models that’s quite different from the Generative Pre-trained Transformer-based models we know and love today, such as OpenAI’s GPT series and Google LLC’s Gemini models.

The company’s mission is to create highly capable and efficient general-purpose models that can be used by organizations of all sizes. To do that, it’s building LFM-based AI systems that can work at every scale, from the network edge to enterprise-grade deployments.

What are LFMs?

According to Liquid, its LFMs represent a new generation of AI systems designed with both performance and efficiency in mind. They use minimal system memory while delivering exceptional computing power, the company explains.

They’re grounded in dynamical systems, numerical linear algebra and signal processing. That makes them well suited to handling many types of sequential data, including text, audio, images, video and signals.

Liquid AI first made headlines in December when it raised $37.6 million in seed funding. At the time, it explained that its LFMs are based on a newer Liquid Neural Network, or LNN, architecture that was originally developed at MIT’s Computer Science and Artificial Intelligence Laboratory. LNNs are built on the concept of artificial neurons, or nodes that transform data.

Whereas traditional deep learning models need thousands of neurons to perform computing tasks, LNNs can achieve the same performance with significantly fewer. They do this by combining those neurons with innovative mathematical formulations, enabling them to do much more with less.
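Liquid hasn’t published the internals of its LFMs, so what follows is only a rough sketch of the liquid time-constant (LTC) neuron from the MIT group’s earlier research on liquid neural networks, not Liquid AI’s production architecture. Each neuron’s state follows the equation dx/dt = -[1/τ + f(x, u)]·x + f(x, u)·A, where an input-dependent gate f shifts the neuron’s effective time constant; the code advances it with a fused semi-implicit Euler step. The layer size, weights, time step, test signal and the choice of a sigmoid for f are all hypothetical values picked for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, u, w_in, w_rec, bias, tau, A, dt):
    """Advance a small liquid time-constant (LTC) layer by one time step.

    Uses the fused semi-implicit Euler update
        x_new = (x + dt * f * A) / (1 + dt * (1 / tau + f)),
    where f = sigmoid(w_in . u + w_rec . x + bias) is an input-dependent gate.
    All parameters are hypothetical, illustrative values.
    """
    new_x = []
    for i in range(len(x)):
        pre = bias[i]
        pre += sum(w_in[i][j] * u[j] for j in range(len(u)))
        pre += sum(w_rec[i][k] * x[k] for k in range(len(x)))
        f = sigmoid(pre)  # gate in (0, 1), recomputed from the current input
        # The effective time constant 1 / (1/tau + f) shifts with the input,
        # which is what makes the neuron "liquid".
        new_x.append((x[i] + dt * f * A[i]) / (1.0 + dt * (1.0 / tau[i] + f)))
    return new_x


def simulate(num_steps=50, n_neurons=4, n_inputs=2, seed=0, dt=0.1):
    """Drive a randomly initialised LTC layer with a smooth test signal."""
    rng = random.Random(seed)
    w_in = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)] for _ in range(n_neurons)]
    w_rec = [[rng.gauss(0.0, 0.5) for _ in range(n_neurons)] for _ in range(n_neurons)]
    bias = [0.0] * n_neurons
    tau = [1.0] * n_neurons  # base time constants (hypothetical)
    A = [1.0] * n_neurons    # per-neuron target values (hypothetical)
    x = [0.0] * n_neurons    # start every neuron at rest
    for t in range(num_steps):
        u = [math.sin(0.1 * t + j) for j in range(n_inputs)]
        x = ltc_step(x, u, w_in, w_rec, bias, tau, A, dt)
    return x


print(simulate())
```

Because f stays between 0 and 1 and the state starts at zero, each fused update keeps every neuron between 0 and its A value, so the state in this sketch stays bounded even with a coarse time step.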

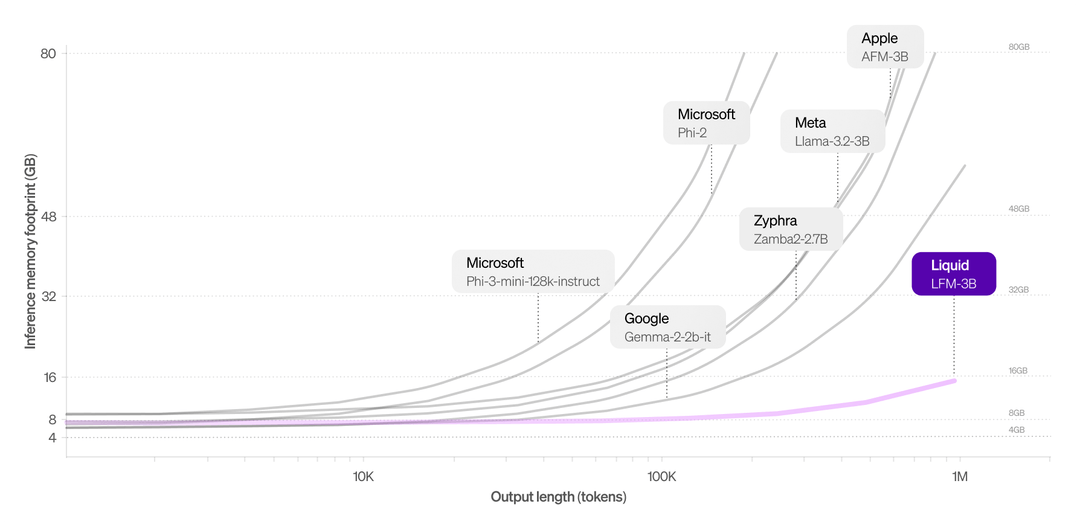

The startup says its LFMs retain this adaptable and efficient design, which enables them to make real-time adjustments during inference without the large computational overheads associated with traditional LLMs. As a result, it says, they can handle up to 1 million tokens efficiently without any noticeable impact on memory usage.

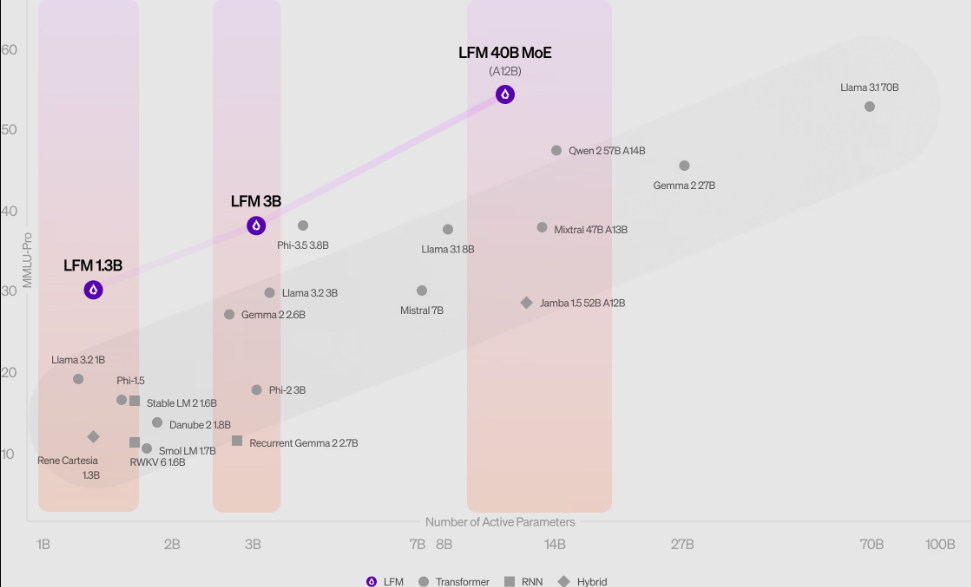

Liquid AI is kicking off with a family of three models. LFM-1B is a dense model with 1.3 billion parameters, designed for resource-constrained environments. Slightly more powerful is LFM-3B, which has 3.1 billion parameters and is aimed at edge deployments, such as mobile applications, robots and drones. Finally, there’s LFM-40B, a vastly more powerful “mixture of experts” model with 40.3 billion parameters, designed to be deployed on cloud servers to handle the most complex use cases.

The startup reckons its new models have already shown “state-of-the-art results” across a number of important AI benchmarks, and it believes they’re shaping up to be formidable competitors to existing generative AI models such as ChatGPT.

Whereas traditional LLMs see a sharp increase in memory usage when processing long contexts, the LFM-3B model maintains a much smaller memory footprint (above), which makes it a strong choice for applications that need to process large amounts of sequential data. Example use cases might include chatbots and document analysis, the company said.
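Liquid AI hasn’t said exactly how LFM-3B keeps its footprint small, but some back-of-envelope arithmetic shows why long contexts are expensive for standard transformers: the key/value (KV) cache they keep during inference grows linearly with the number of tokens, whereas a model that carries a fixed-size recurrent state does not. The layer count, head sizes, state size and 16-bit precision below are hypothetical round numbers, not the configuration of any model named in this article.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Transformer KV cache: one key and one value vector per token,
    per KV head, per layer (bytes_per_value=2 assumes 16-bit values)."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


def fixed_state_bytes(layers: int, state_size: int, bytes_per_value: int = 2) -> int:
    """Fixed-size recurrent state: independent of sequence length."""
    return layers * state_size * bytes_per_value


# Hypothetical configuration, chosen only for illustration.
LAYERS, KV_HEADS, HEAD_DIM, STATE_SIZE = 32, 8, 128, 4096

for tokens in (4_096, 128_000, 1_000_000):
    kv = kv_cache_bytes(tokens, LAYERS, KV_HEADS, HEAD_DIM)
    state = fixed_state_bytes(LAYERS, STATE_SIZE)
    print(f"{tokens:>9,} tokens: KV cache {kv / 2**30:8.2f} GiB, "
          f"fixed state {state / 2**20:.2f} MiB")
```

With these assumed numbers the KV cache costs 128 KiB per token, or about 122 GiB at 1 million tokens, while the fixed-size state stays at 256 KiB however long the input is. Real models vary widely with their attention layout and numeric precision, so treat this as an order-of-magnitude illustration rather than a measurement.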

Strong performance on benchmarks

In terms of performance, the LFMs delivered some impressive results, with LFM-1B outperforming transformer-based models in the same size class. Meanwhile, LFM-3B stands up well against models such as Microsoft Corp.’s Phi-3.5 and Meta Platforms Inc.’s Llama family. As for LFM-40B, the company says it is efficient enough to outperform even larger models while maintaining an unmatched balance between performance and efficiency.

Liquid AI said the LFM-1B model put in an especially dominant performance on benchmarks such as MMLU and ARC-C, setting a new standard for 1B-parameter models.

The company is making its models available in early access through platforms such as Liquid Playground, Lambda (via its Chat and application programming interfaces) and Perplexity Labs. That will give organizations a chance to integrate the models into their AI systems and see how they perform in different deployment scenarios, including on edge devices and on-premises.

One of the things it’s working on now is optimizing the LFM models to run on specific hardware built by Nvidia Corp., Advanced Micro Devices Inc., Apple Inc., Qualcomm Inc. and Cerebras Systems Inc., so users will be able to squeeze even more performance out of them by the time they reach general availability.

The company says it will publish a series of technical blog posts that take a deep dive into the mechanics of each model ahead of their official release. In addition, it’s encouraging red-teaming, inviting the AI community to push its LFMs to the limit and see what they can and cannot yet do.

Image: SiliconANGLE/Microsoft Designer
