Forward-looking: Stable Diffusion is a deep learning model capable of turning words into eerie, distinctly artificial images. The machine learning network usually runs in the cloud, and it can also be installed on a beefy PC to work offline. With further optimizations, the model can be run efficiently on Android smartphones as well.

Qualcomm was able to adapt the image creation capabilities of Stable Diffusion to a single Android smartphone powered by a Snapdragon 8 Gen 2 SoC. It's a remarkable result which, according to the San Diego-based company, is just the beginning for AI applications running on edge computing devices. No internet connection is required, Qualcomm assures.

As explained on Qualcomm's corporate blog, Stable Diffusion is a large foundation model that uses a neural network trained on a vast quantity of data at scale. The text-to-image generative AI contains one billion parameters, and it has mostly been "confined" to the cloud (or to a traditional x86 computer equipped with a recent GPU).

Qualcomm AI Research employed "full-stack AI optimizations" to deploy Stable Diffusion on an Android smartphone for the very first time, at least with the kind of performance described by the company. Full-stack AI means that Qualcomm had to tailor the application, the neural network model, the algorithms, the software and even the hardware, though some compromises were clearly required to get the job done.

First and foremost, Qualcomm had to shrink the single-precision floating-point data format (FP32) used by Stable Diffusion to the lower-precision INT8 data type. By using the post-training quantization in its newly created AI Model Efficiency Toolkit (AIMET), the company was able to greatly increase performance while also saving power and maintaining model accuracy at this lower precision, without the need for costly retraining.
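To make the FP32-to-INT8 step more concrete, here is a minimal sketch of symmetric, per-tensor post-training quantization in plain Python. It is a generic illustration of the idea, not AIMET's API or Qualcomm's actual pipeline; the function names (`compute_scale`, `quantize`, `dequantize`, `footprint_gb`) and the sample weights are assumptions made up for this example. The footprint estimate counts weights only and ignores activations and runtime overhead.

```python
# Illustrative only: symmetric per-tensor INT8 quantization of a few weights.
# All names and values are hypothetical; this is not AIMET's API.

def compute_scale(weights):
    """Pick a scale so the largest absolute weight maps to 127."""
    max_abs = max(abs(w) for w in weights)
    return max_abs / 127.0 if max_abs > 0 else 1.0

def quantize(weights, scale):
    """Round each weight to the nearest INT8 step, clamped to [-127, 127]."""
    return [max(-127, min(127, round(w / scale))) for w in weights]

def dequantize(q_weights, scale):
    """Map INT8 values back to approximate floating-point weights."""
    return [q * scale for q in q_weights]

def footprint_gb(num_params, bytes_per_param):
    """Rough weight-storage estimate in gigabytes (weights only)."""
    return num_params * bytes_per_param / 1e9

# Made-up sample weights standing in for one trained tensor.
weights = [0.82, -1.27, 0.003, 0.5, -0.31]
scale = compute_scale(weights)
q = quantize(weights, scale)
restored = dequantize(q, scale)

# Worst-case rounding error introduced by quantization for this tensor.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

# Back-of-the-envelope storage for a one-billion-parameter model.
fp32_gb = footprint_gb(1_000_000_000, 4)  # 4 bytes per FP32 weight
int8_gb = footprint_gb(1_000_000_000, 1)  # 1 byte per INT8 weight
```

No retraining happens here: the scale is derived from the already-trained weights, which is what makes the quantization "post-training." For the one-billion-parameter figure quoted by Qualcomm, the same arithmetic puts weight storage at roughly 4 GB in FP32 versus 1 GB in INT8.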

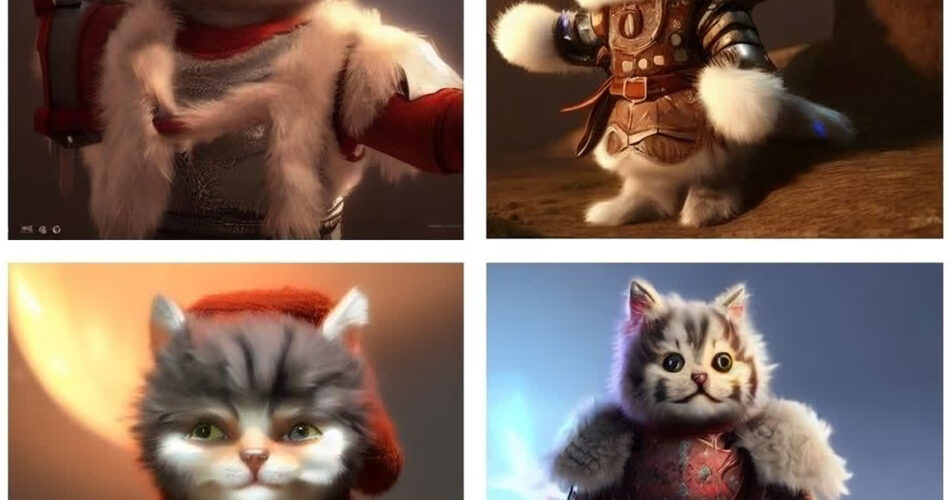

The result of this full-stack optimization was the ability to run Stable Diffusion on a phone, generating a 512 x 512 pixel image in under 15 seconds for 20 inference steps. This is the fastest inference on a smartphone and "comparable to cloud latency," Qualcomm said, while user input for the text prompt remains "completely unconstrained."

Running Stable Diffusion on a phone is just the beginning, Qualcomm said, as the ability to run large AI models on edge devices provides many benefits in terms of reliability, latency, privacy, efficiency, and cost. Furthermore, full-stack optimizations for AI-based hardware accelerators can easily be used for other platforms such as laptops, XR headsets and "virtually any other device powered by Qualcomm Technologies."
