What just happened? Researchers have discovered that popular image-generation models can be prompted to produce recognizable images of real people, potentially endangering their privacy. Some prompts cause the AI to copy an existing image rather than create something new, and these copies might contain copyrighted material. Worse still, modern generative AI models can memorize and reproduce private data that was scraped for use in AI training sets.

The researchers extracted more than a thousand training examples from the models, ranging from photos of individual people to movie stills, copyrighted news images, and trademarked company logos, and found that the AI reproduced many of them almost identically. The study was carried out by researchers from universities such as Princeton and Berkeley, as well as from the tech sector, specifically Google and DeepMind.

The same team worked on an earlier study that identified a similar issue with AI language models, specifically GPT-2, the predecessor of OpenAI's wildly successful ChatGPT. Reuniting the band, the team, under the guidance of Google Brain researcher Nicholas Carlini, obtained its results by feeding image captions, such as a person's name, to Google's Imagen and to Stable Diffusion. They then checked whether any of the generated images matched the original images in the models' training data.

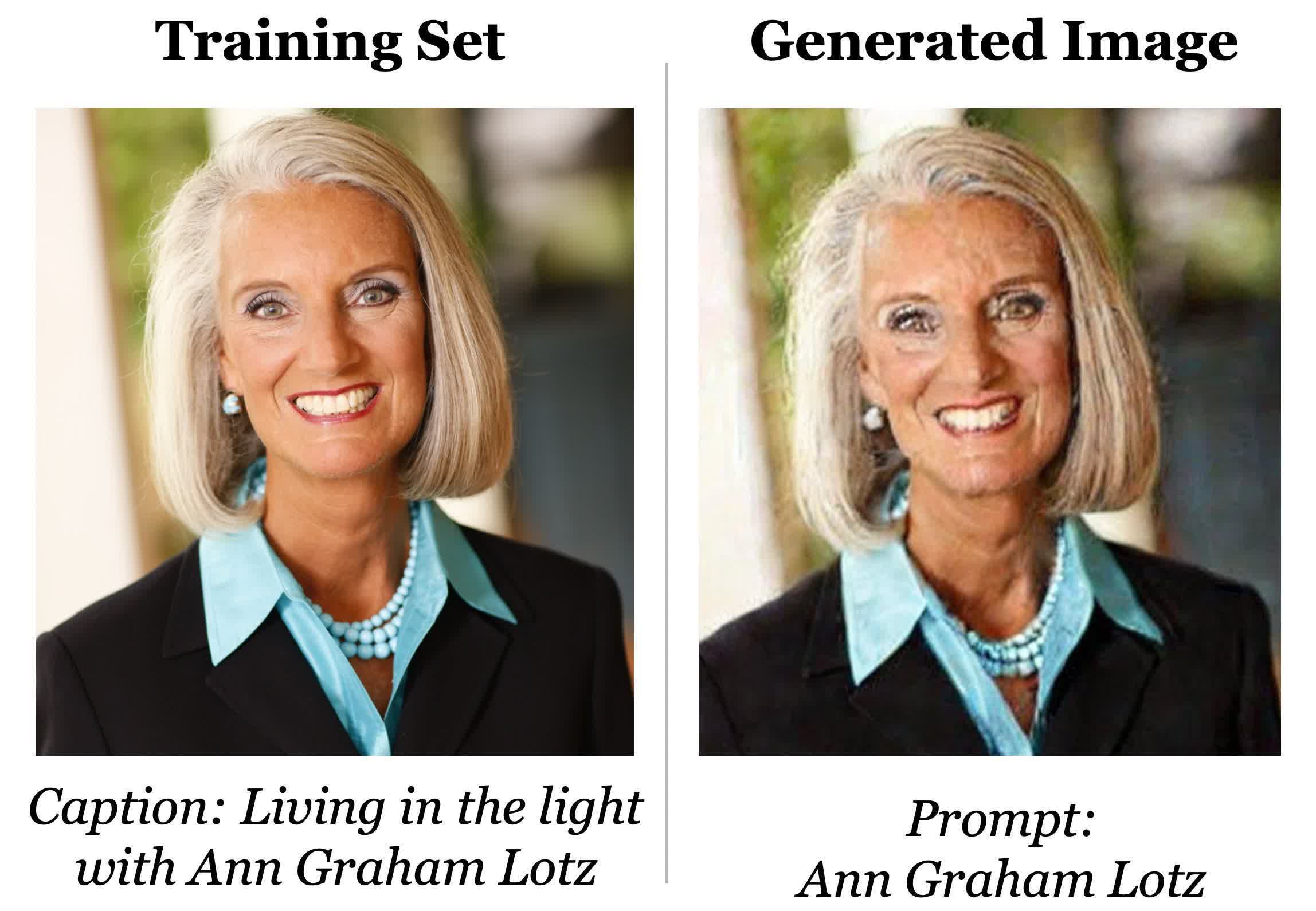

The image above was generated from Stable Diffusion's training dataset, the multi-terabyte scraped image collection known as LAION, using a caption specified in that dataset. When the researchers entered the caption as a Stable Diffusion prompt, the model produced the same image, only slightly warped by digital noise. The team ran the same prompt repeatedly and then manually verified whether the image was part of the training set.
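To make the repeated-prompt check concrete, here is a minimal sketch in Python. It is a toy, not the researchers' code: images are assumed to be flat lists of pixel values in [0, 1], `toy_generate` is a hypothetical stand-in for a diffusion model that sometimes returns a noisy copy of a training image, and the root-mean-square (RMS) pixel distance with a 0.1 cutoff is an assumed similarity test rather than the study's exact metric.

```python
import random
from typing import Callable, List, Sequence

Image = List[float]  # toy stand-in: a flattened list of pixel intensities in [0, 1]


def rms_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Root-mean-square pixel difference between two equal-sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


def count_regurgitations(
    generate: Callable[[str], Image],
    caption: str,
    training_image: Image,
    runs: int,
    threshold: float,
) -> int:
    """Run the same caption repeatedly and count outputs that nearly copy the training image."""
    hits = 0
    for _ in range(runs):
        output = generate(caption)
        if rms_distance(output, training_image) < threshold:
            hits += 1
    return hits


rng = random.Random(0)
training_image = [rng.random() for _ in range(64)]


def toy_generate(caption: str) -> Image:
    # Hypothetical model (ignores the caption): about 30% of the time it returns
    # the training image plus slight noise, otherwise an unrelated random image.
    if rng.random() < 0.3:
        return [min(1.0, max(0.0, p + rng.gauss(0, 0.02))) for p in training_image]
    return [rng.random() for _ in range(64)]


hits = count_regurgitations(
    toy_generate, "a caption from the dataset", training_image, runs=100, threshold=0.1
)
print(f"{hits} of 100 generations nearly copy the training image")
```

Counting how often repeated runs land near the same training image, rather than trusting a single output, reflects the idea of running the prompt many times before checking; the cutoff value here is arbitrary.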

The researchers noted that a non-memorized output can still faithfully represent the text prompt the model was given, but it would not have the same pixel makeup and would differ from every training image.
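The distinction between memorized and non-memorized outputs can be sketched as a pixel-distance test. In this hypothetical example, `looks_memorized` flags a generated image only if it sits within an assumed RMS threshold of some training image. A slightly shifted copy counts as memorized, while a mirrored gradient (a stand-in for an output showing the same subject with a different pixel layout) does not. The threshold and toy images are illustrative assumptions, not values from the study.

```python
from typing import Sequence


def rms_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Root-mean-square pixel difference between two equal-sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


def looks_memorized(
    generated: Sequence[float],
    training_images: Sequence[Sequence[float]],
    threshold: float = 0.05,  # assumed cutoff for "near-identical"
) -> bool:
    """True if the generated image is pixel-level close to any training image."""
    return any(rms_distance(generated, t) < threshold for t in training_images)


training = [i / 15 for i in range(16)]          # toy training image: a 0-to-1 gradient
memorized = [p + 0.01 for p in training]        # nearly the same pixels
non_memorized = training[::-1]                  # same values, different layout

print(looks_memorized(memorized, [training]))      # True
print(looks_memorized(non_memorized, [training]))  # False
```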

Florian Tramèr, professor of computer science at ETH Zurich and a participant in the research, noted significant limitations to the findings. The images the researchers were able to extract either recurred frequently in the training data or stood out markedly from the rest of the images in the dataset. According to Tramèr, people with unusual names or appearances are more likely to be 'memorized.'

According to the researchers, diffusion models are the least private type of image-generation model: compared to generative adversarial networks (GANs), an earlier class of image model, they leak more than twice as much training data. The aim of the research is to alert developers to the privacy risks of diffusion models. These include the potential misuse and duplication of copyrighted and sensitive private data, such as medical images, and vulnerability to external attacks in which training data can be easily extracted. One fix the researchers suggest is identifying duplicate images in the training set and removing them from the data collection.
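A rough sketch of the deduplication fix, assuming the same toy representation of images as flat pixel lists and an arbitrary RMS threshold for "near-identical": `deduplicate` (a hypothetical helper, not the researchers' tooling) keeps the first copy of each image and drops any later near-duplicates.

```python
from typing import List, Sequence


def rms_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Root-mean-square pixel difference between two equal-sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


def deduplicate(
    images: Sequence[Sequence[float]], threshold: float = 0.05
) -> List[Sequence[float]]:
    """Greedily keep an image only if it is not near-identical to one already kept."""
    kept: List[Sequence[float]] = []
    for img in images:
        if all(rms_distance(img, k) >= threshold for k in kept):
            kept.append(img)
    return kept


a = [0.0] * 8 + [1.0] * 8          # toy training image
a_copy = [p + 0.01 for p in a]     # near-duplicate of a
b = [0.5] * 16                     # a distinct image

result = deduplicate([a, a_copy, b, a])
print(len(result))  # 2
```

The pairwise comparison grows quadratically with the number of images, so it only suits small examples; deduplicating a collection the size of LAION would typically rely on hashing or embedding-based nearest-neighbor search instead.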
