Analysis: After countless delays, Intel’s long-awaited Sapphire Rapids Xeon Scalable processors are finally here, but who are they for?

Intel’s 4th-gen Xeons are launching into arguably the most competitive CPU market in at least the past two decades. AMD is no longer the only threat. Ampere has steadily gained share among cloud, hyperscale, and OEM partners, Amazon has gone all-in on Graviton, and Nvidia’s first-gen Grace CPUs are just around the corner.

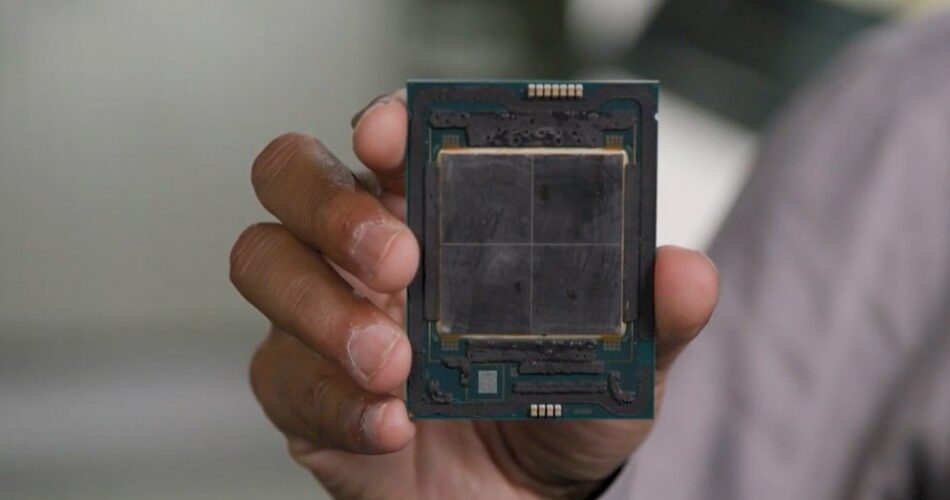

On paper, Intel’s 4th-gen Xeons are a feat of engineering. They’re not only Intel’s first to use a chiplet architecture or feature on-package High Bandwidth Memory, but they’re also its first Xeons to support DDR5, PCIe 5.0, and Compute Express Link (CXL). Had they launched on schedule at the end of 2021, they might have been the game changer Intel was looking for to beat back AMD’s advances. But that didn’t happen.

Amazon’s 64-core Graviton3 beat Intel to market with DDR5 and PCIe 5.0 support, while AMD’s 96-core Epyc 4 added CXL support two months before Intel. Even Nvidia’s upcoming Grace CPUs have more cores — 72 per die, up to 144 per package — than Intel’s flagship.

So, in this increasingly crowded CPU market, where does Intel fit, and who are these chips even for?

All about the accelerators

In its launch marketing, Intel focused heavily on optimizations for 5G, AI inferencing, HPC, and database workloads, showing off more than 50 SKUs across nearly a dozen product categories.

This emphasis isn’t really that surprising. These workload-optimized chips pack a slew of specialized accelerator blocks for speeding up cryptographic, analytics, AI, and data-streaming workloads. On most SKUs, customers that can’t take advantage of these features now will be able to unlock them down the line for an additional fee.

Intel’s reasons for pursuing workload-optimized silicon go back to when Sapphire Rapids first entered development. At the time, AMD’s Epyc wasn’t the market-share-stealing threat it is today. Intel dominated datacenters then and still does, but its grip is slipping.

For Intel, investing in specialized accelerator blocks was a way to grow beyond general-purpose compute and break into new market segments, many of which have long relied on dedicated accelerator cards or FPGAs. The idea was that Intel’s CPUs might be more expensive, but they’d be cheaper and less power-hungry than running both a CPU and a dedicated accelerator.

If you’re wondering why AMD hasn’t embraced fixed-function accelerators in its chips, it’s because until recently the company focused much of its energy on winning share in the mainstream, general-purpose compute segment with its big, core-heavy Epyc processors.

From what we can tell, AMD has been quite successful too, racking up wins among cloud providers and hyperscalers alike. For AMD, this strategy makes a lot of sense because the majority of existing workloads don’t or can’t take advantage of these kinds of accelerators just yet.

In an interview with The Register, Bill Chen, who leads server product management at Supermicro, estimated that between 60 and 70 percent of customers will never take advantage of Intel’s custom accelerators.

With that said, it won’t be long before we start seeing workload-optimized chips from AMD as well. Its 128-core Bergamo chips, which are optimized for cloud-native workloads, are due out this year, and Team Red also has a telco- and edge-focused chip called Siena in development. And because of how AMD’s chiplet architecture is implemented, it wouldn’t be surprising to see AMD start dropping Xilinx- or Pensando-derived chiplets into its CPUs for AI or I/O acceleration.

You’ve probably guessed the catch already. Like most accelerators, they’re only useful if your software is optimized to use them. For the workloads that can, analysts tell The Register, Intel’s 4th-Gen Xeons should perform well.

“I expect Sapphire Rapids to do extremely well in workloads that Intel is accelerating with its fixed-function blocks,” Patrick Moorhead, principal analyst at Moor Insights & Strategy, said. “These include low-latency AI inference, encryption, data streaming, in-memory database queries, cryptography, and vRAN.”

And while they will take time to integrate into popular software platforms, SemiAnalysis’ Dylan Patel tells The Register that Intel’s Advanced Matrix Extensions (AMX) should be among the easier accelerators to implement.

AMX is designed to accelerate deep learning workloads such as natural language processing and recommendation systems, and it complements Intel’s existing AVX-512 instruction set. The new AI extension still needs to be called by the software, but software support for it already exists, he explained. “If you’ve trained an AI model, there’s not much work” to be done.
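For a sense of what “called by the software” involves at the lowest level, here is a minimal sketch, assuming a Linux host, of how a program might check whether the CPU advertises AMX before choosing an AMX code path. The flag names amx_tile, amx_bf16, and amx_int8 are the ones the Linux kernel reports in /proc/cpuinfo; the helper functions are hypothetical, and in practice AI frameworks and math libraries typically handle this dispatch themselves.

```python
# Hypothetical helpers: detect AMX support from Linux /proc/cpuinfo.
# Assumes a Linux host; the flag names are those the Linux kernel reports.
# Note: Linux also requires a process to request AMX tile-state permission
# (arch_prctl with ARCH_REQ_XCOMP_PERM) before running AMX instructions;
# that step is not shown here.
from pathlib import Path

AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags_from_cpuinfo(text: str) -> set[str]:
    """Return the AMX-related flags listed on cpuinfo-style 'flags' lines."""
    found: set[str] = set()
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            found |= AMX_FLAGS & set(value.split())
    return found


def host_has_amx(cpuinfo_path: str = "/proc/cpuinfo") -> bool:
    """True if the host advertises the base AMX tile feature."""
    path = Path(cpuinfo_path)
    if not path.exists():  # non-Linux hosts have no /proc/cpuinfo
        return False
    # amx_tile is the base feature; amx_bf16/amx_int8 add the multiply ops.
    return "amx_tile" in amx_flags_from_cpuinfo(path.read_text())


if __name__ == "__main__":
    print("AMX available:", host_has_amx())
```

A real application would usually branch once at startup on a check like this, falling back to an AVX-512 or plain code path when the flags are absent.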

And while not an accelerator per se, Intel’s HBM-stacked Xeon Max SKUs will no doubt find a home in HPC applications. Argonne National Lab’s Aurora supercomputer, currently under development, is among Intel’s first customers. Even HPE’s Cray product division is offering a slew of new systems based on Sapphire Rapids, breaking with a long tradition of using AMD Epyc parts.

Databases remain an Intel stronghold

Looking beyond dedicated accelerators, Patel expects Intel will continue to sell well among those running large distributed and in-memory databases like those offered by SAP and Oracle.

Intel has long held an architectural advantage over AMD’s Epyc in this regard, and he expects that will continue to be the case even with Intel’s move to a chiplet architecture.

“Intel may be going chiplet, but the fact they’re doing it with a mesh architecture… is both a positive and a negative,” he said. “The negative is that they will not be able to scale to as many cores and it’ll cost more power to scale up in core counts, but the positive is that they have these advantages in certain workloads.”

Patel explains that Intel has managed to keep chiplet-to-chiplet latency relatively low, in part because it has stuck with a mesh interconnect. This, he explains, makes Sapphire Rapids less scalable than AMD’s Epyc but allows it to maintain more predictable latency between cores, regardless of which die they occupy.

By comparison, AMD uses multiple eight-core dies — as many as 12 on Genoa — that offer solid on-die latency, but don’t perform as well for workloads that span multiple chiplets.

As a result, “some database applications are going to continue to be better on Intel,” Patel said.
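A crude way to see why cross-chiplet traffic matters on Genoa is to ask how often two cores picked at random land on the same die. The sketch below assumes, as a simplification, that every core is equally likely to share data with every other core; real schedulers and well-tuned software try to keep communicating threads on the same die. The die and core counts are the Genoa figures cited above.

```python
from fractions import Fraction


def same_die_pair_fraction(dies: int, cores_per_die: int) -> Fraction:
    """Chance that two distinct, uniformly chosen cores sit on the same die."""
    total = dies * cores_per_die
    if total < 2:
        raise ValueError("need at least two cores")
    # Fix the first core; the second must be one of the other
    # (cores_per_die - 1) cores on its die, out of (total - 1) candidates.
    return Fraction(cores_per_die - 1, total - 1)


genoa = same_die_pair_fraction(dies=12, cores_per_die=8)
print(f"Genoa, 12 x 8 cores: {genoa} of core pairs share a die ({float(genoa):.1%})")
# prints: Genoa, 12 x 8 cores: 7/95 of core pairs share a die (7.4%)
```

By this measure, about 93 percent of core pairs on a fully populated Genoa part span two dies, whereas a single monolithic die would score 1. It ignores how fast each hop is, which is exactly where Intel’s mesh is meant to help.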

Fewer cores, more sockets

Essentially every current and upcoming CPU platform offers higher core counts than Intel’s top-specced Xeons, at least at the socket level. At the platform level, Intel is still uncontested, with Sapphire Rapids scaling to as many as eight sockets.

For instance, on launch day, Inspur Systems unveiled a 6U, eight-socket system that features 128 DDR5 DIMM slots and is designed for large-scale core databases like SAP HANA and for cloud service provider workloads. Maxed out with 60-core parts, that works out to 480 cores and 960 threads.

Ampere’s Altra Max and AMD’s Epyc 4 may have more cores — 128 and 96 respectively — but they’re both capped at a maximum of two sockets, which means the densest configuration you can hope for is 192 cores in the case of AMD and 256 cores on the Altra Max platform.

So even though other platforms offer more cores per socket, if you’re after the most cores in a single system and can’t distribute your workload across multiple blades, Intel’s 4th-Gen Xeons are still the only game in town.
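The per-system ceilings above are simple multiplication, and a short sketch makes the socket-versus-system trade-off explicit. Cores per socket and socket limits are the figures cited in this piece; the threads-per-core values (two-way SMT on Intel and AMD, one thread per core on Ampere’s Altra Max) are our addition.

```python
from typing import NamedTuple


class Platform(NamedTuple):
    cores_per_socket: int
    max_sockets: int
    threads_per_core: int  # SMT ways; Altra Max cores are single-threaded

    def max_cores(self) -> int:
        return self.cores_per_socket * self.max_sockets

    def max_threads(self) -> int:
        return self.max_cores() * self.threads_per_core


PLATFORMS = {
    "Intel 4th-gen Xeon": Platform(60, 8, 2),
    "AMD Epyc 4 (Genoa)": Platform(96, 2, 2),
    "Ampere Altra Max": Platform(128, 2, 1),
}

for name, p in PLATFORMS.items():
    print(f"{name}: {p.max_sockets} x {p.cores_per_socket} = "
          f"{p.max_cores()} cores, {p.max_threads()} threads")
# Intel 4th-gen Xeon: 8 x 60 = 480 cores, 960 threads
# AMD Epyc 4 (Genoa): 2 x 96 = 192 cores, 384 threads
# Ampere Altra Max: 2 x 128 = 256 cores, 256 threads
```

Intel loses on the per-socket number in every row but wins the per-system total, which is the whole argument for the eight-socket SKUs.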

Wait, this thing costs how much?!?

As you might expect, supporting such large multi-socket configurations comes at a cost, and we’re not just talking about memory latency. Intel’s socket-scalable SKUs aren’t cheap: its top-spec 60-core Xeon has a recommended retail price of $17,000.

For comparison, AMD’s top-of-the-line 96-core Epyc 9654 has a list price of $11,805, making it more than $5,000 less expensive while boasting 60 percent more cores.
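The flagship comparison boils down to three numbers: the dollar gap, the core-count ratio, and the price ratio. A quick check using only the list prices and core counts cited here:

```python
intel_price, intel_cores = 17_000, 60  # Intel's top-spec 60-core Xeon
amd_price, amd_cores = 11_805, 96      # AMD Epyc 9654

price_gap = intel_price - amd_price    # dollars saved by going AMD
core_ratio = amd_cores / intel_cores   # AMD cores per Intel core
price_ratio = intel_price / amd_price  # Intel price per AMD dollar

print(f"AMD is ${price_gap:,} cheaper with {core_ratio - 1:.0%} more cores; "
      f"Intel costs {price_ratio - 1:.0%} more")
# AMD is $5,195 cheaper with 60% more cores; Intel costs 44% more
```

Note the two percentages measure different things: one compares core counts, the other compares prices.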

While it’s a bit dicey to compare Intel and AMD’s chips without getting into benchmarks, we can look at price per core, a metric that cloud providers clearly value given how much they’ve invested in Ampere’s Altra CPUs in recent years.

To make this as fair a comparison as possible, we’ll look at Intel and AMD’s 48-core parts. Intel’s 8468 has a base clock of 2.1GHz, an all-core boost clock of 3.1GHz, and a list price of $7,214. That works out to approximately $150/core.

Meanwhile, AMD’s 9454 offers the same number of cores, but with a 2.75GHz base clock, a 3.65GHz all-core boost clock, and a list price of $5,225. That works out to about $109/core.

Intel’s 8468 is a performance-oriented part, so perhaps the better comparison is AMD’s 9474F, which has a list price of $6,780. Where things fall apart for Intel is clock speed: the 9474F’s base clock is 500MHz higher than the 8468’s all-core boost.
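Price per core is just list price divided by core count. The sketch below uses the list prices quoted above; the 9474F’s 48-core count is not stated in the text and is taken from AMD’s spec, so treat it as an assumption to verify.

```python
# List prices (USD) and core counts for the 48-core parts discussed above.
# Assumption: the Epyc 9474F is a 48-core part (AMD spec, not stated here).
PARTS = {
    "Xeon 8468": (7_214, 48),
    "Epyc 9454": (5_225, 48),
    "Epyc 9474F": (6_780, 48),
}


def price_per_core(price: int, cores: int) -> float:
    return price / cores


for name, (price, cores) in PARTS.items():
    print(f"{name}: ${price_per_core(price, cores):,.2f}/core")
# Xeon 8468: $150.29/core
# Epyc 9454: $108.85/core
# Epyc 9474F: $141.25/core
```

Even the frequency-optimized 9474F comes in under the Xeon 8468 per core, before clock speeds enter the picture.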

The situation doesn’t get any more favorable for Intel when you compare a dual-socket Xeon 8468 against AMD’s single-socket 96-core Epyc 9654P. Just looking at the CPU cost, the AMD platform is nearly $4,000 less expensive, while still offering higher all-core clock speeds. What’s more, assuming we stick to each vendor’s rated TDPs, the AMD platform will use roughly half the power.
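The two-socket versus one-socket comparison can be reproduced with a few multiplications. Only the Xeon 8468 list price comes from this piece; the Epyc 9654P list price ($10,625) and the rated TDPs (350W for the 8468, 360W for the 9654P) are our assumed figures and should be checked against the vendors’ spec sheets.

```python
# Assumed figures (not stated in the article): Epyc 9654P list price $10,625,
# Xeon 8468 TDP 350 W, Epyc 9654P TDP 360 W. Verify against vendor specs.
XEON_8468_PRICE, XEON_8468_TDP_W = 7_214, 350
EPYC_9654P_PRICE, EPYC_9654P_TDP_W = 10_625, 360

intel_sockets = 2
intel_cpu_cost = intel_sockets * XEON_8468_PRICE  # two CPUs' list price
intel_tdp_w = intel_sockets * XEON_8468_TDP_W     # combined rated TDP

savings = intel_cpu_cost - EPYC_9654P_PRICE
power_ratio = EPYC_9654P_TDP_W / intel_tdp_w

print(f"2P Xeon 8468: ${intel_cpu_cost:,}, {intel_tdp_w} W")
print(f"1P Epyc 9654P: ${EPYC_9654P_PRICE:,}, {EPYC_9654P_TDP_W} W")
print(f"AMD saves ${savings:,} and draws {power_ratio:.0%} of the rated TDP")
# 2P Xeon 8468: $14,428, 700 W
# 1P Epyc 9654P: $10,625, 360 W
# AMD saves $3,803 and draws 51% of the rated TDP
```

TDP is a rating rather than measured draw, so the power figure is only a rough proxy for what each system would pull under load.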

Looking purely at general-purpose workloads, Patel says AMD has a clear advantage when it comes to the cost to own and operate these systems.

“It’s a huge advantage for Genoa in terms of power, performance, and density,” he said. “While these accelerators will be great for a subset of workloads, the important point is that… the default is quickly becoming AMD, not Intel.”

Awkward timing

The timing of the Sapphire Rapids launch could pose a challenge for buyers as well. Given how many delays the platform has faced, customers would be forgiven for waiting until later this year for Intel to work out the kinks with Emerald Rapids.

“It’s a bit of an awkward launch, given that it’s been delayed so much, the next-generation chips are not so far away,” Patel said.

However, Patel doesn’t think customers that want or need to go Intel should necessarily wait. Emerald Rapids is shaping up to be a refinement of Sapphire Rapids. What’s more, the upcoming platform, assuming it ships this year, will be a drop-in replacement for Sapphire Rapids. ®
