Humans can no longer reliably tell the difference between a real human face and an image of a face generated by artificial intelligence, according to a pair of researchers.

Two boffins – Sophie Nightingale from the Department of Psychology at the UK’s Lancaster University and Hany Farid from Berkeley’s Electrical Engineering and Computer Sciences Department in California – studied human evaluations of both real photographs and AI-synthesized images, leading them to conclude nobody can reliably tell the difference anymore.

In one part of the study – published in the Proceedings of the National Academy of Sciences USA – participants sorting faces into real and fake were right just 48.2 per cent of the time, slightly worse than the 50 per cent they could expect from guessing.

In another part of the study, participants were given some training and feedback to help them spot the fakes. That cohort's accuracy rose only to 59 per cent, and it plateaued at that point.

The third part of the study saw participants rate how trustworthy each face looked on a scale of one to seven. On average, fake faces were rated as more trustworthy than real ones.

“A smiling face is more likely to be rated as trustworthy, but 65.5 per cent of our real faces and 58.8 per cent of synthetic faces are smiling, so facial expression alone cannot explain why synthetic faces are rated as more trustworthy,” wrote the researchers.

The fake images were created using generative adversarial networks (GANs), a class of machine learning frameworks in which two neural networks compete against each other, pushing one of them to produce increasingly convincing content.

The technique starts with a random array of pixels and iteratively learns to create a face. Meanwhile, a second network, the discriminator, learns after each iteration to tell the synthesized faces apart from real ones, and whenever it succeeds, the generator is penalized. Eventually, the discriminator can’t tell the difference between real and synthesized faces and – voila! – apparently neither can a human.
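To make that adversarial loop a little more concrete, here is a deliberately tiny sketch in Python. It is not StyleGAN2 or anything the researchers used: the "images" are single numbers, the hypothetical generator just shifts random noise by a learnable offset `b`, and the discriminator is a one-feature logistic regression. The names `ToyGAN` and `train`, the "real" data centred on 4, and the use of the common non-saturating generator loss are all illustrative assumptions.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """Hypothetical 1-D GAN: fake sample = noise + b; D(x) = sigmoid(w*x + c)."""

    def __init__(self) -> None:
        self.b = 0.0  # generator parameter (offset added to random noise)
        self.w = 0.0  # discriminator weight
        self.c = 0.0  # discriminator bias

    def generate(self, noise):
        return [z + self.b for z in noise]

    def discriminate(self, x: float) -> float:
        # Discriminator's estimated probability that x is real
        return sigmoid(self.w * x + self.c)

    def discriminator_loss(self, real, fake) -> float:
        # Binary cross-entropy with real samples labelled 1 and fakes labelled 0
        total = -sum(math.log(self.discriminate(x)) for x in real)
        total -= sum(math.log(1.0 - self.discriminate(x)) for x in fake)
        return total / (len(real) + len(fake))

    def generator_loss(self, fake) -> float:
        # Generator is penalised when the discriminator rates its fakes as unlikely to be real
        return -sum(math.log(self.discriminate(x)) for x in fake) / len(fake)

    def discriminator_step(self, real, fake, lr: float) -> None:
        # One gradient-descent step on discriminator_loss
        n = len(real) + len(fake)
        grad_w = grad_c = 0.0
        for x in real:
            err = self.discriminate(x) - 1.0
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = self.discriminate(x)
            grad_w += err * x
            grad_c += err
        self.w -= lr * grad_w / n
        self.c -= lr * grad_c / n

    def generator_step(self, noise, lr: float) -> None:
        # One gradient-descent step on generator_loss; d/db of -log D(z + b) is -(1 - D(z + b)) * w
        grad_b = sum(-(1.0 - self.discriminate(z + self.b)) * self.w for z in noise)
        self.b -= lr * grad_b / len(noise)


def train(steps: int = 2000, batch: int = 64, lr: float = 0.05, seed: int = 0) -> ToyGAN:
    rng = random.Random(seed)
    gan = ToyGAN()
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # "real" data centred on 4
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        # Alternate: first sharpen the discriminator, then update the generator against it
        gan.discriminator_step(real, gan.generate(noise), lr)
        gan.generator_step(noise, lr)
    return gan
```

Calling `train()` alternates the two updates. While the fakes sit below the real data, the discriminator learns a positive weight and the generator's offset `b` is pushed upward toward the real centre; plain gradient-based GAN training like this is known to oscillate around that point rather than settle on it exactly.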

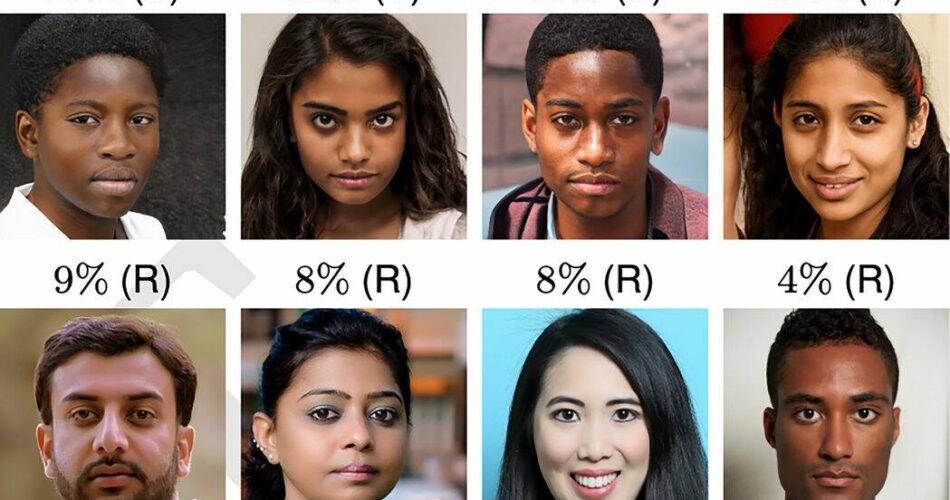

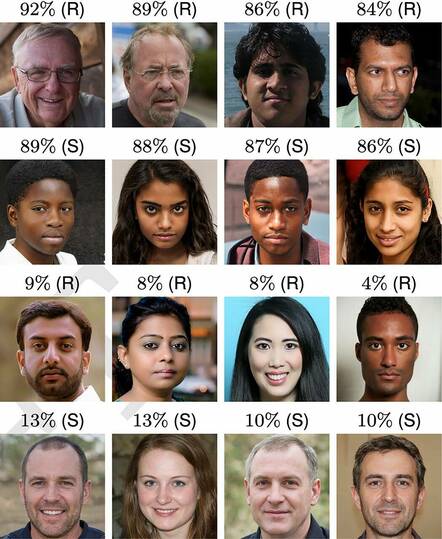

The final images used in the study included a diverse set of 400 real and 400 synthesized faces representing Black, South Asian, East Asian and White people. Both male and female faces were included, unlike previous studies that primarily used White male faces.

White faces were the least accurately classified, and male White faces were even less accurately classified than female White ones.

“We hypothesize that White faces are more difficult to classify because they are overrepresented in the StyleGAN2 training dataset and are therefore more realistic,” explained the researchers.

The scientists said that while creating realistic faces is a success, it also opens the door to nefarious uses of fake images, such as nonconsensual intimate imagery (often misnamed as “revenge porn”), fraud, and disinformation campaigns. Such activities, they wrote, have “serious implications for individuals, societies, and democracies.”

The authors suggested that those developing such technologies should consider whether the benefits outweigh the risks – and if they don’t, just don’t create the tech. Perhaps recognizing that tech with big downsides is irresistible to some, they also recommended developing safeguards in parallel, including guidelines to mitigate the potential harm caused by synthetic media technologies.

Efforts to improve the detection of deepfakes and similar media are under way, such as prototype software capable of detecting images made with neural networks. A collaboration between Michigan State University (MSU) and Facebook AI Research (FAIR) last year went further, proposing a way to infer details of the architecture of the neural network used to create an image.

But The Register recommends against taking any of Meta’s deepfake-debunking efforts at … erm … face value. After all, its founder has been known to put out images himself that will never ever ever ever leave the uncanny valley, thereby proving that, as narrow as that valley has become as a result of this study, it’s here to stay. ®
