AlphaICs says it has put its first AI chip from design to production on a shoestring budget of $10m in venture-capital funding.

The four-year-old startup on Monday announced it was sampling the chip, which is called Gluon, to customers for evaluation. The biz endured delays amid the global chip supply crunch to get completed components from TSMC, and the next step is to get customer feedback on how the chip performs, Prashant Trivedi, AlphaICs co-founder, told The Register.

The accelerator can deliver 8 TOPS of INT8 performance. The company is targeting neural-network computer-vision systems that include the recognition of faces and classification of objects in smart-city-style environments, retail settings, and autonomous robot and driving applications.

“We are starting with the AI inference chip,” Trivedi said. “As we move further we will increase the compute as well as get learning included in the chip.”

Semiconductor startups attracted close to $19.2bn in funding last year, according to data from S&P. The vast majority are fabless, and the biggest challenge for them is to get components into production, especially during the semiconductor shortage.

Chip shortages aren’t a big issue for startups as they aren’t shipping in volume, but it is tough to get capacity at a manufacturer like TSMC on small orders, said John Abbott, an analyst at S&P Global.

“If everyone’s going through TSMC, then they haven’t got the capacity either. And then they’ll choose the best customers, the ones they really want to keep,” Abbott said. “Startups will have difficulty in getting supply.” Apple and Qualcomm are among TSMC’s top customers and are commanding its production facilities at the moment.

Livin’ on the edge

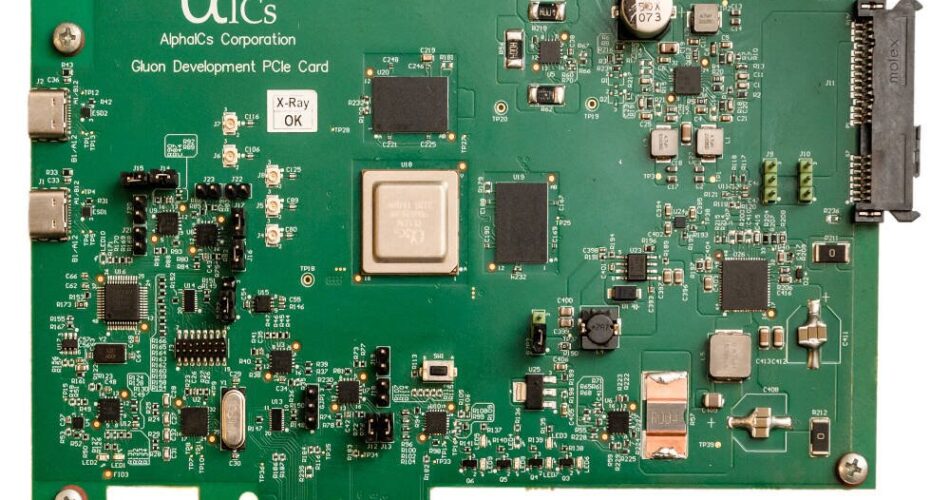

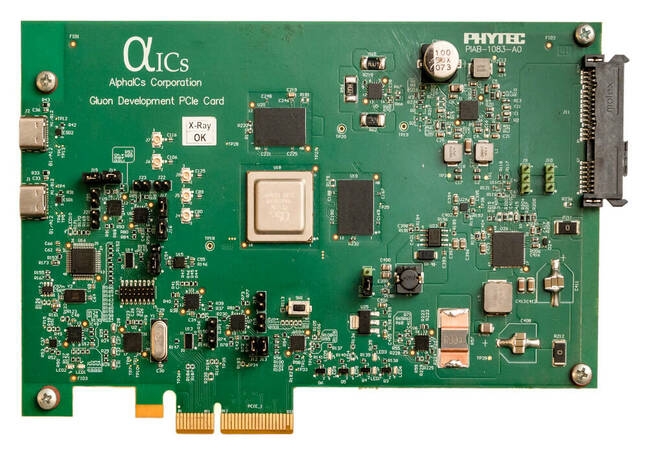

AlphaICs was co-founded in 2016 by ex-Intel executive Vinod Dham, who is known as the Father of the Pentium. The outfit bagged seed funding of around $2.5m and a further $8m in investment, and shipped FPGA-based evaluation boards that implemented its hardware designs. Its latest Gluon processor is fabbed using TSMC’s 16nm FinFET process node. It uses PCIe to interface with Linux-based host systems, and interfaces with LPDDR4 DRAM. As an accelerator, it fits into a host, receives data and trained models over PCIe and returns its predictions, as directed by its software stack. It accepts models built using TensorFlow, for one thing.

Gluon is made up of a number of what AlphaICs calls agents. Each agent has a set of tensor processing units, which do the matrix multiplications necessary for machine-learning inference, and a scalar processor core for control. This first edition of Gluon will have 16 tensor units per agent, and 16 agents.
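As a rough back-of-the-envelope check, the agent and tensor-unit counts above can be combined with the quoted 8 TOPS INT8 figure. The sketch below assumes the peak throughput is spread evenly across all tensor units, which AlphaICs has not confirmed; the function names are illustrative only.

```python
# Figures quoted in the article for the first Gluon chip.
AGENTS = 16
TENSOR_UNITS_PER_AGENT = 16
PEAK_INT8_TOPS = 8.0


def total_tensor_units(agents: int, units_per_agent: int) -> int:
    """Total tensor units on the chip."""
    return agents * units_per_agent


def per_unit_gops(peak_tops: float, units: int) -> float:
    """Peak throughput per tensor unit in GOPS.

    Assumption: peak TOPS is divided evenly across units.
    """
    return peak_tops * 1000.0 / units


units = total_tensor_units(AGENTS, TENSOR_UNITS_PER_AGENT)
print(units)                                 # 256
print(per_unit_gops(PEAK_INT8_TOPS, units))  # 31.25
```

Under that even-split assumption, each of the 256 tensor units would contribute roughly 31 GOPS of peak INT8 throughput.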

Trivedi said Gluon uses a novel instruction set architecture, and that it can merge instructions and reduce memory requests where possible to squeeze out extra performance. Although the aim initially is inference (getting a trained model to make a decision based on input data), the startup hopes that by scaling up its agent and tensor unit count, its Gluon family will have enough TOPS of performance to do learning at the network edge. That means training a model not on a big backend server but in an embedded device.

“Gluon is primarily focused on low-power, like a three to five-watt system that can run and take three or four camera inputs and can do that job. But then going forward, we think edge learning is a pretty novel idea,” Trivedi said. Learning at the edge could potentially solve a problem in AI called model drift, in which bad data can put a training model off track, he explained.

It is hoped that products with built-in learning at the edge will refine their decision-making faster, and filter out distorted data to maintain the accuracy of larger training models. Model drift was an issue for some during the pandemic, with sudden changes in supply chain trends throwing AI models, which were based on long-term trends, off balance.

“You train your system on data or image distribution, when it actually goes in the field that changes. Then you get into problems if your accuracy drops, and you have to retrain it. That is something we are trying to solve with edge learning,” Trivedi said.
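The accuracy drop Trivedi describes can be illustrated with a simple monitor that compares recent field accuracy against the accuracy measured at training time. This is a minimal sketch, not AlphaICs' method: the `DriftMonitor` class, its thresholds, and the assumption that ground-truth labels are available in the field are all hypothetical.

```python
from collections import deque


class DriftMonitor:
    """Flags possible model drift when rolling accuracy falls below a baseline.

    Hypothetical helper: assumes each field prediction can later be
    checked against a ground-truth label.
    """

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05,
                 window: int = 100):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window` outcomes are kept.
        self.results = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        """Store whether the latest prediction was correct."""
        self.results.append(1 if correct else 0)

    def accuracy(self):
        """Rolling accuracy over the window, or None if no data yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def drifted(self) -> bool:
        """True once the window is full and accuracy is below baseline - tolerance."""
        if len(self.results) < self.results.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.9, tolerance=0.05, window=10)
for _ in range(10):
    monitor.record(True)
print(monitor.drifted())  # False: field accuracy matches training

for _ in range(5):
    monitor.record(False)
print(monitor.drifted())  # True: rolling accuracy fell to 0.5
```

In a conventional deployment a flag like this would trigger retraining on a backend server; the edge-learning pitch is that the device itself would adapt instead.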

The company, which has offices in Silicon Valley and India and a presence in Japan, expects to ship a successor to Gluon, called Dolphin, that will deliver 32 TOPS of performance, and a 64 TOPS chip code-named Einstein in 2023. ®
