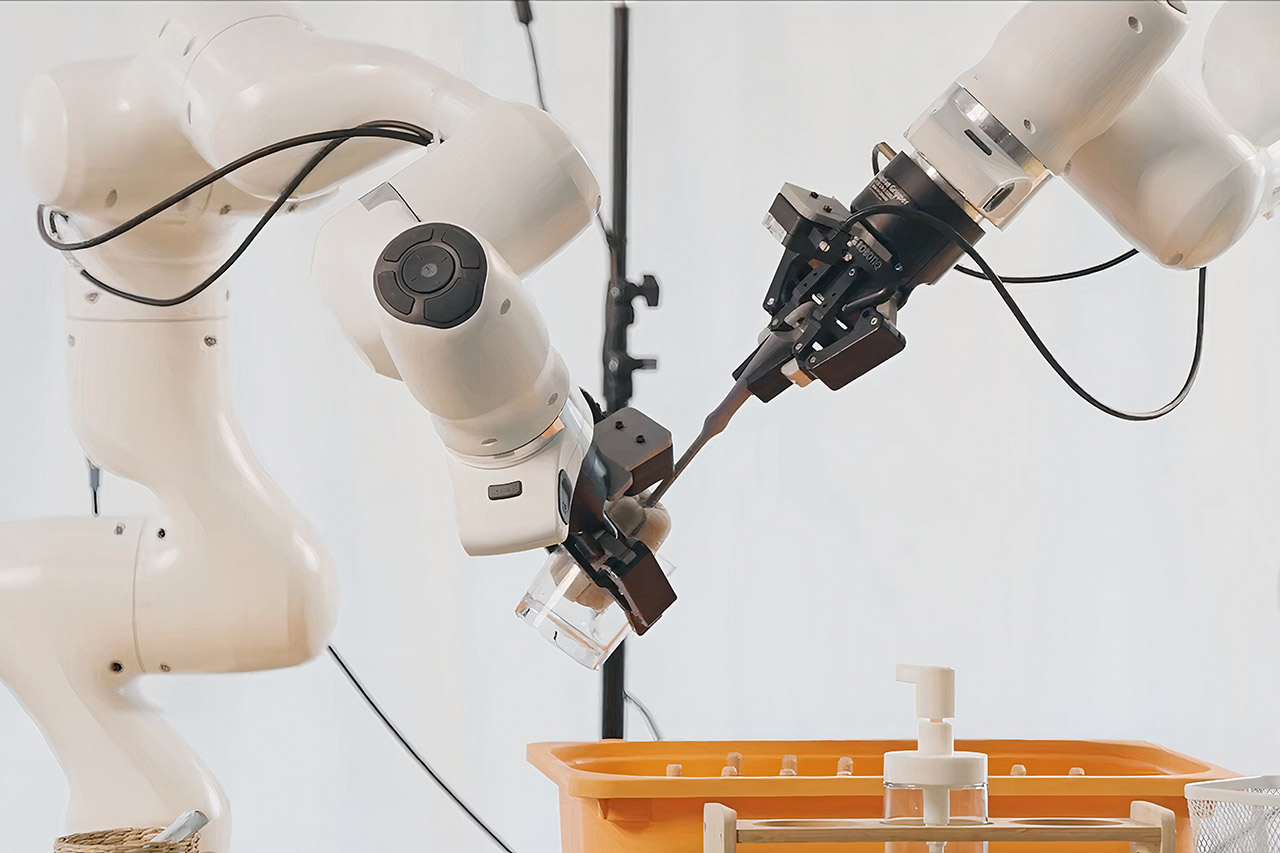

Researchers at Robbyant, an Ant Group subsidiary, have just released a powerful new vision-language-action model after collecting 20,000 hours of real-world data from 9 different dual-arm robot setups. It is arguably the most powerful general-purpose controller available, able to handle a wide range of manipulation tasks on a variety of hardware without the need for frequent retooling.

Robbyant started with a pre-trained vision-language model named Qwen2.5-VL, which can already interpret images and text. They then added an action module on top of it, which converts that understanding into precise robot actions. The system takes as input a sequence of camera views of the workspace, a natural language instruction describing the task, and a representation of the robot's state, including its joints and grippers. It then predicts what it will do next and uses a technique known as flow matching to make the control signals smooth and continuous, rather than issuing discrete step-by-step commands.
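To give a rough feel for how flow matching produces an action, here is a minimal sketch rather than Robbyant's implementation. It starts from random noise and integrates a velocity field from t = 0 to t = 1 with small Euler steps. The names `toy_velocity` and `sample_action_chunk` are hypothetical, and the velocity function is a hand-written stand-in for the learned action module: it is the exact field for the simplified case where the data consists of a single target action.

```python
import random


def toy_velocity(x, t, target):
    """Hypothetical stand-in for the learned network.

    For straight-line paths x_t = (1 - t) * noise + t * target, the ideal
    velocity toward a single known target is (target - x) / (1 - t).
    A real model would predict this from images, text, and robot state.
    """
    return [(a - xi) / (1.0 - t) for xi, a in zip(x, target)]


def sample_action_chunk(target, steps=10, seed=0):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with fixed Euler steps."""
    rng = random.Random(seed)
    # Start from Gaussian noise with the same shape as the action vector.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    dt = 1.0 / steps
    for k in range(steps):
        t = k * dt  # t stays strictly below 1, so no division by zero
        v = toy_velocity(x, t, target)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


if __name__ == "__main__":
    # Assumed example: three joint targets in radians.
    print(sample_action_chunk([0.1, -0.2, 0.3]))
```

Because each step moves the sample a little along a continuous path, the output changes smoothly instead of jumping between discrete commands, which is the property the article attributes to flow matching.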

The more data it collects, the better it becomes, and tests suggest that performance really takes off when going from a few thousand hours to 20,000. This is similar to the pattern seen in language models as datasets grow in size. It appears that robot intelligence may follow the same trend if we keep feeding it more data.

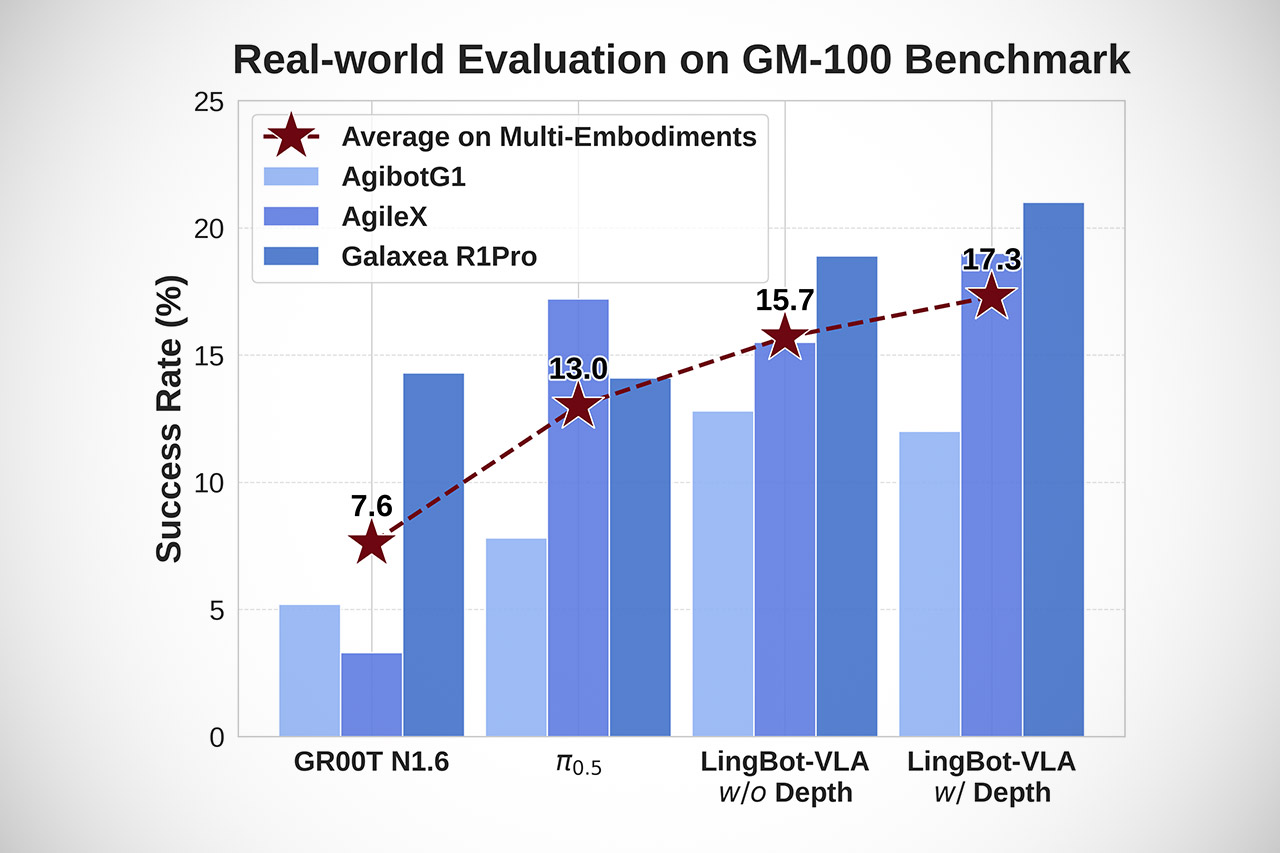

They didn't stop there: they also added depth perception, which makes a big difference. A companion model called LingBot-Depth provides an accurate 3D view of the world, and when the two models are combined, the system improves considerably in spatial judgment, distance estimation, and shape recognition. In a real-world test with 100 tough tasks across three different robot platforms, the depth-augmented model came out on top, outperforming its competition by several percentage points on both completion and progress metrics.
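The article does not define the completion and progress metrics, so the following is only an assumed interpretation for illustration: completion counts a trial as a success when every subtask is done, while progress averages the fraction of subtasks finished per trial. The function names and the trial data are hypothetical.

```python
def success_rate(trials):
    """Fraction of trials in which every subtask was completed (assumed definition)."""
    return sum(all(subtasks) for subtasks in trials) / len(trials)


def progress_score(trials):
    """Mean fraction of subtasks completed per trial (assumed definition)."""
    return sum(sum(subtasks) / len(subtasks) for subtasks in trials) / len(trials)


if __name__ == "__main__":
    # Made-up example: four trials, two subtasks each.
    trials = [[True, True], [True, False], [False, False], [True, True]]
    print(success_rate(trials), progress_score(trials))
```

Under this reading, a progress score rewards partial success on long tasks, which is why a model can lead on one metric by a different margin than on the other.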

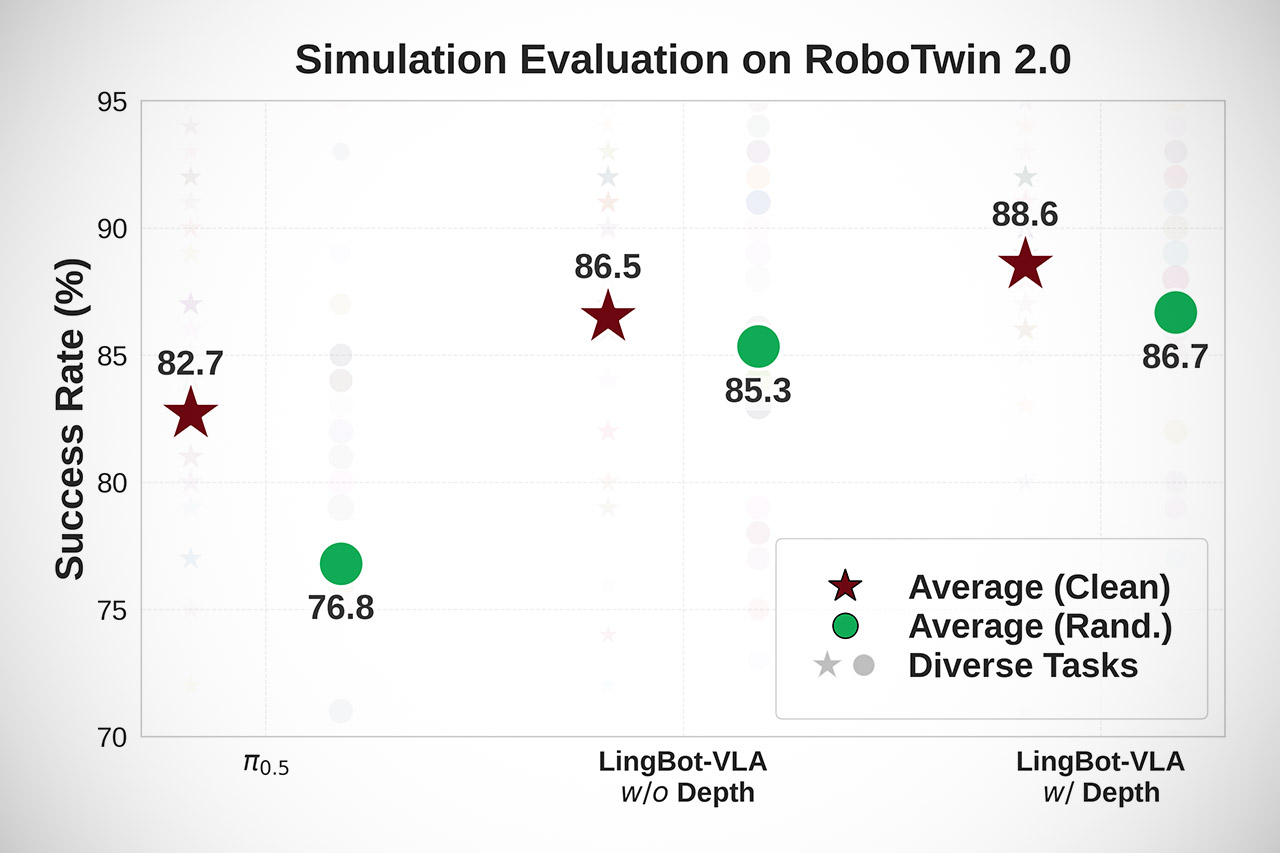

Simulator tests showed the same result: the model remained robust even when subjected to random variations in lighting, object positions, visual distractions, and so on. It surpassed other models by about ten points in success rate. That matters when moving from a controlled lab to a real-world setting.

Robbyant also ensured the system was easy to train and use. The code runs 1.5 to 2.8 times faster than rival open frameworks. Data handling, distributed processing, and low-level operator fusion have all been optimized to make training faster and cheaper. This should make it much easier for people to try out new tasks and hardware.

Adapting this approach to a new robot is fairly straightforward; all you need is a small demonstration set with at most 80 demos per task. Once the model is fine-tuned, performance transfers smoothly because the foundation already carries a large knowledge base of objects, actions, and instructions. This is made possible by the idea of a single shared controller that can serve a variety of machines; think of it as a common software library that gets installed on all of these different devices and requires only a small amount of configuration.

Everything is fully open now. The model weights have been posted to Hugging Face in 4 parameter variants, with and without the extra depth integration. The team has also uploaded the entire training pipeline, all of the evaluation tools, and some benchmark data to GitHub, so anyone who wants to build on this or push the boundaries further is welcome to do so. Ant Group's researchers believe that the only way to make real progress with physical AI is to create models that are high-quality, portable, and able to run at large scale without breaking the bank.
