For the previous decade, image SEO was largely a matter of technical hygiene:

- Compressing JPEGs to appease impatient guests.

- Writing alt textual content for accessibility.

- Implementing lazy loading to maintain LCP scores within the inexperienced.

Whereas these practices stay foundational to a wholesome website, the rise of enormous, multimodal fashions resembling ChatGPT and Gemini has launched new prospects and challenges.

Multimodal search embeds content material varieties right into a shared vector house.

We at the moment are optimizing for the “machine gaze.”

Generative search makes most content material machine-readable by segmenting media into chunks and extracting textual content from visuals by way of optical character recognition (OCR).

Photos have to be legible to the machine eye.

If an AI can not parse the textual content on product packaging as a result of low distinction or hallucinates particulars due to poor decision, that could be a major problem.

This text deconstructs the machine gaze, shifting the main focus from loading pace to machine readability.

Technical hygiene nonetheless issues

Earlier than optimizing for machine comprehension, we should respect the gatekeeper: efficiency.

Photos are a double-edged sword.

They drive engagement however are sometimes the first explanation for structure instability and gradual speeds.

The usual for “ok” has moved past WebP.

As soon as the asset masses, the true work begins.

Dig deeper: How multimodal discovery is redefining SEO in the AI era

Designing for the machine eye: Pixel-level readability

To large language models (LLMs), photos, audio, and video are sources of structured knowledge.

They use a course of referred to as visible tokenization to interrupt a picture right into a grid of patches, or visible tokens, changing uncooked pixels right into a sequence of vectors.

This unified modeling permits AI to course of “an image of a [image token] on a desk” as a single coherent sentence.

These programs depend on OCR to extract textual content straight from visuals.

That is the place high quality turns into a rating issue.

If a picture is closely compressed with lossy artifacts, the ensuing visible tokens change into noisy.

Poor decision may cause the mannequin to misread these tokens, resulting in hallucinations during which the AI confidently describes objects or textual content that don’t really exist as a result of the “visible phrases” had been unclear.

Reframing alt textual content as grounding

For big language fashions, alt textual content serves a brand new perform: grounding.

It acts as a semantic signpost that forces the mannequin to resolve ambiguous visible tokens, serving to verify its interpretation of a picture.

As Zhang, Zhu, and Tambe noted:

- “By inserting textual content tokens close to related visible patches, we create semantic signposts that reveal true content-based cross-modal consideration scores, guiding the mannequin.”

Tip: By describing the bodily features of the picture – the lighting, the structure, and the textual content on the item – you present the high-quality coaching knowledge that helps the machine eye correlate visible tokens with textual content tokens.

The OCR failure factors audit

Search brokers like Google Lens and Gemini use OCR to learn elements, directions, and options straight from photos.

They’ll then reply complicated person queries.

Consequently, picture search engine marketing now extends to bodily packaging.

Present labeling rules – FDA 21 CFR 101.2 and EU 1169/2011 – permit kind sizes as small as 4.5 pt to six pt, or 0.9 mm, on compact packaging.

- “In case of packaging or containers the biggest floor of which has an space of lower than 80 cm², the x-height of the font dimension referred to in paragraph 2 shall be equal to or better than 0.9 mm.”

Whereas this satisfies the human eye, it fails the machine gaze.

The minimum pixel resolution required for OCR-readable textual content is much larger.

Character peak needs to be at the least 30 pixels.

Low contrast can also be a problem. Distinction ought to attain 40 grayscale values.

Be cautious of stylized fonts, which might trigger OCR programs to mistake a lowercase “l” for a “1” or a “b” for an “8.”

Past distinction, reflective finishes create extra issues.

Shiny packaging displays mild, producing glare that obscures textual content.

Packaging needs to be handled as a machine-readability function.

If an AI can not parse a packaging picture due to glare or a script font, it might hallucinate info or, worse, omit the product totally.

Originality as a proxy for expertise and energy

Originality can really feel like a subjective inventive trait, however it may be quantified as a measurable knowledge level.

Unique photos act as a canonical sign.

The Google Cloud Imaginative and prescient API features a function referred to as WebDetection, which returns lists of fullMatchingImages – actual duplicates discovered throughout the online – and pagesWithMatchingImages.

In case your URL has the earliest index date for a singular set of visible tokens (i.e., a particular product angle), Google credit your web page because the origin of that visible info, boosting its “expertise” rating.

Dig deeper: Visual content and SEO: How to use images and videos

Get the e-newsletter search entrepreneurs depend on.

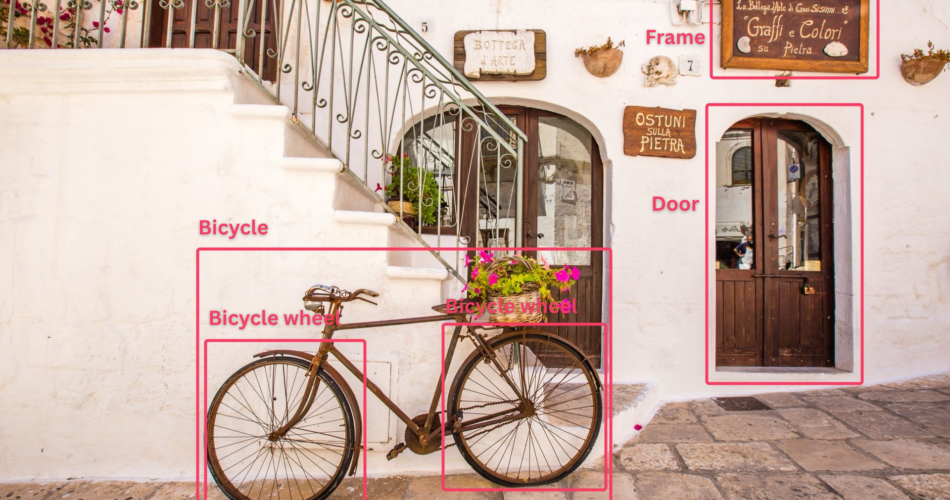

The co-occurrence audit

AI identifies each object in a picture and makes use of their relationships to deduce attributes a few model, worth level, and audience.

This makes product adjacency a rating sign. To guage it, it’s essential audit your visible entities.

You’ll be able to take a look at this utilizing instruments such because the Google Imaginative and prescient API.

For a scientific audit of a whole media library, it’s essential pull the uncooked JSON utilizing the OBJECT_LOCALIZATION function.

The API returns object labels resembling “watch,” “plastic bag” and “disposable cup.”

Google offers this example, the place the API returns the next info for the objects within the picture:

Title

mid

Rating

Bounds

Bicycle wheel

/m/01bqk0

0.89648587

(0.32076266, 0.78941387), (0.43812272, 0.78941387), (0.43812272, 0.97331065), (0.32076266, 0.97331065)

Bicycle

/m/0199g

0.886761

(0.312, 0.6616471), (0.638353, 0.6616471), (0.638353, 0.9705882), (0.312, 0.9705882)

Bicycle wheel

/m/01bqk0

0.6345275

(0.5125398, 0.760708), (0.6256646, 0.760708), (0.6256646, 0.94601655), (0.5125398, 0.94601655)

Good to know: mid comprises a machine-generated identifier (MID) akin to a label’s Google Knowledge Graph entry.

The API doesn’t know whether or not this context is nice or dangerous.

You do, so verify whether or not the visible neighbors are telling the identical story as your price ticket.

By photographing a blue leather-based watch subsequent to a classic brass compass and a heat wood-grain floor, Lord Leathercraft engineers a particular semantic sign: heritage exploration.

The co-occurrence of analog mechanics, aged metallic, and tactile suede infers a persona of timeless journey and old-world sophistication.

{Photograph} that very same watch subsequent to a neon power drink and a plastic digital stopwatch, and the narrative shifts by way of dissonance.

The visible context now indicators mass-market utility, diluting the entity’s perceived worth.

Dig deeper: How to make products machine-readable for multimodal AI search

Quantifying emotional resonance

Past objects, these fashions are more and more adept at studying sentiment.

APIs, resembling Google Cloud Imaginative and prescient, can quantify emotional attributes by assigning confidence scores to feelings like “pleasure,” “sorrow,” and “shock” detected in human faces.

This creates a brand new optimization vector: emotional alignment.

In case you are promoting enjoyable summer season outfits, however the fashions seem moody or impartial – a standard trope in high-fashion pictures – the AI might de-prioritize the picture for that question as a result of the visible sentiment conflicts with search intent.

For a fast spot verify with out writing code, use Google Cloud Vision’s live drag-and-drop demo to evaluation the 4 major feelings: pleasure, sorrow, anger, and shock.

For constructive intents, resembling “blissful household dinner,” you need the enjoyment attribute to register as VERY_LIKELY.

If it reads POSSIBLE or UNLIKELY, the sign is just too weak for the machine to confidently index the picture as blissful.

For a extra rigorous audit:

- Run a batch of photos by way of the API.

- Look particularly on the faceAnnotations object within the JSON response by sending a FACE_DETECTION function request.

- Evaluation the chance fields.

The API returns these values as enums or mounted classes.

This instance comes straight from the official documentation:

"rollAngle": 1.5912293,

"panAngle": -22.01964,

"tiltAngle": -1.4997566,

"detectionConfidence": 0.9310801,

"landmarkingConfidence": 0.5775582,

"joyLikelihood": "VERY_LIKELY",

"sorrowLikelihood": "VERY_UNLIKELY",

"angerLikelihood": "VERY_UNLIKELY",

"surpriseLikelihood": "VERY_UNLIKELY",

"underExposedLikelihood": "VERY_UNLIKELY",

"blurredLikelihood": "VERY_UNLIKELY",

"headwearLikelihood": "POSSIBLE"

The API grades emotion on a hard and fast scale.

The objective is to maneuver major photos from POSSIBLE to LIKELY or VERY_LIKELY for the goal emotion.

UNKNOWN(knowledge hole).VERY_UNLIKELY(robust unfavourable sign).UNLIKELY.POSSIBLE(impartial or ambiguous).LIKELY.VERY_LIKELY(robust constructive sign – goal this).

Use these benchmarks

You can’t optimize for emotional resonance if the machine can barely see the human.

If detectionConfidence is beneath 0.60, the AI is struggling to establish a face.

Consequently, any emotion readings tied to that face are statistically unreliable noise.

- 0.90+ (Ultimate): Excessive-definition, front-facing, well-lit. The AI is for certain. Belief the sentiment rating.

- 0.70-0.89 (Acceptable): Adequate for background faces or secondary way of life photographs.

- The face is probably going too small, blurry, side-profile, or blocked by shadows or sun shades.

Whereas Google documentation doesn’t present this steerage, and Microsoft gives limited access to its Azure AI Face service, Amazon Rekognition documentation notes that:

- “[A] decrease threshold (e.g., 80%) would possibly suffice for figuring out relations in photographs.”

Closing the semantic hole between pixels and that means

Deal with visible belongings with the identical editorial rigor and strategic intent as major content material.

The semantic hole between picture and textual content is disappearing.

Photos are processed as a part of the language sequence.

The standard, readability, and semantic accuracy of the pixels themselves now matter as a lot because the key phrases on the web page.

Contributing authors are invited to create content material for Search Engine Land and are chosen for his or her experience and contribution to the search group. Our contributors work below the oversight of the editorial staff and contributions are checked for high quality and relevance to our readers. Search Engine Land is owned by Semrush. Contributor was not requested to make any direct or oblique mentions of Semrush. The opinions they specific are their very own.

Source link