Since you’re reading a blog on advanced analytics, I’m going to assume that you have been exposed to the magical and amazing awesomeness of experimentation and testing.

It truly is the bee’s knees.

You are likely aware that there are entire sub-cultures (and their attendant Substacks) dedicated to the most granular ideas around experimentation (usually of the landing page optimization variety). There are fat books to teach you how to experiment (or die!). People have become Gurus hawking it.

The magnificent cherry on this delicious cake: It is super easy to get started. There are really easy-to-use free tools, and tools that are extremely affordable and also good.

ALL IT TAKES IS FIVE MINUTES!!!

And yet, chances are you really don’t know anyone directly who uses experimentation as a part of their regular business practice.

Wah wah wah waaah.

How is this possible?

It turns out experimentation, even of the simple landing page variety, is insanely difficult for reasons that have nothing to do with the capacity of tools, or the brilliance of the individual or the team sitting behind the tool (you!).

It is everything else:

Company. Processes. Ideas. Creatives. Speed. Insights worth testing. Public relations. HiPPOs. Business complexity. Execution. And more.

Today, from the blood, sweat and tears I’ve shed working on the front lines, two reflections:

1. What does a robust experimentation program contain?

2. Why do so many experimentation programs end in disappointing failure?

My hope is that these reflections will inspire a stronger assessment of your company, culture, and people, which will, in turn, trigger corrective steps resulting in a regular, robust, remarkable testing program.

First, a little step back to imagine the bigger picture.

Robust & Remarkable Experimentation – so much more than LPO!

The entire online experimentation canon is filled with landing page optimization type testing.

The last couple of pages of a 52,000-word book might have ideas for testing the cart and checkout pages that pay off big, or for exploring the limits of comparison tables/charts/text or support options, or even how you write support FAQs. (Premium subscribers see TMAI #257).

Yes. You can test landing pages. But there is so, so, so much more to experimentation than that.

So. Much!

A. As an example, we have a test in-market right now to determine how much of the money we are spending on Bing is truly incremental.

(As outlined in TMAI Premium #223, experiments that answer the priceless marketing incrementality question are the single most effective thing you can do to optimize your marketing budgets. This experimentation eats landing page optimization for breakfast, lunch, dinner, and late-night snack.)

B. Another frequent online experimentation strategy is to use matched-market tests to figure out diminishing return curves for the online-offline media-mix in our marketing plans.

(Should we spend $5 mil on Facebook and YouTube, and $10 mil on TV, or vice versa or something else?)

C. We are routinely live testing our ads on YouTube with different creatives to check for effectiveness, or the same ad against different audiences/day parts/targeting strategies to find the performance maxima. So delicious.

(Bonus read: Four types of experiments you can run on Facebook: A/B, Holdout, Brand Survey, and Campaign Budget.)

D. Perhaps one of the most difficult ones is to figure out complex relationships over long periods of time: Does brand advertising (sometimes hideously also called “upper funnel”) actually drive performance outcomes in the long run? A question whose answer is worth millions of dollars.

(Any modern analysis of consumer behavior clearly identifies that the “marketing funnel” does not exist. People are not lemmings you can shove down a path convenient to lazy Marketers. Evolve: See – Think – Do – Care: An intent-centric marketing framework.)

E. Oh, oh, oh, and our long-term favorite: Does online advertising on TikTok, Snap, and Hulu actually drive phone sales in AT&T, Verizon and T-Mobile stores in the real world? The answer will surprise you – and it is priceless!

Obviously, you should also be curious about the inverse.

(Print and Billboards are surprisingly effective at driving digital outcomes! Yes. Print. Yes. Billboards! Well-designed experiments often prove us wrong – with data.)

F. One of the coolest things we’ve done over the last year is to figure out how to measure the true, incremental brand lift of each of our large campaigns as a whole.

You, like us, probably measure change in Unaided Awareness, Consideration, Intent across individual digital channels. This is helpful and not all that difficult to accomplish. But, if you spent $20 mil across five digital platforms, what was the overall de-duped brand lift from all that money?

The answer is not easy, you can get it only via experimentation, and it is invaluable to a CMO.
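To make idea F a little more concrete: one common design keeps a randomized holdout group out of all of the campaign’s platforms and asks the same brand survey question of exposed and holdout respondents. Because the comparison is “exposed to anything” vs. “exposed to nothing,” the lift is de-duplicated by construction. Below is a minimal sketch of the arithmetic, assuming a simple survey metric such as Unaided Awareness; the function name and all the counts are hypothetical, and the interval is a normal approximation, not necessarily the method your survey vendor uses.

```python
from math import sqrt
from statistics import NormalDist


def brand_lift(aware_exposed, n_exposed, aware_holdout, n_holdout, confidence=0.95):
    """Campaign-wide lift in a survey metric vs. a randomized holdout (hypothetical helper).

    Returns (absolute lift, relative lift, confidence interval for the absolute lift).
    The interval uses a normal approximation to the difference of two proportions.
    """
    p_exposed = aware_exposed / n_exposed
    p_holdout = aware_holdout / n_holdout
    lift = p_exposed - p_holdout
    se = sqrt(p_exposed * (1 - p_exposed) / n_exposed
              + p_holdout * (1 - p_holdout) / n_holdout)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return lift, lift / p_holdout, (lift - z * se, lift + z * se)


# Hypothetical survey results: 8,000 respondents in each group.
lift, relative, (low, high) = brand_lift(2_400, 8_000, 2_200, 8_000)
print(f"Absolute lift {lift:+.1%}, relative lift {relative:+.0%}, "
      f"95% CI [{low:+.1%}, {high:+.1%}]")
```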

These are six initial ideas from our work to stretch your mind about what a truly robust experimentation program should solve for! (The space is so stuffed with possibilities, we’ll cover more in future Premium newsletters.)

Next time you hear the phrase testing and experimentation, it is OK to think of landing page optimization first. But. To stop there would be heartbreaking. Keep going to ideas A – F above. That is how you’ll build a robust AND remarkable experimentation practice that’ll deliver a deep and profound impact.

Let me go out on a limb and say something controversial: A robust experimentation program (A – F above) holds the power to massively overshadow the impact you will deliver via a robust digital analytics program.

Note: That sentiment comes from someone who has written two best-selling books on digital analytics!

With the above broad set of exciting possibilities as context (and a new to-do list for you!), I’ll sharpen the lens a bit to focus on patterns that I’ve identified as root causes for the failure of experimentation programs.

From your efforts to build a robust landing page optimization practice to complex matched-market tests for identifying diminishing return curves, these challenges cover them all.

9 Reasons Experimentation Programs Fail Gloriously!

As I’d mentioned above, the barrier to experimentation is neither tools (choose from free or extremely affordable) nor Analysts (I’m assuming you have them).

Here is a collection of underappreciated reasons why neither you nor anyone you directly know has an experimentation program worth its salt:

1. Not planning for the first five tests to succeed.

I know how hard what I’m about to say is. I truly do.

Your first few tests simply can’t fail.

It takes so much to get going. Such high stakes given the people, process, permissions, politics, implementation issues involved.

You somehow pull all that off, your first test delivers inconclusive (or “weird”) results, and there is giant disappointment (no matter how trivial the test was). You and I promised a lot to get this program going; so many employees, leaders, egos, and teams were involved. It will be a big let-down.

Then your second and third tests fail or are underwhelming, and suddenly no one is taking your calls or replying to your emails.

It is unlikely you’ll get to the fifth one. The experimentation program is dead.

I hear you asking: But how can you possibly guarantee the first few tests will succeed?!

Look, it will be hard. But, you can plan smart:

Test significant differences.

Don’t test on 5% of the site traffic.

For the sake of all things holy, don’t test five shades of blue buttons.

Don’t test for a vanity metric.

Don’t pick a low traffic page/variation.

Have a hypothesis that is entirely based on an insight from your digital analytics data (for the first few) rather than opinions of your Executives (save those ideas for later).

And, all the ideas below.

Your first test delivers a winner, everyone breathes a sigh of relief.

Your fifth test delivers a winner, everyone realizes there’s something magical here, and they leave you alone with enough money and support for the program for a year. Now. You can start failing because you start bringing bold ideas – and a lot of them fail (as they should).

Bonus Tip: The more dramatic the differences you are testing, the smaller the sample size you’ll need, and the faster the results will arrive (because the expected effect is big). Additionally, you’ll reduce the chances of surrounding noise or unexpected variables impacting your experiment.
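To put numbers on the Bonus Tip, here is a minimal sketch using the standard normal-approximation sample-size formula for comparing two conversion rates. The 3% baseline conversion rate, the list of lifts, and the defaults (5% two-sided significance, 80% power) are illustrative assumptions, not figures from our tests.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH variation for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Bigger (more dramatic) expected lifts need far fewer visitors.
for lift in (0.02, 0.05, 0.10, 0.25):
    n = sample_size_per_variation(0.03, lift)
    print(f"{lift:.0%} relative lift on a 3% baseline: {n:,} visitors per variation")
```

Because the required sample scales roughly with one over the square of the difference, doubling the effect you are testing for cuts the sample to about a quarter.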

2. Starting at the start of the conversion process, rather than close to the end.

On average, a human on an ecommerce site will go through 12–28 pages from landing page to order confirmation.

If you are testing buttons, copy, layout, offer, cross-sells / upsells, other things, on the landing page, chances are any positive influence of that excellent test variation will get washed out by the time the site visitor is on page 3 or 5 (if not sooner).

If you plan to declare success for a test at the start of the user experience based on a result at the end of it… You are going to need a massive sample to detect a (likely weak) signal. Think: needing 800,000 humans to go through an experimental variation on the landing page just to detect an impact on conversion rate.

That will take time. Even if you get all that data, there are so many other variables changing across pages/experience for test participants that it will be really hard to establish clear causality. Bottom line: It comes with a high chance of experiment failure.
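The dilution argument can be put in numbers with Lehr’s rule of thumb: roughly 16 × p(1 − p) / Δ² visitors per variation for about 80% power at a two-sided 5% significance level, where Δ is the absolute difference you want to detect. The funnel rates and the “carryover” share below are hypothetical assumptions, chosen only to show how judging a landing-page test on the final order inflates the sample you need.

```python
def lehr_sample_size(rate, relative_lift):
    """Lehr's rule of thumb: visitors per variation for ~80% power, two-sided alpha = 0.05."""
    delta = rate * relative_lift  # absolute difference to detect
    return round(16 * rate * (1 - rate) / delta ** 2)


# Hypothetical funnel for a landing-page test.
landing_ctr = 0.40       # share of landing-page visitors who click deeper (the page's job)
downstream_rate = 0.075  # share of those clickers who eventually place an order
page_lift = 0.10         # the variation improves click-through by 10% (relative)
carryover = 0.20         # assume only 20% of that lift survives the pages in between

end_rate = landing_ctr * downstream_rate  # 3% landing-to-order conversion rate
end_lift = page_lift * carryover          # 2% relative lift in orders

print("Judged on click-through:", lehr_sample_size(landing_ctr, page_lift), "per variation")
print("Judged on orders:       ", lehr_sample_size(end_rate, end_lift), "per variation")
```

Under these assumptions, the same variation needs about 2,400 visitors per variation when judged on click-through, but well over a million when judged on orders.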

So, why not start at the end of the conversion process?

Run your first A/B test on the page with the Submit Order button.

Run your MVT test on the page that shows up after someone clicks Start Checkout.

Pick the page for the highest selling product on your site and use it for your first A/B test.

Etc.

The same goes for your Television experiments or online-to-offline conversion validations you need. Pick elements close to the end (sale) vs. at the start (do people like this creative or love it?).

The closer you are to the end, the higher the chances your test will show clear success or failure, and do so faster. This is great for earning your experimentation program executive love AND for impacting real business results at the same time.

In context of website experimentation, I realize how oxymoronic it sounds to say, do your landing page optimization on the last page of the customer experience. But that, my friends, is what it takes.

3. Hyping the impact of your experiments.

This is the thing that irritates me immensely, because of just how far back it sets experimentation culturally.

This is how it happens: You test an image/button/text on the landing page. It goes well. It improves conversion rate by 1.2% (huge, by the way), and that is, let’s say, $128,000 more in revenue (also wonderful).

You now take that number, annualize the impact, extend it out to all the landing pages, and go proclaim in a loud voice:

One experiment between blue and red buttons increased company revenue by $68 mil/year. ONE BUTTON!

You’ve seen bloggers, book authors and gurus claiming this in their tweets, articles and industry conference keynotes.

The Analysis Ninja that you are, you see the BS. The individual is ignoring varied influence across product portfolios, seasonality, diminishing returns, changes in the audience, and a million other things.

The $68 mil number is not helping.

Before making impossible-to-believe claims, standardize the change across 10 more pages, 15 more products, for five more months, in three more countries, etc. Then start making big, broad claims.

Another irritating approach taken by some to hype the impact of experiments: Adding up the impact of each experiment into a bigger total.

I’d mentioned above that you should try and make your first five tests succeed to build momentum. You do!

Test one: +$1 mil. Test two: +$2 mil. Test three, four, five: +$3, +$4, +$5 mil.

You immediately total that up and proudly start a PR campaign stating that the experimentation program added $15 mil in revenue.

Except… here’s the thing: if you now made all five changes permanent on your site and in your mobile app, the total impact will be less than $15 mil. Often a lot less.

Each change does not stack on top of the other. Diminishing returns. Incrementality. Analysis Ninjas know the drill.

So. Don’t claim $15 mil (or more) from making all the changes permanent.

Also, the knowledgeable amongst you would have already observed that the effect of any successful experiment will wear off over time. Not to zero. But it gets smaller with every passing week. Account for this reality in your PR campaign.
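Here is a minimal sketch of the gap between the hype math and a more honest annual estimate. It assumes, hypothetically, that the $128,000 from the example above was earned over a four-week test ($32,000 a week), that the fading part of the effect shrinks 5% per week, and that 30% of the effect never fades; all three numbers are placeholders for your own post-test measurements.

```python
def naive_annual(weekly_gain):
    """The hype version: assume the test-period gain repeats every week for a year."""
    return weekly_gain * 52


def decayed_annual(weekly_gain, weekly_decay, floor_share=0.0):
    """Sum 52 weeks of gain when the effect wears off over time.

    weekly_decay: fraction of the fading portion lost each week (hypothetical).
    floor_share:  share of the effect that persists indefinitely ("not to zero").
    """
    total = 0.0
    for week in range(52):
        fading = (1 - floor_share) * (1 - weekly_decay) ** week
        total += weekly_gain * (floor_share + fading)
    return total


weekly_gain = 128_000 / 4  # hypothetical four-week test
print(f"Naive annualized:   ${naive_annual(weekly_gain):,.0f}")
print(f"With decay assumed: ${decayed_annual(weekly_gain, 0.05, floor_share=0.3):,.0f}")
```

Under these placeholder assumptions the honest number is a little over half the naive one (about $0.92 mil vs. $1.66 mil), before you even consider stacking it with other tests or extending it to other pages.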

There is a tension between wanting to start something radical and being honest with the impact. You want to hype, I understand the pressure. Even if you aren’t concerned about the company, there are books to sell, speeches to give at conferences. Resist the urge.

When you claim outlandish impact, no one believes you. And in this game, credibility is everything.

You don’t want to win the battle but lose the war.

4. Overestimating your company’s ability to have good ideas.

I’ve never started an experimentation program worried that we wouldn’t have enough worthy ideas to test. I’m always wrong.

Unless you are running a start-up or a 40-person mid-sized business, your experimentation program is going to rely on a large number of senior executives, the creative team, the agency, multiple marketing departments and product owners, the digital team, the legal team, the analysts, and a boatload of other people to come up with ideas worth testing.

It is incredibly difficult to come up with good ideas. Among the reasons I’ve jotted down from my failures:

People lack imagination.

People fight each other for what’s worthy.

People have strong opinions for what is out of bounds for testing. (Sacré bleu, sacrosanct!)

People, more than you would like to admit, insult the User’s intelligence.

People will throw a wrench in the works more often than you like.

People will come up with two great ideas for new copy when you can test six – and then they can’t supply any more for five months.

Go in realizing that it will be extraordinarily difficult for you to fill your experimentation pipeline with worthy ideas. Plan for how to overcome that challenge.

Multivariate Testing sounds good, until you can’t come up with enough ideas to fill the cells, and so you wind up filling them with low-quality ideas. Tool capabilities currently outstrip our ability to use them effectively.

One of my strategies is to create an ideas democracy.

This insight is powerful: The C-Suite has not cornered the market on great ideas for what to test. The lay-folks, your peers in other departments, those not connected to the site/app, are almost perfect for ripping apart the existing user experience and filling your bucket of great ideas to test.

One of my solutions is to send an email out to Finance, Logistics, Customer Support, a wide diversity of employees. Invite them for an extended (free) lunch. We throw the site up on the conference room screen. Point out some challenges we see in data (the what) and ask for their hypotheses and ideas (the Why). We always left the room with 10 more ideas than we wanted!

My second source of fantastic ideas to test is the site surveys we ran. If you were unable to complete your task, what were you trying to do (or the variation, why not)? Your users will love to complain, your job is to create solutions for those complaints – and then test those solutions to see which ones reduce complaints!

My third source of solid ideas is online usability testing. There are lots of tools out there; check out Try My UI or Userfeel. It is wonderful how seeing frustration live sparks imagination. 🙂

Plan for the problem that your company will have a hard time coming up with good ideas. Without a source – imagination – of material good ideas, the greatest experimentation intent will amount to diddly squat.

5. Measuring final outcome, instead of what job the page is trying to do.

How do you know the Multivariate Test worked?

Revenue increased.

BZZZZT.

No.

Go back to the thought expressed in #2 above: An average experience is 12 to 28 pages to conversion.

If you are experimenting on page one, imagine how difficult it will be to know on page #18 if page #1 made a difference (when any of the pages between 1 and 18 could have sucked and killed the positive impact!).

For the Primary Success Criteria, I’m a fan of measuring the job the element / button / copy / configurator was trying to do.

On a landing page, it might be just trying to reduce the 98% bounce rate. Which variation of your experiment gets it down to 60%?

On a product page, it might be increasing product bundles added to the cart instead of the individual product.

On the checkout page, it might be increasing the use of promo codes, or reducing errors in typing phone numbers or addresses.

On a mobile app, success might mean easier recovery of the password to get into the app.

So on and so forth.

I’ll designate the Primary Success of the experiment based only on the job the page/element is trying to do (i.e., the hypothesis I’m testing). If it works, the test was a success (vs. declaring the page test a success based on what happens 28 pages later!).

In each case above, for my Secondary Success Criteria I’ll track the Conversion Rate or AOV or, for multiple purchases in a short duration of time, Repeat Purchases. But, that’s simply to see second order effects. If they are there, I take it as a bonus. If they are not there, I know there are other dilutive elements in between what I’m trying to do and the Secondary Success. Good targets/ideas for future impactful tests.
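Here is a minimal sketch of scoring one test on both criteria, using a pooled two-proportion z-test (one reasonable choice among several). The 20,000 visitors per variation and all the counts are hypothetical: the primary metric is bounces (the landing page’s job), and orders are tracked as the secondary, second-order signal.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two proportions (pooled standard error).

    Returns (rate_b - rate_a, p-value).
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value


# Hypothetical landing-page test with 20,000 visitors per variation.
visitors = 20_000
metrics = {
    # metric: (role, count in A, count in B)
    "bounces": ("primary", 12_400, 11_600),  # fewer bounces in B is the win
    "orders": ("secondary", 600, 618),
}
for name, (role, a, b) in metrics.items():
    diff, p = two_proportion_test(a, visitors, b, visitors)
    print(f"{role:9} {name:8} diff={diff:+.2%} p={p:.3f}")
```

In this made-up result the variation clearly wins on its primary job, while the difference in orders is not statistically distinguishable from zero. Per the approach above, that points to dilutive elements further down the path rather than a failed test.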

If all your experiments are tagged to deliver higher revenue/late-stage success, they’ll take too long to show signal, you will have to fix a lot more than just the landing page, and, worse, you’ll be judging a fish by its ability to climb a tree.

Never judge a fish by its ability to climb a tree!

6. (Advanced) Believing there is one right answer for everyone.

If you randomly hit a post I’ve written, it likely has this exhortation: Segment, Segment, SEGMENT!

What can I say. I’m a fan of segmentation. Because: All data in aggregate is crap.

We simply have too many different types of people, from different regions, with different preferences, with different biases, with different intent, at different stages of engagement with us, with different contexts, engaging with us.

How can there be one right answer for everyone?

Segmentation helps us tease out these elements and create variations of consumer experiences to deliver delight (which, as a side effect, delivers cash to us).

The same principle applies for A/B & MV testing.

If we test version A of the page and a very different version B of the page, in the end we might identify that version B is better by a statistically significant amount.

Great.

Knowing humanity, though, it turns out that for a segment of the users version A did really well, and they did not like version B at all.

It would be a total bonus to be able to determine who these people are and what it is about them that made them like A when most liked B.

Not being able to figure this out won’t doom your experimentation program. It might just limit how successful it is.

Hence, when the program is settled, I try to instrument the experiment and variations so that we can capture as much data about audience attributes as possible for later advanced analysis.
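Here is a minimal sketch of the kind of analysis this instrumentation enables: break the results out by an audience attribute captured when each visitor was assigned to a variation. The records, the new/returning segments, and the helper name are hypothetical. In practice each segment-level difference needs its own significance check, plus a guard against slicing the data until something “wins.”

```python
from collections import defaultdict

# Hypothetical per-visitor records: (segment, variation, converted 1/0).
records = (
    [("new", "A", 1)] * 30 + [("new", "A", 0)] * 970
    + [("new", "B", 1)] * 55 + [("new", "B", 0)] * 945
    + [("returning", "A", 1)] * 80 + [("returning", "A", 0)] * 920
    + [("returning", "B", 1)] * 60 + [("returning", "B", 0)] * 940
)


def rates_by_segment(rows):
    """Return {segment: {variation: conversion rate}} from (segment, variation, converted) rows."""
    counts = defaultdict(lambda: [0, 0])  # (segment, variation) -> [conversions, visitors]
    for segment, variation, converted in rows:
        counts[(segment, variation)][0] += converted
        counts[(segment, variation)][1] += 1
    table = defaultdict(dict)
    for (segment, variation), (conversions, visitors) in counts.items():
        table[segment][variation] = conversions / visitors
    return dict(table)


# Aggregate view: collapse every visitor into a single "all" segment.
overall = rates_by_segment([("all", v, c) for _, v, c in records])["all"]
print("overall:", {v: f"{r:.2%}" for v, r in sorted(overall.items())})
for segment, by_variation in rates_by_segment(records).items():
    winner = max(by_variation, key=by_variation.get)
    print(f"{segment}:", {v: f"{r:.2%}" for v, r in sorted(by_variation.items())}, "winner:", winner)
```

In this invented data, B wins in aggregate (5.75% vs. 5.50%), but returning visitors convert better on A, which is exactly the kind of cluster you would want to serve its own experience.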

The ultimate digital user experience holy grail is that every person gets their own version of your website (it is so cheap to deliver!). It is rare that a site delivers on this promise/hope.

As you run your experiments, it is precisely what I’m requesting you to do.

This one strategy will set your company apart. If you can ascertain which cluster of the audience each version of the experiment works with, it holds the potential to be a competitive advantage for you!

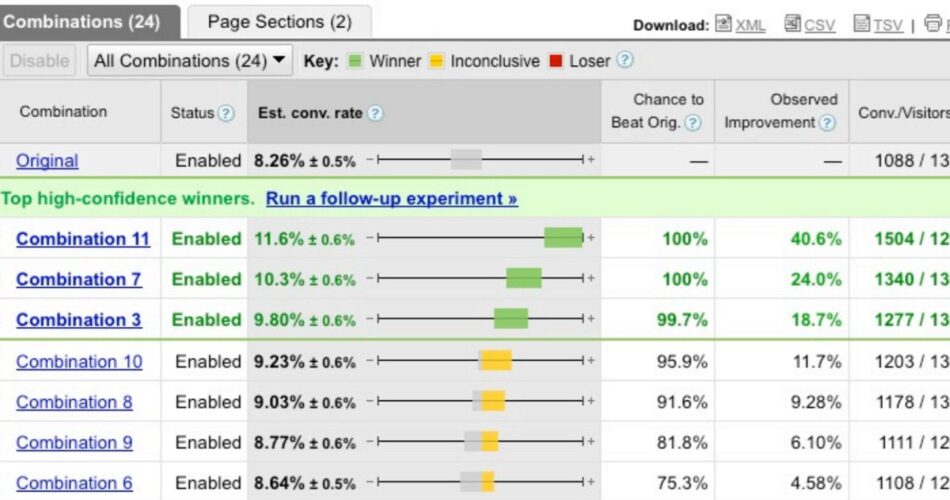

Bonus Tip: If you follow my advice above, then you’ll need an experience customization platform that allows you to deliver these unique digital experiences. And, you are in luck. Google Optimize allows you to create personalized experiences from experiments I mention above (and the results you see immediately below).

7. (Advanced) Optimizing yourself to a local maximum.

A crucial variation of #5 and #6.

An illustrative story…

When I first started A/B testing at a former employer, we did many of the things above, and the team did an impressive job improving the Primary Success Metric and at times the Secondary Success Metric. Hurray! Then, because I love the what but also the why, we implemented satisfaction surveys and were able to segment them by test variation.

The very first analysis identified that while we had improved Conversion Rate and Revenue via our test versions, the customer satisfaction actually plummeted!

Digging into the data made me realize we’d minimized (in some instances eliminated) the Support options. And, 40% of the people were coming to the (ecommerce!) site for tech support.

Ooops.

Many experimentation programs don’t survive because they end up optimizing for a local maximum. They are either oblivious to the global maximum (what is good for the entire company and not just our team) or there are cultural incentives to ignore the global maximum (in our case, likelihood to recommend our company – not just buy from us once).

Be very careful. Don’t be beholden only to your boss/team.

Think of the Senior Leader 5 (or 12) levels higher and consider what their success looks like.

To the extent possible, try to instrument your experiments to give you an additional signal (in a Secondary Success Criteria spirit) to help you discern the impact on the company’s global maximum.

In our case above, it was integrating surveys and clickstream data. You might have other excellent ideas (pass on primary keys into Salesforce!).
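One way to turn that additional signal into a decision rule is a guardrail check: the variation only ships if it is not credibly worse on the company-level metric by more than an agreed margin. Below is a minimal sketch of a one-sided non-inferiority check on survey scores, using a normal approximation; the 1 to 10 satisfaction scale, the 0.5-point margin, the helper name, and the data are all hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def guardrail_ok(control, variant, max_drop, alpha=0.05):
    """Pass only if we are confident the variant's mean is not lower than control's by more than max_drop.

    One-sided non-inferiority check using a normal approximation to the difference in means.
    """
    diff = mean(variant) - mean(control)
    se = sqrt(stdev(control) ** 2 / len(control) + stdev(variant) ** 2 / len(variant))
    lower_bound = diff - NormalDist().inv_cdf(1 - alpha) * se  # one-sided (1 - alpha) bound
    return lower_bound > -max_drop


# Hypothetical 1-10 satisfaction scores joined to test variation (400 respondents per group).
control = [8, 9, 7, 8, 9, 8, 7, 9] * 50
variant_ok = [8, 9, 7, 8, 9, 8, 7, 8] * 50   # satisfaction roughly unchanged
variant_bad = [6, 7, 5, 7, 6, 8, 5, 6] * 50  # satisfaction drops sharply

print("variant_ok passes guardrail: ", guardrail_ok(control, variant_ok, max_drop=0.5))
print("variant_bad passes guardrail:", guardrail_ok(control, variant_bad, max_drop=0.5))
```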

Global maximum, FTW. Always.

8. Building for scaled testing from day one.

Experimentation is like religion. You get it. It seems so obviously the right answer. You build for a lifetime commitment.

You start by buying the most expensive and robust tool humans have created. You bully your CMO into funding a 40-person org and a huge budget. You start building out global partnerships across the company and its complexity, and start working on scaled processes. And so much more.

Huuuuuuuuuuuuge mistake.

By the time you build out people, process, tools, across all the steps required to test at scale across all your business units (or even customer experiences), the stakes are so high (we’ve already invested 18 months, built out a 40-person organization, and spent $800k on a tool!!) that every test needs to impress God herself or it feels like a failure.

Even if your first five tests succeed, unless you are shaking the very foundations of the business instantly (after 18 months of build out of course) and company profit is skyrocketing causally via your tests… Chances are, the experimentation program (and you) will still feel like a failure to the organization.

And, what are the chances that you are going to make many of the above mistakes before, during and after you are building out your Global Scaled Massive Integrated Testing Program?

Very high, my dear, very high. You are only human.

Building for scale from the start, not having earned any credibility that experimentation can actually deliver impact, is silly and an entirely avoidable mistake.

Try not to make it.

From my first Web Analytics book, this formula works wonderfully for a successful experimentation program:

1. Start small. Start free (if you can). Prove value. Scale a bit.

2. Prove value. Scale a bit more.

3. Prove value.

4. Rinse. Repeat. Forever.

Robust and remarkable experimentation platforms are built on many points of victory along a journey filled with learnings from wins and losses.

9. (Advanced) Not realizing experimentation is not the answer.

Testing fanatics often see every problem as a nail that can be hammered with an experimentation hammer.

Only sign up to solve with experimentation what can actually be solved with experimentation.

A few years back I bumped into an odd case of a team filled with smart folks constructing an experiment to prove that SEO is worth investing in. There was a big presentation to go with the idea. Robust math was laid out.

I could not shake the feeling of WTH!

When you find yourself in a situation where you are using, or are being asked to use, experimentation to prove SEO’s value… Realize the problem is company culture. Don’t use experimentation to solve that problem. It is a lose – lose.

Not that long ago, I met a team that was working on some remarkable experiments for a large company. They were doing really cool work; their analysis was truly inspiring.

I was struck by the fact that at that time their company’s only success was losing market share every single month for the last five years, to a point where they were approaching irrelevance.

Could the company not use a team of 30 people to do something else that could contribute to turning the company around? I mean, they were just doing A/B testing, and even they realized that while it was mathematically inspiring and executionally cool, there was no way they were saving the business.

It is not lost on me that this recommendation goes against the very premise of this newsletter – to build a robust and remarkable experimentation program. But, the ability to see the big picture and demonstrate agility is a necessary skill to build for long-term success.

Bottom line.

I’ve used “or die” in only two of my slogans: Segment, or die. Experiment, or die.

I deeply, deeply believe in the power of A/B and Multivariate Testing. It is so worthy of every company’s love and affection. Still, over time, I’ve become a massive believer in the value of robust experimentation and testing to solve a multitude of complex business challenges. (Ideas A – F, in section one above.)

In a world filled with data but few solid answers, ideas A – F and site/app testing provide answers that can dramatically shift your business’ trajectory.

Unlock your imagination. Unlock a competitive advantage.

May the force be with you.

Special Note: All the images in this blog post are from an old presentation I love by Dan Siroker. Among his many accomplishments, he is the founder of the wonderful testing platform Optimizely.