SC25 Energy is turning into a serious headache for datacenter operators as they grapple with the right way to assist ever bigger deployments of GPU servers – a lot in order that the AI increase is now driving the adoption of a know-how as soon as thought too immature and failure-prone to benefit the chance.

We’re speaking, in fact, about co-packaged optical (CPO) switching.

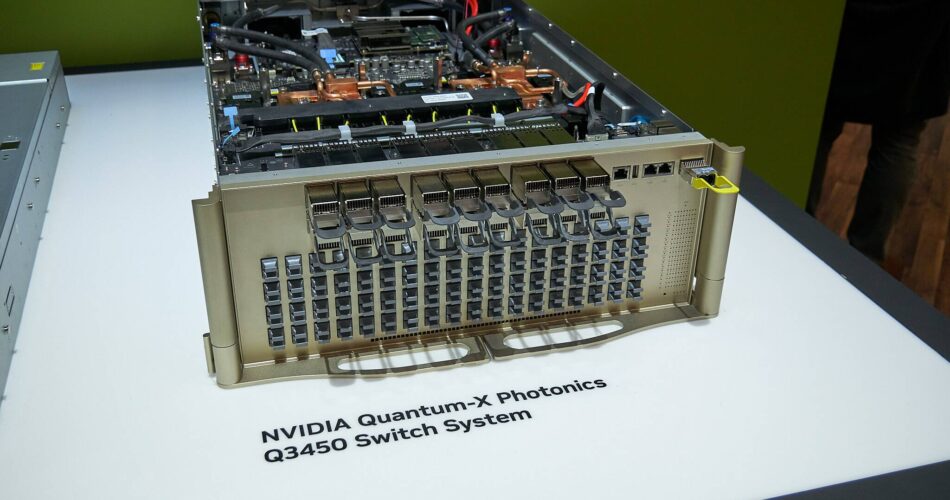

On the SC25 supercomputing convention in St. Louis this week, Nvidia revealed that GPU-bit barn operators Lambda and CoreWeave can be adopting its Quantum-X Photonics CPO switches alongside deployments on the Texas Superior Computing Heart (TACC).

Nvidia has some competitors: Broadcom confirmed off its personal Tomahawk 5- and 6-based CPO switches. However whereas CPO switching is poised to take off in 2026, getting up to now has been a journey – and it’s miles from over.

So what’s driving the CPO transition? Within the phrases of former Prime Gear host Jeremy Clarkson, “velocity and energy.”

AI networks require exceptionally quick port speeds, as much as 800 Gbps, with Nvidia already charting a course towards 1.6 Tbps ports with its next-gen ConnectX-9 NICs.

Sadly, at these speeds, direct connect copper cables can solely attain a meter or two and sometimes require costly retimers. Stitching collectively a couple of tens or lots of of 1000’s of GPUs means probably lots of of 1000’s of power-hungry pluggable transceivers.

Networking distributors like Broadcom have been toying with CPO tech for years now. Because the identify suggests, it entails transferring the optical elements historically present in pluggable transceivers into the equipment itself utilizing a sequence of photonic chiplets packaged alongside the swap ASICs. As a substitute of QSFP cages and pluggables, the fiber pairs are actually hooked up on to the swap’s entrance panel.

Whereas every transceiver does not devour that a lot energy – someplace between 9 and 15 watts relying on port velocity – that provides up fairly rapidly if you’re speaking concerning the varieties of huge non-blocking fat-tree networks utilized in AI backend networks.

A compute cluster with 128,000 GPUs may minimize the variety of pluggable transceivers from almost half 1,000,000 to round 128,000 just by transferring to CPO switches.

Nvidia estimates its Photonics switches are as a lot as 3.5x extra power environment friendly, whereas Broadcom’s knowledge suggests the tech may decrease optics energy consumption by 65 %.

What took so lengthy?

One of many largest boundaries to CPO adoption has been reliability and the blast radius once they fail.

In a standard swap, if an optical pluggable fails or degrades, you may lose a port, however you do not lose the entire swap. With CPO, if one of many photonic chiplets fails, you do not simply lose one port – you may lose 8, 16, 32, or extra.

This is likely one of the causes most CPO distributors, together with the 2 large ones — Broadcom and Nvidia — have opted for exterior laser modules.

Lasers are one of many extra failure-prone elements of an optical transceiver, so by retaining them in a bigger pluggable type issue, they don’t seem to be solely consumer serviceable, however may also compensate for failures by boosting the output of the others within the occasion of a failure.

However because it seems, many of those considerations look like unfounded. In reality, early testing by Broadcom and Meta reveals that the know-how not solely provides higher latency by eliminating the variety of electrical interfaces between the optics and swap ASIC, however can be considerably extra dependable.

Final month, Meta revealed that it had not solely deployed Broadcom’s 51.2 Tbps co-packaged optical switches, codenamed Bailly, in its datacenters, however had recorded a million cumulative gadget hours of flap-free operation at 400 Gbps equal port speeds.

In the event you’re not acquainted, hyperlink flaps happen when swap ports go up and down in fast succession, introducing community instability and disrupting the circulate of knowledge.

Nvidia, in the meantime, claims its photonic networking platform is as much as 10x extra resilient, permitting for functions, like coaching workloads, to run 5x longer with out interruptions.

The present state of CPO switching

As we talked about earlier, Broadcom and Nvidia are among the many first to embrace CPO for packet switching.

At GTC this spring, you could recall that Nvidia teased its first set of CPO switches in each InfiniBand and Ethernet flavors with its Spectrum-X and Quantum-X.

Nvidia’s Quantum-X Photonics platform is totally liquid-cooled and sports activities 144 ports of 800Gbps InfiniBand utilizing 200 Gbps serializer-deserializers, placing its complete bandwidth at 115.2 Tbps.

This is a more in-depth have a look at Nvidia’s 144 Tbps Quantum-X Photonics swap, which boasts 144 800 Gbps optical InfiniBand ports – Click on to enlarge

These are the switches that TACC, Lambda, and CoreWeave have introduced plans at SC25 this week to deploy throughout their compute infrastructure going ahead.

For many who would favor to stay with Ethernet, the choices are extra numerous. Nvidia will supply a number of variations of its Spectrum-X Photonics switches relying in your most well-liked configuration. For these wanting most radix, aka a number of ports, Nvidia will supply switches with both 512 or 2,048 200 Gbps interfaces.

In the meantime, these searching for most efficiency could have the choice of both 128 or 512 800 Gbps ports.

This is a more in-depth have a look at the entrance panel of Broadcom’s 102.4 Tbps Davisson co-packaged optical swap – Click on to enlarge

Nvidia’s Photonic Ethernet equipment will not arrive till subsequent yr and is already dealing with competitors from the likes of Broadcom. Micas Networks has begun delivery a 51.2 Tbps CPO swap primarily based on Broadcom’s older Tomahawk 5 ASICs and Bailly CPO tech.

Alongside the swap, Broadcom additionally confirmed off its newest technology Davisson CPO platform, that includes a 102.4 Tbps Tomahawk 6 swap ASIC that may get away in as much as 512 200 Gbps interfaces.

What comes subsequent?

To date, Nvidia has centered most of its optics consideration on CPO switches, preferring to stay with QSFP cages and pluggable transceivers on the NIC aspect of the equation – at the least by its newly announced ConnectX-9 household of SuperNICs.

Nevertheless, Broadcom and others want to convey co-packaged optics to the accelerators themselves earlier than lengthy. You might recall that again at Sizzling Chips 2024, Broadcom detailed a 6.4 Tbps optic engine aimed toward massive scale-up compute domains.

A number of different firms are additionally trying to convey optical I/O to accelerators, together with Celestial AI, Ayar Labs, and Lightmatter, to call a couple of.

At SC25, Ayar Labs confirmed what an XPU utilizing its CPO chiplets (the eight dies positioned on the ends of the package deal) may seem like. – Click on to enlarge

Each Ayar and Lightmatter confirmed off dwell demos of their newest CPO and optical interposer tech at SC25. Within the case of Ayar, the startup confirmed off reference design developed in collaboration with Alchip to combine eight of its TeraPHY chiplets right into a single package deal utilizing a mix of UCIe-S and UCIe-A interconnects, which can ultimately present as much as 200 Tbps of bidirectional bandwidth to chip to chip connectivity.

Lightmatter, in the meantime, is approaching the optical I/O from two instructions. The primary is a CPO chiplet that the corporate claims will ship as much as 32 Tbps of bandwidth utilizing 56 Gbps NRZ, or 64 Tbps utilizing 112 Gbps PAM4.

Right here Lightmatter demonstrates knowledge transferring throughout its M1000 silicon photonic interposers. – Click on to enlarge

Moreover, Lightmatter has developed a silicon photonic interposer known as the Passage M1000 that is designed to sew a number of chiplets collectively utilizing photonic interconnects for each chip-to-chip and package-to-package communications.

Ultimately, these applied sciences might remove the necessity for pluggable optics completely, and even open the door to extra environment friendly scale-up compute domains containing 1000’s of accelerators performing as one. ®

Source link